问题背景

WT 引擎会使用压缩算法将内存 page 压缩之后,再按照 4KB 对齐后写入磁盘。

可以使用 stats() 命令查看表的创建参数:

mongos> db.coll1.stats().wiredTiger.creationString access_pattern_hint=none,allocation_size=4KB,app_metadata=(formatVersion=1),assert=(commit_timestamp=none,durable_timestamp=none,read_timestamp=none),block_allocation=best,block_compressor=snappy,cache_resident=false,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=false,extractor=,format=btree,huffman_key=,huffman_value=,ignore_in_memory_cache_size=false,immutable=false,internal_item_max=0,internal_key_max=0,internal_key_truncate=true,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=false),lsm=(auto_throttle=true,bloom=true,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=false,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_custom=(prefix=,start_generation=0,suffix=),merge_max=15,merge_min=0),memory_page_image_max=0,memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=false,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u

其中本次要讨论的算法,涉及的参数有:

- internal_page_max=4KB:硬盘上 btree 的非叶子节点最大 4KB

- leaf_page_max=32KB: 硬盘上 btree 的叶子节点最大 32KB

- memory_page_max=10m: 内存中的 page 最大 10MB

为什么硬盘上的 page 大小会有 4KB 和 32KB 的限制?

个人认为:

1.如果限制太小,则不能发挥硬盘的 IO 能力(硬盘都是按块读取的,而且为了提升 IO 能力都会有一定的 readahead 预读机制)。另外也会导致 btree 的高度更高以及压缩效率降低。

2.如果限制太大,会导致读放大比较严重。

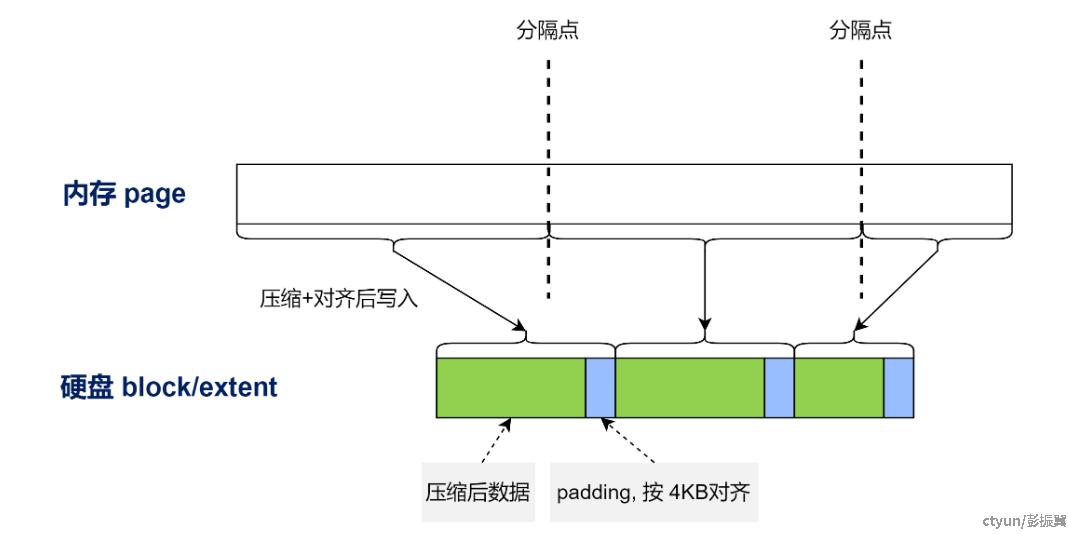

内存 page 可能比硬盘上的 page(也叫做 block/extent) 大很多,因此在 checkpoint/evict 流程将内存 page 写入硬盘时,需要先 split 成多个大小合适的 page,然后再压缩后写入。

理想的分隔结果是:分隔后的 page 在压缩之后,大小正好等于或者非常接近 internal_page_max 和 leaf_page_max。 这样 padding 所占的比例就会比较小,空间浪费较小。

但是数据的压缩比例可能是动态变化的,如何准确预估压缩比例并确定分割点,就是本次要讨论的问题。

算法实现

每个 btree 都维护了变量,来记录理想情况下,压缩前 page 的原始大小。也就是上文提到的理想分隔位置:

- maxintlpage_precomp:理想情况下,非叶子节点 page 的原始大小,初始值 4KB.

- maxleafpage_precomp:理想情况下,叶子节点 page 的原始大小,初始值 32KB * 4 = 128KB.

每次内存 page 会根据上述 precomp 值进行分隔。然后在压缩并写入硬盘后,根据实际压缩大小以及数据原始大小,再去跟新 precomp 值。

简单来说,就是按照压缩后的大小,计算到 internal_page_max 或 leaf_page_max 的距离是否超过 10%,来动态调整。

以 leaf page 为例,如果本次压缩后的大小为 28KB,距离 32KB 有 4KB 差距,大于 32KB * 10%. 此时 precomp 值需要向上调整。

反之,需要向下调整。

核心代码参考 __rec_compression_adjust :

/*

* __rec_compression_adjust --

* Adjust the pre-compression page size based on compression results.

*/

static inline void

__rec_compression_adjust(WT_SESSION_IMPL *session, uint32_t max, size_t compressed_size,

bool last_block, uint64_t *adjustp)

{

WT_BTREE *btree;

uint64_t adjust, current, new;

u_int ten_percent;

btree = S2BT(session);

ten_percent = max / 10; // 是否调整的参考

/*

* Changing the pre-compression size updates a shared memory location

* and it's not uncommon to be pushing out large numbers of pages from

* the same file. If compression creates a page larger than the target

* size, decrease the pre-compression size. If compression creates a

* page smaller than the target size, increase the pre-compression size.

* Once we get under the target size, try and stay there to minimize

* shared memory updates, but don't go over the target size, that means

* we're writing bad page sizes.

* Writing a shared memory location without a lock and letting it

* race, minor trickiness so we only read and write the value once.

*/

WT_ORDERED_READ(current, *adjustp);

WT_ASSERT(session, current >= max);

if (compressed_size > max) { // 压缩后大小大于目标值,向下调整

/*

* The compressed size is GT the page maximum. Check if the pre-compression size is larger

* than the maximum. If 10% of the page size larger than the maximum, decrease it by that

* amount. Else if it's not already at the page maximum, set it there.

*

* Note we're using 10% of the maximum page size as our test for when to adjust the

* pre-compression size as well as the amount by which we adjust it. Not updating the value

* when it's close to the page size keeps us from constantly updating a shared memory

* location, and 10% of the page size is an OK step value as well, so we use it in both

* cases.

*/

adjust = current - max;

if (adjust > ten_percent)

new = current - ten_percent;

else if (adjust != 0)

new = max;

else

return;

} else { // 压缩后大小小于目标值,向上调整

/*

* The compressed size is LTE the page maximum.

*

* Don't increase the pre-compressed size on the last block, the last block might be tiny.

*

* If the compressed size is less than the page maximum by 10%, increase the pre-compression

* size by 10% of the page, or up to the maximum in-memory image size.

*

* Note we're using 10% of the maximum page size... see above.

*/

if (last_block || compressed_size > max - ten_percent)

return;

adjust = current + ten_percent;

if (adjust < btree->maxmempage_image)

new = adjust;

else if (current != btree->maxmempage_image)

new = btree->maxmempage_image;

else

return;

}

// 调整完成,更新 precomp 值

*adjustp = new;

}