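When multiple threads write to a file in Python, some of the written data can go missing. As Example 1 shows, I switched back and forth between the two ways of writing the file and also tried adding the `buffering` argument, and data was still lost.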

Example 1:

    import threading
    import time
    from queue import Queue


    def func(num):
        time.sleep(1)
        thread_name = threading.current_thread().name
        data = str(num) + " current thread: " + thread_name + '\n'
        print(data)
        # Approach 1: open in append mode and let the context manager close the file.
        with open('text.txt', 'a', encoding='utf-8') as f:
            f.write(data)
        # Approach 2: open with an explicit buffer size and close the file manually.
        # f = open('text.txt', 'a', encoding='utf-8', buffering=100)
        # f.write(data)
        # f.close()


    if __name__ == '__main__':
        queue = Queue()
        for i in range(1000):
            queue.put(i)
        # Start 100 threads
        for _ in range(100):
            thread = threading.Thread(target=func, args=(queue.get(),))
            thread.start()

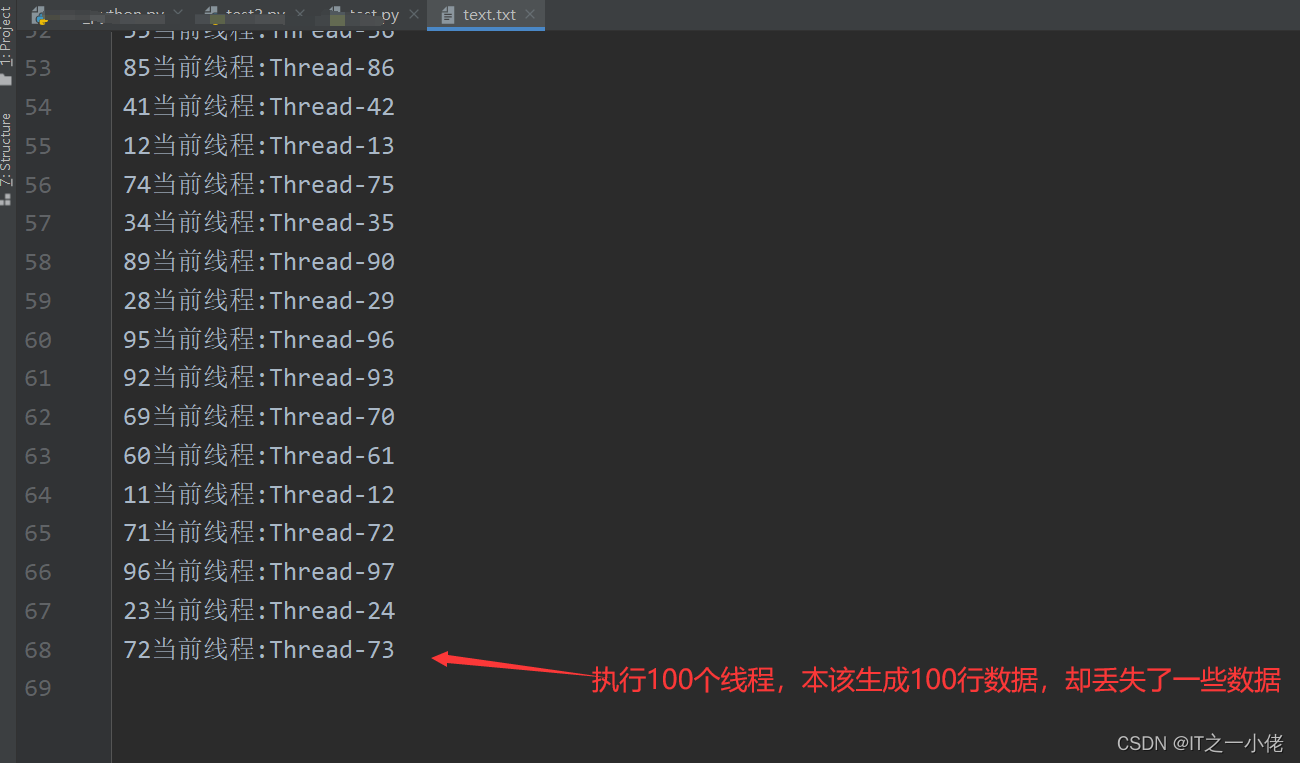

运行结果:
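Rather than eyeballing the output, the file can be scanned after the run to see which numbers never made it in. This is a minimal sketch, assuming each line starts with the number passed to `func`, as in Example 1. `find_missing` is a hypothetical helper and not part of the original code.

```python
import re


def find_missing(path, expected_count):
    """Return the sorted numbers in range(expected_count) that have no line in the file."""
    seen = set()
    with open(path, encoding='utf-8') as f:
        for line in f:
            # Each line is expected to begin with the number handed to the thread.
            match = re.match(r'\d+', line)
            if match:
                seen.add(int(match.group()))
    return sorted(set(range(expected_count)) - seen)
```

Once all threads have finished, `find_missing('text.txt', 100)` should return an empty list if nothing was lost. Because the file is opened in append mode, delete `text.txt` before each run so that lines from earlier runs do not hide a loss.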

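I searched through many posts on Baidu without finding an answer. In the end I added a lock, and to my surprise that fixed the data loss, as Example 2 shows. Presumably this works because only one thread at a time can open, write to, and close the file, so appends made through separate file handles can no longer interfere with each other.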

Example 2:

    import threading
    import time
    from queue import Queue
    from threading import Lock

    # A single lock shared by all threads guards access to the file.
    mutex = Lock()


    def func(num):
        time.sleep(1)
        thread_name = threading.current_thread().name
        data = str(num) + " current thread: " + thread_name + '\n'
        print(data)
        # Only one thread at a time may open, write to, and close the file.
        # `with mutex` releases the lock even if the write raises an exception.
        with mutex:
            with open('text.txt', 'a', encoding='utf-8') as f:
                f.write(data)
        # f = open('text.txt', 'a', encoding='utf-8', buffering=100)
        # f.write(data)
        # f.close()


    if __name__ == '__main__':
        queue = Queue()
        for i in range(10000):
            queue.put(i)
        # Start 10000 threads
        for _ in range(10000):
            thread = threading.Thread(target=func, args=(queue.get(),))
            thread.start()

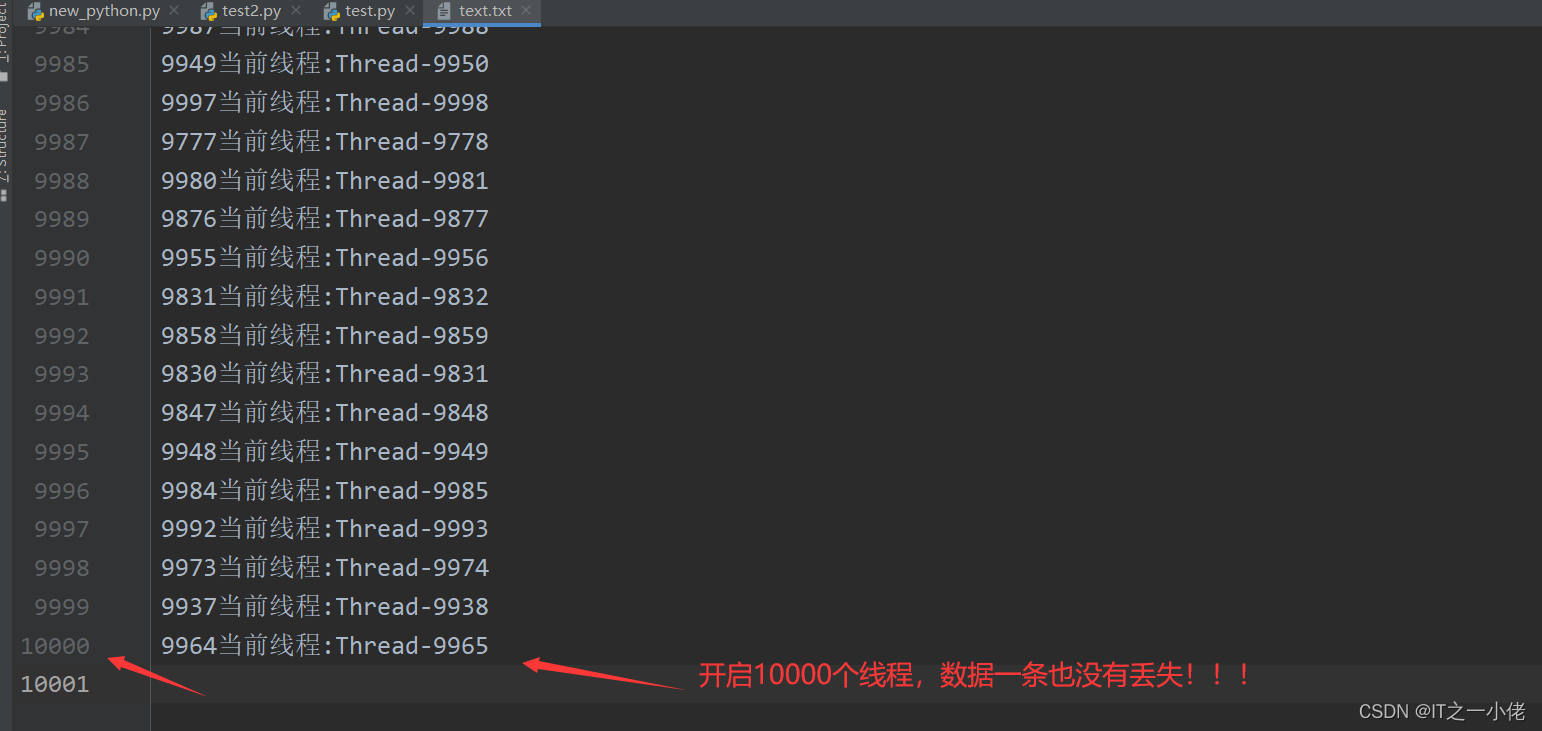

运行结果:
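Another option that avoids sharing the file between threads at all is to let the workers put their lines on a `Queue` and have one dedicated thread do all the writing. The sketch below is only an illustration under that assumption. The names `writer`, `worker`, `write_with_single_writer`, and `_STOP` are hypothetical and do not come from the original code.

```python
import threading
from queue import Queue

_STOP = object()  # sentinel that tells the writer thread to finish


def writer(path, lines):
    """Sole owner of the file: drain the queue and append each line."""
    with open(path, 'a', encoding='utf-8') as f:
        while True:
            item = lines.get()
            if item is _STOP:
                break
            f.write(item)


def worker(num, lines):
    """Build the line as in the examples above, but enqueue it instead of writing."""
    thread_name = threading.current_thread().name
    lines.put(str(num) + " current thread: " + thread_name + '\n')


def write_with_single_writer(path, count):
    lines = Queue()
    writer_thread = threading.Thread(target=writer, args=(path, lines))
    writer_thread.start()
    workers = [threading.Thread(target=worker, args=(i, lines)) for i in range(count)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    # Every worker has enqueued its line; now let the writer exit and flush.
    lines.put(_STOP)
    writer_thread.join()
```

Calling `write_with_single_writer('text.txt', 100)` would produce the same kind of file as the examples above. The file is opened only once and `Queue` handles the synchronization internally, so no explicit lock is needed.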

If any of you experts know a better approach, feel free to leave a comment...