Next, we plot the results of our AI example.

Pass the data to be visualized, loss_log, to plt.plot(loss_log), then call plt.show() to display the plot.
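The plotting step can be sketched as follows. This is a minimal illustration rather than the project's own code: the `loss_log` values are made up, the `plot_loss` helper and the `loss.png` file name are hypothetical, and the non-interactive `Agg` backend is chosen only so that the sketch also runs on a machine without a display.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; an assumption so the sketch runs headless
import matplotlib.pyplot as plt

# Made-up loss values for illustration only; the real loss_log is filled during training.
loss_log = [0.9, 0.6, 0.4, 0.3, 0.25]


def plot_loss(loss_log, path="loss.png"):
    """Plot one loss value per recorded step and save the figure to `path`."""
    plt.figure()
    plt.plot(loss_log)       # x axis defaults to the list index
    plt.xlabel("Epoch")
    plt.ylabel("Loss")
    plt.savefig(path)        # in an interactive session, plt.show() would display it instead
    plt.close()
    return path


saved_path = plot_loss(loss_log)
```

In an interactive session the backend line and `plt.savefig` can be dropped in favor of `plt.show()`, as described in the text.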

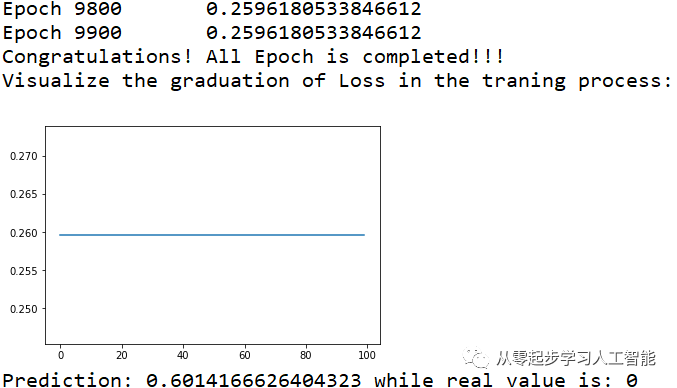

Running Neuron_Network_Entry.py from Create_AI_Framework_In5Classes(Day4) produces the following output:

    +1 V1 V2
    Hidden layer creation: 1 N[1][1] N[1][2] N[1][3] N[1][4]
    Output layer: Output
    The weight from 1 at layers[0] to 4 at layers[1] : 0.5595701564768045
    The weight from 1 at layers[0] to 5 at layers[1] : 0.5159597459698031
    The weight from 1 at layers[0] to 6 at layers[1] : -0.779980825223883
    The weight from 1 at layers[0] to 7 at layers[1] : 0.5698720988525292
    The weight from 2 at layers[0] to 4 at layers[1] : 0.5026611064720412
    The weight from 2 at layers[0] to 5 at layers[1] : 0.7782648372109493
    The weight from 2 at layers[0] to 6 at layers[1] : -0.3284764004608184
    The weight from 2 at layers[0] to 7 at layers[1] : -0.719846313530961
    The weight from 4 at layers[1] to 8 at layers[2] : 0.07758460145811097
    The weight from 5 at layers[1] to 8 at layers[2] : 0.29861850707703974
    The weight from 6 at layers[1] to 8 at layers[2] : 0.5566544292456734
    The weight from 7 at layers[1] to 8 at layers[2] : -0.11011479258876289
    Epoch 0 0.2596180533846612
    Epoch 100 0.2596180533846612
    Epoch 200 0.2596180533846612
    Epoch 300 0.2596180533846612
    Epoch 400 0.2596180533846612
    Epoch 500 0.2596180533846612
    Epoch 600 0.2596180533846612
    Epoch 700 0.2596180533846612
    Epoch 800 0.2596180533846612
    Epoch 900 0.2596180533846612
    Epoch 1000 0.2596180533846612
    Epoch 1100 0.2596180533846612
    Epoch 1200 0.2596180533846612
    Epoch 1300 0.2596180533846612
    Epoch 1400 0.2596180533846612
    Epoch 1500 0.2596180533846612
    Epoch 1600 0.2596180533846612
    Epoch 1700 0.2596180533846612
    Epoch 1800 0.2596180533846612
    Epoch 1900 0.2596180533846612
    Epoch 2000 0.2596180533846612
    Epoch 2100 0.2596180533846612
    Epoch 2200 0.2596180533846612
    Epoch 2300 0.2596180533846612
    Epoch 2400 0.2596180533846612
    Epoch 2500 0.2596180533846612
    Epoch 2600 0.2596180533846612
    Epoch 2700 0.2596180533846612
    Epoch 2800 0.2596180533846612
    Epoch 2900 0.2596180533846612
    Epoch 3000 0.2596180533846612
    Epoch 3100 0.2596180533846612
    Epoch 3200 0.2596180533846612
    Epoch 3300 0.2596180533846612
    Epoch 3400 0.2596180533846612
    Epoch 3500 0.2596180533846612
    Epoch 3600 0.2596180533846612
    Epoch 3700 0.2596180533846612
    Epoch 3800 0.2596180533846612
    Epoch 3900 0.2596180533846612
    Epoch 4000 0.2596180533846612
    Epoch 4100 0.2596180533846612
    Epoch 4200 0.2596180533846612
    Epoch 4300 0.2596180533846612
    Epoch 4400 0.2596180533846612
    Epoch 4500 0.2596180533846612
    Epoch 4600 0.2596180533846612
    Epoch 4700 0.2596180533846612
    Epoch 4800 0.2596180533846612
    Epoch 4900 0.2596180533846612
    Epoch 5000 0.2596180533846612
    Epoch 5100 0.2596180533846612
    Epoch 5200 0.2596180533846612
    Epoch 5300 0.2596180533846612
    Epoch 5400 0.2596180533846612
    Epoch 5500 0.2596180533846612
    Epoch 5600 0.2596180533846612
    Epoch 5700 0.2596180533846612
    Epoch 5800 0.2596180533846612
    Epoch 5900 0.2596180533846612
    Epoch 6000 0.2596180533846612
    Epoch 6100 0.2596180533846612
    Epoch 6200 0.2596180533846612
    Epoch 6300 0.2596180533846612
    Epoch 6400 0.2596180533846612
    Epoch 6500 0.2596180533846612
    Epoch 6600 0.2596180533846612
    Epoch 6700 0.2596180533846612
    Epoch 6800 0.2596180533846612
    Epoch 6900 0.2596180533846612
    Epoch 7000 0.2596180533846612
    Epoch 7100 0.2596180533846612
    Epoch 7200 0.2596180533846612
    Epoch 7300 0.2596180533846612
    Epoch 7400 0.2596180533846612
    Epoch 7500 0.2596180533846612
    Epoch 7600 0.2596180533846612
    Epoch 7700 0.2596180533846612
    Epoch 7800 0.2596180533846612
    Epoch 7900 0.2596180533846612
    Epoch 8000 0.2596180533846612
    Epoch 8100 0.2596180533846612
    Epoch 8200 0.2596180533846612
    Epoch 8300 0.2596180533846612
    Epoch 8400 0.2596180533846612
    Epoch 8500 0.2596180533846612
    Epoch 8600 0.2596180533846612
    Epoch 8700 0.2596180533846612
    Epoch 8800 0.2596180533846612
    Epoch 8900 0.2596180533846612
    Epoch 9000 0.2596180533846612
    Epoch 9100 0.2596180533846612
    Epoch 9200 0.2596180533846612
    Epoch 9300 0.2596180533846612
    Epoch 9400 0.2596180533846612
    Epoch 9500 0.2596180533846612
    Epoch 9600 0.2596180533846612
    Epoch 9700 0.2596180533846612
    Epoch 9800 0.2596180533846612
    Epoch 9900 0.2596180533846612
    Congratulations! All Epoch is completed!!!
    Visualize the graduation of Loss in the traning process:
    Prediction: 0.6014166626404323 while real value is: 0
    Prediction: 0.6106283371692053 while real value is: 1
    Prediction: 0.5844340698422799 while real value is: 1
    Prediction: 0.5936875249772561 while real value is: 0
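The printed loss can be cross-checked against the four predictions at the end of the output. The sketch below assumes the loss is the mean squared error over the four samples. That is an assumption, since the loss code is not shown in this section, and the helper name `mean_squared_error` is hypothetical. The prediction and target values are copied from the output above.

```python
# Predictions and real values copied from the program output above.
predictions = [
    0.6014166626404323,
    0.6106283371692053,
    0.5844340698422799,
    0.5936875249772561,
]
targets = [0, 1, 1, 0]


def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets.

    Assumption: this is how the printed loss is defined; the section does not say so.
    """
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


mse = mean_squared_error(predictions, targets)
print(mse)
```

Under that assumption the result comes out at about 0.2596, in line with the loss printed for every epoch. All four predictions also sit near 0.6 regardless of the real value, which fits an untrained network.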

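The output prints the loss for the epochs; note that the error is printed only once every 100 epochs. It then prints the line "Congratulations! All Epoch is completed!!!". The loss never changes during this run, so the loss plot is a flat line.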

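Neuron_Network_Entry.py calls BackPropagation.applyBackPragation, computes the loss from the result, and prints it once every 100 epochs, yet the loss never changes. Why? Because the Back Propagation algorithm implemented earlier in BackPropagation.py does not actually update our neural network, even though the code in BackPropagation.py does carry out the Back Propagation process. One very small detail in BackPropagation.py needs to be changed, and we will make that change in the next chapter. Once the code is fixed, the loss plot will change immediately: the curve may start very high and then gradually decrease. After this improvement the accuracy may exceed 95%, with the error within 5%.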
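The effect described above, a loss that stays constant when computed updates never reach the model, can be reproduced with a toy example. The sketch below is not the code from BackPropagation.py, and the actual detail to be fixed there is left to the next chapter. It uses a hypothetical one-weight model, a made-up `train` function, and a made-up `apply_update` flag purely to show the symptom.

```python
def train(apply_update, epochs=5, learning_rate=0.1):
    """Gradient descent on a one-weight model y = w * x with a single sample.

    Hypothetical illustration: when apply_update is False, the gradient and the
    new weight are still computed, but never written back to the model.
    """
    w, x, target = 0.0, 1.0, 1.0
    loss_log = []
    for _ in range(epochs):
        prediction = w * x
        loss_log.append((prediction - target) ** 2)  # squared error for this epoch
        gradient = 2 * (prediction - target) * x     # d(loss)/dw
        new_w = w - learning_rate * gradient         # the update is computed either way
        if apply_update:
            w = new_w                                # only here does the model change
    return loss_log


frozen_losses = train(apply_update=False)    # same loss every epoch
updated_losses = train(apply_update=True)    # loss shrinks epoch by epoch
print(frozen_losses)
print(updated_losses)
```

With `apply_update=False` every entry in the log is identical, which is the same symptom as the flat loss in the output above. With `apply_update=True` the loss starts high and falls steadily.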

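At this point, from the perspective of writing a framework, we have completed the most primitive first step of TensorFlow and PyTorch. Beyond reaching 95% accuracy, what would it take to reach 99.5% or more? Anyone who develops artificial intelligence will care deeply about this question and will spend a career working to reduce the loss. Loss is like a lifelong curse on every AI framework: all of them want to reduce the loss as much as possible in the shortest possible time. Using different learning rates, activation functions, and so on all serves that goal. For example, the loss obtained with Sigmoid differs from the loss obtained with ReLU, and the choice between them is made to bring the loss down as far and as fast as possible, so that predictions approach the real values. This is the lifelong goal of every framework, including TensorFlow and PyTorch: to train in the shortest time (at the millisecond level) a model that matches reality as closely as possible, ideally with no loss at all. It is also the lifelong curse of every AI application developer!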