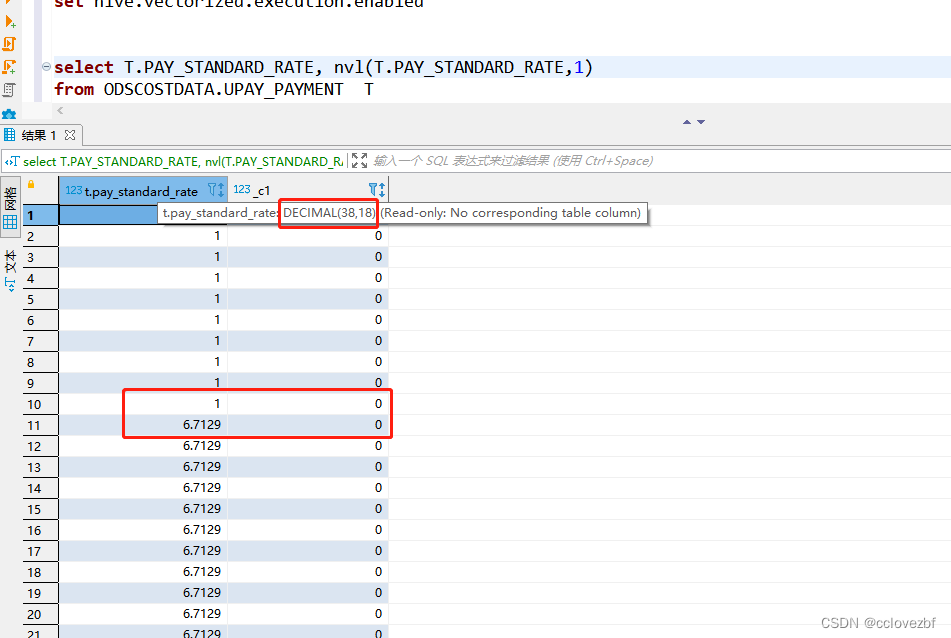

话不多说直接上图 hive版本为2.1.2-cdh6.3.2

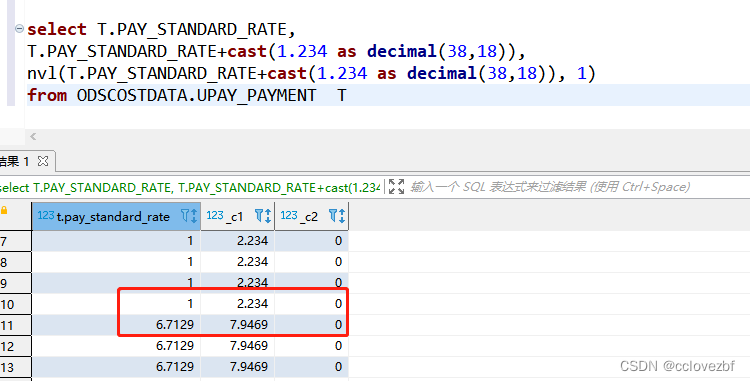

一个nvl (decimal,1)结果=0 怎么就出现bug了呢???

按道理不是两列值相等么,真是服了,怎么这么多bug,直接百度再说,结果是我的文章,,难道要我自己借鉴自己?

——————————————————————————————————————————

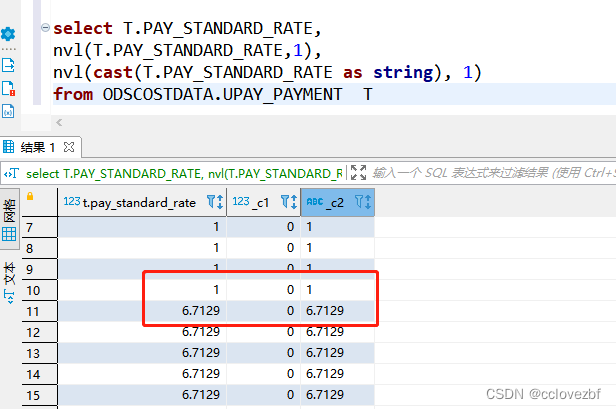

将decimal转string

继续研究,根据我上次研究的问题,decimal容易出现精度丢失等问题,我记得我当时转了string就好了。那么测试下,结果ok,但是decimal报错的问题还是没解决。

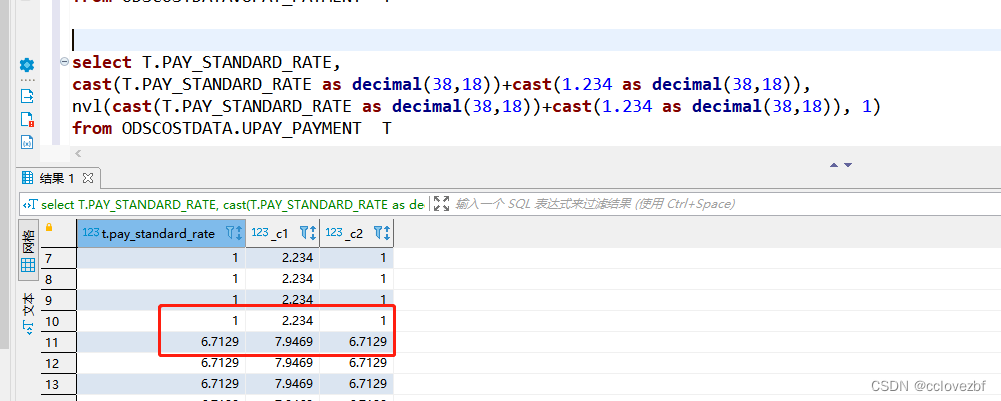

继续测试

这次测试发现了一个很神奇的东西。

nvl +了一个值 居然就正确了,但是和原先的值又不一样。所以这个值是一个错误的正确值。

这个和上面的基本一样只是,没有把t.pay_standard_rate转为decimal 因为本身就是decimal.

结果值完全不一样。。

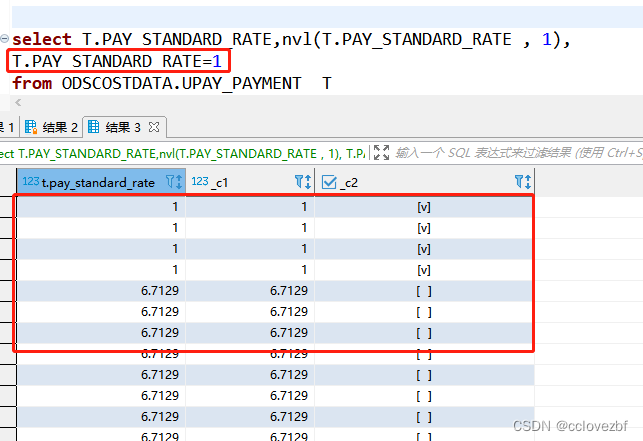

测试中最有意思的事来了

注意看 这个sql和之前的sql一模一样就是多了个判断这个值是否是=0.

然后就出现了正确的结果!!!!!!!!!!

这时候就有疑问了,我查另外一列和我查nvl(T.PAY_STANDARD_RATE , 1) 有关系吗?

我抓周树人和鲁迅有关系么? 你说瞎子坑,又不是说我李青打得不好?老子叫盖伦又不叫德玛.

大杀招

还是要依靠explain.既然你说周树人和鲁迅不一样,那我就把你们两个好好检查下

explain select

T.PAY_STANDARD_RATE,

nvl(T.PAY_STANDARD_RATE , 1),

T.PAY_STANDARD_RATE=1

from ODSCOSTDATA.UPAY_PAYMENT T

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 depends on stages: Stage-1

STAGE PLANS:

Stage: Stage-1

Spark

DagName: hive_20220525095616_c2aec1ed-f5ba-4625-a587-e323d8ac3e1c:3954

Vertices:

Map 1

Map Operator Tree:

TableScan

alias: t

Statistics: Num rows: 600 Data size: 67284 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: pay_standard_rate (type: decimal(38,18)), NVL(pay_standard_rate,1) (type: decimal(38,18)), (pay_standard_rate = 1) (type: boolean)

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 600 Data size: 67284 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 600 Data size: 67284 Basic stats: COMPLETE Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink

explain

select T.PAY_STANDARD_RATE,

nvl(T.PAY_STANDARD_RATE , 1)

from ODSCOSTDATA.UPAY_PAYMENT T

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 depends on stages: Stage-1

STAGE PLANS:

Stage: Stage-1

Spark

DagName: hive_20220525095658_abf11a23-3e89-47fa-ad1b-8e84b3988910:3955

Vertices:

Map 1

Map Operator Tree:

TableScan

alias: t

Statistics: Num rows: 600 Data size: 67284 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: pay_standard_rate (type: decimal(38,18)), NVL(pay_standard_rate,1) (type: decimal(38,18))

outputColumnNames: _col0, _col1

Statistics: Num rows: 600 Data size: 67284 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 600 Data size: 67284 Basic stats: COMPLETE Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Execution mode: vectorized

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink

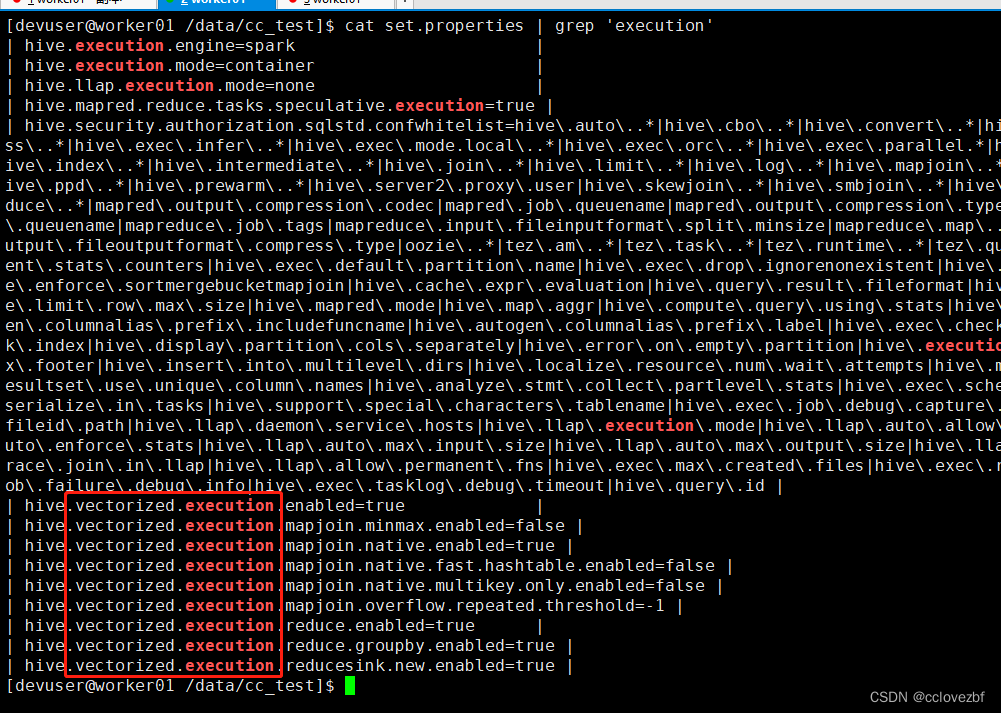

注意看下面的 多了一行 Execution mode: vectorized .

这个又是啥?因为我之前研究过这个 vectorized,所以我知道这是啥。。。。

给小伙伴一个办法。set 列出所有属性值

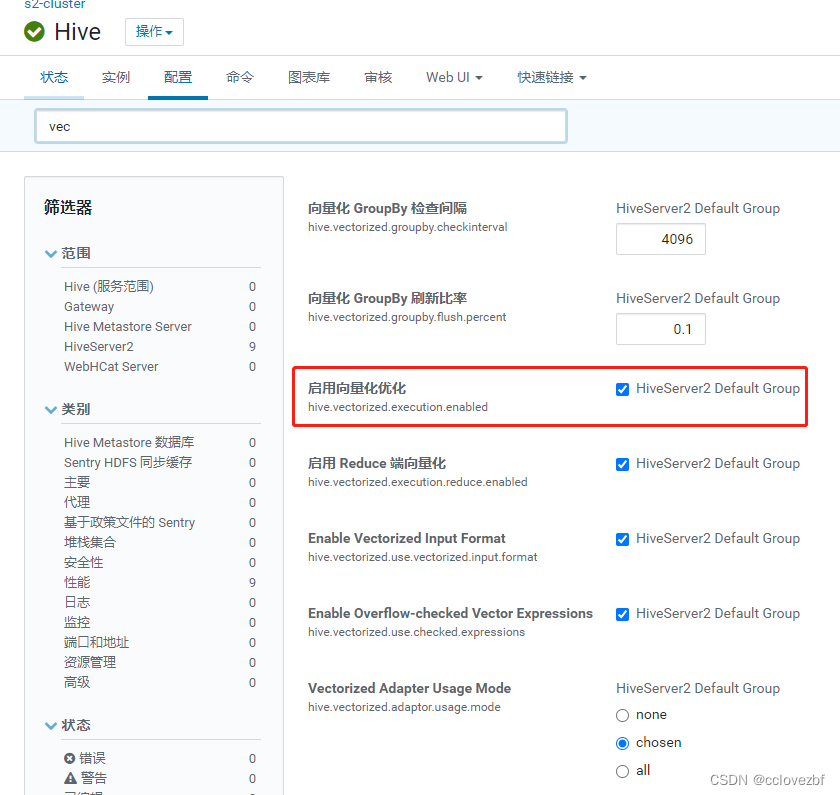

结论

经过测试 hive.vectorized.execution.enabled=true改为false不使用矢量化模式就可解决bug !!!

扩展学习

这个矢量化是什么?为什么他自然开启,开启了这么多bug,开启有什么好处?

Vectorized Query Execution - Apache Hive - Apache Software Foundation https://cwiki.apache.org/confluence/display/Hive/Vectorized+Query+Execution

https://cwiki.apache.org/confluence/display/Hive/Vectorized+Query+Execution

Vectorized query execution is a Hive feature that greatly reduces the CPU usage for typical query operations like scans, filters, aggregates, and joins. A standard query execution system processes one row at a time. This involves long code paths and significant metadata interpretation in the inner loop of execution. Vectorized query execution streamlines operations by processing a block of 1024 rows at a time. Within the block, each column is stored as a vector (an array of a primitive data type). Simple operations like arithmetic and comparisons are done by quickly iterating through the vectors in a tight loop, with no or very few function calls or conditional branches inside the loop. These loops compile in a streamlined way that uses relatively few instructions and finishes each instruction in fewer clock cycles, on average, by effectively using the processor pipeline and cache memory. A detailed design document is attached to the vectorized query execution JIRA, at [HIVE-4160] Vectorized Query Execution in Hive - ASF JIRA.

简单的来说这个减少cpu使用率,批量查询,加快查询速度

开启方式1. set hive.vectorized.execution.enabled = true

2.hive表格式必须是orc

Supported data types and operations

The following data types are currently supported for vectorized execution:

tinyintsmallintintbigintbooleanfloatdoubledecimaldatetimestamp(see Limitations below)string

Using other data types will cause your query to execute using standard, row-at-a-time execution.

使用其他类型会不执行vectorized模式,变成一次一条...

The following expressions can be vectorized when used on supported types:

- arithmetic: +, -, *, /, %

- AND, OR, NOT

- comparisons <, >, <=, >=, =, !=, BETWEEN, IN ( list-of-constants ) as filters

- Boolean-valued expressions (non-filters) using AND, OR, NOT, <, >, <=, >=, =, !=

- IS [NOT] NULL

- all math functions (SIN, LOG, etc.)

- string functions SUBSTR, CONCAT, TRIM, LTRIM, RTRIM, LOWER, UPPER, LENGTH

- type casts

- Hive user-defined functions, including standard and generic UDFs

- date functions (YEAR, MONTH, DAY, HOUR, MINUTE, SECOND, UNIX_TIMESTAMP)

- the IF conditional expression

注意啊 这上面说的都是 在where里 比较 计算 还是用 vectorized mode。

但是我之前是在select 语句里的select 用到了 column =1 这种就会退出矢量化模式

最后说下如果有相同的小伙伴遇到这个问题。

set hive.vectorized.execution.enabled = false

或者在select 里加一列 column=1 随便等于几都行,主要是退出矢量化模式。

也可以通过explain,看下当前sql是否在矢量化模式。