1. Installing Docker offline

- Download the Docker installation package from the official website, copy it to the offline machine, and install it:

```bash
# Extract the package and copy the binaries into /usr/bin/
tar -xvf docker-24.0.5.tgz
cp docker/* /usr/bin/
```

- Register a systemd service so that Docker starts at boot:

```bash
# Create the docker.service file
vim /etc/systemd/system/docker.service
```

Write the following content into docker.service:

```ini
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
# restart the docker process if it exits prematurely
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
```

Set the file permissions and start the service with the following commands:

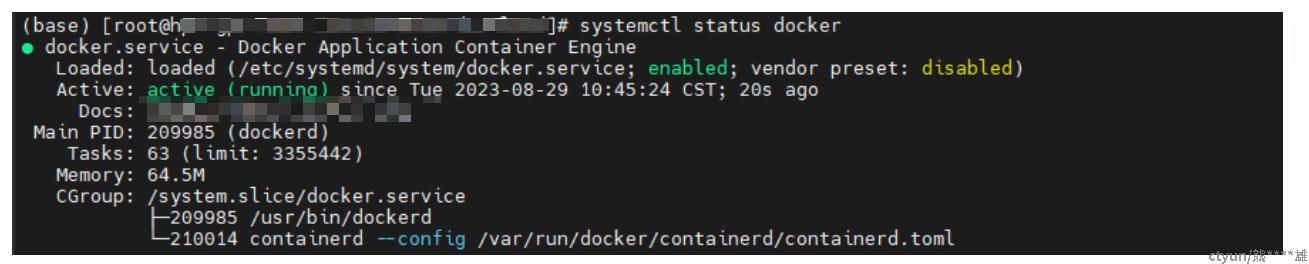

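```bash
chmod 644 /etc/systemd/system/docker.service   # set file permissions (systemd only needs to read unit files)
systemctl daemon-reload                        # reload the unit files
systemctl start docker                         # start Docker
systemctl enable docker.service                # start Docker at boot
```

- Verify the installation: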

```bash
systemctl status docker   # check the Docker status
docker -v                 # check the Docker version
```
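The manual steps above can be collected into one script for repeated offline installs. This is a minimal sketch, not an official installer. The function names install_docker_binaries and write_docker_unit, and the idea of passing the archive, target directory and unit path as arguments, are assumptions made here so the script can be tried in a scratch directory first. The unit file is written with mode 644 because systemd only reads unit files and logs a warning when one is marked executable.

```bash
#!/usr/bin/env bash
# Sketch: non-interactive version of the offline Docker install steps above.
# Hypothetical helpers; the defaults match the paths used in this article.

# Extract the static Docker bundle and copy its binaries into a target directory.
install_docker_binaries() {
  local tgz=${1:-docker-24.0.5.tgz} bindir=${2:-/usr/bin} work rc
  work=$(mktemp -d) || return 1
  # The static bundle unpacks into a docker/ folder (docker, dockerd, containerd, runc, ...)
  tar -xzf "$tgz" -C "$work" && cp "$work"/docker/* "$bindir"/
  rc=$?
  rm -rf "$work"
  return "$rc"
}

# Write the docker.service unit (same settings as above, comments removed).
write_docker_unit() {
  local unit=${1:-/etc/systemd/system/docker.service}
  cat > "$unit" <<'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
  chmod 644 "$unit"   # readable is enough; systemd does not execute unit files
}

# Usage on the offline machine, as root:
#   install_docker_binaries docker-24.0.5.tgz /usr/bin
#   write_docker_unit /etc/systemd/system/docker.service
#   systemctl daemon-reload && systemctl enable --now docker
```

The quoted heredoc delimiter ('EOF') keeps $MAINPID literal in the written file, so systemd rather than the shell expands it.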

2. Installing the NVIDIA Container components offline

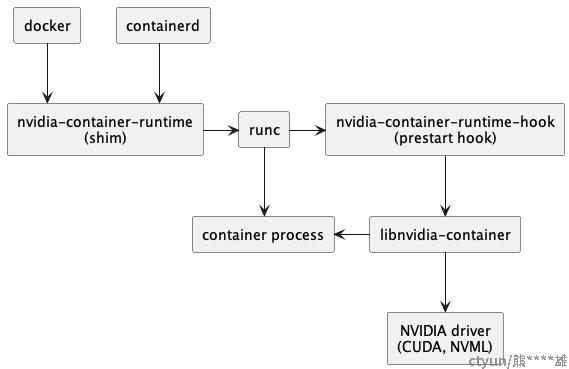

要在容器内调用GPU,需要安装Nvidia-container的相关组件,依赖关系如下(来源Nvidia官网):
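The dependency relationships in the figure can be written down as "dependency dependent" pairs and ordered with tsort from GNU coreutils. This is only an illustration: rpm -ivh *.rpm already works out the order itself when all four files are passed together. The pairs below follow NVIDIA's documented package dependencies rather than being read from the RPM headers; rpm -qpR <package>.rpm shows the requirements actually recorded in a file.

```bash
#!/usr/bin/env bash
# Sketch: each input line is "dependency dependent"; tsort prints an order in which
# every package comes after the packages it depends on.
nvidia_install_order() {
  tsort <<'EOF'
libnvidia-container1 libnvidia-container-tools
libnvidia-container-tools nvidia-container-toolkit
nvidia-container-toolkit-base nvidia-container-toolkit
EOF
}

nvidia_install_order
```

tsort may print any order that respects the pairs; libnvidia-container1 always precedes libnvidia-container-tools, and nvidia-container-toolkit always comes last.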

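- Download the following installation files from the official website:

```text
libnvidia-container1-1.12.1-1.x86_64.rpm
libnvidia-container-tools-1.12.1-1.x86_64.rpm
nvidia-container-toolkit-base-1.12.1-1.x86_64.rpm
nvidia-container-toolkit-1.12.1-1.x86_64.rpm
```

- Put these files in one folder, transfer the folder to the offline machine, and install them: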

```console
(base) [root@localhost]# ll -h
total 4.8M
-rw-r--r-- 1 root root 1011K Aug 30 10:50 libnvidia-container1-1.12.1-1.x86_64.rpm
-rw-r--r-- 1 root root   55K Aug 30 10:50 libnvidia-container-tools-1.12.1-1.x86_64.rpm
-rw-r--r-- 1 root root  801K Aug 30 10:50 nvidia-container-toolkit-1.12.1-1.x86_64.rpm
-rw-r--r-- 1 root root  2.9M Aug 30 10:53 nvidia-container-toolkit-base-1.12.1-1.x86_64.rpm
(base) [root@localhost]# rpm -ivh *.rpm
warning: libnvidia-container1-1.12.1-1.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID f796ecb0: NOKEY
Verifying...                          ################################# [100%]
Preparing...                          ################################# [100%]
Updating / installing...
   1:nvidia-container-toolkit-base-1.1################################# [ 25%]
   2:libnvidia-container1-1.12.1-1    ################################# [ 50%]
   3:libnvidia-container-tools-1.12.1-################################# [ 75%]
   4:nvidia-container-toolkit-1.12.1-1################################# [100%]
```

- Restart Docker:

```bash
sudo systemctl restart docker
```
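Because the offline machine cannot fetch a missing dependency, it can help to confirm that all four packages made it across before calling rpm. A minimal sketch, assuming the files keep the 1.12.1-1 x86_64 names listed above; check_rpms is a hypothetical helper and /path/to/rpms a placeholder. After installation, nvidia-container-cli info (from libnvidia-container-tools) is one way to check that the driver and GPUs are visible to the container stack.

```bash
#!/usr/bin/env bash
# Sketch: verify that every NVIDIA Container package is in one folder before installing.
NV_VERSION=1.12.1-1
NV_PKGS="libnvidia-container1 libnvidia-container-tools nvidia-container-toolkit-base nvidia-container-toolkit"

check_rpms() {
  local dir=${1:-.} missing=0 p f
  for p in $NV_PKGS; do
    f="$dir/$p-$NV_VERSION.x86_64.rpm"
    if [ ! -f "$f" ]; then
      echo "missing: $f" >&2
      missing=1
    fi
  done
  return "$missing"   # 0 only if all four files are present
}

# Usage on the offline machine, as root:
#   check_rpms /path/to/rpms && rpm -ivh /path/to/rpms/*.rpm && systemctl restart docker
#   nvidia-container-cli info      # optional: confirm the GPUs are visible
```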

3. Loading the container image offline for ChatGLM2-6B fine-tuning and inference

-

从

.tar文件离线加载模型镜像-

在联网机器上制作好模型镜像后,用docker save命令将镜像打包成

.tar文件 -

用scp命令传到离线机器上

-

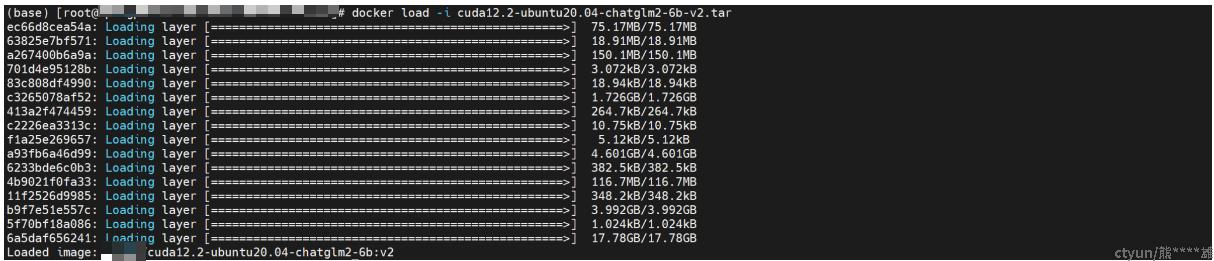

在离线机器上执行以下命令从

.tar文件导入镜像:docker load -i cuda12.2-ubuntu20.04-chatglm2-6b-v2.tar

- 查看导入的镜像:

docker images

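- Start a container from the image

On a machine with GPUs, the --gpus flag specifies which GPUs the container can use; here it is set to --gpus all. The --shm-size flag sets the container's shared memory to 8G (the default is 64MB, which is not enough for running the model later and causes an error):

```bash
docker run -it -u root --name cuda12.2-ubuntu20.04-chatglm2-6b-v2 --gpus all --shm-size 8G [image_name]:[tag] /bin/bash
```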

- P-Tuning v2 fine-tuning

Go to the /home/user-x/ChatGLM2-6B/ptuning directory and, in the training script train.sh, set --model_name_or_path to ../pretrained_model/chatglm2-6b so that the model weights are loaded from local files. Adjust NUM_GPUS, --per_device_train_batch_size and --quantization_bit as needed; if --quantization_bit is omitted, training runs in fp16. Also append 2>&1 | tee so that the terminal output is saved to a file. The modified script:

```bash
PRE_SEQ_LEN=128
LR=2e-2
NUM_GPUS=8
OUTPUT_DIR=output/adgen-chatglm2-6b-pt-$PRE_SEQ_LEN-$LR

mkdir -p ${OUTPUT_DIR}

torchrun --standalone --nnodes=1 --nproc-per-node=$NUM_GPUS main.py \
    --do_train \
    --do_eval \
    --train_file AdvertiseGen/train.json \
    --validation_file AdvertiseGen/dev.json \
    --preprocessing_num_workers 10 \
    --prompt_column content \
    --response_column summary \
    --overwrite_cache \
    --model_name_or_path ../pretrained_model/chatglm2-6b \
    --output_dir $OUTPUT_DIR \
    --overwrite_output_dir \
    --max_source_length 64 \
    --max_target_length 128 \
    --per_device_train_batch_size 1 \
    --per_device_eval_batch_size 1 \
    --gradient_accumulation_steps 16 \
    --predict_with_generate \
    --max_steps 3000 \
    --logging_steps 10 \
    --save_steps 1000 \
    --learning_rate $LR \
    --pre_seq_len $PRE_SEQ_LEN 2>&1 | tee ${OUTPUT_DIR}/output.log
# --quantization_bit 4   (add this option before "2>&1" above to train with 4-bit quantization)
```

Then run the training script to start training:

```bash
bash train.sh
```

- Full-parameter finetune

Go to the /home/user-x/ChatGLM2-6B/ptuning directory and, in the training script ds_train_finetune.sh, set --model_name_or_path to ../pretrained_model/chatglm2-6b so that the model weights are loaded from local files. The modified script:

```bash
LR=1e-4

MASTER_PORT=$(shuf -n 1 -i 10000-65535)

deepspeed --num_gpus=8 --master_port $MASTER_PORT main.py \
    --deepspeed deepspeed.json \
    --do_train \
    --train_file AdvertiseGen/train.json \
    --test_file AdvertiseGen/dev.json \
    --prompt_column content \
    --response_column summary \
    --overwrite_cache \
    --model_name_or_path ../pretrained_model/chatglm2-6b \
    --output_dir ./output/adgen-chatglm2-6b-ft-$LR \
    --overwrite_output_dir \
    --max_source_length 64 \
    --max_target_length 64 \
    --per_device_train_batch_size 2 \
    --per_device_eval_batch_size 1 \
    --gradient_accumulation_steps 1 \
    --predict_with_generate \
    --max_steps 5000 \
    --logging_steps 10 \
    --save_steps 1000 \
    --learning_rate $LR \
    --fp16
```

Run ds_train_finetune.sh to start full-parameter finetuning:

```bash
bash ds_train_finetune.sh
```
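The save, copy, load and start steps at the beginning of this section can be scripted. The sketch below only prints the commands unless DRY_RUN=0 is set, so they can be reviewed first. The helper names ship_image and load_and_run, the image name my-image:tag and the destination user@offline-host:/data are hypothetical placeholders; the docker and scp options are the ones used above.

```bash
#!/usr/bin/env bash
# Sketch: move an image to an offline machine and start a GPU container from it.
# Prints the commands by default; set DRY_RUN=0 to actually run them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# On the machine with internet access: package the image and copy it over.
ship_image() {
  local image=$1 tar=$2 dest=$3   # dest: hypothetical user@host:/dir
  run docker save -o "$tar" "$image"
  run scp "$tar" "$dest"
}

# On the offline machine: import the image and start a container with all GPUs
# and 8G of shared memory (the 64MB default is too small for the model).
load_and_run() {
  local tar=$1 image=$2 name=$3
  run docker load -i "$tar"
  run docker run -it -u root --name "$name" --gpus all --shm-size 8G "$image" /bin/bash
}

# Example (prints the commands only):
#   ship_image my-image:tag cuda12.2-ubuntu20.04-chatglm2-6b-v2.tar user@offline-host:/data
```

To expose only some GPUs instead of all of them, Docker also accepts a device list such as --gpus '"device=0,1"'.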
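When changing NUM_GPUS, --per_device_train_batch_size or --gradient_accumulation_steps in the two training scripts above, it helps to keep the effective batch size in view: samples per optimizer step = per-device batch size × gradient accumulation steps × number of GPUs. A small worked check with the values from the scripts, assuming plain data parallelism across all GPUs and a deepspeed.json that takes its batch settings from these arguments; effective_batch is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: effective batch size = per-device batch x gradient accumulation x GPUs.
effective_batch() {
  echo $(( $1 * $2 * $3 ))
}

# P-Tuning v2 (train.sh): batch 1, accumulation 16, 8 GPUs, 3000 steps
pt=$(effective_batch 1 16 8)
echo "p-tuning: $pt samples/step, $(( pt * 3000 )) samples over 3000 steps"

# Full finetune (ds_train_finetune.sh): batch 2, accumulation 1, 8 GPUs, 5000 steps
ft=$(effective_batch 2 1 8)
echo "finetune: $ft samples/step, $(( ft * 5000 )) samples over 5000 steps"
```

With these values it prints 128 samples per step (384000 over 3000 steps) for P-Tuning v2 and 16 samples per step (80000 over 5000 steps) for the full finetune. Halving NUM_GPUS while doubling --gradient_accumulation_steps keeps the effective batch size unchanged.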
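ds_train_finetune.sh picks MASTER_PORT at random with shuf, which can occasionally land on a port that is already in use. A possible alternative, sketched here under stated assumptions: draw from the same range but skip ports where something is already listening on localhost. It relies on bash's /dev/tcp redirection, so it should run under bash, and a port that is free at check time can still be taken before deepspeed binds it. pick_master_port is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: choose a random master port in [lo, hi] that nothing on localhost is listening on.
pick_master_port() {
  local lo=${1:-10000} hi=${2:-65535} port i
  for i in $(seq 1 50); do
    port=$(shuf -n 1 -i "$lo-$hi")
    # In bash, opening /dev/tcp/HOST/PORT succeeds only if a listener accepts the connection.
    if ! (exec 3<>"/dev/tcp/127.0.0.1/$port") 2>/dev/null; then
      echo "$port"
      return 0
    fi
  done
  return 1   # gave up after 50 ports that were all busy
}

# In ds_train_finetune.sh this could replace the shuf line:
#   MASTER_PORT=$(pick_master_port 10000 65535)
```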

4. Possible problems and solutions

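- An "exits with return code = -7" error is caused by insufficient shared memory in the container (64MB by default). Set a larger shared memory size with the --shm-size flag when starting the container with docker run.
- The packaged large-model image is big, so the machine may run out of storage space. In that case, check whether the machine still has unused disk space, as follows: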

- Check disk space with the df -h command:

```console
(base) [root@localhost]# df -h
Filesystem                  Size  Used Avail Use% Mounted on
devtmpfs                    378G     0  378G   0% /dev
tmpfs                       378G     0  378G   0% /dev/shm
tmpfs                       378G   27M  378G   1% /run
tmpfs                       378G     0  378G   0% /sys/fs/cgroup
/dev/mapper/system-lv_root   60G   24G   37G  39% /
/dev/sda3                   1.9G  166M  1.7G  10% /boot
/dev/sda2                   975M  7.3M  968M   1% /boot/efi
tmpfs                        76G     0   76G   0% /run/user/0
# The /dev/mapper/system-lv_root partition is only 60G
```

- Check the partition layout:

```console
(base) [root@localhost ~]# lsblk
NAME               MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda                  8:0    0 446.6G  0 disk
├─sda1               8:1    0   122M  0 part
├─sda2               8:2    0 976.6M  0 part /boot/efi
├─sda3               8:3    0   1.9G  0 part /boot
└─sda4               8:4    0 443.7G  0 part
  ├─system-lv_root 253:0    0    60G  0 lvm  /
  └─system-lv_swap 253:1    0    16G  0 lvm
nvme1n1            259:0    0   2.9T  0 disk
nvme0n1            259:1    0   2.9T  0 disk
# sda4 has 443.7G in total, but only 60G + 16G are in use
```

- Check whether there is free space in the volume group:

```console
(base) [root@localhost ~]# vgdisplay
  --- Volume group ---
  VG Name               system
  System ID
  Format                lvm2
  Metadata Areas        1
  Metadata Sequence No  3
  VG Access             read/write
  VG Status             resizable
  MAX LV                0
  Cur LV                2
  Open LV               1
  Max PV                0
  Cur PV                1
  Act PV                1
  VG Size               <443.64 GiB
  PE Size               4.00 MiB
  Total PE              113571
  Alloc PE / Size       19456 / 76.00 GiB
  Free  PE / Size       94115 / <367.64 GiB
  VG UUID               L9GoH5-bBN6-aNE3-ymz2-uINq-fseh-Ufa1KA
# Free PE / Size shows that 367.64G is still available
```

- Extend the volume:

```console
# Add 200G to /dev/mapper/system-lv_root
(base) [root@localhost ~]# lvextend -L +200G /dev/mapper/system-lv_root
  Size of logical volume system/lv_root changed from 60.00 GiB (15360 extents) to 260.00 GiB (66560 extents).
  Logical volume system/lv_root successfully resized.
# Grow the xfs file system
(base) [root@localhost ~]# xfs_growfs /dev/mapper/system-lv_root
meta-data=/dev/mapper/system-lv_root isize=512    agcount=16, agsize=983040 blks
         =                       sectsz=4096  attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=1, rmapbt=0
         =                       reflink=1
data     =                       bsize=4096   blocks=15728640, imaxpct=25
         =                       sunit=64     swidth=64 blks
naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
log      =internal log           bsize=4096   blocks=7680, version=2
         =                       sectsz=4096  sunit=1 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
data blocks changed from 15728640 to 68157440
```

- Check disk space after the extension:

```console
# /dev/mapper/system-lv_root has been extended to 260G
(base) [root@localhost ~]# df -h
Filesystem                  Size  Used Avail Use% Mounted on
devtmpfs                    378G     0  378G   0% /dev
tmpfs                       378G     0  378G   0% /dev/shm
tmpfs                       378G   27M  378G   1% /run
tmpfs                       378G     0  378G   0% /sys/fs/cgroup
/dev/mapper/system-lv_root  260G   25G  236G  10% /
/dev/sda3                   1.9G  166M  1.7G  10% /boot
/dev/sda2                   975M  7.3M  968M   1% /boot/efi
tmpfs                        76G     0   76G   0% /run/user/0
```
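For the first problem in this section (the "exits with return code = -7" error), it is worth confirming inside the running container that the --shm-size setting took effect. A minimal sketch, assuming GNU coreutils df (for --output=size); shm_kib and shm_size_ok are hypothetical helpers, and the 8G threshold simply mirrors the value used in the docker run command earlier.

```bash
#!/usr/bin/env bash
# Sketch: check that /dev/shm inside the container is at least a given size in GiB.
shm_kib() {
  # Size of the filesystem mounted at the given path in KiB (GNU df), header line dropped.
  df -k --output=size "${1:-/dev/shm}" | tail -n 1 | tr -d ' '
}

shm_size_ok() {
  local kib=$1 min_gib=${2:-8}
  [ "$kib" -ge $(( min_gib * 1024 * 1024 )) ]
}

# Usage inside the container:
#   shm_size_ok "$(shm_kib)" 8 || echo "shared memory too small; restart with --shm-size 8G" >&2
```

A container started without --shm-size reports 65536 KiB (64MB) here, which fails the check.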
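Before running lvextend, the free space in the volume group can be computed from the vgdisplay output instead of being read by eye: free space = Free PE count × PE size. A sketch, assuming vgdisplay's default text layout and a PE size reported in MiB, as in the output above; vg_free_gib and can_extend_by are hypothetical helpers. For scripting, vgs --noheadings --units g -o vg_free is an LVM-native alternative.

```bash
#!/usr/bin/env bash
# Sketch: compute free space in GiB from vgdisplay text on stdin (Free PE x PE size).
vg_free_gib() {
  awk '
    /^[ \t]*PE Size/          { pe_mib = $3 }    # e.g. "PE Size  4.00 MiB"
    /^[ \t]*Free +PE \/ Size/ { free_pe = $5 }   # e.g. "Free  PE / Size  94115 / <367.64 GiB"
    END { printf "%.2f\n", free_pe * pe_mib / 1024 }
  '
}

# Succeed only if the volume group described on stdin has at least the requested GiB free.
can_extend_by() {
  local want_gib=$1 free
  free=$(vg_free_gib)
  awk -v f="$free" -v w="$want_gib" 'BEGIN { exit !(f >= w) }'
}

# Usage (as root), guarding the extension shown above:
#   vgdisplay system | can_extend_by 200 \
#     && lvextend -L +200G /dev/mapper/system-lv_root \
#     && xfs_growfs /dev/mapper/system-lv_root
```

For the vgdisplay output shown above, vg_free_gib prints 367.64 (94115 × 4 MiB / 1024), matching the Free PE / Size line.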
