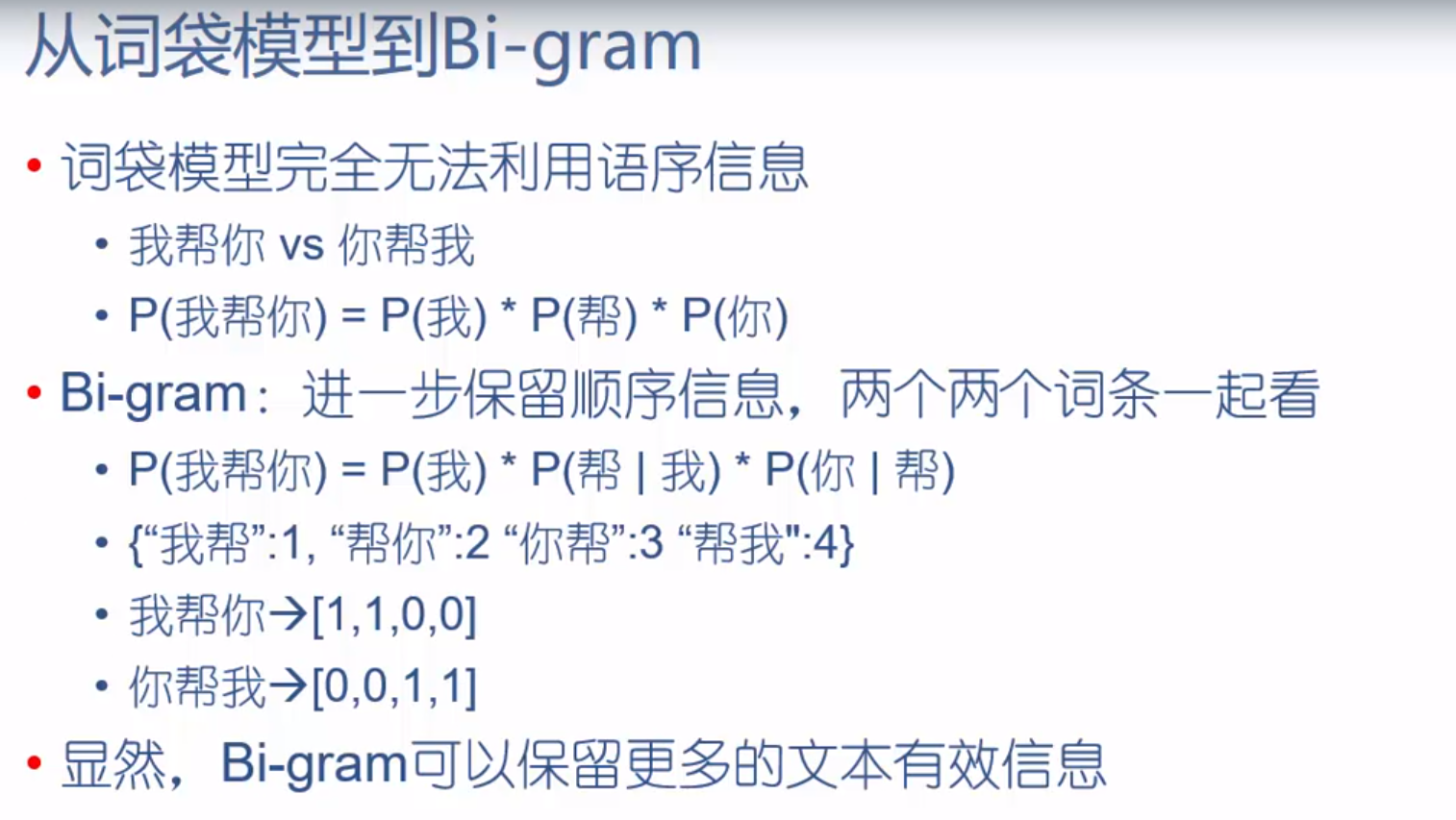

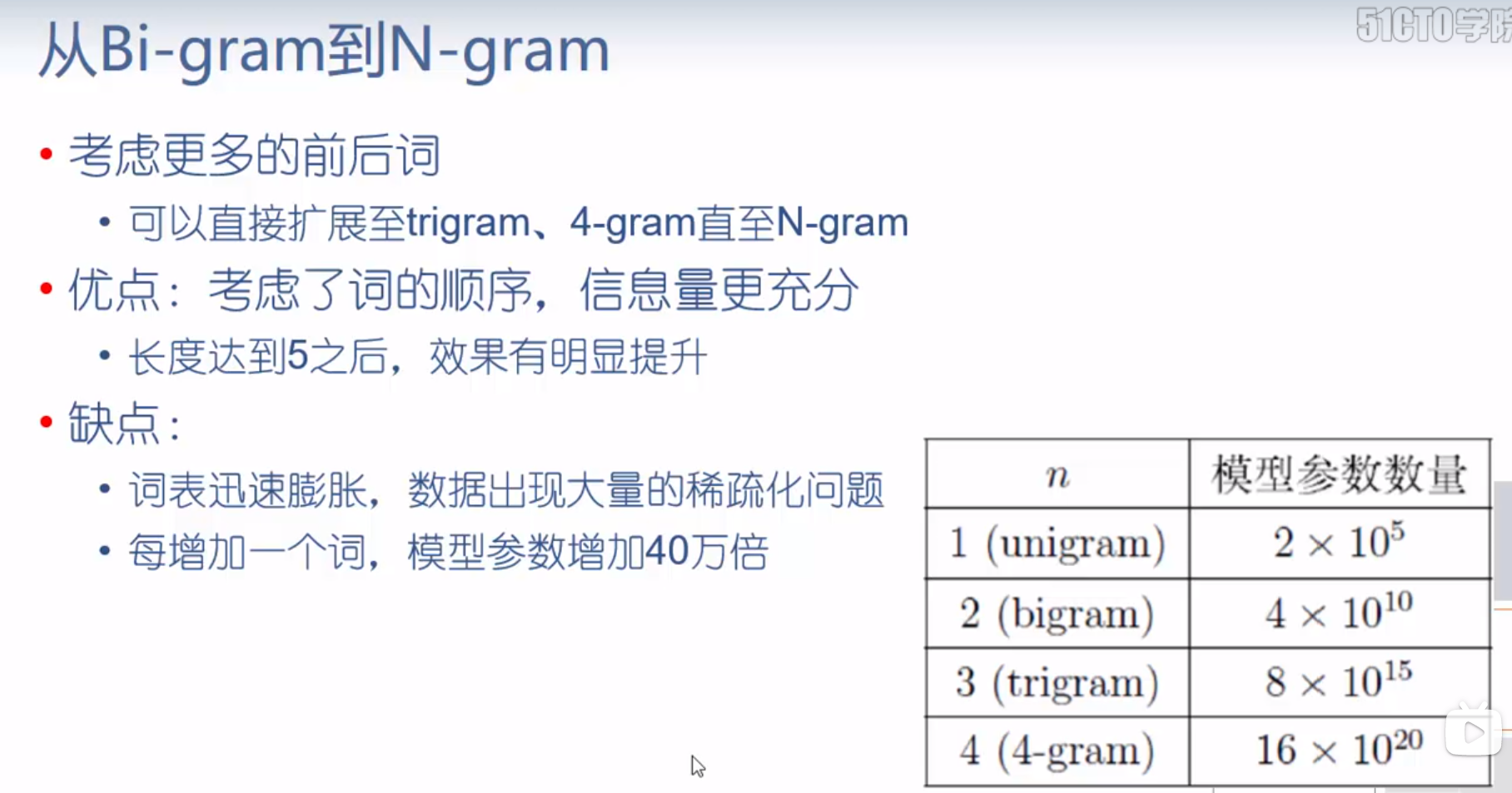

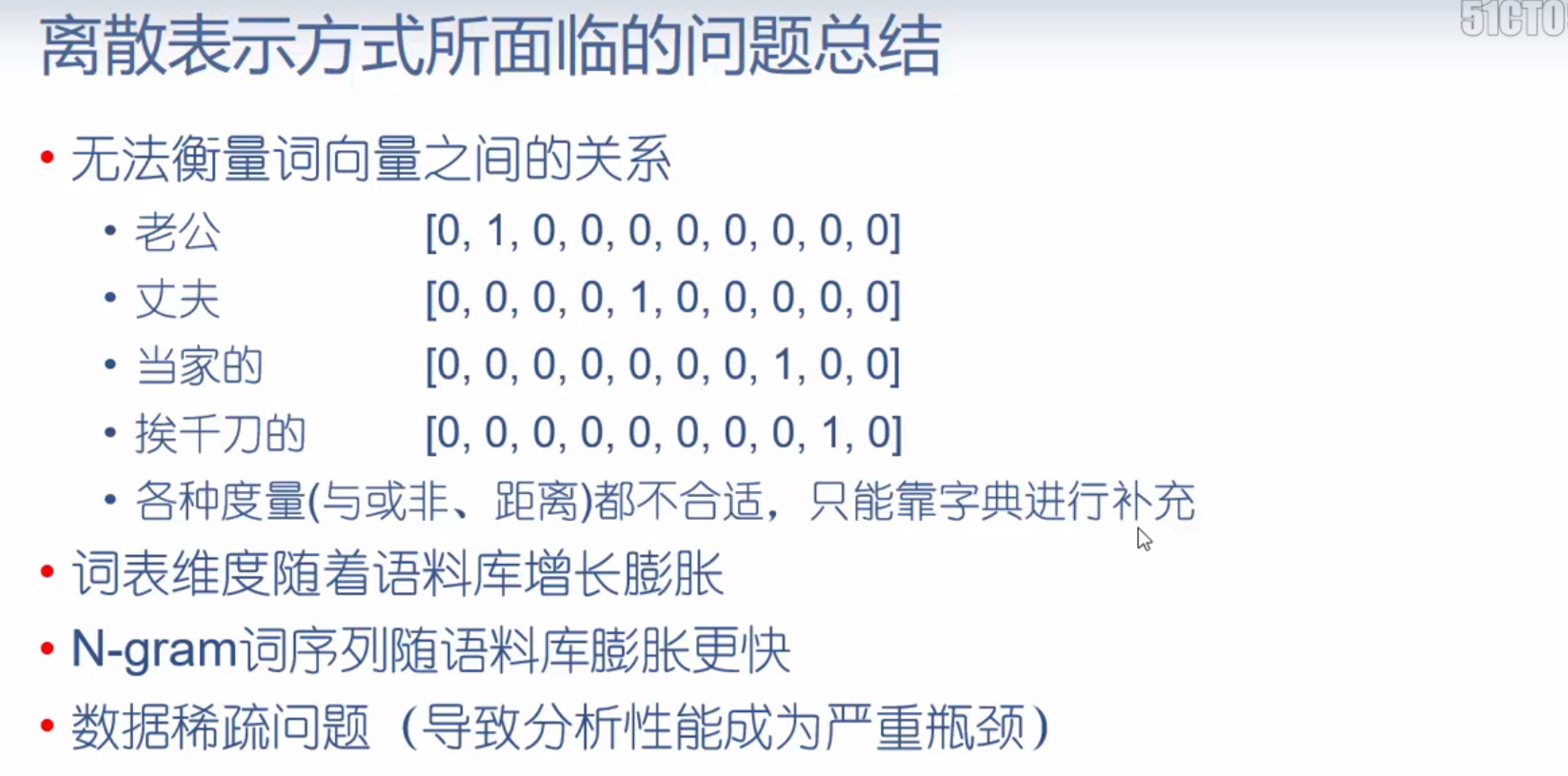

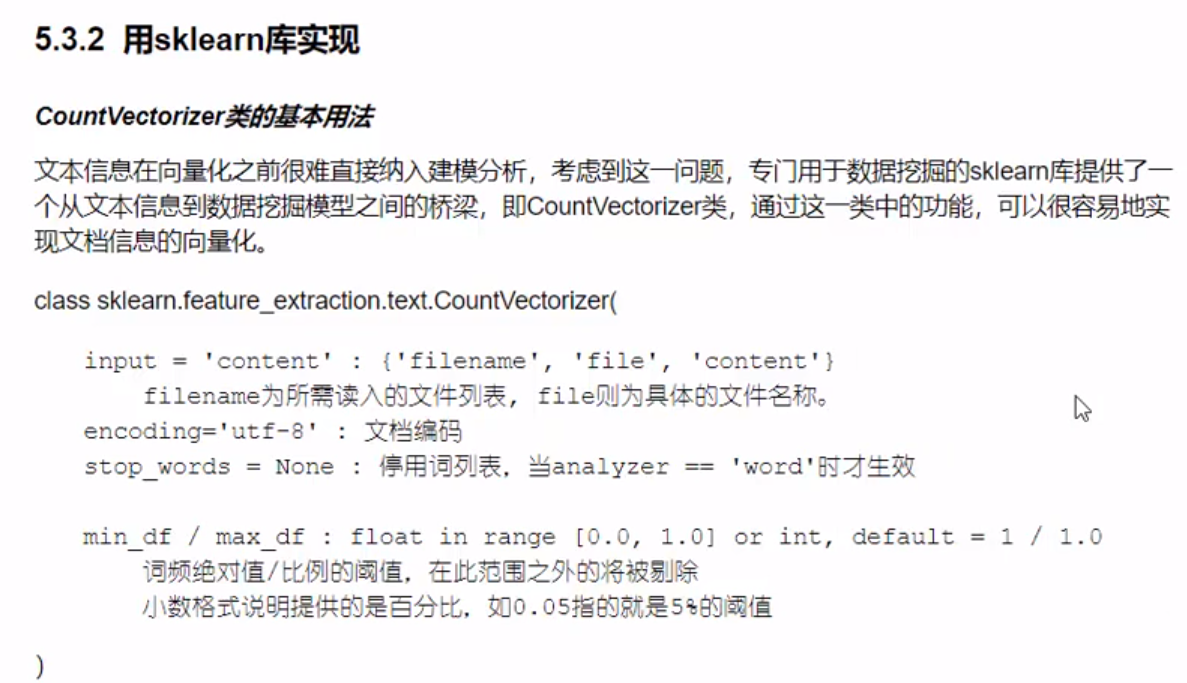

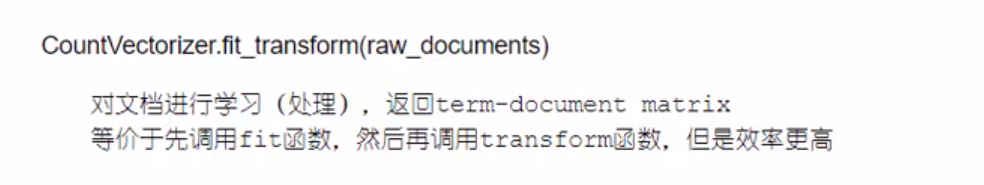

Vectorizing document information: the sklearn library, N-gram models, distributed representations, and co-occurrence matrices

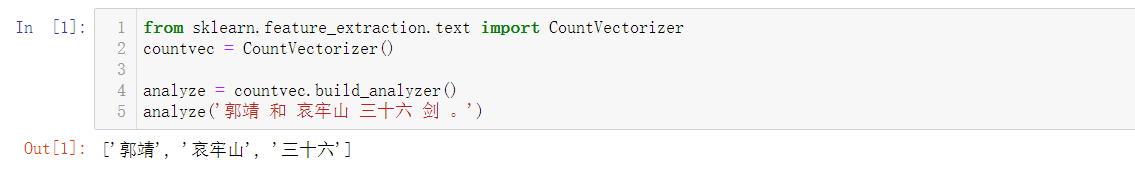

from sklearn.feature_extraction.text import CountVectorizer

countvec = CountVectorizer()

analyze = countvec.build_analyzer()

analyze('郭靖 和 哀牢山 三十六 剑 。')

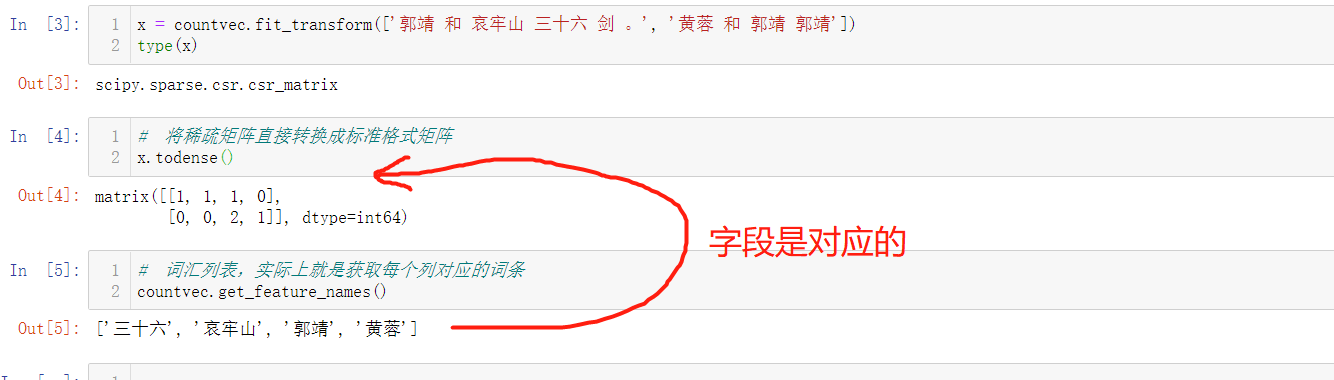

x = countvec.fit_transform(['郭靖 和 哀牢山 三十六 剑 。', '黄蓉 和 郭靖 郭靖'])

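type(x)

# Convert the sparse matrix directly into a standard dense matrix

x.todense()

# Vocabulary list: the term that each column corresponds to

# (get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out())

countvec.get_feature_names_out()

# Term dictionary: maps each term to its column index

countvec.vocabulary_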

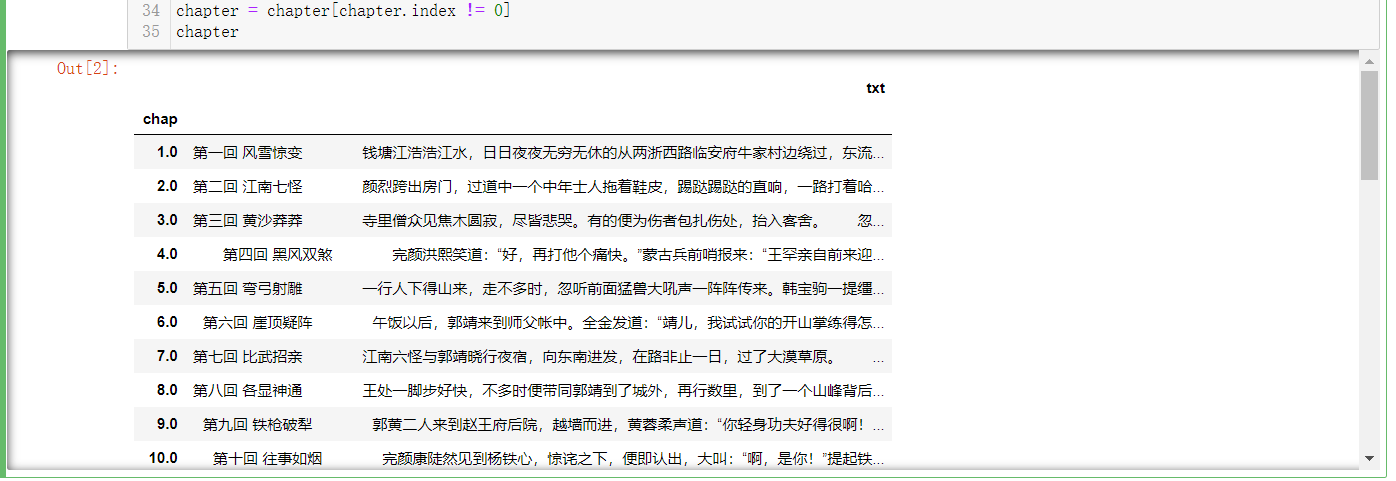

Jin Yong novels --> 射雕英雄传 (The Legend of the Condor Heroes) --> data processing

import pandas as pd

raw = pd.read_table('../data/金庸-射雕英雄传txt精校版.txt', names=['txt'], encoding="GBK")

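# Helper variables for chapter detection

def m_head(tmpstr):

    return tmpstr[:1]  # first character of the line

def m_mid(tmpstr):

    return tmpstr.find("回 ")  # position of "回 " in the line, -1 if absent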

raw['head'] = raw.txt.apply(m_head)

raw['mid'] = raw.txt.apply(m_mid)

raw['len'] = raw.txt.apply(len)

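# Chapter detection: a short line that starts with "第" and contains "回 " opens a new chapter

chapnum = 0

for i in range(len(raw)):

    if raw['head'][i] == "第" and raw['mid'][i] > 0 and raw['len'][i] < 30:

        chapnum += 1

    # Everything from the first appendix onward is assigned to chapter 0 and dropped later

    if chapnum >= 40 and raw['txt'][i] == "附录一:成吉思汗家族":

        chapnum = 0

    raw.loc[i, 'chap'] = chapnum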

# Delete the temporary variables

del raw['head']

del raw['mid']

del raw['len']

rawgrp = raw.groupby('chap')

chapter = rawgrp.agg(sum) # with string-only columns, sum concatenates the strings

chapter = chapter[chapter.index != 0]

chapter
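The row-by-row loop above can also be written in vectorized form. The following is a minimal sketch on a made-up toy frame (the lines in `toy` are placeholders, not the novel's text): a boolean mask marks chapter-heading lines, and its cumulative sum gives the chapter number of every line. It does not reproduce the appendix reset from the loop above, which would need a separate step.

```python
import pandas as pd

# Toy stand-in for the raw text: one line per row (placeholder content)
toy = pd.DataFrame({"txt": ["前言", "第一回 风雪惊变", "正文一", "正文二",
                            "第二回 江南七怪", "正文三"]})

# A heading starts with "第", contains "回 " after position 0, and is short
is_head = (
    toy.txt.str.startswith("第")
    & (toy.txt.str.find("回 ") > 0)
    & (toy.txt.str.len() < 30)
)

# Each heading increments the running chapter number
toy["chap"] = is_head.cumsum()

# Concatenate the lines of each chapter, dropping the front matter (chapter 0)
chapters = toy[toy.chap != 0].groupby("chap")["txt"].agg("".join)
print(chapters)
```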

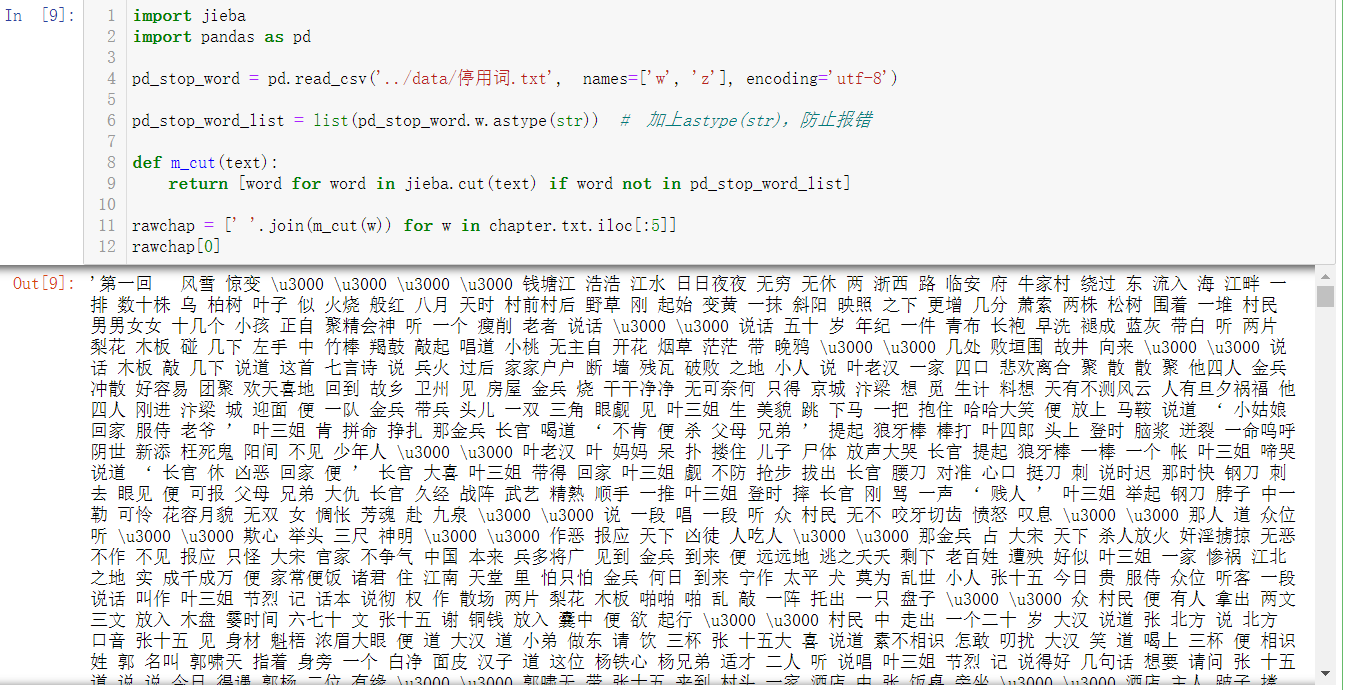

import jieba

import pandas as pd

pd_stop_word = pd.read_csv('../data/停用词.txt', names=['w', 'z'], encoding='utf-8')

pd_stop_word_list = list(pd_stop_word.w.astype(str)) # astype(str) guards against errors from non-string entries

def m_cut(text):

    # Segment with jieba and drop stop words

    return [word for word in jieba.cut(text) if word not in pd_stop_word_list]

rawchap = [' '.join(m_cut(w)) for w in chapter.txt.iloc[:5]]

rawchap[0]

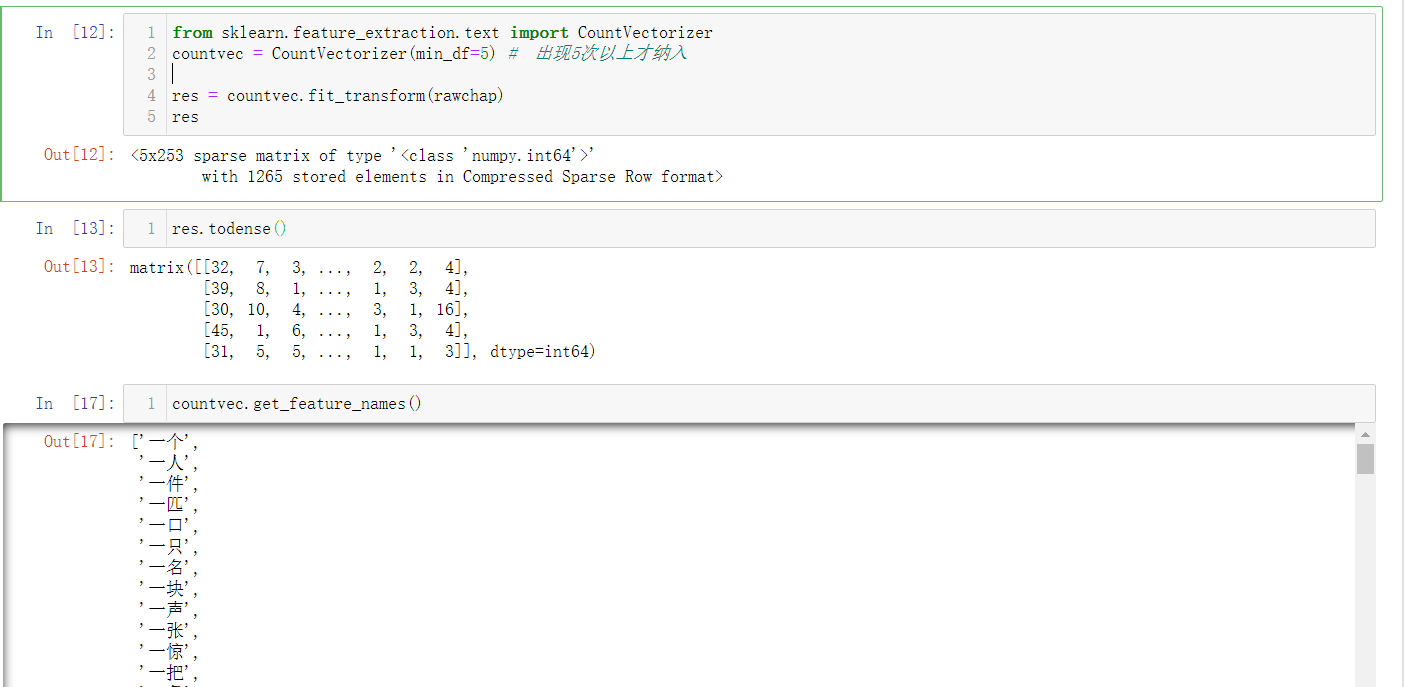

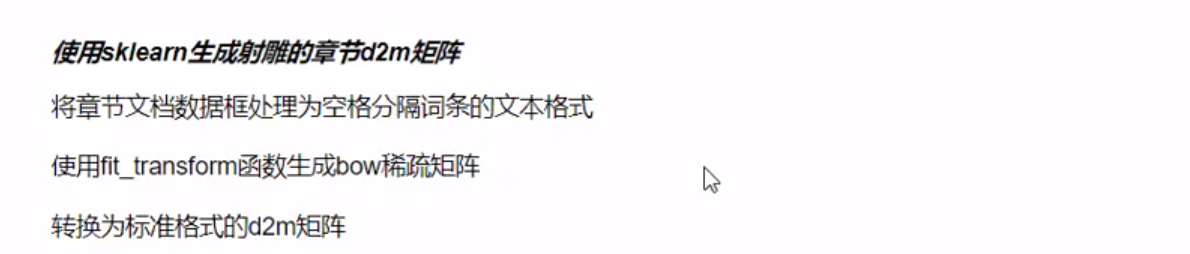

from sklearn.feature_extraction.text import CountVectorizer

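countvec = CountVectorizer(min_df=5) # keep only terms that occur in at least 5 documents (document frequency, not total count)

res = countvec.fit_transform(rawchap)

res

res.todense()

countvec.get_feature_names_out() # get_feature_names() was removed in scikit-learn 1.2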
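`min_df` is a document-frequency threshold: an integer value counts how many documents contain a term, not how often the term occurs overall. With only five chapters in `rawchap`, `min_df=5` therefore keeps just the terms that appear in every chapter. A minimal sketch on made-up toy documents (`toy_docs` and `vec` are illustrative names):

```python
from sklearn.feature_extraction.text import CountVectorizer

# 洪七公 occurs three times, but only in the first document
toy_docs = ["郭靖 黄蓉 洪七公 洪七公 洪七公", "郭靖 黄蓉", "黄蓉"]

# Keep terms that appear in at least 2 documents
vec = CountVectorizer(min_df=2)
vec.fit(toy_docs)

# 洪七公 is dropped despite its three occurrences: its document frequency is 1
print(set(vec.vocabulary_))
```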