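A Docker swarm cluster is created with the docker CLI, which is also used to deploy applications and manage the cluster.

Setting up a Docker swarm cluster is fairly simple. Here three virtual machines (one manager node and two worker nodes) are used to demonstrate the process.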

| hostname | IP |
|----------|----------------|
| master | 192.168.223.31 |
| node-01 | 192.168.223.32 |
| node-02 | 192.168.223.33 |

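Installing the virtual machines and docker-ce is not covered in detail here. On each machine, disable the default firewall and SELinux, install docker-ce 20.10.17 (the latest release at the time of writing), and then edit docker.service to add the parameters below.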

# vi /lib/systemd/system/docker.service

After ExecStart=/usr/bin/dockerd, add -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock. The modified configuration looks like this:

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock -H fd:// --containerd=/run/containerd/containerd.sock
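Editing the packaged unit file works, but a package upgrade may overwrite it. As an alternative sketch, the same listener flags could be placed in a systemd drop-in. The function name `write_docker_override` and the default drop-in directory are assumptions for illustration, and the sketch relies on `-H fd://` (the socket handed over by the packaged docker.socket unit) for local access instead of listing the unix socket explicitly. Port 2375 is unauthenticated and unencrypted, so this only fits a trusted lab network.

```shell
# Write a systemd drop-in that overrides ExecStart for dockerd.
# $1: drop-in directory (the default below is an assumed, conventional path).
write_docker_override() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the value inherited from the packaged unit.
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// -H tcp://0.0.0.0:2375 --containerd=/run/containerd/containerd.sock
EOF
}
```

Calling `write_docker_override` with no argument writes the file to the default directory; the change takes effect after `systemctl daemon-reload` and a restart of docker.service.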

After the change, reload the systemd configuration and restart the docker service.

# systemctl daemon-reload

# systemctl restart docker.service

Below is a record of the commands I used while setting up and using the swarm cluster.

---Initialize the manager node of the cluster

# docker swarm init --advertise-addr 192.168.223.31

Swarm initialized: current node (betygbmft1wkh4kmrf5wx45mc) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-66syvw9yv8xr457lk4eviixyhd5fviw1gg3ktkwa1nkdp2rb44-14530poeryamdfwlssfynmxhk 192.168.223.31:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

---Join the worker nodes to the manager node

# docker swarm join --token SWMTKN-1-66syvw9yv8xr457lk4eviixyhd5fviw1gg3ktkwa1nkdp2rb44-14530poeryamdfwlssfynmxhk 192.168.223.31:2377

This node joined a swarm as a worker.
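The join step can be scripted so that the token does not have to be copied by hand. A minimal sketch: `build_join_cmd` is a hypothetical helper, and 2377 is the management port shown in the init output above.

```shell
# Print the worker join command for a given token and manager address.
build_join_cmd() {
  local token="$1" manager_addr="$2"
  printf 'docker swarm join --token %s %s:2377\n' "$token" "$manager_addr"
}

# Usage on the manager (requires an initialized swarm):
#   build_join_cmd "$(docker swarm join-token -q worker)" 192.168.223.31
```

The printed command can then be run on each worker node, for example over ssh.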

---View the cluster nodes and their status

# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

betygbmft1wkh4kmrf5wx45mc * master Ready Active Leader 20.10.17

nq4urvgyfdjedabze9p5oll6q node-01 Ready Active 20.10.17

t6wtou7b5jm07qltlrp35ha85 node-02 Ready Active 20.10.17
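For a quick scripted check instead of reading the table by eye, the node list can be reduced to a count of nodes that are not Ready. This is only a sketch; `count_not_ready` is a hypothetical helper that expects "hostname status" pairs, which `docker node ls --format` can produce.

```shell
# Read "hostname status" lines on stdin and print how many nodes are not Ready.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage on the manager:
#   docker node ls --format '{{.Hostname}} {{.Status}}' | count_not_ready
```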

---Create the network

# docker network create --opt encrypted --driver overlay --attachable webnet

7e0huckau0g8olzsyvdts8ta2

---Create the nginx service (1)

# docker service create --replicas 2 --network webnet --name nginx --publish published=80,target=80 nginx:1.22.0

gf8n3ad4k1pkxqwej6xpdthhe

overall progress: 2 out of 2 tasks

1/2: running [==================================================>]

2/2: running [==================================================>]

verify: Service converged

or

---Create the nginx service (2)

# docker service create --replicas 3 --network webnet --name nginx -p 80:80 nginx:1.22.0

or

---Create the nginx service (3) - run the service only on non-manager nodes, i.e. replicas are placed only on worker nodes

# docker service create --replicas 3 --constraint node.role!=manager --network webnet --name nginx -p 80:80 nginx:1.22.0

---View the service list

# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

gf8n3ad4k1pk nginx replicated 2/2 nginx:1.22.0 *:80->80/tcp

---View the nginx tasks

# docker service ps nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

4vxulka6ofd2 nginx.1 nginx:1.22.0 master Running Running about a minute ago

res3qre7ywcx nginx.2 nginx:1.22.0 node-01 Running Running 3 minutes ago

---View the service details

# docker service inspect nginx

---Scale the service

# docker service scale nginx=3

nginx scaled to 3

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

# docker service ps nginx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

4vxulka6ofd2 nginx.1 nginx:1.22.0 master Running Running 10 minutes ago

res3qre7ywcx nginx.2 nginx:1.22.0 node-01 Running Running 11 minutes ago

xpwlus8bbo6z nginx.3 nginx:1.22.0 node-02 Running Running 25 seconds ago
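To see at a glance how the replicas are spread after scaling, the node column can be tallied. A small sketch; `tasks_per_node` is a hypothetical helper that reads one node name per line.

```shell
# Read one node name per line on stdin and print "node=count" per node.
tasks_per_node() {
  sort | uniq -c | awk '{ print $2 "=" $1 }'
}

# Usage on the manager:
#   docker service ps nginx --filter desired-state=running --format '{{.Node}}' | tasks_per_node
```

For the output above this would report one task on each of master, node-01 and node-02.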

---Remove the service

# docker service rm nginx

nginx

Next, use docker stack deploy to deploy portainer-ce and portainer-agent in order to monitor and visualize the swarm cluster.

First, pull the required images on the master node and on the worker nodes.

# docker pull portainer/portainer-ce

Using default tag: latest

latest: Pulling from portainer/portainer-ce

772227786281: Pull complete

96fd13befc87: Pull complete

35fb5a8b85ea: Pull complete

6665edb137b0: Pull complete

Digest: sha256:f716a714e6cdbb04b3f3ed4f7fb2494ce7eb4146e94020e324b2aae23e3917a9

Status: Downloaded newer image for portainer/portainer-ce:latest

docker.io/portainer/portainer-ce:latest

# docker pull portainer/agent:2.14.0

2.14.0: Pulling from portainer/agent

772227786281: Already exists

96fd13befc87: Already exists

3902c362cca3: Pull complete

a215b10008ab: Pull complete

434a8ea542bc: Pull complete

c1c68f189caa: Pull complete

Digest: sha256:8440499b6e1cda88442cf6c58c7fb6bad317708b796a747604ce76f65cc788ba

Status: Downloaded newer image for portainer/agent:2.14.0

docker.io/portainer/agent:2.14.0

# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

portainer/portainer-ce latest e8e975c3a7f0 6 days ago 278MB

portainer/agent 2.14.0 25e2624e6d49 6 days ago 166MB

nginx 1.22.0 b3c5c59017fb 10 days ago 142MB

Second, write the portainer-agent-stack.yml file.

version: '3.2'

services:
  agent:
    image: portainer/agent:2.14.0
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/volumes:/var/lib/docker/volumes
    networks:
      - agent_network
    deploy:
      mode: global
      placement:
        constraints: [node.platform.os == linux]

  portainer:
    image: portainer/portainer-ce:latest
    command: -H tcp://tasks.agent:9001 --tlsskipverify
    ports:
      - "9443:9443"
      - "9000:9000"
      - "8000:8000"
    volumes:
      - portainer_data:/data
    networks:
      - agent_network
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints: [node.role == manager]

networks:
  agent_network:
    driver: overlay
    attachable: true

volumes:
  portainer_data:

Note: the constraints value node.role == manager in the yml file above restricts the portainer service to the manager nodes of the swarm cluster.

Third, deploy and start portainer-ce and portainer-agent.

# docker stack deploy -c portainer-agent-stack.yml portainer

Creating network portainer_agent_network

Creating service portainer_agent

Creating service portainer_portainer

# docker stack ls

NAME SERVICES ORCHESTRATOR

portainer 2 Swarm

# docker stack ps portainer

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

y66nxsac6of4 portainer_agent.betygbmft1wkh4kmrf5wx45mc portainer/agent:2.14.0 master Running Running 11 seconds ago

91uog63d7mth portainer_agent.nq4urvgyfdjedabze9p5oll6q portainer/agent:2.14.0 node-01 Running Running 12 seconds ago

eahaqvm3gyzc portainer_agent.t6wtou7b5jm07qltlrp35ha85 portainer/agent:2.14.0 node-02 Running Running 11 seconds ago

wy03vu1phwdq portainer_portainer.1 portainer/portainer-ce:latest master Running Running 8 seconds ago

Once the stack is deployed and running, the portainer-ce management page is available at the following address.

https://192.168.223.31:9443/
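Portainer may take a few seconds to start answering after the stack is deployed. A generic retry helper can wait for the page before opening it in a browser. This is a sketch; `wait_until` is a hypothetical name, and the probe command is whatever is passed after the first two arguments.

```shell
# Retry a command until it succeeds.
# $1: maximum number of attempts, $2: seconds to sleep between attempts,
# remaining arguments: the command to run.
wait_until() {
  local tries="$1" delay="$2" i=0
  shift 2
  while [ "$i" -lt "$tries" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (-k accepts Portainer's self-signed certificate):
#   wait_until 30 2 curl -k -s -o /dev/null https://192.168.223.31:9443/
```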

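On first login, the login page asks for a password of at least 12 characters to create the login user. The home page then shows an overview of the system.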

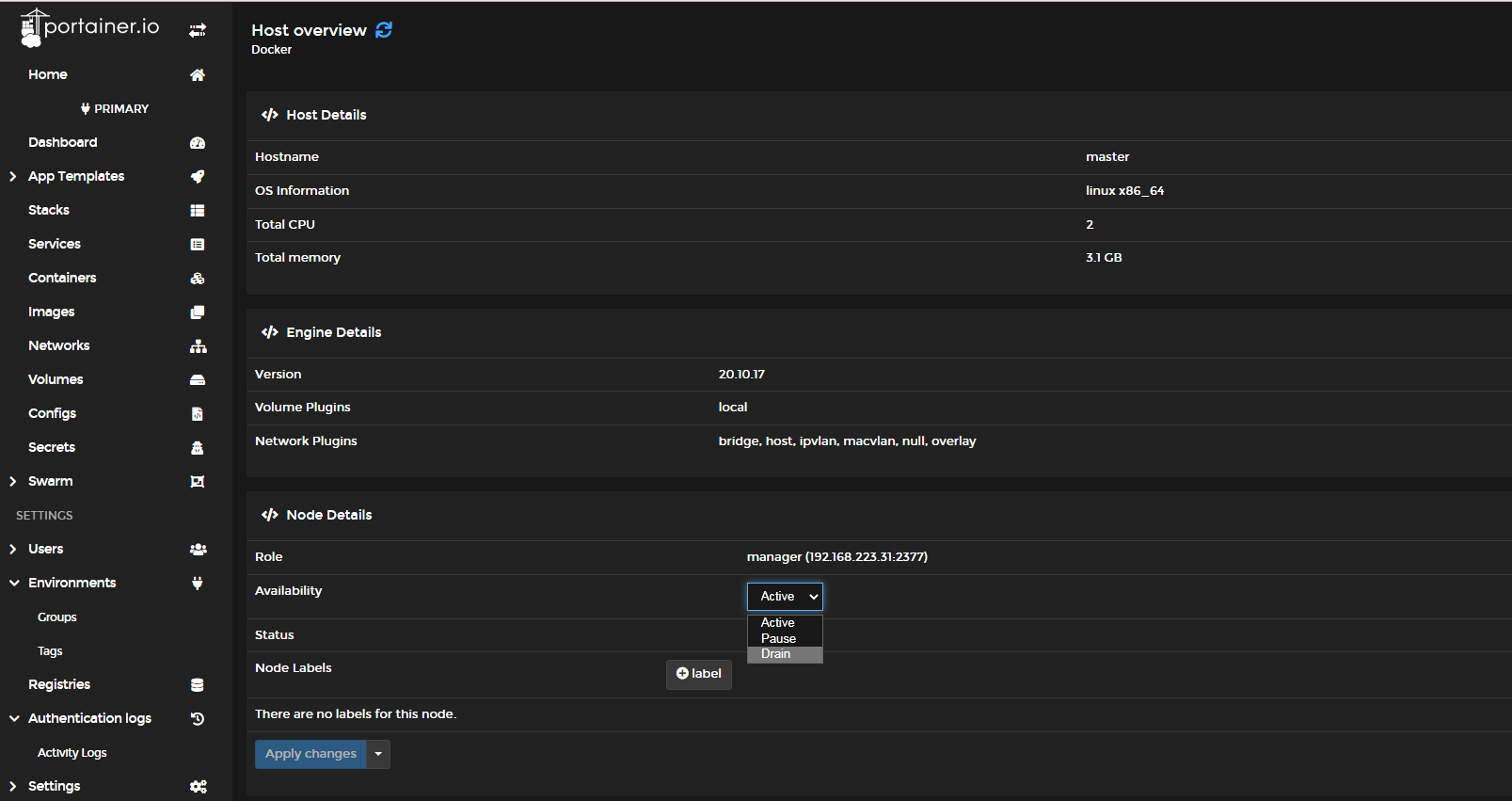

Viewing the swarm cluster information

The availability of a cluster node can be changed here: a node can be paused or drained. The default is Active.

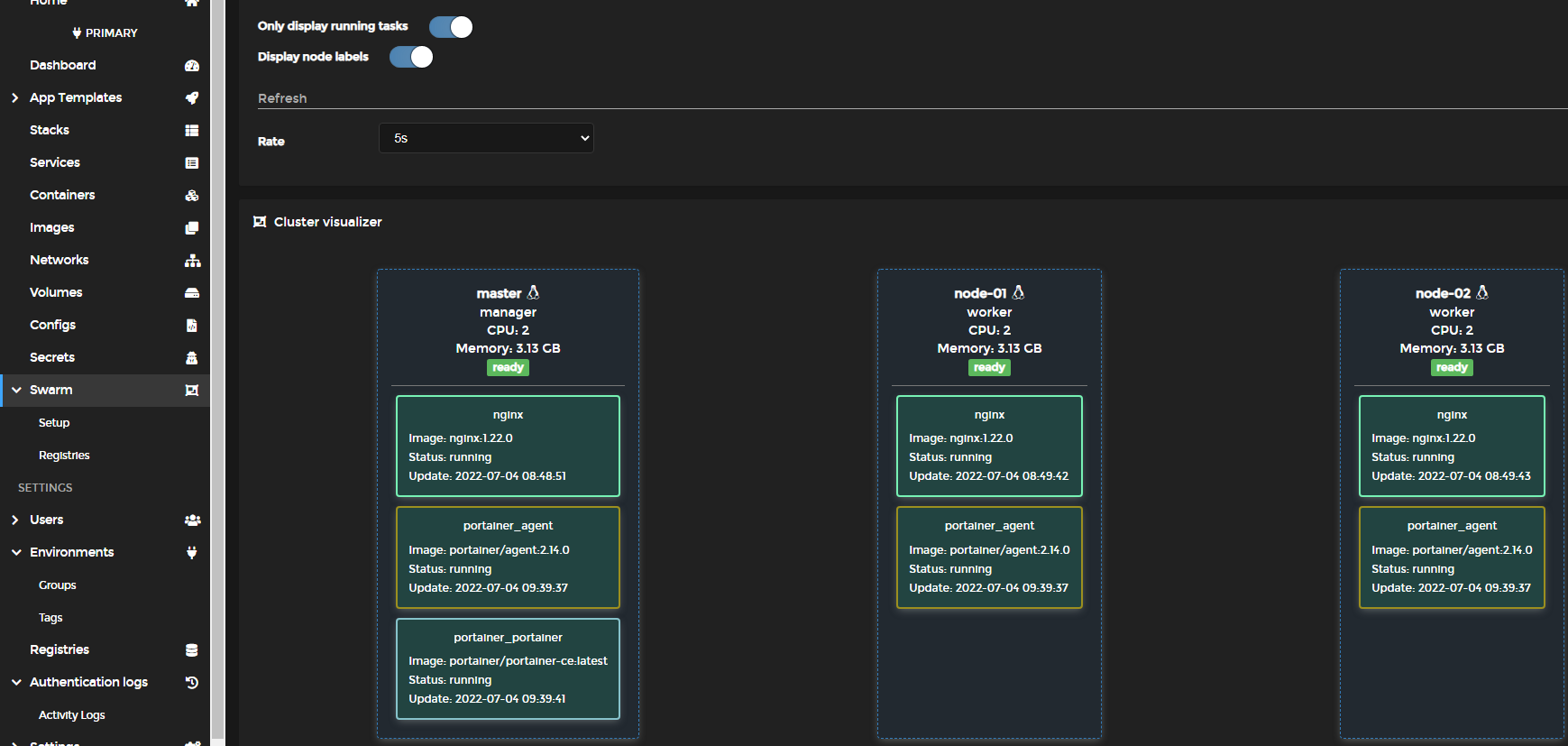
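The same availability change can be made from the command line with `docker node update --availability`, which accepts active, pause and drain. The sketch below only builds and validates the command; `node_availability_cmd` is a hypothetical helper, not part of Docker or Portainer.

```shell
# Print the docker command that sets a node's availability.
# $1: node name, $2: one of active, pause, drain.
node_availability_cmd() {
  case "$2" in
    active|pause|drain)
      printf 'docker node update --availability %s %s\n' "$2" "$1"
      ;;
    *)
      echo "unknown availability: $2" >&2
      return 1
      ;;
  esac
}

# Usage on the manager:
#   eval "$(node_availability_cmd node-01 drain)"
```

Draining a node moves its tasks to other nodes, while pausing only stops new tasks from being scheduled on it.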

Cluster Visualizer:

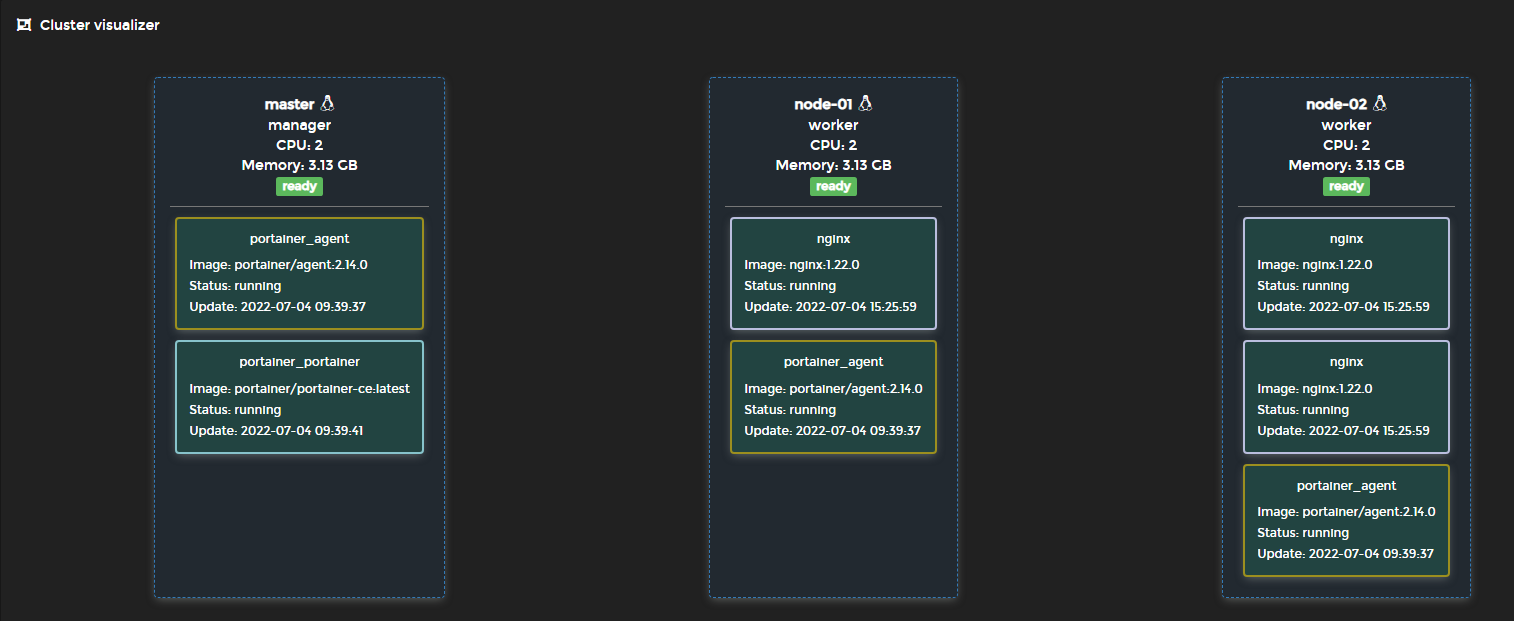

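This is the Visualizer after creating the Nginx service with manager nodes excluded: the Nginx service starts and runs only on the worker nodes.

Characteristics of a Docker swarm cluster:

1) When all nodes of the swarm cluster are shut down and restarted, the previously created services start again automatically.

2) When a worker node of the swarm cluster goes down, the containers that were running on it are started on other nodes so that the number of replicas set by replicas stays the same.

3) When the swarm cluster has only one manager node and that node goes down, the services that were running on it are not restarted on the worker nodes, so the number of replicas set by replicas can no longer be guaranteed.

4) When the manager node starts first, all replicas may be started on it (as shown below, all 3 replicas started on the master node). To make proper use of the worker nodes' resources, the surplus containers on the manager node then have to be stopped, after which swarm starts replacements on the other nodes. The conclusion is that when booting the swarm cluster servers, it is best to start the worker nodes first and the master node afterwards, so that the services created by the manager node are spread evenly across the worker nodes and the master node.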

[root@master ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

ba190e2c7691 nginx:1.22.0 "/docker-entrypoint.…" 27 seconds ago Up 20 seconds 80/tcp nginx.3.6ift78belu1ypa22qt3ib5tcu

3a79485f8638 nginx:1.22.0 "/docker-entrypoint.…" 28 seconds ago Up 20 seconds 80/tcp nginx.1.l3k4csim2kii8552k1wyg0dq4

33812961a987 nginx:1.22.0 "/docker-entrypoint.…" 28 seconds ago Up 20 seconds 80/tcp nginx.2.mplcqurndpu8h6fw8ml8nj6cs
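Instead of stopping containers by hand, one option is `docker service update --force`, which restarts the service's tasks and gives the scheduler a chance to spread them over the nodes that are now available. The sketch below only detects the lopsided case; `all_on_one_node` is a hypothetical helper that reads one node name per line.

```shell
# Succeed if every task listed on stdin runs on the same node.
all_on_one_node() {
  [ "$(sort -u | wc -l | tr -d ' ')" -eq 1 ]
}

# Usage on the manager:
#   docker service ps nginx --filter desired-state=running --format '{{.Node}}' \
#     | all_on_one_node && docker service update --force nginx
```

The forced update restarts tasks, so it briefly reduces the number of running replicas while it rolls through them.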