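Linux Bridge behaves much like an ordinary home switch: it learns source MAC addresses on its own and maintains a MAC address table, so it can forward a frame straight to the right port instead of flooding it to every port. This post looks at a more advanced virtual switch, Open vSwitch (ovs from here on). It is closer to an enterprise-grade switch: it supports layer 2 and layer 3 operation, supports more tunneling protocols, and integrates easily into an SDN.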
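To make the self-learning behavior concrete, here is a minimal Python sketch of a learning switch. It is a toy model under simplifying assumptions (no ageing timer, no VLANs), not Linux Bridge's actual implementation; the class name LearningSwitch and the shortened MAC addresses are made up for illustration.

```python
class LearningSwitch:
    """Toy model of a self-learning L2 switch (hypothetical, no ageing)."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Learn the source MAC, then return the ports the frame is sent to."""
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination sits behind the ingress port: nothing to forward.
            return []
        return [out_port]


sw = LearningSwitch(ports=[1, 2, 3])
print(sw.receive(1, "aa:aa", "bb:bb"))  # bb:bb unknown, frame is flooded
print(sw.receive(2, "bb:bb", "aa:aa"))  # aa:aa was learned on port 1
print(sw.receive(1, "aa:aa", "bb:bb"))  # bb:bb was learned on port 2
```

The first frame is flooded because the destination is unknown; once both hosts have sent a frame, each one is reached through a single port.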

Connecting namespaces with ovs + veth pairs

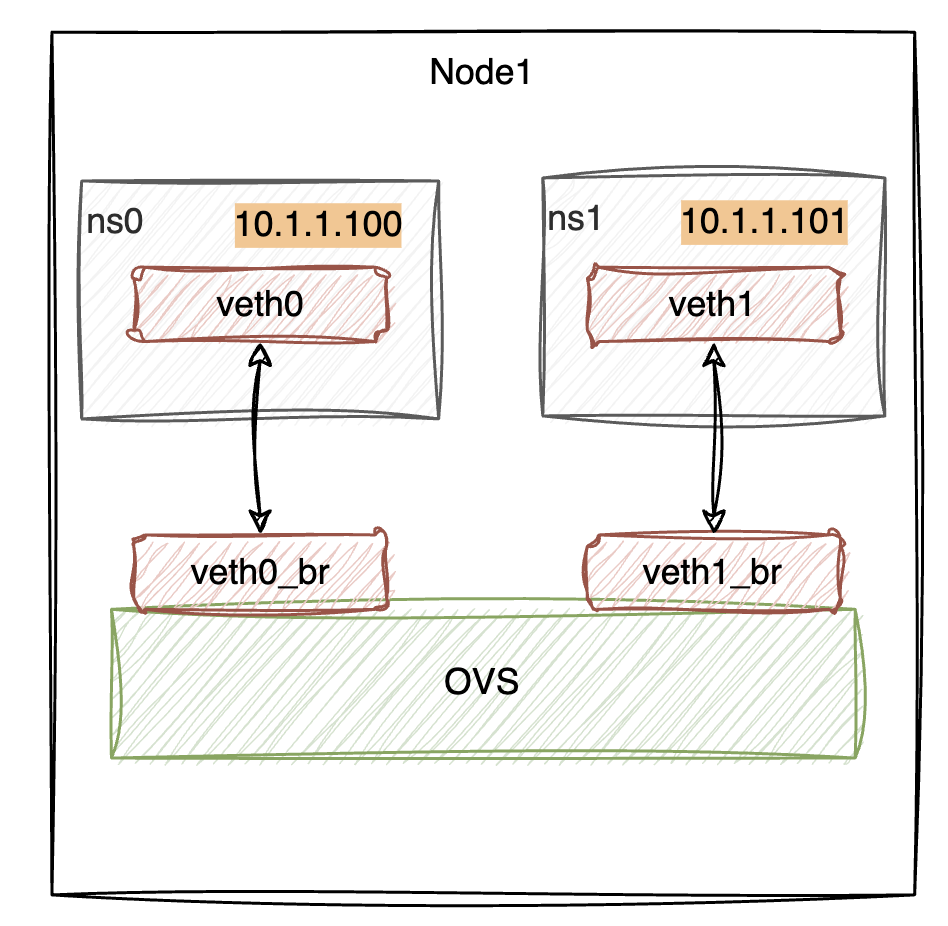

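We first use ovs together with veth pairs to connect two network namespaces. After the earlier study of Linux Bridge this part should be easy to follow; the topology is shown in the figure above.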

First create two namespaces, ns0 and ns1:

$ ip netns add ns0

$ ip netns add ns1

$ ip netns list

ns1 (id: 1)

ns0 (id: 0)

Create the veth pairs, move veth0 and veth1 into ns0 and ns1 respectively, assign IP addresses, and bring the devices up:

$ ip link add veth0 type veth peer name veth0_br

$ ip link set dev veth0 netns ns0

$ ip netns exec ns0 ip addr add 10.1.1.100/24 dev veth0

$ ip netns exec ns0 ip link set dev veth0 up

$ ip link set veth0_br up

$ ip link add veth1 type veth peer name veth1_br

$ ip link set dev veth1 netns ns1

$ ip netns exec ns1 ip addr add 10.1.1.101/24 dev veth1

$ ip netns exec ns1 ip link set dev veth1 up

$ ip link set veth1_br up
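The per-namespace steps above are the same apart from the index and the address, so they can be generated. The Python sketch below only builds the command strings and does not run them; the function name veth_setup_commands and its parameters are hypothetical, and the naming scheme (nsN, vethN, vethN_br, 10.1.1.100 plus N) is simply copied from the commands above.

```python
def veth_setup_commands(index, subnet_prefix="10.1.1", first_host=100):
    """Return the ip commands that wire namespace ns<index> to a veth pair."""
    ns = f"ns{index}"
    veth = f"veth{index}"
    peer = f"veth{index}_br"  # the end that stays in the root namespace
    addr = f"{subnet_prefix}.{first_host + index}/24"
    return [
        f"ip netns add {ns}",
        f"ip link add {veth} type veth peer name {peer}",
        f"ip link set dev {veth} netns {ns}",
        f"ip netns exec {ns} ip addr add {addr} dev {veth}",
        f"ip netns exec {ns} ip link set dev {veth} up",
        f"ip link set {peer} up",
    ]


# Print the commands for ns0 and ns1; review them before running as root.
for i in range(2):
    print("\n".join(veth_setup_commands(i)))
```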

Finally create the ovs bridge br0 and plug the _br end of each veth pair into it:

$ ovs-vsctl add-br br0

$ ip link set br0 up

$ ovs-vsctl add-port br0 veth0_br

$ ovs-vsctl add-port br0 veth1_br

The ovs-vsctl tool that ships with Open vSwitch shows the ports of br0:

$ ovs-vsctl show

a094b4b3-903a-428d-9b42-b60cd4a607ce
    Bridge br0
        Port veth0_br
            Interface veth0_br
        Port veth1_br
            Interface veth1_br
        Port br0
            Interface br0
                type: internal
    ovs_version: "2.17.7"

Pinging veth1 from veth0 now succeeds, so the two namespaces are connected. Like Linux Bridge, the ovs bridge br0 provides basic layer 2 switching.

$ ip netns exec ns0 ping -c1 10.1.1.101

PING 10.1.1.101 (10.1.1.101) 56(84) bytes of data.

64 bytes from 10.1.1.101: icmp_seq=1 ttl=64 time=0.556 ms

--- 10.1.1.101 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.556/0.556/0.556/0.000 ms

ovs flow tables

With Linux Bridge, brctl showmacs BRIDGE_NAME shows the MAC address table of a given bridge, for example:

$ brctl showmacs linux_br0

port no mac addr is local? ageing timer

2 82:c4:73:f0:75:df yes 0.00

2 82:c4:73:f0:75:df yes 0.00

2 a2:60:3b:b0:83:44 no 26.80

1 ce:85:09:8a:b1:7b yes 0.00

1 ce:85:09:8a:b1:7b yes 0.00

1 da:ff:de:36:22:8e no 72.11

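The MAC address table shows directly which MAC addresses sit behind each switch port. ovs, however, does not base its forwarding decisions on a plain MAC address table; it uses a different mechanism, OpenFlow. The flow table of br0 can be inspected with the ovs-ofctl dump-flows command provided by Open vSwitch: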

$ ovs-ofctl dump-flows br0

cookie=0x0, duration=3249.482s, table=0, n_packets=66, n_bytes=5902, priority=0 actions=NORMAL

br0 has a single default rule whose action is NORMAL, which means br0 subjects every frame to the device's normal L2/L3 processing.

Let's change the default behavior of br0, for example by dropping every packet that enters through veth0_br. ovs-ofctl add-flow adds a flow rule to the specified switch:

$ ovs-ofctl add-flow br0 in_port=veth0_br,actions=drop

$ ovs-ofctl dump-flows br0

cookie=0x0, duration=2.054s, table=0, n_packets=0, n_bytes=0, in_port="veth0_br" actions=drop

cookie=0x0, duration=3268.365s, table=0, n_packets=72, n_bytes=6266, priority=0 actions=NORMAL

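The new rule has no explicit priority, so it gets the ovs-ofctl default of 32768 and takes precedence over the priority=0 NORMAL rule. Pinging veth1 from veth0 again now fails: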

$ ip netns exec ns0 ping -c1 10.1.1.101

PING 10.1.1.101 (10.1.1.101) 56(84) bytes of data.

--- 10.1.1.101 ping statistics ---

1 packets transmitted, 0 received, 100% packet loss, time 0ms

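The rule we added is simple, but it demonstrates an important capability of ovs: it is programmable. By adjusting the flow rules we can change the switch's default behavior, which is what makes ovs a good fit for SDN architectures.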
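The way the drop rule overrides the NORMAL rule can be sketched as a priority lookup. The Python model below is a simplification: it only supports exact-match fields (real OpenFlow matches also support masks and many more fields), and the lookup function and dictionary layout are assumptions made for this example. The default priority of 32768 is what ovs-ofctl assigns when a flow is added without one.

```python
DEFAULT_PRIORITY = 32768  # priority ovs-ofctl uses when a flow specifies none


def lookup(flows, packet):
    """Return the action of the highest-priority flow that matches the packet.

    A flow matches when every field in its "match" dict equals the packet's
    value for that field; an empty match dict matches every packet.
    """
    candidates = [
        flow for flow in flows
        if all(packet.get(field) == value for field, value in flow["match"].items())
    ]
    if not candidates:
        return None  # table miss
    best = max(candidates, key=lambda flow: flow.get("priority", DEFAULT_PRIORITY))
    return best["action"]


# The default rule of br0: match everything, priority 0, action NORMAL.
flows = [{"match": {}, "priority": 0, "action": "NORMAL"}]
print(lookup(flows, {"in_port": "veth0_br"}))

# Equivalent of: ovs-ofctl add-flow br0 in_port=veth0_br,actions=drop
flows.append({"match": {"in_port": "veth0_br"}, "action": "drop"})
print(lookup(flows, {"in_port": "veth0_br"}))
print(lookup(flows, {"in_port": "veth1_br"}))
```

Frames from veth0_br hit the drop rule, while frames from veth1_br still fall through to NORMAL.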

faucet + ovs

We use faucet, an open-source SDN controller, to explore what ovs can do. The official faucet installation guide is clear, so installation is not covered here.

Edit the faucet configuration. Here we define a switch sw1 with 5 ports: port1, port2 and port3 are in VLAN 100, and port4 and port5 are in VLAN 200:

$ cat faucet.yaml

vlans:
  it:
    vid: 100
  hr:
    vid: 200
dps:
  sw1:
    dp_id: 0x1
    hardware: "Open vSwitch"
    interfaces:
      1:
        name: "host1"
        native_vlan: it
      2:
        name: "host2"
        native_vlan: it
      3:
        name: "host3"
        native_vlan: it
      4:
        name: "host4"
        native_vlan: hr
      5:
        name: "host5"
        native_vlan: hr
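As a quick sanity check of this configuration, the Python sketch below derives which ports end up untagged in which VLAN. The config dictionary is a hand-copied mirror of faucet.yaml (no YAML parser is used), and untagged_ports_by_vid is a hypothetical helper, not part of faucet.

```python
# Hand-copied mirror of the faucet.yaml shown above.
config = {
    "vlans": {"it": {"vid": 100}, "hr": {"vid": 200}},
    "dps": {
        "sw1": {
            "dp_id": 0x1,
            "interfaces": {
                1: {"name": "host1", "native_vlan": "it"},
                2: {"name": "host2", "native_vlan": "it"},
                3: {"name": "host3", "native_vlan": "it"},
                4: {"name": "host4", "native_vlan": "hr"},
                5: {"name": "host5", "native_vlan": "hr"},
            },
        }
    },
}


def untagged_ports_by_vid(config, dp_name):
    """Group the port numbers of a datapath by the VID of their native VLAN."""
    members = {}
    for port_no, port in config["dps"][dp_name]["interfaces"].items():
        vid = config["vlans"][port["native_vlan"]]["vid"]
        members.setdefault(vid, []).append(port_no)
    return {vid: sorted(ports) for vid, ports in members.items()}


print(untagged_ports_by_vid(config, "sw1"))  # {100: [1, 2, 3], 200: [4, 5]}
```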

Once started, faucet listens on local port 6653. Its log now shows:

Nov 01 08:28:32 faucet INFO version 1.10.8

Nov 01 08:28:32 faucet INFO Reloading configuration

Nov 01 08:28:32 faucet INFO configuration /etc/faucet/faucet.yaml changed, analyzing differences

Nov 01 08:28:32 faucet INFO Add new datapath DPID 1 (0x1)

Next create br0 with ovs using the command below. The important part is set-controller br0 tcp:127.0.0.1:6653, which points br0 at its controller, that is, the faucet service we just started.

$ ovs-vsctl add-br br0 \
    -- set bridge br0 other-config:datapath-id=0000000000000001 \
    -- add-port br0 p1 -- set interface p1 ofport_request=1 \
    -- add-port br0 p2 -- set interface p2 ofport_request=2 \
    -- add-port br0 p3 -- set interface p3 ofport_request=3 \
    -- add-port br0 p4 -- set interface p4 ofport_request=4 \
    -- add-port br0 p5 -- set interface p5 ofport_request=5 \
    -- set-controller br0 tcp:127.0.0.1:6653 \
    -- set controller br0 connection-mode=out-of-band
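The datapath-id in this command has to identify the same switch as dp_id: 0x1 in faucet.yaml, and ovs expects it as exactly 16 hex digits. The Python sketch below shows the conversion; the helper names ovs_datapath_id and add_port_args are hypothetical, and the p<N> port naming simply follows the command above.

```python
def ovs_datapath_id(dp_id):
    """Format a faucet dp_id as the 16-hex-digit value for other-config:datapath-id."""
    if not 0 <= dp_id < 2 ** 64:
        raise ValueError("datapath id must fit in 64 bits")
    return format(dp_id, "016x")


def add_port_args(port_numbers, bridge="br0"):
    """Build the add-port arguments that pin each port to a fixed OpenFlow port number."""
    return [
        f"-- add-port {bridge} p{n} -- set interface p{n} ofport_request={n}"
        for n in port_numbers
    ]


print(ovs_datapath_id(0x1))  # 0000000000000001
print("\n".join(add_port_args(range(1, 6))))
```

Pinning ofport_request to the interface numbers used in faucet.yaml keeps the OpenFlow port numbers and the controller configuration in agreement.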

After br0 is created, the faucet log shows that br0 has connected to the controller:

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 port desc stats

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 delta in up state: set() => {1, 2, 3, 4, 5, 4294967294}

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 1 fabricating ADD status True

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 status change: Port 1 up status True reason ADD state 0

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 1 (host1) up

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Configuring VLAN it vid:100 untagged: Port 1,Port 2,Port 3

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 2 fabricating ADD status True

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 status change: Port 2 up status True reason ADD state 0

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 2 (host2) up

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Configuring VLAN it vid:100 untagged: Port 1,Port 2,Port 3

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 3 fabricating ADD status True

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 status change: Port 3 up status True reason ADD state 0

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 3 (host3) up

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Configuring VLAN it vid:100 untagged: Port 1,Port 2,Port 3

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 4 fabricating ADD status True

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 status change: Port 4 up status True reason ADD state 0

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 4 (host4) up

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Configuring VLAN hr vid:200 untagged: Port 4,Port 5

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 5 fabricating ADD status True

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 status change: Port 5 up status True reason ADD state 0

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 5 (host5) up

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Configuring VLAN hr vid:200 untagged: Port 4,Port 5

Nov 01 08:29:34 faucet.valve ERROR DPID 1 (0x1) sw1 send_flow_msgs: DP not up

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Cold start configuring DP

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 1 (host1) configured

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 2 (host2) configured

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 3 (host3) configured

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 4 (host4) configured

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Port 5 (host5) configured

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Configuring VLAN it vid:100 untagged: Port 1,Port 2,Port 3

Nov 01 08:29:34 faucet.valve INFO DPID 1 (0x1) sw1 Configuring VLAN hr vid:200 untagged: Port 4,Port 5

The flow table of br0 now contains the default rules installed by the controller:

$ ovs-ofctl dump-flows br0

cookie=0x5adc15c0, duration=47.546s, table=0, n_packets=0, n_bytes=0, priority=4096,in_port=p1,vlan_tci=0x0000/0x1fff actions=mod_vlan_vid:100,resubmit(,1)

cookie=0x5adc15c0, duration=47.546s, table=0, n_packets=0, n_bytes=0, priority=4096,in_port=p2,vlan_tci=0x0000/0x1fff actions=mod_vlan_vid:100,resubmit(,1)

cookie=0x5adc15c0, duration=47.546s, table=0, n_packets=0, n_bytes=0, priority=4096,in_port=p3,vlan_tci=0x0000/0x1fff actions=mod_vlan_vid:100,resubmit(,1)

cookie=0x5adc15c0, duration=47.545s, table=0, n_packets=0, n_bytes=0, priority=4096,in_port=p4,vlan_tci=0x0000/0x1fff actions=mod_vlan_vid:200,resubmit(,1)

cookie=0x5adc15c0, duration=47.545s, table=0, n_packets=0, n_bytes=0, priority=4096,in_port=p5,vlan_tci=0x0000/0x1fff actions=mod_vlan_vid:200,resubmit(,1)

cookie=0x5adc15c0, duration=47.545s, table=0, n_packets=0, n_bytes=0, priority=0 actions=drop

cookie=0x5adc15c0, duration=47.546s, table=1, n_packets=0, n_bytes=0, priority=20490,dl_type=0x9000 actions=drop

cookie=0x5adc15c0, duration=47.546s, table=1, n_packets=0, n_bytes=0, priority=20480,dl_src=ff:ff:ff:ff:ff:ff actions=drop

cookie=0x5adc15c0, duration=47.546s, table=1, n_packets=0, n_bytes=0, priority=20480,dl_src=0e:00:00:00:00:01 actions=drop

cookie=0x5adc15c0, duration=47.546s, table=1, n_packets=0, n_bytes=0, priority=4096,dl_vlan=100 actions=CONTROLLER:96,resubmit(,2)

cookie=0x5adc15c0, duration=47.546s, table=1, n_packets=0, n_bytes=0, priority=4096,dl_vlan=200 actions=CONTROLLER:96,resubmit(,2)

cookie=0x5adc15c0, duration=47.546s, table=1, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,2)

cookie=0x5adc15c0, duration=47.546s, table=2, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,3)

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8240,dl_dst=01:00:0c:cc:cc:cc actions=drop

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8240,dl_dst=01:00:0c:cc:cc:cd actions=drop

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8240,dl_vlan=100,dl_dst=ff:ff:ff:ff:ff:ff actions=strip_vlan,output:p1,output:p2,output:p3

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8240,dl_vlan=200,dl_dst=ff:ff:ff:ff:ff:ff actions=strip_vlan,output:p4,output:p5

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8236,dl_dst=01:80:c2:00:00:00/ff:ff:ff:ff:ff:f0 actions=drop

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8216,dl_vlan=100,dl_dst=01:80:c2:00:00:00/ff:ff:ff:00:00:00 actions=strip_vlan,output:p1,output:p2,output:p3

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8216,dl_vlan=100,dl_dst=01:00:5e:00:00:00/ff:ff:ff:00:00:00 actions=strip_vlan,output:p1,output:p2,output:p3

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8216,dl_vlan=200,dl_dst=01:80:c2:00:00:00/ff:ff:ff:00:00:00 actions=strip_vlan,output:p4,output:p5

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8216,dl_vlan=200,dl_dst=01:00:5e:00:00:00/ff:ff:ff:00:00:00 actions=strip_vlan,output:p4,output:p5

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8208,dl_vlan=100,dl_dst=33:33:00:00:00:00/ff:ff:00:00:00:00 actions=strip_vlan,output:p1,output:p2,output:p3

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8208,dl_vlan=200,dl_dst=33:33:00:00:00:00/ff:ff:00:00:00:00 actions=strip_vlan,output:p4,output:p5

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8192,dl_vlan=100 actions=strip_vlan,output:p1,output:p2,output:p3

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=8192,dl_vlan=200 actions=strip_vlan,output:p4,output:p5

cookie=0x5adc15c0, duration=47.547s, table=3, n_packets=0, n_bytes=0, priority=0 actions=drop

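The generated flow rules are fairly complex, even though our faucet configuration is simple, and analyzing them one by one would take a while. Fortunately ovs ships tooling that helps us understand how the switch processes a packet.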

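Below, the -generate option makes ovs-appctl ofproto/trace build an actual frame from the flow description, so side effects such as handing the frame to the controller really take place. The frame enters through port p1 with source MAC 00:11:11:00:00:00 and destination MAC 00:22:22:00:00:00, and ovs-appctl ofproto/trace reports which flow rules it matches: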

$ ovs-appctl ofproto/trace br0 in_port=p1,dl_src=00:11:11:00:00:00,dl_dst=00:22:22:00:00:00 -generate

Flow: in_port=1,vlan_tci=0x0000,dl_src=00:11:11:00:00:00,dl_dst=00:22:22:00:00:00,dl_type=0x0000

bridge("br0")
-------------
 0. in_port=1,vlan_tci=0x0000/0x1fff, priority 4096, cookie 0x5adc15c0
    push_vlan:0x8100
    set_field:4196->vlan_vid
    goto_table:1
 1. dl_vlan=100, priority 4096, cookie 0x5adc15c0
    CONTROLLER:96
    goto_table:2
 2. priority 0, cookie 0x5adc15c0
    goto_table:3
 3. dl_vlan=100, priority 8192, cookie 0x5adc15c0
    pop_vlan
    output:1
     >> skipping output to input port
    output:2
    output:3

Final flow: unchanged
Megaflow: recirc_id=0,eth,in_port=1,dl_src=00:11:11:00:00:00,dl_dst=00:22:22:00:00:00,dl_type=0x0000
Datapath actions: push_vlan(vid=100,pcp=0),userspace(pid=0,controller(reason=1,dont_send=0,continuation=0,recirc_id=1,rule_cookie=0x5adc15c0,controller_id=0,max_len=96)),pop_vlan,2,3

The rule matched in table 0 executes two actions. push_vlan:0x8100 adds an 802.1Q VLAN tag to the frame header, and set_field:4196->vlan_vid writes 4196 into the VLAN ID field. This is expected: in the faucet configuration port1 acts as an access port, so an untagged frame arriving on it is tagged with the port's native VLAN ID.

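The value 4196 may look odd, since the native VLAN ID of port1 is 100. In OpenFlow 1.3 the vlan_vid field is 13 bits wide, and its top bit (OFPVID_PRESENT, 0x1000) must be set when a VLAN header is present. 4196 is 0x1064 in hexadecimal; the low 12 bits, 0x064, carry the actual VLAN ID, which is 100 and matches our configuration.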
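The arithmetic is easy to check in a few lines of Python. The constant name OFPVID_PRESENT comes from the OpenFlow 1.3 specification; the two helper functions are made up for this example.

```python
OFPVID_PRESENT = 0x1000  # bit 12: a VLAN tag is present (OpenFlow 1.3)
VID_MASK = 0x0FFF        # low 12 bits: the 802.1Q VLAN ID


def encode_vlan_vid(vlan_id):
    """Return the OpenFlow vlan_vid value for a tagged frame in the given VLAN."""
    if not 0 <= vlan_id <= VID_MASK:
        raise ValueError("VLAN ID must fit in 12 bits")
    return OFPVID_PRESENT | vlan_id


def decode_vlan_vid(value):
    """Split an OpenFlow vlan_vid value into (tag_present, vlan_id)."""
    return bool(value & OFPVID_PRESENT), value & VID_MASK


print(encode_vlan_vid(100), hex(encode_vlan_vid(100)))  # 4196 0x1064
print(decode_vlan_vid(4196))                            # (True, 100)
```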

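In table 1 the switch has not yet seen this source MAC, so the matching rule hands the frame to the SDN controller (CONTROLLER:96 sends at most the first 96 bytes) and then continues in table 2. The faucet log shows that the controller has learned that 00:11:11:00:00:00 sits behind port1: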

Nov 01 09:03:31 faucet.valve INFO DPID 1 (0x1) sw1 L2 learned on Port 1 00:11:11:00:00:00 (L2 type 0x0000, L2 dst 00:22:22:00:00:00, L3 src None, L3 dst None) Port 1 VLAN 100 (1 hosts total)

faucet also pushes the corresponding flow rules down to br0, so the next time br0 receives such a frame it knows what to do without asking faucet again:

$ ovs-ofctl dump-flows br0 | grep -i 11:11

cookie=0x5adc15c0, duration=60.806s, table=1, n_packets=0, n_bytes=0, hard_timeout=249, idle_age=60, priority=8191,in_port=1,dl_vlan=100,dl_src=00:11:11:00:00:00 actions=resubmit(,2)

cookie=0x5adc15c0, duration=60.807s, table=2, n_packets=0, n_bytes=0, idle_timeout=399, idle_age=60, priority=8192,dl_vlan=100,dl_dst=00:11:11:00:00:00 actions=strip_vlan,output:1

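In table 2 no destination rule matches yet, so the priority-0 rule simply hands the frame on to table 3. The rule matched in table 3 removes the VLAN header with pop_vlan and then sends the frame to port2 and port3, the other ports in VLAN 100 (port1 is the ingress port, so the frame is not sent back to it).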

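That completes the trace of the frame generated with -generate. It was forwarded to the other ports of the same VLAN, as expected, and handling it led the controller to learn the address and install new flow rules on br0.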

Now generate a frame in the reverse direction. The flow is essentially the same, so we skip the detailed analysis.

$ ovs-appctl ofproto/trace br0 in_port=p2,dl_src=00:22:22:00:00:00,dl_dst=00:11:11:00:00:00 -generate

Flow: in_port=2,vlan_tci=0x0000,dl_src=00:22:22:00:00:00,dl_dst=00:11:11:00:00:00,dl_type=0x0000

bridge("br0")
-------------
 0. in_port=2,vlan_tci=0x0000/0x1fff, priority 4096, cookie 0x5adc15c0
    push_vlan:0x8100
    set_field:4196->vlan_vid
    goto_table:1
 1. dl_vlan=100, priority 4096, cookie 0x5adc15c0
    CONTROLLER:96
    goto_table:2
 2. dl_vlan=100,dl_dst=00:11:11:00:00:00, priority 8192, cookie 0x5adc15c0
    pop_vlan
    output:1

Final flow: unchanged
Megaflow: recirc_id=0,eth,in_port=2,dl_src=00:22:22:00:00:00,dl_dst=00:11:11:00:00:00,dl_type=0x0000
Datapath actions: push_vlan(vid=100,pcp=0),userspace(pid=0,controller(reason=1,dont_send=0,continuation=0,recirc_id=3,rule_cookie=0x5adc15c0,controller_id=0,max_len=96)),pop_vlan,1

br0 now has new flow rules for 00:22:22:00:00:00 as well:

$ ovs-ofctl dump-flows br0 | grep -i 22:22

cookie=0x5adc15c0, duration=42.376s, table=1, n_packets=0, n_bytes=0, hard_timeout=284, idle_age=42, priority=8191,in_port=2,dl_vlan=100,dl_src=00:22:22:00:00:00 actions=resubmit(,2)

cookie=0x5adc15c0, duration=42.376s, table=2, n_packets=0, n_bytes=0, idle_timeout=434, idle_age=42, priority=8192,dl_vlan=100,dl_dst=00:22:22:00:00:00 actions=strip_vlan,output:2

The flow tables of br0 now know how to handle both 00:11:11:00:00:00 and 00:22:22:00:00:00, so let's send a frame from p2 to p1 once more:

$ ovs-appctl ofproto/trace br0 in_port=p2,dl_src=00:22:22:00:00:00,dl_dst=00:11:11:00:00:00 -generate

Flow: in_port=2,vlan_tci=0x0000,dl_src=00:22:22:00:00:00,dl_dst=00:11:11:00:00:00,dl_type=0x0000

bridge("br0")
-------------
 0. in_port=2,vlan_tci=0x0000/0x1fff, priority 4096, cookie 0x5adc15c0
    push_vlan:0x8100
    set_field:4196->vlan_vid
    goto_table:1
 1. in_port=2,dl_vlan=100,dl_src=00:22:22:00:00:00, priority 8191, cookie 0x5adc15c0
    goto_table:2
 2. dl_vlan=100,dl_dst=00:11:11:00:00:00, priority 8192, cookie 0x5adc15c0
    pop_vlan
    output:1

Final flow: unchanged
Megaflow: recirc_id=0,eth,in_port=2,dl_src=00:22:22:00:00:00,dl_dst=00:11:11:00:00:00,dl_type=0x0000
Datapath actions: 1

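The rules shown by the trace make it clear that the switch no longer asks the controller how to handle this frame; table 2 forwards it straight to port1. Roughly speaking, only the first packet is handed to the controller, which decides how to handle it and installs flow rules on the ovs switch. Subsequent similar frames are handled by the switch on its own from the flow table, which avoids frequent round trips to the controller.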
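The three traces can be replayed with a small Python model of this first-packet behavior. It is a rough sketch under stated assumptions: the Switch and Controller classes are hypothetical, flow timeouts are ignored, and the source and destination tables are reduced to a set and a dictionary, so this is not how faucet or ovs are actually implemented.

```python
class Controller:
    """Stands in for faucet: learns hosts from packet-ins and installs flows."""

    def __init__(self):
        self.packet_ins = 0

    def packet_in(self, switch, in_port, vlan, src):
        self.packet_ins += 1
        switch.known_sources.add((in_port, vlan, src))  # source rule (table 1)
        switch.dst_to_port[(vlan, src)] = in_port       # destination rule (table 2)


class Switch:
    def __init__(self, vlan_ports, controller):
        self.vlan_ports = vlan_ports  # VID -> ports that are untagged members
        self.controller = controller
        self.known_sources = set()
        self.dst_to_port = {}

    def trace(self, in_port, src, dst):
        """Return (sent_to_controller, output_ports) for one untagged frame."""
        vlan = next((v for v, ports in self.vlan_ports.items() if in_port in ports), None)
        if vlan is None:
            return False, []  # port is in no VLAN: frame is dropped
        to_controller = (in_port, vlan, src) not in self.known_sources
        out = self.dst_to_port.get((vlan, dst))
        if out is None:
            # Unknown destination: flood within the VLAN, skipping the input port.
            outputs = [p for p in self.vlan_ports[vlan] if p != in_port]
        else:
            outputs = [out] if out != in_port else []
        if to_controller:
            self.controller.packet_in(self, in_port, vlan, src)
        return to_controller, outputs


br0 = Switch({100: [1, 2, 3], 200: [4, 5]}, Controller())
h1, h2 = "00:11:11:00:00:00", "00:22:22:00:00:00"
print(br0.trace(1, h1, h2))  # first frame from h1: controller asked, flooded
print(br0.trace(2, h2, h1))  # first frame from h2: controller asked, sent to port 1
print(br0.trace(2, h2, h1))  # repeat: handled by the switch alone
```

Only the first frame from each host reaches the controller; the repeated frame is forwarded from the installed rules, matching the last trace above.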