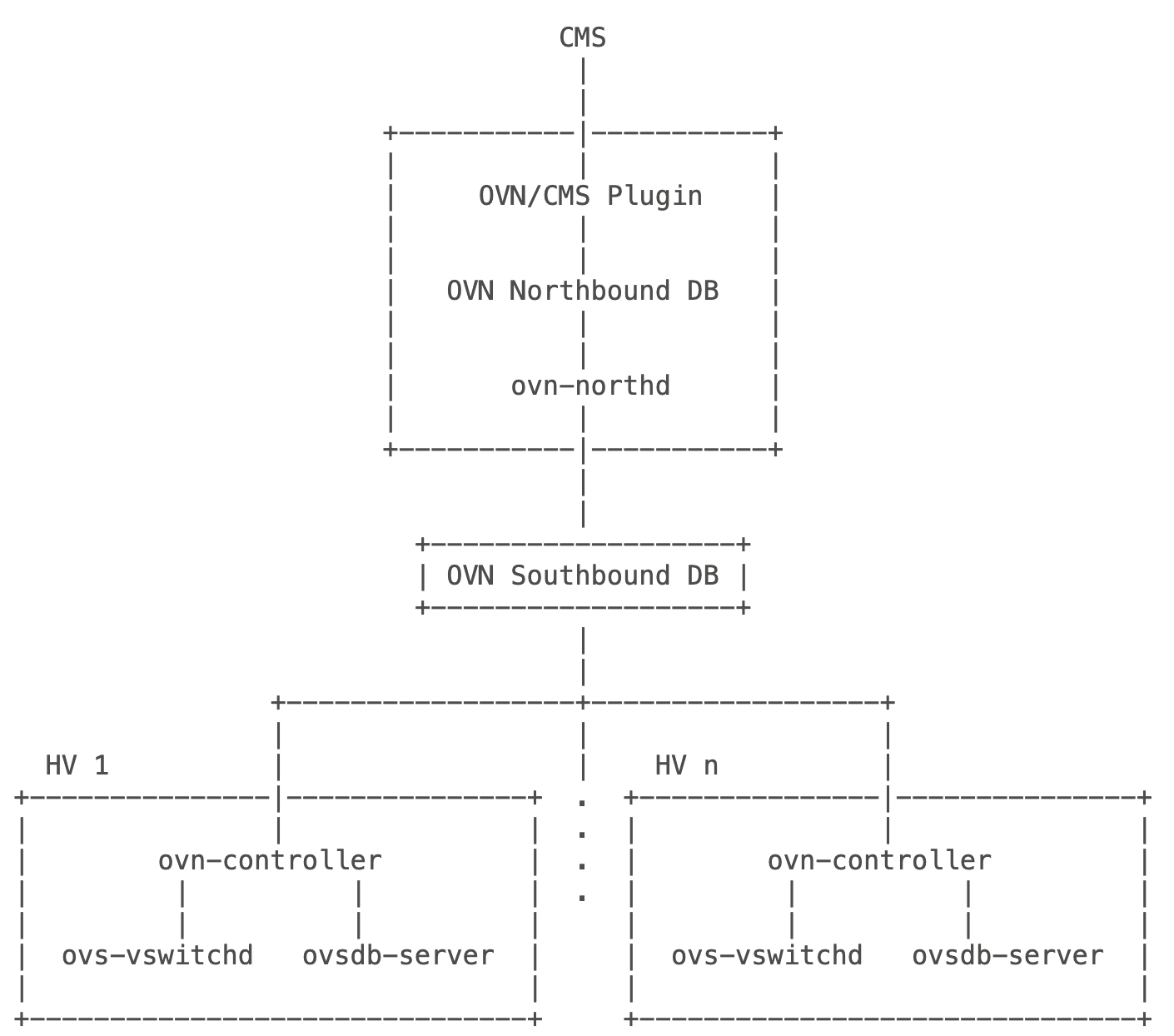

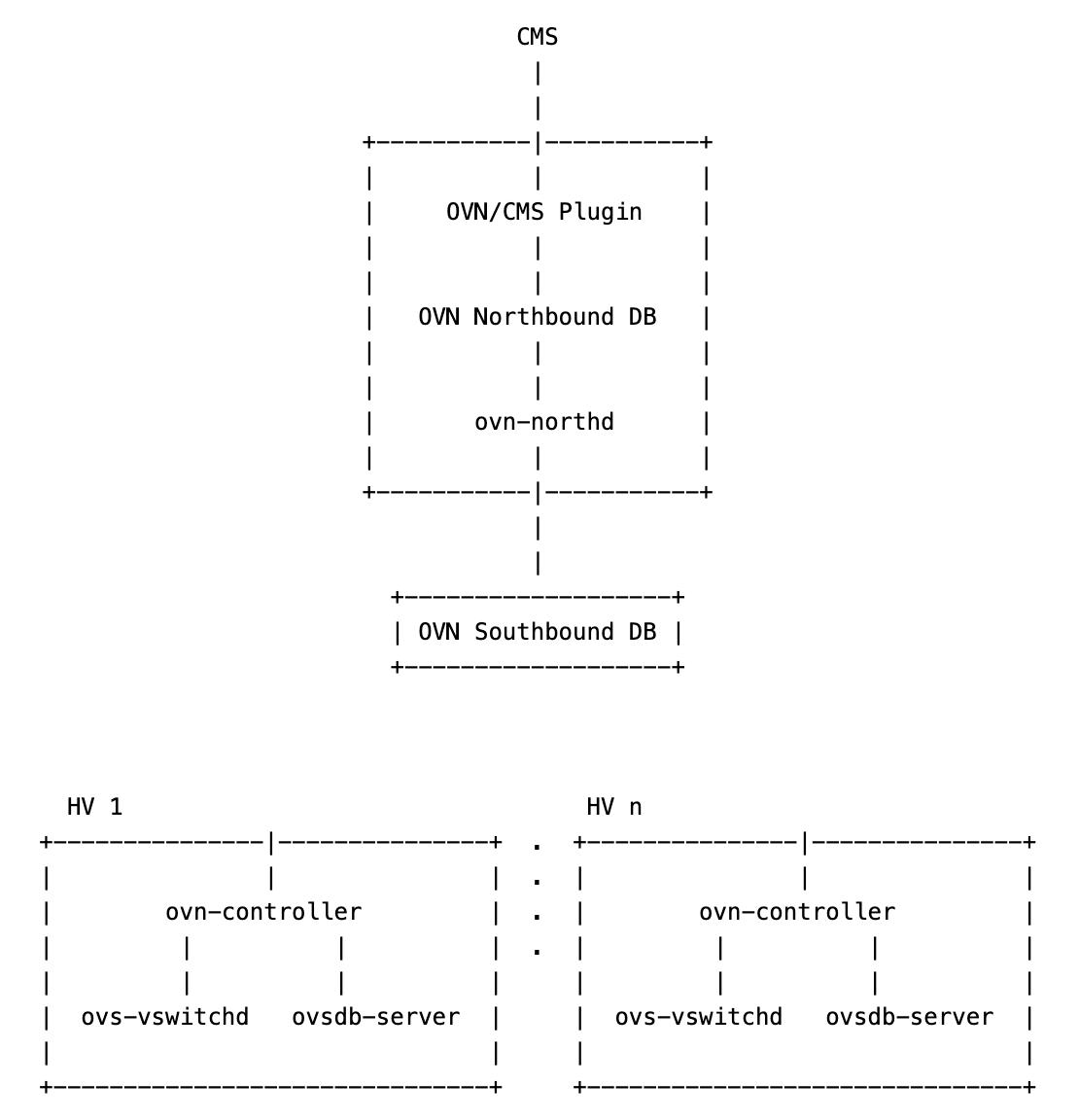

OVN Architecture

The figure above is the official OVN architecture diagram. Each component does roughly the following:

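- CMS: the Cloud Management System, which end users work with, for example OpenStack;
- OVN/CMS plugin: part of the CMS. It converts the logical network configured by the user into OVN's data structures and writes them into the Northbound DB;
- OVN Northbound DB: the OVN northbound database, an ovsdb-server instance;
- ovn-northd: translates the logical network in the OVN Northbound DB into the lower-level configuration kept in the OVN Southbound DB (logical flows and port bindings), and writes it there;
- OVN Southbound DB: the OVN southbound database, also an ovsdb-server instance;
- ovn-controller: runs on every hypervisor node. It converts the configuration in the OVN Southbound DB into OpenFlow flows and installs them into OVS;
- ovs-vswitchd: the core OVS daemon;
- ovsdb-server: stores the OVS configuration, including ports, VLANs, QoS, and so on.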

These components fall into two groups:

- central: the central node, running the OVN/CMS plugin, OVN Northbound DB, ovn-northd, and OVN Southbound DB;
- hypervisor: the worker nodes, running ovn-controller, ovs-vswitchd, and ovsdb-server.

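As processing moves through OVN's components from north to south, the logical network is turned into a physical one. The experiments below will make this gradually clear.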

This article uses two machines, ovs1 and ovs2, to build the lab. Both act as hypervisors, and ovs1 also takes the central role.

| Hostname | OS | IP | Role |
|---|---|---|---|
| ovs1 | Ubuntu 22.04 | 192.168.31.154 | central + hypervisor |
| ovs2 | Ubuntu 22.04 | 192.168.31.47 | hypervisor |

Environment Setup

Installing OVS

OVS must be installed on both ovs1 and ovs2. Installing it with apt is enough:

$ apt install -y openvswitch-switch

After installation, ovs-vswitchd and ovsdb-server run as services. Check that the installation worked:

$ ps -ef | grep -i ovs

root 3409 1 0 15:27 ? 00:00:00 ovsdb-server /etc/openvswitch/conf.db -vconsole:emer -vsyslog:err -vfile:info --remote=punix:/var/run/openvswitch/db.sock --private-key=db:Open_vSwitch,SSL,private_key --certificate=db:Open_vSwitch,SSL,certificate --bootstrap-ca-cert=db:Open_vSwitch,SSL,ca_cert --no-chdir --log-file=/var/log/openvswitch/ovsdb-server.log --pidfile=/var/run/openvswitch/ovsdb-server.pid --detach

root 3472 1 0 15:27 ? 00:00:00 ovs-vswitchd unix:/var/run/openvswitch/db.sock -vconsole:emer -vsyslog:err -vfile:info --mlockall --no-chdir --log-file=/var/log/openvswitch/ovs-vswitchd.log --pidfile=/var/run/openvswitch/ovs-vswitchd.pid --detach

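ovs-vswitchd talks to OpenFlow controllers and exchanges OpenFlow flow rules with them. ovsdb-server is a file-based database that stores the OVS configuration. Its schema is defined in /usr/share/openvswitch/vswitch.ovsschema, and the database file is /etc/openvswitch/conf.db.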

You can query this database with the ovsdb-client tool. It shows the databases and tables that OVS defines:

$ ovsdb-client list-dbs unix:/var/run/openvswitch/db.sock

Open_vSwitch

_Server

$ ovsdb-client list-tables unix:/var/run/openvswitch/db.sock

Table

-------------------------

Controller

Bridge

Queue

IPFIX

NetFlow

Open_vSwitch

CT_Zone

QoS

Datapath

SSL

Port

sFlow

Flow_Sample_Collector_Set

CT_Timeout_Policy

Mirror

Flow_Table

Interface

AutoAttach

Manager

Installing OVN

With OVS installed, the next step is the OVN components. As described above, ovs1 serves as both central and hypervisor, and ovs2 serves as hypervisor only.

First, install the hypervisor components on ovs1. OVS is already on both nodes, so the only OVN component needed here is ovn-controller:

$ apt install -y ovn-common ovn-host

ovn-controller has started normally:

$ systemctl status ovn-controller.service

● ovn-controller.service - Open Virtual Network host control daemon

Loaded: loaded (/lib/systemd/system/ovn-controller.service; static)

Active: active (running) since Thu 2023-11-09 16:26:18 CST; 31s ago

Process: 4837 ExecStart=/usr/share/ovn/scripts/ovn-ctl start_controller --ovn-manage-ovsdb=no --no-monitor $OVN_CTL_OPTS (code=exited, status=0/SUCCESS)

Main PID: 4865 (ovn-controller)

Tasks: 4 (limit: 9431)

Memory: 1.8M

CPU: 70ms

CGroup: /system.slice/ovn-controller.service

└─4865 ovn-controller unix:/var/run/openvswitch/db.sock -vconsole:emer -vsyslog:err -vfile:info --no-chdir --log-file=/var/log/ovn/ovn-controller.log --pidfile=/var/run/ovn/ovn-controller.pid

Next, install the central components on ovs1:

$ apt install -y ovn-central

After installation, both the OVN Northbound and Southbound databases are running. Like the OVS database, they are ovsdb-server instances. Only their schemas differ.

$ ps -ef | grep -i ovsdb-server

...

root 5248 1 0 16:27 ? 00:00:00 ovsdb-server -vconsole:off -vfile:info --log-file=/var/log/ovn/ovsdb-server-nb.log --remote=punix:/var/run/ovn/ovnnb_db.sock --pidfile=/var/run/ovn/ovnnb_db.pid --unixctl=/var/run/ovn/ovnnb_db.ctl --remote=db:OVN_Northbound,NB_Global,connections --private-key=db:OVN_Northbound,SSL,private_key --certificate=db:OVN_Northbound,SSL,certificate --ca-cert=db:OVN_Northbound,SSL,ca_cert --ssl-protocols=db:OVN_Northbound,SSL,ssl_protocols --ssl-ciphers=db:OVN_Northbound,SSL,ssl_ciphers /var/lib/ovn/ovnnb_db.db

root 5252 1 0 16:27 ? 00:00:00 ovsdb-server -vconsole:off -vfile:info --log-file=/var/log/ovn/ovsdb-server-sb.log --remote=punix:/var/run/ovn/ovnsb_db.sock --pidfile=/var/run/ovn/ovnsb_db.pid --unixctl=/var/run/ovn/ovnsb_db.ctl --remote=db:OVN_Southbound,SB_Global,connections --private-key=db:OVN_Southbound,SSL,private_key --certificate=db:OVN_Southbound,SSL,certificate --ca-cert=db:OVN_Southbound,SSL,ca_cert --ssl-protocols=db:OVN_Southbound,SSL,ssl_protocols --ssl-ciphers=db:OVN_Southbound,SSL,ssl_ciphers /var/lib/ovn/ovnsb_db.db

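For the full table definitions, see the respective schema specifications (ovn-nb(5) for the Northbound DB and ovn-sb(5) for the Southbound DB). Here we list only the table names, which is enough to show how the two databases differ logically: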

$ ovsdb-client list-tables unix:/var/run/ovn/ovnnb_db.sock

Table

---------------------------

Logical_Router_Static_Route

Meter_Band

NB_Global

ACL

Copp

Logical_Switch

Meter

Load_Balancer_Group

HA_Chassis_Group

Address_Set

BFD

NAT

Load_Balancer

QoS

DNS

Forwarding_Group

Connection

SSL

Load_Balancer_Health_Check

Gateway_Chassis

HA_Chassis

Logical_Router_Port

Logical_Router_Policy

Logical_Router

Port_Group

Logical_Switch_Port

DHCP_Options

$ ovsdb-client list-tables unix:/var/run/ovn/ovnsb_db.sock

Table

----------------

RBAC_Role

FDB

RBAC_Permission

Meter_Band

SB_Global

Port_Binding

Meter

HA_Chassis_Group

Address_Set

BFD

Load_Balancer

DNS

IP_Multicast

Connection

Logical_DP_Group

Encap

SSL

Gateway_Chassis

DHCPv6_Options

Chassis_Private

HA_Chassis

IGMP_Group

Service_Monitor

Chassis

Port_Group

MAC_Binding

Multicast_Group

Controller_Event

DHCP_Options

Datapath_Binding

Logical_Flow

ovs2 also needs the worker-node components:

$ apt install ovn-host ovn-common

Connecting ovn-controller to the Southbound DB

ovs1 now runs both the central and hypervisor components, and ovs2 runs the hypervisor components. However, the components are currently connected as follows:

ovn-controller is not yet connected to the OVN Southbound DB. So the first step is to open Southbound DB port 6642, which every ovn-controller will connect to:

$ ovn-sbctl set-connection ptcp:6642:192.168.31.154

$ netstat -lntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

...

tcp 0 0 192.168.31.154:6642 0.0.0.0:* LISTEN 5252/ovsdb-server

...

Point ovn-controller on ovs1 at the OVN Southbound DB at 192.168.31.154:6642:

$ ovs-vsctl set Open_vSwitch . external-ids:ovn-remote="tcp:192.168.31.154:6642" external-ids:ovn-encap-ip="192.168.31.154" external-ids:ovn-encap-type=geneve external-ids:system-id=ovs1

Here is what this command does:

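- ovs-vsctl operates directly on OVS's ovsdb-server. Here, set Open_vSwitch . updates the single record of the Open_vSwitch table in the Open_vSwitch database;
- external-ids: the official description reads "Key-value pairs for use by external frameworks that integrate with Open vSwitch, rather than by Open vSwitch itself". In other words, this column exists mainly for integration with third-party systems, not for OVS's own use. Given the OVN architecture diagram, it is easy to infer that ovn-controller is the main consumer of these keys;
- ovn-remote: the address of the OVN Southbound DB. ovn-controller uses it to connect to that database;
- ovn-encap-ip: the IP that other chassis use when they tunnel to this chassis. ovn-encap-type sets the tunnel protocol. Once connected to the Southbound DB, ovn-controller creates br-int by default and initializes it according to this configuration;
- system-id: a unique identifier for this OVS host. ovn-controller registers the chassis under this name.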
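Every hypervisor gets the same four keys, and only the encap IP and system-id change, so the command can be wrapped in a small function. Below is a minimal sketch that assumes a POSIX shell. ovn_join_chassis and the DRY_RUN switch are hypothetical conveniences for this example, not part of OVS or OVN. The Southbound port 6642 is hard-coded to match this lab. With DRY_RUN=1 the function only prints the command it would run.

```shell
# run: execute a command, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# ovn_join_chassis <sb_ip> <encap_ip> <system_id>  (hypothetical helper)
# Sets the external-ids that ovn-controller reads to find the Southbound DB
# (assumed to listen on TCP 6642) and to set up Geneve tunnels.
ovn_join_chassis() {
    sb_ip=$1 encap_ip=$2 system_id=$3
    run ovs-vsctl set Open_vSwitch . \
        "external-ids:ovn-remote=tcp:${sb_ip}:6642" \
        "external-ids:ovn-encap-ip=${encap_ip}" \
        external-ids:ovn-encap-type=geneve \
        "external-ids:system-id=${system_id}"
}
```

On ovs2 the call would be ovn_join_chassis 192.168.31.154 192.168.31.47 ovs2, which is equivalent to the ovs2 command shown later in this section.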

On ovs1, the ovn-controller log (default path /var/log/ovn/ovn-controller.log) shows that it has connected to the OVN Southbound DB and done some initial work, such as creating the br-int bridge. This is what we expected.

$ tail -200f /var/log/ovn/ovn-controller.log

2023-11-09T08:40:09.282Z|00009|reconnect|INFO|tcp:192.168.31.154:6642: connecting...

2023-11-09T08:40:09.282Z|00010|reconnect|INFO|tcp:192.168.31.154:6642: connected

2023-11-09T08:40:09.288Z|00011|features|INFO|unix:/var/run/openvswitch/br-int.mgmt: connecting to switch

2023-11-09T08:40:09.288Z|00012|rconn|INFO|unix:/var/run/openvswitch/br-int.mgmt: connecting...

2023-11-09T08:40:09.288Z|00013|features|INFO|OVS Feature: ct_zero_snat, state: supported

2023-11-09T08:40:09.288Z|00014|main|INFO|OVS feature set changed, force recompute.

2023-11-09T08:40:09.288Z|00015|ofctrl|INFO|unix:/var/run/openvswitch/br-int.mgmt: connecting to switch

2023-11-09T08:40:09.288Z|00016|rconn|INFO|unix:/var/run/openvswitch/br-int.mgmt: connecting...

2023-11-09T08:40:09.289Z|00017|rconn|INFO|unix:/var/run/openvswitch/br-int.mgmt: connected

2023-11-09T08:40:09.289Z|00018|main|INFO|OVS OpenFlow connection reconnected,force recompute.

2023-11-09T08:40:09.289Z|00019|rconn|INFO|unix:/var/run/openvswitch/br-int.mgmt: connected

2023-11-09T08:40:09.290Z|00020|main|INFO|OVS feature set changed, force recompute.

2023-11-09T08:40:09.290Z|00001|pinctrl(ovn_pinctrl0)|INFO|unix:/var/run/openvswitch/br-int.mgmt: connecting to switch

2023-11-09T08:40:09.290Z|00002|rconn(ovn_pinctrl0)|INFO|unix:/var/run/openvswitch/br-int.mgmt: connecting...

2023-11-09T08:40:09.291Z|00003|rconn(ovn_pinctrl0)|INFO|unix:/var/run/openvswitch/br-int.mgmt: connected

Do the same on ovs2 so that its ovn-controller also connects to the OVN Southbound DB on ovs1:

$ ovs-vsctl set Open_vSwitch . external-ids:ovn-remote="tcp:192.168.31.154:6642" external-ids:ovn-encap-ip="192.168.31.47" external-ids:ovn-encap-type=geneve external-ids:system-id=ovs2

On ovs1, both OVN chassis now show up as configured:

$ ovn-sbctl show

Chassis ovs2

hostname: ovs2

Encap geneve

ip: "192.168.31.47"

options: {csum="true"}

Chassis ovs1

hostname: ovs1

Encap geneve

ip: "192.168.31.154"

options: {csum="true"}
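To check registration from a script instead of by eye, the show output can be reduced to one line per chassis. This is a rough sketch that assumes the layout shown above: the chassis name follows Chassis, then come the Encap and ip: lines. chassis_summary is a hypothetical name. Because it parses human-oriented text, it may need adjusting for other OVN versions.

```shell
# chassis_summary (hypothetical): read `ovn-sbctl show` on stdin and print
# "<chassis> <encap-type> <encap-ip>" for every chassis found.
chassis_summary() {
    awk '
        $1 == "Chassis" { name = $2; gsub(/"/, "", name) }  # strip optional quotes
        $1 == "Encap"   { type = $2 }
        $1 == "ip:"     { ip = $2; gsub(/"/, "", ip); print name, type, ip }
    '
}
```

For the output above, ovn-sbctl show | chassis_summary prints ovs2 geneve 192.168.31.47 followed by ovs1 geneve 192.168.31.154.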

On both ovs1 and ovs2 you can also see the default br-int bridge and a port of type: geneve:

# ovs1

$ ovs-vsctl show

780cc937-43c3-403b-969c-80d3cf43c97f

Bridge br-int

fail_mode: secure

datapath_type: system

Port br-int

Interface br-int

type: internal

Port ovn-ovs2-0

Interface ovn-ovs2-0

type: geneve

options: {csum="true", key=flow, remote_ip="192.168.31.47"}

ovs_version: "2.17.7"

# ovs2

$ ovs-vsctl show

6b213440-7418-4627-96c5-cb7210882373

Bridge br-int

fail_mode: secure

datapath_type: system

Port br-int

Interface br-int

type: internal

Port ovn-ovs1-0

Interface ovn-ovs1-0

type: geneve

options: {csum="true", key=flow, remote_ip="192.168.31.154"}

ovs_version: "2.17.7"

Because the tunnels use Geneve, each host gains a genev_sys_6081 device. It listens on UDP port 6081 in the kernel, and every tunneled packet passes through this port:

$ ip a

...

6: br-int: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether e2:22:de:8e:a0:d3 brd ff:ff:ff:ff:ff:ff

7: genev_sys_6081: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65000 qdisc noqueue master ovs-system state UNKNOWN group default qlen 1000

link/ether 3e:f5:7a:03:5b:fa brd ff:ff:ff:ff:ff:ff

inet6 fe80::f022:ffff:fed1:9233/64 scope link

valid_lft forever preferred_lft forever

$ ip -d link show dev genev_sys_6081

7: genev_sys_6081: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65000 qdisc noqueue master ovs-system state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 3e:f5:7a:03:5b:fa brd ff:ff:ff:ff:ff:ff promiscuity 1 minmtu 68 maxmtu 65465

geneve external id 0 ttl auto dstport 6081 udp6zerocsumrx

openvswitch_slave addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

$ netstat -lnup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

...

udp 0 0 0.0.0.0:6081 0.0.0.0:* -

...

OVN in Practice

Logical Switch

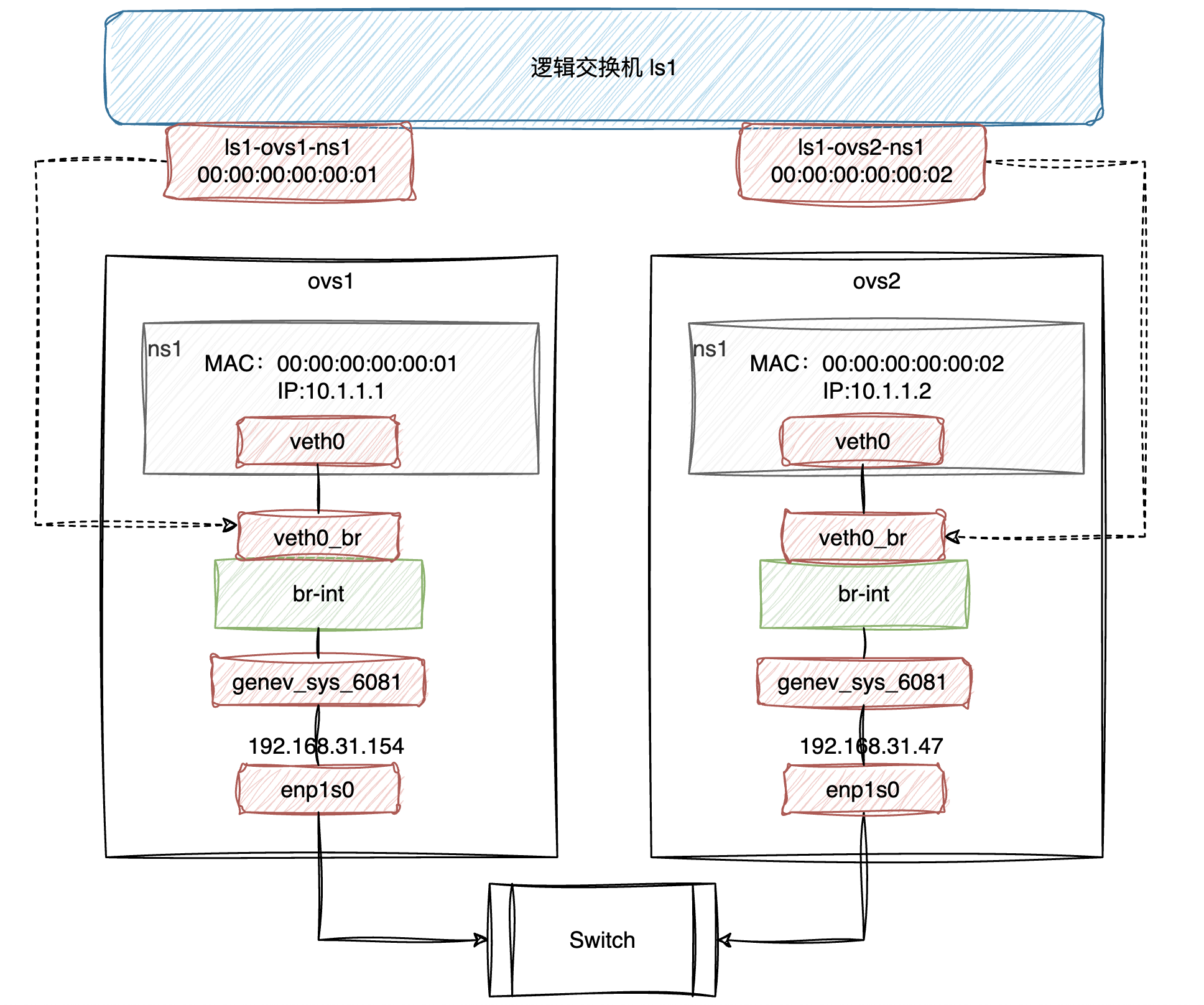

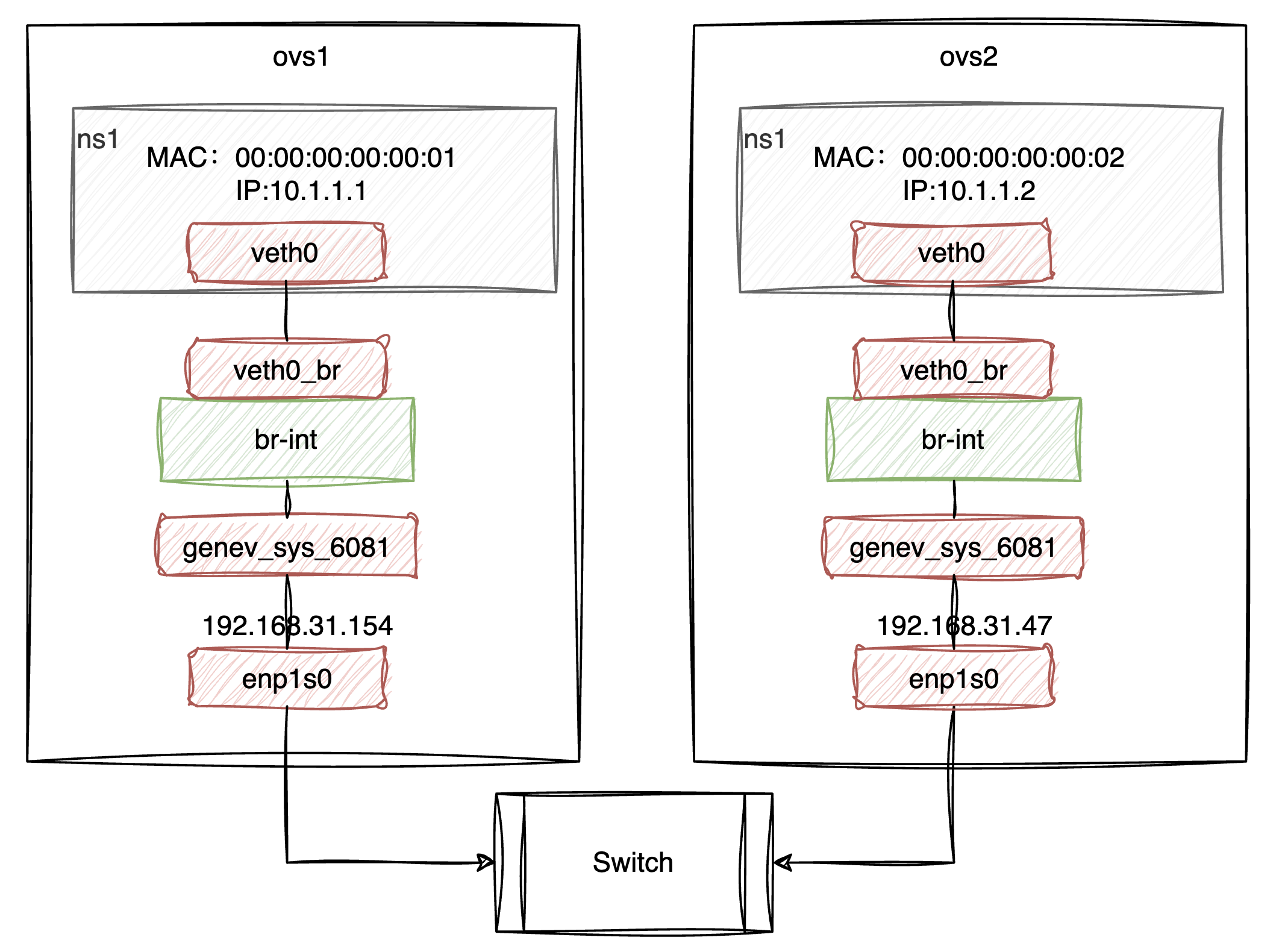

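We start by building a Logical Switch with OVN. Its final logical structure is shown in the figure. Some parts may be puzzling at this point, but that is fine: we will work through the details as we build it.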

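Apart from the Logical Switch at the top of the diagram, the rest should look familiar (if not, see the prerequisite reading recommended at the top of this article), so we build the lower half first. On ovs1, create namespace ns1 and a veth pair, move one end into ns1, and attach the other end to br-int: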

$ ip netns add ns1

$ ip link add veth0 type veth peer name veth0_br

$ ip link set veth0 netns ns1

$ ip netns exec ns1 ip link set veth0 address 00:00:00:00:00:01

$ ip netns exec ns1 ip addr add 10.1.1.1/24 dev veth0

$ ip netns exec ns1 ip link set veth0 up

$ ip link set veth0_br up

$ ovs-vsctl add-port br-int veth0_br

$ ovs-vsctl show

780cc937-43c3-403b-969c-80d3cf43c97f

Bridge br-int

fail_mode: secure

datapath_type: system

Port veth0_br

Interface veth0_br

Port br-int

Interface br-int

type: internal

Port ovn-ovs2-0

Interface ovn-ovs2-0

type: geneve

options: {csum="true", key=flow, remote_ip="192.168.31.47"}

ovs_version: "2.17.7"

Do the same on ovs2:

$ ip netns add ns1

$ ip link add veth0 type veth peer name veth0_br

$ ip link set veth0 netns ns1

$ ip netns exec ns1 ip link set veth0 address 00:00:00:00:00:02

$ ip netns exec ns1 ip addr add 10.1.1.2/24 dev veth0

$ ip netns exec ns1 ip link set veth0 up

$ ip link set veth0_br up

$ ovs-vsctl add-port br-int veth0_br

$ ovs-vsctl show

6b213440-7418-4627-96c5-cb7210882373

Bridge br-int

fail_mode: secure

datapath_type: system

Port br-int

Interface br-int

type: internal

Port ovn-ovs1-0

Interface ovn-ovs1-0

type: geneve

options: {csum="true", key=flow, remote_ip="192.168.31.154"}

Port veth0_br

Interface veth0_br

ovs_version: "2.17.7"
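The per-host steps above differ only in the namespace, device name, MAC, and IP, and the Logical Router section repeats them five more times. Below is a minimal sketch of a helper that wraps them. It assumes a POSIX shell, root privileges, and this article's <dev>_br naming for the host-side peer. attach_ns and DRY_RUN are hypothetical names. The && chain stops at the first command that fails.

```shell
# run: execute a command, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# attach_ns <netns> <dev> <mac> <ip/prefix>  (hypothetical helper)
# Creates the namespace and veth pair, configures the namespace end,
# and plugs the host end (<dev>_br) into br-int.
attach_ns() {
    ns=$1 dev=$2 mac=$3 cidr=$4
    br_dev="${dev}_br"
    run ip netns add "$ns" &&
    run ip link add "$dev" type veth peer name "$br_dev" &&
    run ip link set "$dev" netns "$ns" &&
    run ip netns exec "$ns" ip link set "$dev" address "$mac" &&
    run ip netns exec "$ns" ip addr add "$cidr" dev "$dev" &&
    run ip netns exec "$ns" ip link set "$dev" up &&
    run ip link set "$br_dev" up &&
    run ovs-vsctl add-port br-int "$br_dev"
}
```

For example, attach_ns ns1 veth0 00:00:00:00:00:02 10.1.1.2/24 reproduces the ovs2 commands above.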

The environment built so far is shown in the following figure:

At this point, pinging ovs2's veth0 from ovs1's veth0 fails. Let's look at the br-int flow tables on both hosts:

# ovs1

$ ovs-ofctl dump-flows br-int

cookie=0x0, duration=1319.155s, table=0, n_packets=0, n_bytes=0, priority=100,in_port="ovn-ovs2-0" actions=move:NXM_NX_TUN_ID[0..23]->OXM_OF_METADATA[0..23],move:NXM_NX_TUN_METADATA0[16..30]->NXM_NX_REG14[0..14],move:NXM_NX_TUN_METADATA0[0..15]->NXM_NX_REG15[0..15],resubmit(,38)

cookie=0x0, duration=1319.179s, table=37, n_packets=0, n_bytes=0, priority=150,reg10=0x2/0x2 actions=resubmit(,38)

cookie=0x0, duration=1319.179s, table=37, n_packets=0, n_bytes=0, priority=150,reg10=0x10/0x10 actions=resubmit(,38)

cookie=0x0, duration=1319.179s, table=37, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,38)

cookie=0x0, duration=1319.179s, table=39, n_packets=0, n_bytes=0, priority=0 actions=load:0->NXM_NX_REG0[],load:0->NXM_NX_REG1[],load:0->NXM_NX_REG2[],load:0->NXM_NX_REG3[],load:0->NXM_NX_REG4[],load:0->NXM_NX_REG5[],load:0->NXM_NX_REG6[],load:0->NXM_NX_REG7[],load:0->NXM_NX_REG8[],load:0->NXM_NX_REG9[],resubmit(,40)

cookie=0x0, duration=1319.179s, table=64, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,65)

# ovs2

$ ovs-ofctl dump-flows br-int

cookie=0x0, duration=905.110s, table=0, n_packets=0, n_bytes=0, priority=100,in_port="ovn-ovs1-0" actions=move:NXM_NX_TUN_ID[0..23]->OXM_OF_METADATA[0..23],move:NXM_NX_TUN_METADATA0[16..30]->NXM_NX_REG14[0..14],move:NXM_NX_TUN_METADATA0[0..15]->NXM_NX_REG15[0..15],resubmit(,38)

cookie=0x0, duration=905.110s, table=37, n_packets=0, n_bytes=0, priority=150,reg10=0x2/0x2 actions=resubmit(,38)

cookie=0x0, duration=905.110s, table=37, n_packets=0, n_bytes=0, priority=150,reg10=0x10/0x10 actions=resubmit(,38)

cookie=0x0, duration=905.110s, table=37, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,38)

cookie=0x0, duration=905.110s, table=39, n_packets=0, n_bytes=0, priority=0 actions=load:0->NXM_NX_REG0[],load:0->NXM_NX_REG1[],load:0->NXM_NX_REG2[],load:0->NXM_NX_REG3[],load:0->NXM_NX_REG4[],load:0->NXM_NX_REG5[],load:0->NXM_NX_REG6[],load:0->NXM_NX_REG7[],load:0->NXM_NX_REG8[],load:0->NXM_NX_REG9[],resubmit(,40)

cookie=0x0, duration=905.110s, table=64, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,65)

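The br-int flows show why the ping fails: no flow entry handles 00:00:00:00:00:01 or 00:00:00:00:00:02, so the failure is expected. There are two ways forward. One is to log in to ovs1 and ovs2 and add suitable flows to br-int directly with ovs-ofctl. The other is to use OVN: create a Logical Switch with ovn-nbctl and let OVN translate our logical network into concrete flows and push them to br-int on ovs1 and ovs2. Since this article is about OVN, we take the second approach.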

First, add Logical Switch ls1 on ovs1:

# Create Logical Switch ls1

$ ovn-nbctl ls-add ls1

# Add port ls1-ovs1-ns1 to the Logical Switch; set its MAC to 00:00:00:00:00:01 to match veth0 on ovs1

$ ovn-nbctl lsp-add ls1 ls1-ovs1-ns1

$ ovn-nbctl lsp-set-addresses ls1-ovs1-ns1 00:00:00:00:00:01

$ ovn-nbctl lsp-set-port-security ls1-ovs1-ns1 00:00:00:00:00:01

# Add port ls1-ovs2-ns1 to the Logical Switch; set its MAC to 00:00:00:00:00:02 to match veth0 on ovs2

$ ovn-nbctl lsp-add ls1 ls1-ovs2-ns1

$ ovn-nbctl lsp-set-addresses ls1-ovs2-ns1 00:00:00:00:00:02

$ ovn-nbctl lsp-set-port-security ls1-ovs2-ns1 00:00:00:00:00:02
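Each logical port above takes three ovn-nbctl calls, and all three repeat the MAC. This sketch bundles them and assumes a POSIX shell. ovn_add_lsp and DRY_RUN are hypothetical names. The sketch makes three choices of its own:
- It adds --may-exist to lsp-add so that re-running the script does not fail on an existing port.
- It accepts an optional IP and appends it to the port's addresses, as the Logical Router section does later.
- It keeps port security MAC-only, as this article does.

```shell
# run: execute a command, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# ovn_add_lsp <switch> <port> <mac> [ip]  (hypothetical helper)
ovn_add_lsp() {
    lswitch=$1 port=$2 mac=$3 ip=${4:-}
    if [ -n "$ip" ]; then
        addrs="$mac $ip"        # e.g. "00:00:00:00:01:01 10.0.1.1"
    else
        addrs=$mac
    fi
    run ovn-nbctl --may-exist lsp-add "$lswitch" "$port" &&
    run ovn-nbctl lsp-set-addresses "$port" "$addrs" &&
    run ovn-nbctl lsp-set-port-security "$port" "$mac"
}
```

The two ports above then become ovn_add_lsp ls1 ls1-ovs1-ns1 00:00:00:00:00:01 and ovn_add_lsp ls1 ls1-ovs2-ns1 00:00:00:00:00:02.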

Then log in to ovs1 and ovs2 and bind each logical port to its physical port:

# ovs1

$ ovs-vsctl set interface veth0_br external-ids:iface-id=ls1-ovs1-ns1

$ tail -200f /var/log/ovn/ovn-controller.log

2023-11-09T09:14:09.069Z|00021|binding|INFO|Claiming lport ls1-ovs1-ns1 for this chassis.

2023-11-09T09:14:09.069Z|00022|binding|INFO|ls1-ovs1-ns1: Claiming 00:00:00:00:00:01

2023-11-09T09:14:09.128Z|00023|binding|INFO|Setting lport ls1-ovs1-ns1 ovn-installed in OVS

2023-11-09T09:14:09.128Z|00024|binding|INFO|Setting lport ls1-ovs1-ns1 up in Southbound

# ovs2

$ ovs-vsctl set interface veth0_br external-ids:iface-id=ls1-ovs2-ns1

$ tail -200f /var/log/ovn/ovn-controller.log

2023-11-09T09:15:36.638Z|00021|binding|INFO|Claiming lport ls1-ovs2-ns1 for this chassis.

2023-11-09T09:15:36.638Z|00022|binding|INFO|ls1-ovs2-ns1: Claiming 00:00:00:00:00:02

2023-11-09T09:15:36.696Z|00023|binding|INFO|Setting lport ls1-ovs2-ns1 ovn-installed in OVS

2023-11-09T09:15:36.696Z|00024|binding|INFO|Setting lport ls1-ovs2-ns1 up in Southbound

Here is what these steps did:

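- Step 1: with ovn-nbctl we created Logical Switch ls1 and the logical ports ls1-ovs1-ns1 and ls1-ovs2-ns1. From a purely logical-network point of view, ls1-ovs1-ns1 and ls1-ovs2-ns1 can naturally reach each other because they sit on the same switch. This is exactly what we want: veth0 on ovs1 and veth0 on ovs2 should be able to reach each other.
- Step 2: we declared the binding between each logical port and the veth0_br "physical" port plugged into br-int. veth0_br is not a physical NIC. We call it physical because, unlike the logical ports created in step 1, it really exists in the kernel.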

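After these two steps, OVN knows what we want: connect the two logical ports ls1-ovs1-ns1 and ls1-ovs2-ns1 on ls1. It also knows which chassis hosts the physical port bound to each logical port. That is enough information to generate the flows and push them to the right br-int.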
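Before looking at the flows, you can confirm that both bindings took effect by asking the Northbound DB whether each logical port is up. ovn-controller reports the port as up in the Southbound DB (the "Setting lport ... up in Southbound" log line), and ovn-northd reflects that state in the Northbound port's up column, which ovn-nbctl lsp-get-up prints as up or down. This polling sketch assumes a POSIX shell. wait_lsp_up and the NBCTL override are hypothetical. NBCTL is left unquoted on purpose so that it can carry extra options such as a --db argument.

```shell
# Command used to query the Northbound DB; override to add options (assumption).
NBCTL=${NBCTL:-ovn-nbctl}

# wait_lsp_up <port> [tries]  (hypothetical helper)
# Polls once per second until the logical port reports "up".
wait_lsp_up() {
    port=$1 tries=${2:-10}
    i=0
    while [ "$i" -lt "$tries" ]; do
        if [ "$($NBCTL lsp-get-up "$port" 2>/dev/null)" = up ]; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    echo "logical port $port still down after $tries tries" >&2
    return 1
}
```

A typical use is wait_lsp_up ls1-ovs1-ns1 && wait_lsp_up ls1-ovs2-ns1 && echo "both ports bound".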

The flows on ovs1 now include many new entries, some of which handle 00:00:00:00:00:01 (many unrelated entries are omitted here):

$ ovs-ofctl dump-flows br-int

cookie=0xfb09aa0d, duration=124.890s, table=8, n_packets=1, n_bytes=70, priority=50,reg14=0x1,metadata=0x1,dl_src=00:00:00:00:00:01 actions=resubmit(,9)

cookie=0x3bf4a8e8, duration=124.889s, table=10, n_packets=0, n_bytes=0, priority=90,arp,reg14=0x1,metadata=0x1,dl_src=00:00:00:00:00:01,arp_sha=00:00:00:00:00:01 actions=resubmit(,11)

cookie=0xad934169, duration=124.889s, table=10, n_packets=0, n_bytes=0, priority=90,icmp6,reg14=0x1,metadata=0x1,dl_src=00:00:00:00:00:01,nw_ttl=255,icmp_type=136,icmp_code=0,nd_tll=00:00:00:00:00:01 actions=resubmit(,11)

cookie=0xad934169, duration=124.889s, table=10, n_packets=0, n_bytes=0, priority=90,icmp6,reg14=0x1,metadata=0x1,dl_src=00:00:00:00:00:01,nw_ttl=255,icmp_type=136,icmp_code=0,nd_tll=00:00:00:00:00:00 actions=resubmit(,11)

cookie=0xad934169, duration=124.889s, table=10, n_packets=0, n_bytes=0, priority=90,icmp6,reg14=0x1,metadata=0x1,dl_src=00:00:00:00:00:01,nw_ttl=255,icmp_type=135,icmp_code=0,nd_sll=00:00:00:00:00:00 actions=resubmit(,11)

cookie=0xad934169, duration=124.889s, table=10, n_packets=0, n_bytes=0, priority=90,icmp6,reg14=0x1,metadata=0x1,dl_src=00:00:00:00:00:01,nw_ttl=255,icmp_type=135,icmp_code=0,nd_sll=00:00:00:00:00:01 actions=resubmit(,11)

cookie=0x5fd1762a, duration=124.889s, table=32, n_packets=0, n_bytes=0, priority=50,metadata=0x1,dl_dst=00:00:00:00:00:01 actions=load:0x1->NXM_NX_REG15[],resubmit(,37)

cookie=0xd305c89f, duration=124.889s, table=49, n_packets=0, n_bytes=0, priority=50,reg15=0x1,metadata=0x1,dl_dst=00:00:00:00:00:01 actions=resubmit(,64)

cookie=0x9744e616, duration=124.890s, table=65, n_packets=0, n_bytes=0, priority=100,reg15=0x1,metadata=0x1 actions=output:"veth0_br"
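You can also pull the relevant entries out of the full dump-flows output mechanically. This small sketch assumes the dump-flows text format shown above. flows_for_mac is a hypothetical name. For 00:00:00:00:00:01 it surfaces the tables that touch this MAC: 8, 10, 32, and 49. Judging by their matches (dl_src checks, ARP/ND checks, a dl_dst lookup that sets reg15, and an egress dl_dst check), these appear to be the port-security and L2-lookup stages of the ls1 pipeline.

```shell
# flows_for_mac <mac>  (hypothetical): read `ovs-ofctl dump-flows` on stdin and
# print "table=<n> priority=<p> actions=<...>" for each flow containing <mac>.
flows_for_mac() {
    awk -v mac="$1" '
        index($0, mac) {
            table = prio = acts = ""
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^table=/)    { t = $i; sub(/,$/, "", t); table = t }
                if ($i ~ /^priority=/) { p = $i; sub(/,.*/, "", p); prio = p }
                if ($i ~ /^actions=/)  acts = $i
            }
            print table, prio, acts
        }
    '
}
```

Usage: ovs-ofctl dump-flows br-int | flows_for_mac 00:00:00:00:00:01.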

Ping again, and the two namespaces can now reach each other:

$ ip netns exec ns1 ping -c1 10.1.1.2

PING 10.1.1.2 (10.1.1.2) 56(84) bytes of data.

64 bytes from 10.1.1.2: icmp_seq=1 ttl=64 time=2.34 ms

--- 10.1.1.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 2.340/2.340/2.340/0.000 ms

Let's trace how the packet travels. Tracing works at two levels, logical and physical. Starting with the logical level, ovn-trace shows:

$ ovn-trace --detailed ls1 'inport == "ls1-ovs1-ns1" && eth.src == 00:00:00:00:00:01 && eth.dst == 00:00:00:00:00:02'

# reg14=0x1,vlan_tci=0x0000,dl_src=00:00:00:00:00:01,dl_dst=00:00:00:00:00:02,dl_type=0x0000

ingress(dp="ls1", inport="ls1-ovs1-ns1")

----------------------------------------

0. ls_in_port_sec_l2 (northd.c:5652): inport == "ls1-ovs1-ns1" && eth.src == {00:00:00:00:00:01}, priority 50, uuid fb09aa0d

next;

24. ls_in_l2_lkup (northd.c:8626): eth.dst == 00:00:00:00:00:02, priority 50, uuid 6fbf2824

outport = "ls1-ovs2-ns1";

output;

egress(dp="ls1", inport="ls1-ovs1-ns1", outport="ls1-ovs2-ns1")

---------------------------------------------------------------

9. ls_out_port_sec_l2 (northd.c:5749): outport == "ls1-ovs2-ns1" && eth.dst == {00:00:00:00:00:02}, priority 50, uuid b47a0dad

output;

/* output to "ls1-ovs2-ns1", type "" */

The logical flow is very simple: traffic enters at ls1-ovs1-ns1 and leaves at ls1-ovs2-ns1. Next, let's trace the actual OVS flow entries:

$ ovs-appctl ofproto/trace br-int in_port=veth0_br,dl_src=00:00:00:00:00:01,dl_dst=00:00:00:00:00:02 -generate

Flow: in_port=2,vlan_tci=0x0000,dl_src=00:00:00:00:00:01,dl_dst=00:00:00:00:00:02,dl_type=0x0000

bridge("br-int")

----------------

0. in_port=2, priority 100, cookie 0x9744e616

set_field:0x2->reg13

set_field:0x1->reg11

set_field:0x3->reg12

set_field:0x1->metadata

set_field:0x1->reg14

resubmit(,8)

8. reg14=0x1,metadata=0x1,dl_src=00:00:00:00:00:01, priority 50, cookie 0xfb09aa0d

resubmit(,9)

9. metadata=0x1, priority 0, cookie 0xe1f6688a

resubmit(,10)

10. metadata=0x1, priority 0, cookie 0x206647c1

resubmit(,11)

11. metadata=0x1, priority 0, cookie 0xd9c15b2f

resubmit(,12)

12. metadata=0x1, priority 0, cookie 0x16271336

resubmit(,13)

13. metadata=0x1, priority 0, cookie 0x6755a0af

resubmit(,14)

14. metadata=0x1, priority 0, cookie 0xf93be0b2

resubmit(,15)

15. metadata=0x1, priority 0, cookie 0xe1c12540

resubmit(,16)

16. metadata=0x1, priority 65535, cookie 0x893f7d6

resubmit(,17)

17. metadata=0x1, priority 65535, cookie 0x3348210c

resubmit(,18)

18. metadata=0x1, priority 0, cookie 0xcc0ba6b

resubmit(,19)

19. metadata=0x1, priority 0, cookie 0x24ff1f92

resubmit(,20)

20. metadata=0x1, priority 0, cookie 0xbcbe5df

resubmit(,21)

21. metadata=0x1, priority 0, cookie 0xa1e819cc

resubmit(,22)

22. metadata=0x1, priority 0, cookie 0xde6b38e7

resubmit(,23)

23. metadata=0x1, priority 0, cookie 0xc2515403

resubmit(,24)

24. metadata=0x1, priority 0, cookie 0x774a7f92

resubmit(,25)

25. metadata=0x1, priority 0, cookie 0x2d51d29e

resubmit(,26)

26. metadata=0x1, priority 0, cookie 0xfb6ad009

resubmit(,27)

27. metadata=0x1, priority 0, cookie 0x15e0df4b

resubmit(,28)

28. metadata=0x1, priority 0, cookie 0xa714633b

resubmit(,29)

29. metadata=0x1, priority 0, cookie 0x24266311

resubmit(,30)

30. metadata=0x1, priority 0, cookie 0x82ba2720

resubmit(,31)

31. metadata=0x1, priority 0, cookie 0x939bced7

resubmit(,32)

32. metadata=0x1,dl_dst=00:00:00:00:00:02, priority 50, cookie 0x6fbf2824

set_field:0x2->reg15

resubmit(,37)

37. reg15=0x2,metadata=0x1, priority 100, cookie 0xf1bcaf3a

set_field:0x1/0xffffff->tun_id

set_field:0x2->tun_metadata0

move:NXM_NX_REG14[0..14]->NXM_NX_TUN_METADATA0[16..30]

-> NXM_NX_TUN_METADATA0[16..30] is now 0x1

output:1

-> output to kernel tunnel

Final flow: reg11=0x1,reg12=0x3,reg13=0x2,reg14=0x1,reg15=0x2,tun_id=0x1,metadata=0x1,in_port=2,vlan_tci=0x0000,dl_src=00:00:00:00:00:01,dl_dst=00:00:00:00:00:02,dl_type=0x0000

Megaflow: recirc_id=0,eth,in_port=2,dl_src=00:00:00:00:00:01,dl_dst=00:00:00:00:00:02,dl_type=0x0000

Datapath actions: set(tunnel(tun_id=0x1,dst=192.168.31.47,ttl=64,tp_dst=6081,geneve({class=0x102,type=0x80,len=4,0x10002}),flags(df|csum|key))),2

At the physical level, the packet ends with output to kernel tunnel, which means it enters the Geneve tunnel.

To verify this, let's capture the actual packets:

# Run ping on ovs1

$ ip netns exec ns1 ping -c1 10.1.1.2

PING 10.1.1.2 (10.1.1.2) 56(84) bytes of data.

64 bytes from 10.1.1.2: icmp_seq=1 ttl=64 time=2.34 ms

--- 10.1.1.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 2.340/2.340/2.340/0.000 ms

# On ovs2, capture on the enp1s0 NIC to see the Geneve-encapsulated packets

$ tcpdump -n -vvv -i enp1s0 port 6081

tcpdump: listening on enp1s0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

17:23:02.178390 IP (tos 0x0, ttl 64, id 45826, offset 0, flags [DF], proto UDP (17), length 142)

192.168.31.154.17461 > 192.168.31.47.6081: [bad udp cksum 0xc0a5 -> 0xf147!] Geneve, Flags [C], vni 0x1, options [class Open Virtual Networking (OVN) (0x102) type 0x80(C) len 8 data 00010002]

IP (tos 0x0, ttl 64, id 61779, offset 0, flags [DF], proto ICMP (1), length 84)

10.1.1.1 > 10.1.1.2: ICMP echo request, id 22757, seq 1, length 64

17:23:02.179570 IP (tos 0x0, ttl 64, id 38874, offset 0, flags [DF], proto UDP (17), length 142)

192.168.31.47.9016 > 192.168.31.154.6081: [bad udp cksum 0xc0a5 -> 0x1245!] Geneve, Flags [C], vni 0x1, options [class Open Virtual Networking (OVN) (0x102) type 0x80(C) len 8 data 00020001]

IP (tos 0x0, ttl 64, id 3198, offset 0, flags [none], proto ICMP (1), length 84)

10.1.1.2 > 10.1.1.1: ICMP echo reply, id 22757, seq 1, length 64

# On ovs2, capture on genev_sys_6081 to see the decapsulated packets

$ tcpdump -n -vvv -i genev_sys_6081

tcpdump: listening on genev_sys_6081, link-type EN10MB (Ethernet), snapshot length 262144 bytes

17:23:02.178439 IP (tos 0x0, ttl 64, id 61779, offset 0, flags [DF], proto ICMP (1), length 84)

10.1.1.1 > 10.1.1.2: ICMP echo request, id 22757, seq 1, length 64

17:23:02.179544 IP (tos 0x0, ttl 64, id 3198, offset 0, flags [none], proto ICMP (1), length 84)

10.1.1.2 > 10.1.1.1: ICMP echo reply, id 22757, seq 1, length 64
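The capture also shows what OVN carries in the tunnel header. vni 0x1 is the datapath key of ls1, which the table 0 flow moves from tun_id into metadata. The 4-byte OVN option data packs the logical port keys. The table 0 flow shown earlier copies bits 16..30 into reg14 (logical ingress port) and bits 0..15 into reg15 (logical egress port). This matches the physical trace, where tun_metadata0 is set to 0x2 and then reg14=0x1 is moved into bits 16..30. The following decoding sketch assumes a POSIX shell with 64-bit arithmetic. decode_ovn_geneve_opt is a hypothetical name.

```shell
# decode_ovn_geneve_opt <hex>  (hypothetical): split the OVN Geneve option
# data printed by tcpdump (e.g. "00010002") into logical port keys.
decode_ovn_geneve_opt() {
    val=$((0x$1))
    inport=$(( (val >> 16) & 0x7fff ))   # bits 16..30 -> reg14 (ingress port)
    outport=$(( val & 0xffff ))          # bits 0..15  -> reg15 (egress port)
    printf 'inport_key=%d outport_key=%d\n' "$inport" "$outport"
}
```

decode_ovn_geneve_opt 00010002 prints inport_key=1 outport_key=2 for the request from ls1-ovs1-ns1 (key 1) to ls1-ovs2-ns1 (key 2), and 00020001 gives the reverse for the reply. To map keys back to port names, run ovn-sbctl --columns=logical_port,tunnel_key list Port_Binding.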

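That concludes the Logical Switch part. The logical network is very simple, but it shows the most important thing OVN does: it turns the logical network we declare into the flows of the physical network.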

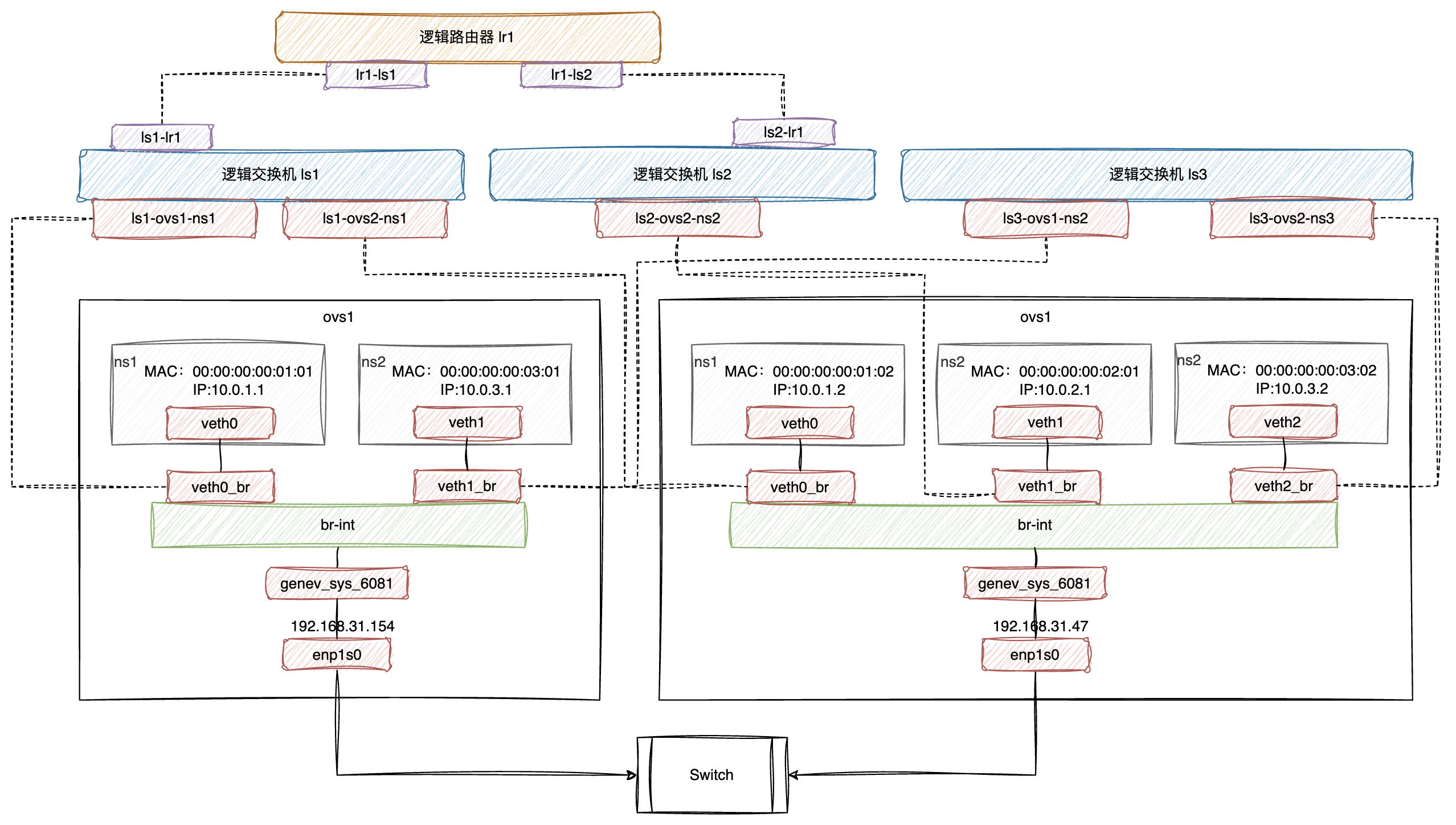

Logical Router

Having created a Logical Switch, we now create a Logical Router to connect networks at layer 3. The final logical network looks like this:

As with the Logical Switch, we build the physical part first. On ovs1:

$ ip netns add ns1

$ ip link add veth0 type veth peer name veth0_br

$ ip link set veth0 netns ns1

$ ip netns exec ns1 ip link set veth0 address 00:00:00:00:01:01

$ ip netns exec ns1 ip addr add 10.0.1.1/24 dev veth0

$ ip netns exec ns1 ip link set veth0 up

$ ip link set veth0_br up

$ ovs-vsctl add-port br-int veth0_br

$ ip netns add ns2

$ ip link add veth1 type veth peer name veth1_br

$ ip link set veth1 netns ns2

$ ip netns exec ns2 ip link set veth1 address 00:00:00:00:03:01

$ ip netns exec ns2 ip addr add 10.0.3.1/24 dev veth1

$ ip netns exec ns2 ip link set veth1 up

$ ip link set veth1_br up

$ ovs-vsctl add-port br-int veth1_br

$ ovs-vsctl show

7629ddb1-4771-495b-8ab6-1432bd9913bd

Bridge br-int

fail_mode: secure

datapath_type: system

Port ovn-ovs2-0

Interface ovn-ovs2-0

type: geneve

options: {csum="true", key=flow, remote_ip="192.168.31.47"}

Port br-int

Interface br-int

type: internal

Port veth0_br

Interface veth0_br

Port veth1_br

Interface veth1_br

ovs_version: "2.17.7"

On ovs2:

$ ip netns add ns1

$ ip link add veth0 type veth peer name veth0_br

$ ip link set veth0 netns ns1

$ ip netns exec ns1 ip link set veth0 address 00:00:00:00:01:02

$ ip netns exec ns1 ip addr add 10.0.1.2/24 dev veth0

$ ip netns exec ns1 ip link set veth0 up

$ ip link set veth0_br up

$ ovs-vsctl add-port br-int veth0_br

$ ip netns add ns2

$ ip link add veth1 type veth peer name veth1_br

$ ip link set veth1 netns ns2

$ ip netns exec ns2 ip link set veth1 address 00:00:00:00:02:01

$ ip netns exec ns2 ip addr add 10.0.2.1/24 dev veth1

$ ip netns exec ns2 ip link set veth1 up

$ ip link set veth1_br up

$ ovs-vsctl add-port br-int veth1_br

$ ip netns add ns3

$ ip link add veth2 type veth peer name veth2_br

$ ip link set veth2 netns ns3

$ ip netns exec ns3 ip link set veth2 address 00:00:00:00:03:02

$ ip netns exec ns3 ip addr add 10.0.3.2/24 dev veth2

$ ip netns exec ns3 ip link set veth2 up

$ ip link set veth2_br up

$ ovs-vsctl add-port br-int veth2_br

$ ovs-vsctl show

43452548-e283-4fd4-836c-0fcba8f4e543

Bridge br-int

fail_mode: secure

datapath_type: system

Port ovn-ovs1-0

Interface ovn-ovs1-0

type: geneve

options: {csum="true", key=flow, remote_ip="192.168.31.154"}

Port veth2_br

Interface veth2_br

Port veth1_br

Interface veth1_br

Port br-int

Interface br-int

type: internal

Port veth0_br

Interface veth0_br

ovs_version: "2.17.7"

With the physical network in place, we create the Logical Switches:

$ ovn-nbctl ls-add ls1

$ ovn-nbctl lsp-add ls1 ls1-ovs1-ns1

$ ovn-nbctl lsp-set-addresses ls1-ovs1-ns1 00:00:00:00:01:01

$ ovn-nbctl lsp-set-port-security ls1-ovs1-ns1 00:00:00:00:01:01

$ ovn-nbctl lsp-add ls1 ls1-ovs2-ns1

$ ovn-nbctl lsp-set-addresses ls1-ovs2-ns1 00:00:00:00:01:02

$ ovn-nbctl lsp-set-port-security ls1-ovs2-ns1 00:00:00:00:01:02

$ ovn-nbctl ls-add ls2

$ ovn-nbctl lsp-add ls2 ls2-ovs2-ns2

$ ovn-nbctl lsp-set-addresses ls2-ovs2-ns2 00:00:00:00:02:01

$ ovn-nbctl lsp-set-port-security ls2-ovs2-ns2 00:00:00:00:02:01

$ ovn-nbctl ls-add ls3

$ ovn-nbctl lsp-add ls3 ls3-ovs1-ns2

$ ovn-nbctl lsp-set-addresses ls3-ovs1-ns2 00:00:00:00:03:01

$ ovn-nbctl lsp-set-port-security ls3-ovs1-ns2 00:00:00:00:03:01

$ ovn-nbctl lsp-add ls3 ls3-ovs2-ns3

$ ovn-nbctl lsp-set-addresses ls3-ovs2-ns3 00:00:00:00:03:02

$ ovn-nbctl lsp-set-port-security ls3-ovs2-ns3 00:00:00:00:03:02

Bind the logical ports to the physical ports:

# ovs1

$ ovs-vsctl set interface veth0_br external-ids:iface-id=ls1-ovs1-ns1

$ ovs-vsctl set interface veth1_br external-ids:iface-id=ls3-ovs1-ns2

# ovs2

$ ovs-vsctl set interface veth0_br external-ids:iface-id=ls1-ovs2-ns1

$ ovs-vsctl set interface veth1_br external-ids:iface-id=ls2-ovs2-ns2

$ ovs-vsctl set interface veth2_br external-ids:iface-id=ls3-ovs2-ns3

So far nothing differs from the Logical Switch experiment, and hosts on the same Logical Switch can already reach each other:

# ovs1

$ ip netns exec ns1 ping -c 1 10.0.1.2

PING 10.0.1.2 (10.0.1.2) 56(84) bytes of data.

64 bytes from 10.0.1.2: icmp_seq=1 ttl=64 time=1.80 ms

--- 10.0.1.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.803/1.803/1.803/0.000 ms

# ovs1

$ ip netns exec ns2 ping -c 1 10.0.3.2

PING 10.0.3.2 (10.0.3.2) 56(84) bytes of data.

64 bytes from 10.0.3.2: icmp_seq=1 ttl=64 time=1.55 ms

--- 10.0.3.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.553/1.553/1.553/0.000 ms

Now we raise the bar: the 10.0.1.0/24 and 10.0.2.0/24 subnets should also be able to reach each other. This requires a Logical Router, lr1, that connects ls1 and ls2 at layer 3:

# Create the Logical Router and declare its ports for connecting to switches ls1 and ls2

$ ovn-nbctl lr-add lr1

$ ovn-nbctl lrp-add lr1 lr1-ls1 00:00:00:00:01:00 10.0.1.254/24

$ ovn-nbctl lrp-add lr1 lr1-ls2 00:00:00:00:02:00 10.0.2.254/24

# On switches ls1 and ls2, create the ports that connect to the router

$ ovn-nbctl lsp-add ls1 ls1-lr1

$ ovn-nbctl lsp-set-type ls1-lr1 router

$ ovn-nbctl lsp-set-addresses ls1-lr1 00:00:00:00:01:00

$ ovn-nbctl lsp-set-options ls1-lr1 router-port=lr1-ls1

$ ovn-nbctl lsp-add ls2 ls2-lr1

$ ovn-nbctl lsp-set-type ls2-lr1 router

$ ovn-nbctl lsp-set-addresses ls2-lr1 00:00:00:00:02:00

$ ovn-nbctl lsp-set-options ls2-lr1 router-port=lr1-ls2

# This experiment involves layer 3, so bind IPs to the ports on ls1 and ls2 (ls3 stays layer 2 only)

$ ovn-nbctl lsp-set-addresses ls1-lr1 "00:00:00:00:01:00 10.0.1.254"

$ ovn-nbctl lsp-set-addresses ls1-ovs1-ns1 "00:00:00:00:01:01 10.0.1.1"

$ ovn-nbctl lsp-set-addresses ls1-ovs2-ns1 "00:00:00:00:01:02 10.0.1.2"

$ ovn-nbctl lsp-set-addresses ls2-lr1 "00:00:00:00:02:00 10.0.2.254"

$ ovn-nbctl lsp-set-addresses ls2-ovs2-ns2 "00:00:00:00:02:01 10.0.2.1"

$ ovn-nbctl show

switch e8dd7d1c-1a76-4232-a511-daf404eea3e3 (ls2)

port ls2-lr1

type: router

addresses: ["00:00:00:00:02:00 10.0.2.254"]

router-port: lr1-ls2

port ls2-ovs2-ns2

addresses: ["00:00:00:00:02:01 10.0.2.1"]

switch fb8916b4-8676-47a4-89c4-12620056dc4f (ls1)

port ls1-lr1

type: router

addresses: ["00:00:00:00:01:00 10.0.1.254"]

router-port: lr1-ls1

port ls1-ovs2-ns1

addresses: ["00:00:00:00:01:02 10.0.1.2"]

port ls1-ovs1-ns1

addresses: ["00:00:00:00:01:01 10.0.1.1"]

switch 5273a84f-2398-4b27-8a1a-06e476de9619 (ls3)

port ls3-ovs2-ns3

addresses: ["00:00:00:00:03:02"]

port ls3-ovs1-ns2

addresses: ["00:00:00:00:03:01"]

router 0f68975a-5e4b-4229-973c-7fdae9db22c7 (lr1)

port lr1-ls1

mac: "00:00:00:00:01:00"

networks: ["10.0.1.254/24"]

port lr1-ls2

mac: "00:00:00:00:02:00"

networks: ["10.0.2.254/24"]
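Connecting a switch to a router always takes the same pair of objects. One is a router port with a MAC and a CIDR. The other is a switch port of type router whose router-port option names that router port and whose addresses match it. The following sketch creates both in one call. It assumes a POSIX shell and this article's naming convention (lr1-ls1 on the router side, ls1-lr1 on the switch side). ovn_connect_ls_lr and DRY_RUN are hypothetical names.

```shell
# run: execute a command, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# ovn_connect_ls_lr <router> <switch> <mac> <ip> <prefixlen>  (hypothetical)
ovn_connect_ls_lr() {
    lr=$1 lswitch=$2 mac=$3 ip=$4 plen=$5
    lrp="$lr-$lswitch"          # router-side port, e.g. lr1-ls1
    lsp="$lswitch-$lr"          # switch-side port, e.g. ls1-lr1
    run ovn-nbctl lrp-add "$lr" "$lrp" "$mac" "$ip/$plen" &&
    run ovn-nbctl lsp-add "$lswitch" "$lsp" &&
    run ovn-nbctl lsp-set-type "$lsp" router &&
    run ovn-nbctl lsp-set-addresses "$lsp" "$mac $ip" &&
    run ovn-nbctl lsp-set-options "$lsp" "router-port=$lrp"
}
```

ovn_connect_ls_lr lr1 ls1 00:00:00:00:01:00 10.0.1.254 24 reproduces the ls1 side above. Instead of repeating the MAC and IP, OVN also accepts the keyword router in lsp-set-addresses for ports of type router. The switch port then takes its addresses from the connected router port.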

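Creating Logical Router lr1 has already connected ls1 and ls2 logically. A cross-subnet ping still fails, though, because routing is missing on the "physical" side: the namespaces have no route to the other subnet. We need to add one in each namespace: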

$ ip netns exec ns1 ping -c 1 10.0.2.1

ping: connect: Network is unreachable

# ovs1

$ ip netns exec ns1 ip route add 10.0.2.0/24 via 10.0.1.254 dev veth0

$ ip netns exec ns1 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

10.0.1.0 0.0.0.0 255.255.255.0 U 0 0 0 veth0

10.0.2.0 10.0.1.254 255.255.255.0 UG 0 0 0 veth0

# ovs2

$ ip netns exec ns2 ip route add 10.0.1.0/24 via 10.0.2.254 dev veth1

$ ip netns exec ns2 route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

10.0.1.0 10.0.2.254 255.255.255.0 UG 0 0 0 veth1

10.0.2.0 0.0.0.0 255.255.255.0 U 0 0 0 veth1
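The earlier "Network is unreachable" came from ns1's routing table. ns1 had only the connected route for 10.0.1.0/24 and no default route. Since 10.0.2.1 falls outside that prefix, the kernel had nowhere to send the packet. The prefix test itself is just a masked comparison. Below is a sketch of it in POSIX shell arithmetic with hypothetical function names ip_to_int and ip_in_cidr. It does not model the kernel's real lookup, which is a longest-prefix match over all routes.

```shell
# ip_to_int <a.b.c.d>  (hypothetical): convert a dotted IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1                  # split the address into four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# ip_in_cidr <addr> <net/prefixlen>  (hypothetical): succeed if addr is inside net.
ip_in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    plen=${2#*/}
    mask=$(( (0xffffffff << (32 - $plen)) & 0xffffffff ))
    [ $(( $addr & $mask )) -eq $(( $net & $mask )) ]
}
```

ip_in_cidr 10.0.2.1 10.0.1.0/24 fails, which corresponds to the missing connected route. ip_in_cidr 10.0.2.1 10.0.2.0/24 succeeds, and that is the prefix of the route just added via 10.0.1.254.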

Ping again, and cross-subnet traffic now works:

$ ip netns exec ns1 ping -c 1 10.0.2.1

PING 10.0.2.1 (10.0.2.1) 56(84) bytes of data.

64 bytes from 10.0.2.1: icmp_seq=1 ttl=63 time=2.46 ms

--- 10.0.2.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 2.460/2.460/2.460/0.000 ms

ovn-trace shows the logical flows. Focusing on the ingress and egress lines, a packet entering ls1 is relayed by Logical Router lr1 and finally delivered to ls2:

$ ovn-trace --summary ls1 'inport == "ls1-ovs1-ns1" && eth.src == 00:00:00:00:01:01 && ip4.src == 10.0.1.1 && eth.dst == 00:00:00:00:01:00 && ip4.dst == 10.0.2.1 && ip.ttl == 64 && icmp4.type == 8'

# icmp,reg14=0x1,vlan_tci=0x0000,dl_src=00:00:00:00:01:01,dl_dst=00:00:00:00:01:00,nw_src=10.0.1.1,nw_dst=10.0.2.1,nw_tos=0,nw_ecn=0,nw_ttl=64,nw_frag=no,icmp_type=8,icmp_code=0

ingress(dp="ls1", inport="ls1-ovs1-ns1") {

next;

outport = "ls1-lr1";

output;

egress(dp="ls1", inport="ls1-ovs1-ns1", outport="ls1-lr1") {

next;

output;

/* output to "ls1-lr1", type "patch" */;

ingress(dp="lr1", inport="lr1-ls1") {

xreg0[0..47] = 00:00:00:00:01:00;

next;

reg9[2] = 1;

next;

next;

reg7 = 0;

next;

ip.ttl--;

reg8[0..15] = 0;

reg0 = ip4.dst;

reg1 = 10.0.2.254;

eth.src = 00:00:00:00:02:00;

outport = "lr1-ls2";

flags.loopback = 1;

next;

next;

reg8[0..15] = 0;

next;

next;

eth.dst = 00:00:00:00:02:01;

next;

output;

egress(dp="lr1", inport="lr1-ls1", outport="lr1-ls2") {

reg9[4] = 0;

next;

output;

/* output to "lr1-ls2", type "patch" */;

ingress(dp="ls2", inport="ls2-lr1") {

next;

next;

outport = "ls2-ovs2-ns2";

output;

egress(dp="ls2", inport="ls2-lr1", outport="ls2-ovs2-ns2") {

output;

/* output to "ls2-ovs2-ns2", type "" */;

};

};

};

};

};

};
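For longer topologies it helps to extract the hop sequence from the summary automatically. This sed sketch assumes the egress(dp=..., inport=..., outport=...) line format shown above. trace_hops is a hypothetical name. The trace also explains the ttl=63 in the successful ping: lr1's ingress pipeline executes ip.ttl--.

```shell
# trace_hops (hypothetical): read `ovn-trace --summary` output on stdin and
# print one "<datapath>: <inport> -> <outport>" line per egress stage.
trace_hops() {
    sed -n 's/.*egress(dp="\([^"]*\)", inport="\([^"]*\)", outport="\([^"]*\)").*/\1: \2 -> \3/p'
}
```

Piping the ovn-trace --summary command above into trace_hops should print ls1: ls1-ovs1-ns1 -> ls1-lr1, then lr1: lr1-ls1 -> lr1-ls2, then ls2: ls2-lr1 -> ls2-ovs2-ns2.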