1. Server information

All three virtual machines in this deployment were created with VMware Workstation Pro.

The virtual machines are as follows:

| Role | IP | OS | Software |
| --- | --- | --- | --- |
| master | 192.168.67.151 | CentOS 7.6 | Hadoop 3.2.1 |
| work1 | 192.168.67.152 | CentOS 7.6 | Hadoop 3.2.1 |
| work2 | 192.168.67.153 | CentOS 7.6 | Hadoop 3.2.1 |

2. Environment preparation

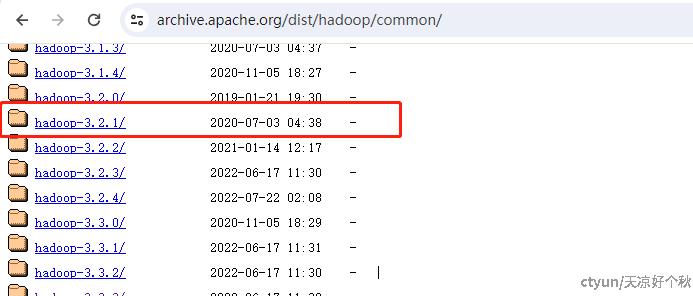

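2.1 Download Hadoop from the official website

2.2 Disable SELinux and the firewall, set the hostname and hosts entries, and configure SSH trust

Disable SELinux (all 3 nodes)

#Edit the SELinux configuration and set SELINUX=disabled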

vim /etc/selinux/config
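The manual edit above can also be scripted. The following is a minimal sketch, assuming GNU sed (as shipped with CentOS); `disable_selinux_in` is a hypothetical helper name, not part of any tool. It rewrites only the `SELINUX=` line and leaves `SELINUXTYPE=` untouched.

```shell
#!/usr/bin/env bash
# disable_selinux_in (hypothetical helper): set SELINUX=disabled in the
# given config file without opening an editor.
disable_selinux_in() {
  local cfg="$1"
  # Replace whatever value currently follows SELINUX= at the start of a line.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run as root, `disable_selinux_in /etc/selinux/config` applies the change to the real file. The setting takes effect after a reboot; `setenforce 0` switches the running system to permissive mode in the meantime.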

Disable the firewall (all 3 nodes)

#Stop the firewall

systemctl stop firewalld

#Prevent the firewall from starting at boot

systemctl disable firewalld

Set the hostname (all 3 nodes)

#On the other nodes, use work1 and work2 instead of master

hostnamectl set-hostname master

Add host name resolution to /etc/hosts (all 3 nodes)

192.168.67.151 master

192.168.67.152 work1

192.168.67.153 work2
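Appending these lines by hand on three machines makes it easy to add the same entry twice. The following is a minimal sketch of an idempotent append; `add_host_entry` is a hypothetical helper name, and the hosts file path is a parameter so the function can be tried on a copy first.

```shell
#!/usr/bin/env bash
# add_host_entry (hypothetical helper): append "IP NAME" to a hosts file
# only when that exact line is not already present.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  grep -qxF "$ip $name" "$hosts_file" || echo "$ip $name" >> "$hosts_file"
}
```

On each node, as root, `add_host_entry /etc/hosts 192.168.67.151 master` adds the first entry; repeat the call for work1 and work2.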

Set up SSH trust

ssh-keygen #Press Enter at every prompt to generate the key pair

ssh-copy-id root@work1 #Copy the public key to work1 and work2

ssh-copy-id root@work2
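With more than a couple of workers, the key distribution is easier as a loop. The following is a minimal sketch; `copy_key_to_workers` and the `SSH_COPY_ID_CMD` override are hypothetical names introduced here, and the host names passed in must match the entries in /etc/hosts.

```shell
#!/usr/bin/env bash
# copy_key_to_workers (hypothetical helper): run ssh-copy-id for root on
# every host given as an argument and stop at the first failure.
# SSH_COPY_ID_CMD is an illustrative override; set it to "echo" for a dry run.
copy_key_to_workers() {
  local host
  for host in "$@"; do
    "${SSH_COPY_ID_CMD:-ssh-copy-id}" "root@$host" || {
      echo "key copy failed for $host" >&2
      return 1
    }
  done
}
```

`copy_key_to_workers work1 work2` prompts once for each worker's root password. Afterwards `ssh root@work1 hostname` should return without asking for a password.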

3. Hadoop installation

3.1 Install Java (all 3 nodes)

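#!/bin/bash

#Install JDK 8u191 under /usr/local/java and register it in /etc/profile

echo "Installing JDK"

filePath=./jdk-8u191-linux-x64.tar.gz

installDir=/usr/local/java

mkdir -vp "$installDir"

#rm -rf /usr/local/java/jdk*

tar -zxvf "$filePath" -C "$installDir"

#The quoted 'EOF' keeps $JAVA_HOME and $JRE_HOME literal, so they expand when /etc/profile is read

cat >> /etc/profile << 'EOF'

export JAVA_HOME=/usr/local/java/jdk1.8.0_191

export JRE_HOME=/usr/local/java/jdk1.8.0_191/jre

export CLASSPATH=$JRE_HOME/lib:$JAVA_HOME/lib:$JRE_HOME/lib/charsets.jar

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

EOF

source /etc/profile

chmod -R 755 "$installDir"

echo "JDK installation complete"

When the script runs as a separate process, its own source /etc/profile does not affect your terminal, so run source /etc/profile again after the installation finishes.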

3.2 Extract Hadoop and edit the configuration files

cd /data/hadoop/src/hadoop-3.2.1/etc/hadoop

vi hadoop-env.sh #Add JAVA_HOME

export JAVA_HOME=/usr/local/java/jdk1.8.0_191

vi yarn-env.sh #Add JAVA_HOME

export JAVA_HOME=/usr/local/java/jdk1.8.0_191

vi workers

work1

work2

Edit core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/tmp</value>

</property>

</configuration>
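A typo in a property name or value is easy to miss in these XML files. The following is a minimal sketch of a helper that reads a value back from a site file; `get_property` is a hypothetical name, and the function assumes each `<name>` and `<value>` element sits on a single line as in the listing above. It is not a general XML parser.

```shell
#!/usr/bin/env bash
# get_property (hypothetical helper): print the <value> that follows the
# given <name> in a Hadoop *-site.xml file.
get_property() {
  local file="$1" key="$2"
  awk -v key="$key" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && match($0, /<value>.*<\/value>/) {
      # Strip the 7-character opening tag and the 8-character closing tag.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$file"
}
```

For example, `get_property core-site.xml fs.defaultFS` should print `hdfs://master:9000` for the file above. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolved.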

Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/data/hadoop/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/data/hadoop/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

Edit yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>

Edit the start and stop scripts and add the lines below; without them, Hadoop 3.2 reports an error when started as root.

cd /data/hadoop/src/hadoop-3.2.1/sbin

vi start-dfs.sh

#Add the following

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vi stop-dfs.sh

#Add the following

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vi start-yarn.sh

#Add the following

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

vi stop-yarn.sh

#Add the following

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root
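Editing four scripts under sbin works, but the same daemon-user variables can also be set once in etc/hadoop/hadoop-env.sh, which the Hadoop 3 start and stop scripts read. The following is a minimal sketch of that alternative, not the method used in this walkthrough; `append_run_as_users` is a hypothetical helper name, and it writes only the five `*_USER` variables that name the daemon accounts.

```shell
#!/usr/bin/env bash
# append_run_as_users (hypothetical helper): add the per-daemon user
# variables to a hadoop-env.sh file, skipping any that are already defined.
append_run_as_users() {
  local env_file="$1" user="$2" var
  for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
             YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
    grep -q "^export $var=" "$env_file" 2>/dev/null \
      || echo "export $var=$user" >> "$env_file"
  done
}
```

With the paths used here, the call would be `append_run_as_users /data/hadoop/src/hadoop-3.2.1/etc/hadoop/hadoop-env.sh root`. Running it a second time adds nothing.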

Add the Hadoop environment variables

vi /etc/profile

export HADOOP_HOME=/data/hadoop/src/hadoop-3.2.1

export PATH=$PATH:$HADOOP_HOME/bin

Reload the environment variables

source /etc/profile

Copy the hadoop directory to the worker nodes

scp -r /data/hadoop/ root@192.168.67.152:/data

scp -r /data/hadoop/ root@192.168.67.153:/data

Initialize the Hadoop cluster by formatting the NameNode

hdfs namenode -format

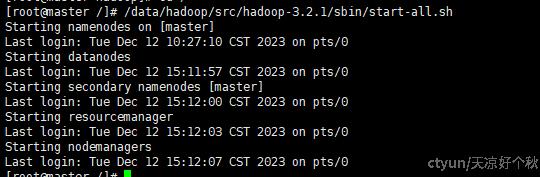

Start the Hadoop cluster

/data/hadoop/src/hadoop-3.2.1/sbin/start-all.sh

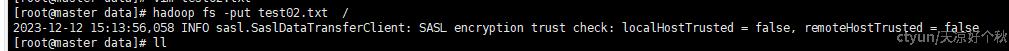

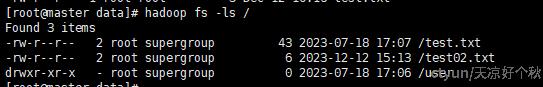
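A quick way to confirm the start-up is `jps`, which ships with the JDK and lists running Java processes. Given the configuration in this walkthrough, one would expect NameNode, SecondaryNameNode and ResourceManager on master, and DataNode and NodeManager on each worker; that list is an expectation derived from the config files, not output taken from the source. The following is a minimal sketch with a hypothetical helper, `missing_daemons`, that reads `jps` output on standard input and prints the expected daemons that are absent.

```shell
#!/usr/bin/env bash
# missing_daemons (hypothetical helper): read jps output on stdin and print
# each expected daemon name (given as arguments) that does not appear in it.
missing_daemons() {
  local running name
  running="$(cat)"
  for name in "$@"; do
    # -w matches whole words, so SecondaryNameNode does not count as NameNode.
    printf '%s\n' "$running" | grep -qw -- "$name" || echo "$name"
  done
}
```

On master: `jps | missing_daemons NameNode SecondaryNameNode ResourceManager`. On a worker: `jps | missing_daemons DataNode NodeManager`. No output means every expected daemon is running.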

Test the cluster

Upload a file to the cluster

#Upload

hadoop fs -put test02.txt /

#List the files

hadoop fs -ls /

Run a Hadoop job

cd /data/hadoop/src/hadoop-3.2.1/share/hadoop/mapreduce/

hadoop jar ./hadoop-mapreduce-examples-3.2.1.jar pi 5 10

Number of Maps = 5

Samples per Map = 10

2023-12-12 15:15:42,094 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Wrote input for Map #0

2023-12-12 15:15:42,238 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Wrote input for Map #1

2023-12-12 15:15:42,262 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Wrote input for Map #2

2023-12-12 15:15:42,690 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Wrote input for Map #3

2023-12-12 15:15:42,775 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Wrote input for Map #4

Starting Job

2023-12-12 15:15:42,845 INFO impl.MetricsConfig: Loaded properties from hadoop-metrics2.properties

2023-12-12 15:15:42,894 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2023-12-12 15:15:46,226 INFO mapred.LocalJobRunner: Finishing task: attempt_local427066532_0001_r_000000_0

2023-12-12 15:15:46,226 INFO mapred.LocalJobRunner: reduce task executor complete.

2023-12-12 15:15:46,425 INFO mapreduce.Job: map 100% reduce 100%

2023-12-12 15:15:46,425 INFO mapreduce.Job: Job job_local427066532_0001 completed successfully

2023-12-12 15:15:46,434 INFO mapreduce.Job: Counters: 36

File System Counters

FILE: Number of bytes read=1913980

FILE: Number of bytes written=5033552

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2360

HDFS: Number of bytes written=3755

HDFS: Number of read operations=89

HDFS: Number of large read operations=0

HDFS: Number of write operations=45

HDFS: Number of bytes read erasure-coded=0

Map-Reduce Framework

Map input records=5

Map output records=10

Map output bytes=90

Map output materialized bytes=140

Input split bytes=715

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=140

Reduce input records=10

Reduce output records=0

Spilled Records=20

Shuffled Maps =5

Failed Shuffles=0

Merged Map outputs=5

GC time elapsed (ms)=39

Total committed heap usage (bytes)=3155165184

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=590

File Output Format Counters

Bytes Written=97

Job Finished in 3.642 seconds

Estimated value of Pi is 3.28000000000000000000
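The estimate is coarse because the job used very few samples. The pi example computes 4 × (points inside the circle) / (total points); with 5 maps of 10 samples each there are 50 points, and 3.28 corresponds to 41 of them falling inside. The figure 41 is inferred by working backwards from the output and does not appear in the log. The following is a minimal sketch of that arithmetic, with `pi_estimate` as a hypothetical helper name.

```shell
#!/usr/bin/env bash
# pi_estimate (hypothetical helper): reproduce the final arithmetic of the
# pi example, 4 * inside / total, rounded to two decimals.
pi_estimate() {
  local inside="$1" total="$2"
  awk -v i="$inside" -v t="$total" 'BEGIN { printf "%.2f\n", 4 * i / t }'
}
```

`pi_estimate 41 50` prints 3.28. Raising the two arguments of the `pi` job (number of maps and samples per map) gives the estimator more points and a value closer to 3.14.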