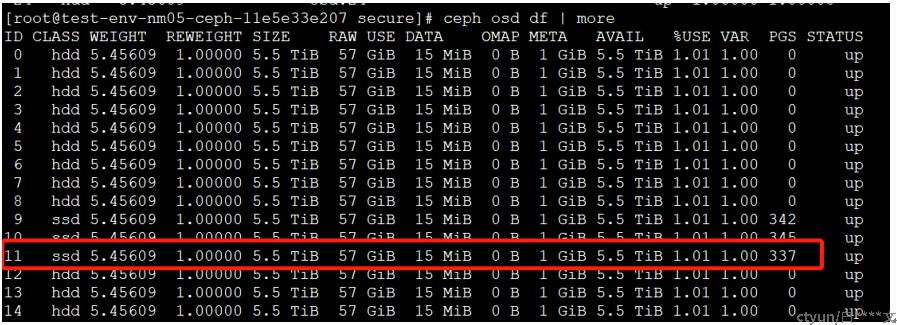

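Ceph device classes. By default, each OSD automatically sets its device class to hdd, ssd, or nvme, based on the hardware properties exposed by the Linux kernel.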

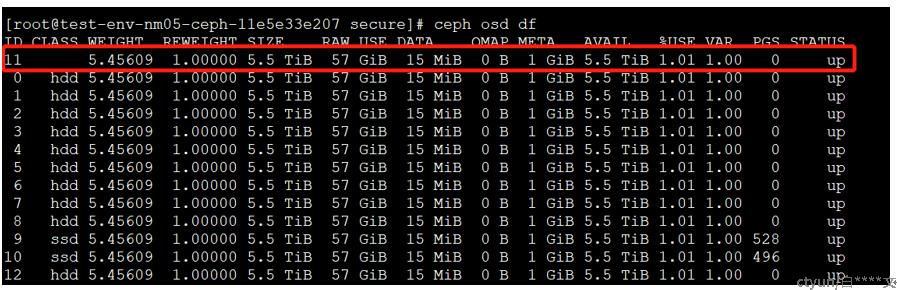

List the device classes currently defined in the cluster:

# ceph osd crush class ls

[

"hdd"

]

Create a device class named ssd:

# ceph osd crush class create ssd

created class ssd with id 1 to crush map

List all OSDs that belong to a given device class:

# ceph osd crush class ls-osd <class-name>

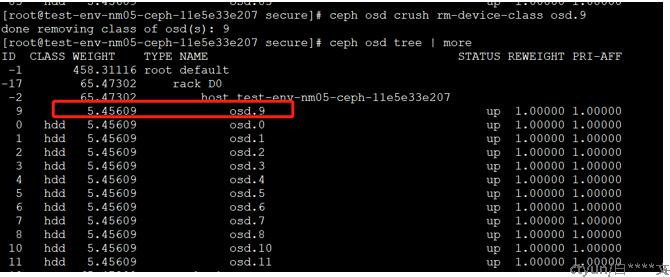

Remove the device class label from an OSD:

# ceph osd crush rm-device-class osd.9

done removing class of osd(s): 9

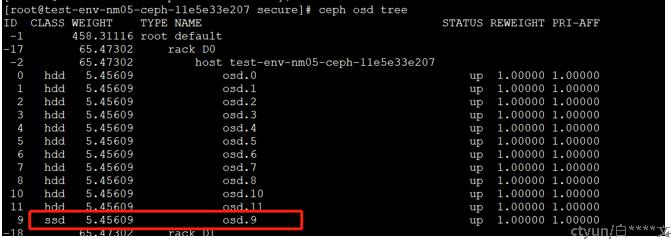

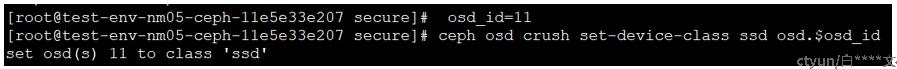

Set the device class label on an OSD:

# ceph osd crush set-device-class ssd osd.$osd_id

set osd(s) 9 to class 'ssd'
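When several OSDs need the same label, the remove-then-set sequence above can be wrapped in a small helper. The following is a minimal sketch, not part of the original walkthrough: the function name `relabel_osds` and the `CEPH` override variable are hypothetical, introduced here so the commands can be previewed with `CEPH=echo` before touching a cluster. It removes any existing class first, on the assumption that an OSD which already carries a different class must have it removed before a new one can be set.

```shell
#!/bin/sh
# Hypothetical helper: relabel one or more OSDs with a new device class.
# CEPH defaults to the real CLI; set CEPH=echo to print the commands instead.
CEPH="${CEPH:-ceph}"

relabel_osds() {
    class="$1"
    shift
    for id in "$@"; do
        # Drop any existing class, then apply the new one.
        $CEPH osd crush rm-device-class "osd.$id"
        # Stop at the first OSD whose class cannot be set.
        $CEPH osd crush set-device-class "$class" "osd.$id" || return 1
    done
}

# Example (dry run): CEPH=echo; relabel_osds ssd 9 10 11
```

Running it in dry-run mode first makes it easy to review exactly which OSD ids will be touched.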

List the OSDs currently in the ssd device class:

# ceph osd crush class ls-osd ssd

9

10

11

21

22

23

33

34

35

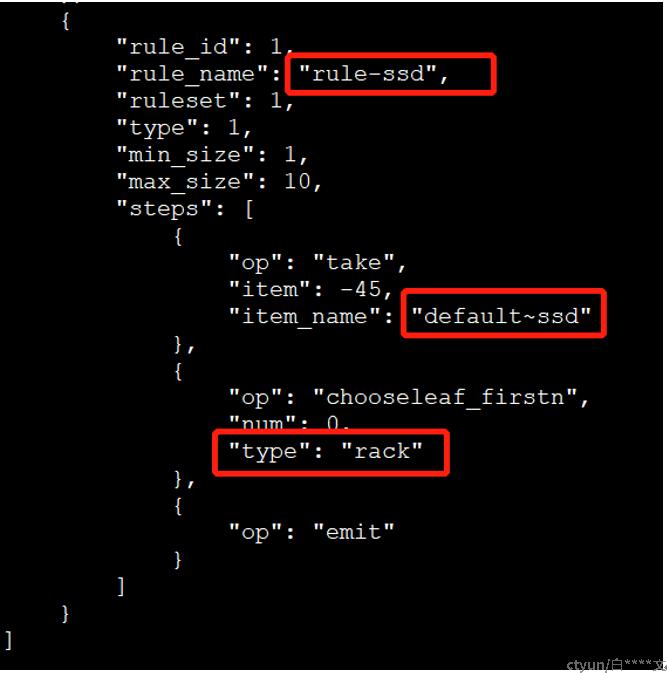

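Create a replicated CRUSH rule that targets the new ssd device class and uses rack as the failure domain. The arguments are, in order, the rule name, the CRUSH root, the failure domain type, and the device class: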

# ceph osd crush rule create-replicated rule-ssd default rack ssd

# ceph osd crush rule ls

replicated_rule

rule-ssd

Create a pool that uses the new rule:

# ceph osd pool create ssdpool 1024 1024 replicated rule-ssd

# ceph osd pool ls detail

pool 1 'ssdpool' replicated size 3 min_size 2 crush_rule 1 object_hash rjenkins pg_num 1024 pgp_num 1024 autoscale_mode warn last_change 418 flags hashpspool stripe_width 0
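The `crush_rule 1` field in this output is a numeric rule id, which here presumably corresponds to rule-ssd since it was the second rule created. As a hedged sketch, the hypothetical helper `pool_crush_rule` below pulls that id out of `ceph osd pool ls detail` output read from stdin; it assumes the single-line-per-pool format shown above, which may differ between Ceph releases.

```shell
#!/bin/sh
# Hypothetical helper: print the crush_rule id of the named pool, reading
# `ceph osd pool ls detail` output on stdin.
# Assumes the "pool <id> '<name>' ... crush_rule <n> ..." layout shown above.
pool_crush_rule() {
    sed -n "s/^pool [0-9]* '$1' .* crush_rule \([0-9]*\) .*/\1/p"
}

# Example: ceph osd pool ls detail | pool_crush_rule ssdpool
# Alternative without parsing: ceph osd pool get ssdpool crush_rule
```

The `ceph osd pool get` form is usually the simpler check; the parser is only useful when the detail output has already been captured.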

Create an RBD image, then check how its data is distributed:

# rbd create ssdpool/imagetest --size 100G

List the objects in the pool:

# rados ls -p ssdpool

rbd_directory

rbd_info

rbd_id.imagetest

rbd_header.7795dd7a2999

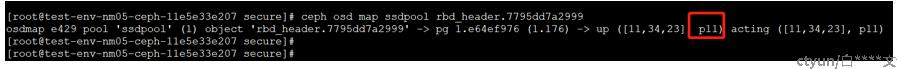

Check where an object is placed. It maps only to OSDs carrying the ssd label:

# ceph osd map ssdpool rbd_header.7795dd7a2999

osdmap e425 pool 'ssdpool' (1) object 'rbd_header.7795dd7a2999' -> pg 1.e64ef976 (1.176) -> up ([11,34,23], p11) acting ([11,34,23], p11)
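The check that every OSD in the acting set belongs to the ssd class can also be done mechanically. The sketch below is illustrative only: `acting_set` and `all_in_class` are hypothetical helper names, and the parsing assumes the `acting ([a,b,c], pN)` format shown in the output above.

```shell
#!/bin/sh
# Hypothetical helper: print the acting set from `ceph osd map` output on
# stdin, one OSD id per line.
acting_set() {
    sed -n 's/.*acting (\[\([0-9,]*\)].*/\1/p' | tr ',' '\n'
}

# Hypothetical helper: succeed only if every id read from stdin appears in
# the list of class members passed as arguments.
all_in_class() {
    while read -r id; do
        case " $* " in
            *" $id "*) ;;   # id is a member of the class
            *) echo "osd.$id is not in the class" >&2; return 1 ;;
        esac
    done
}

# Example:
#   ceph osd map ssdpool rbd_header.7795dd7a2999 | acting_set \
#       | all_in_class $(ceph osd crush class ls-osd ssd)
```

For the mapping shown above, the acting set 11, 34, 23 is fully contained in the ssd class list printed earlier, so the check would succeed.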

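Remove the device class label from osd.11. The data distribution changes immediately, and recovery starts in the background:

All PGs on osd.11 are migrated off it:

Then set the device class of osd.11 back to ssd:

Data is distributed to osd.11 again:

The PGs are rebalanced across the OSDs: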
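The commands for this last experiment are not shown in the text, so the following is a hedged reconstruction built from the commands used earlier plus `ceph status` and `ceph pg ls-by-osd` for observation. The function names `drop_class` and `restore_class` and the `CEPH` override variable are hypothetical; with `CEPH=echo` the commands are only printed.

```shell
#!/bin/sh
# Sketch of the osd.11 experiment. CEPH defaults to the real CLI;
# set CEPH=echo to print the commands instead of running them.
CEPH="${CEPH:-ceph}"

# Remove the label, then show cluster state and the PGs still on the OSD.
drop_class() {
    $CEPH osd crush rm-device-class "$1"
    $CEPH status
    $CEPH pg ls-by-osd "$1"
}

# Restore the label, then show cluster state and the PGs mapped to the OSD.
restore_class() {
    $CEPH osd crush set-device-class "$2" "$1"
    $CEPH status
    $CEPH pg ls-by-osd "$1"
}

# Example: drop_class osd.11, wait for recovery, then restore_class osd.11 ssd
```

Recovery takes time, so in practice the status and PG listing would be repeated until the cluster settles before moving on to the next step.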