1. Run the Hive plugin installation command

[qying@qying1 ranger-2.2.0-hive-plugin]$ ./enable-hive-plugin.sh

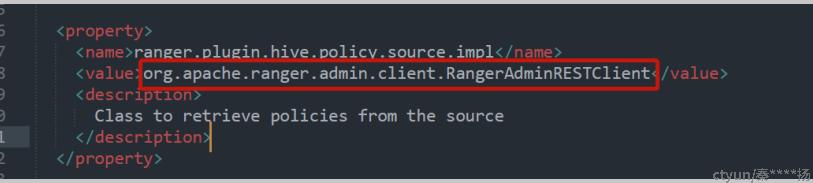

2. After the plugin is installed, Hive's configuration files contain security-related settings

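2.1 The security file (ranger-hive-security.xml) specifies the client

This is the Ranger client, which pulls role and permission (policy) information from the Ranger server: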

org.apache.ranger.admin.client.RangerAdminRESTClient

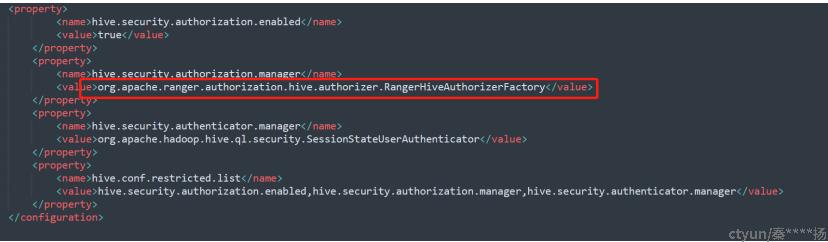

2.2 The hive-site file specifies Hive's authorization factory class, which creates the Ranger authorizer

Factory class:

org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory

Authorizer:

org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizer

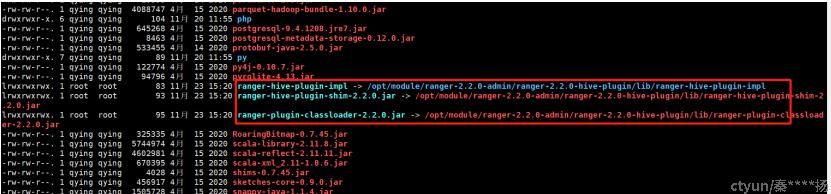
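How a class name in a configuration file turns into a live authorizer can be sketched as follows. This is a minimal illustration, not Hive's actual loading code: `Authorizer`, `AuthorizerFactory`, `DenyAllAuthorizerFactory` and `AuthorizerLoader` are hypothetical stand-ins, and only the property key `hive.security.authorization.manager` is taken from Hive itself.

```java
import java.util.Properties;

// Hypothetical stand-ins for Hive's HiveAuthorizer / HiveAuthorizerFactory interfaces.
interface Authorizer {
    boolean isAllowed(String user, String table);
}

interface AuthorizerFactory {
    Authorizer create();
}

// Hypothetical factory, playing the role that RangerHiveAuthorizerFactory plays for Ranger.
class DenyAllAuthorizerFactory implements AuthorizerFactory {
    @Override
    public Authorizer create() {
        return (user, table) -> false;
    }
}

final class AuthorizerLoader {
    // Hive property that names the authorization factory class.
    static final String MANAGER_KEY = "hive.security.authorization.manager";

    // Reads the factory class name from the configuration, instantiates it
    // reflectively, and asks it for an authorizer.
    static Authorizer load(Properties conf) {
        String factoryClass = conf.getProperty(MANAGER_KEY);
        if (factoryClass == null) {
            throw new IllegalStateException(MANAGER_KEY + " is not set");
        }
        try {
            Object factory = Class.forName(factoryClass).getDeclaredConstructor().newInstance();
            return ((AuthorizerFactory) factory).create();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot create authorizer factory " + factoryClass, e);
        }
    }
}
```

Swapping the authorization implementation then only requires changing the configured class name, which is what the plugin installation does.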

3. Check Hive's lib directory

The installation adds the jars needed for authorization.

4. What the jars do

1. ranger-plugin-classloader loads the plugin's extension jars from the ranger-%-plugin-impl directory

public RangerPluginClassLoader(String pluginType, Class<?> pluginClass ) throws Exception {

super(RangerPluginClassLoaderUtil.getInstance().getPluginFilesForServiceTypeAndPluginclass(pluginType, pluginClass), null);

componentClassLoader = AccessController.doPrivileged(

new PrivilegedAction<MyClassLoader>() {

public MyClassLoader run() {

return new MyClassLoader(Thread.currentThread().getContextClassLoader());

}

}

);

}

2. ranger-hive-plugin-shim.jar implements (overrides) Hive's authorization methods

Hive's original authorization interface: org.apache.hadoop.hive.ql.security.authorization.plugin.HiveAuthorizer

Ranger's implementation class: org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizer
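The split between a thin jar on Hive's classpath and implementation jars loaded by a dedicated class loader usually relies on switching the thread's context class loader around each delegated call. The sketch below shows only that switching pattern; `PluginClassLoaderScope` is a hypothetical helper and not a Ranger class, and the empty `URLClassLoader` stands in for a loader that would point at the implementation jars.

```java
import java.net.URL;
import java.net.URLClassLoader;

// Activates a plugin class loader for the current thread and restores the
// previous one on close, so it can be used with try-with-resources.
final class PluginClassLoaderScope implements AutoCloseable {
    private final ClassLoader previous;

    PluginClassLoaderScope(ClassLoader pluginLoader) {
        Thread current = Thread.currentThread();
        previous = current.getContextClassLoader();
        current.setContextClassLoader(pluginLoader);
    }

    @Override
    public void close() {
        Thread.currentThread().setContextClassLoader(previous);
    }

    // Returns true if the plugin loader was active inside the scope
    // and the original loader was restored afterwards.
    static boolean demo() {
        ClassLoader before = Thread.currentThread().getContextClassLoader();
        // An empty URL list keeps the example self-contained; a real loader
        // would list the jars of the implementation directory here.
        ClassLoader pluginLoader = new URLClassLoader(new URL[0], before);
        boolean activeInside;
        try (PluginClassLoaderScope scope = new PluginClassLoaderScope(pluginLoader)) {
            activeInside = Thread.currentThread().getContextClassLoader() == pluginLoader;
        }
        return activeInside && Thread.currentThread().getContextClassLoader() == before;
    }
}
```

Restoring the previous loader in `close()` keeps the plugin's dependencies from leaking into the host application's class resolution.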

Initialization flow of the implementation class:

1. Create the authorizer

public class RangerHiveAuthorizerFactory implements HiveAuthorizerFactory {

@Override

public HiveAuthorizer createHiveAuthorizer(HiveMetastoreClientFactory metastoreClientFactory,

HiveConf conf,

HiveAuthenticationProvider hiveAuthenticator,

HiveAuthzSessionContext sessionContext)

throws HiveAuthzPluginException {

// create the Ranger authorizer

return new RangerHiveAuthorizer(metastoreClientFactory, conf, hiveAuthenticator, sessionContext);

}

}

2. Create the plugin

org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizer#RangerHiveAuthorizer

public RangerHiveAuthorizer(HiveMetastoreClientFactory metastoreClientFactory,

HiveConf hiveConf,

HiveAuthenticationProvider hiveAuthenticator,

HiveAuthzSessionContext sessionContext) {

// pass the arguments on to the parent class

super(metastoreClientFactory, hiveConf, hiveAuthenticator, sessionContext);

RangerHivePlugin plugin = hivePlugin;

if(plugin == null) {

synchronized(RangerHiveAuthorizer.class) {

plugin = hivePlugin;

if(plugin == null) {

String appType = "unknown";

if(sessionContext != null) {

switch(sessionContext.getClientType()) {

case HIVECLI:

appType = "hiveCLI";

break;

case HIVESERVER2:

appType = "hiveServer2";

break;

}

}

plugin = new RangerHivePlugin(appType);

// initialize the plugin

plugin.init();

hivePlugin = plugin;

}

}

}

}
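The constructor above creates the shared plugin with double-checked locking, so that `init()` runs only once per JVM even when several sessions start at the same time. A minimal, self-contained version of the pattern is sketched below; `LazyPlugin` is a hypothetical class, and the sketch assumes the shared field is declared `volatile`, which the pattern needs for safe publication.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class LazyPlugin {
    // Counts how many times the expensive initialization ran.
    static final AtomicInteger INIT_CALLS = new AtomicInteger();

    // volatile makes the fully constructed instance visible to other threads.
    private static volatile LazyPlugin instance;

    final String appType;

    private LazyPlugin(String appType) {
        this.appType = appType;
        INIT_CALLS.incrementAndGet();
    }

    static LazyPlugin get(String appType) {
        LazyPlugin local = instance;          // first check, no lock
        if (local == null) {
            synchronized (LazyPlugin.class) {
                local = instance;             // second check, under the lock
                if (local == null) {
                    local = new LazyPlugin(appType);
                    instance = local;
                }
            }
        }
        return local;
    }

    // Calls get() from several threads and reports how often init ran.
    static int initCallsAfterConcurrentUse(int threads) {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> get("hiveServer2"));
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return INIT_CALLS.get();
    }
}
```

Note that the first caller decides the `appType`; later callers get the existing instance regardless of the argument they pass.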

3. Continue initialization

org.apache.ranger.authorization.hive.authorizer.RangerHivePlugin#init

@Override

public void init() {

// continue initialization in the parent class

super.init();

RangerHivePlugin.UpdateXaPoliciesOnGrantRevoke = getConfig().getBoolean(RangerHadoopConstants.HIVE_UPDATE_RANGER_POLICIES_ON_GRANT_REVOKE_PROP, RangerHadoopConstants.HIVE_UPDATE_RANGER_POLICIES_ON_GRANT_REVOKE_DEFAULT_VALUE);

RangerHivePlugin.BlockUpdateIfRowfilterColumnMaskSpecified = getConfig().getBoolean(RangerHadoopConstants.HIVE_BLOCK_UPDATE_IF_ROWFILTER_COLUMNMASK_SPECIFIED_PROP, RangerHadoopConstants.HIVE_BLOCK_UPDATE_IF_ROWFILTER_COLUMNMASK_SPECIFIED_DEFAULT_VALUE);

RangerHivePlugin.DescribeShowTableAuth = getConfig().get(RangerHadoopConstants.HIVE_DESCRIBE_TABLE_SHOW_COLUMNS_AUTH_OPTION_PROP, RangerHadoopConstants.HIVE_DESCRIBE_TABLE_SHOW_COLUMNS_AUTH_OPTION_PROP_DEFAULT_VALUE);

String fsSchemesString = getConfig().get(RANGER_PLUGIN_HIVE_ULRAUTH_FILESYSTEM_SCHEMES, RANGER_PLUGIN_HIVE_ULRAUTH_FILESYSTEM_SCHEMES_DEFAULT);

fsScheme = StringUtils.split(fsSchemesString, FILESYSTEM_SCHEMES_SEPARATOR_CHAR);

if (fsScheme != null) {

for (int i = 0; i < fsScheme.length; i++) {

fsScheme[i] = fsScheme[i].trim();

}

}

}
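The last part of `init()` turns a separator-delimited configuration value into a trimmed array of file system schemes. The sketch below reproduces that step with the JDK only; the comma separator and the sample value are assumptions for illustration, and unlike the original this version also drops tokens that are blank after trimming.

```java
import java.util.ArrayList;
import java.util.List;

final class FsSchemes {
    // Splits a comma-separated list of schemes and trims each entry.
    // Returns null for null input, mirroring the null check in init().
    static String[] parse(String csv) {
        if (csv == null) {
            return null;
        }
        List<String> schemes = new ArrayList<>();
        for (String token : csv.split(",")) {
            String trimmed = token.trim();
            if (!trimmed.isEmpty()) {
                schemes.add(trimmed);
            }
        }
        return schemes.toArray(new String[0]);
    }
}
```

For example, `FsSchemes.parse(" hdfs: , file: ")` yields the two entries `hdfs:` and `file:`.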

4. Initialize RangerBasePlugin

org.apache.ranger.plugin.service.RangerBasePlugin#init

public void init() {

// cleanup() resets the refresher and the policy engine

cleanup();

AuditProviderFactory providerFactory = AuditProviderFactory.getInstance();

if (!providerFactory.isInitDone()) {

if (pluginConfig.getProperties() != null) {

// initialize the audit provider

providerFactory.init(pluginConfig.getProperties(), getAppId());

} else {

LOG.error("Audit subsystem is not initialized correctly. Please check audit configuration. ");

LOG.error("No authorization audits will be generated. ");

}

}

if (!pluginConfig.getPolicyEngineOptions().disablePolicyRefresher) {

// create the refresher

refresher = new PolicyRefresher(this);

LOG.info("Created PolicyRefresher Thread(" + refresher.getName() + ")");

// run it as a daemon thread

refresher.setDaemon(true);

// pull roles, policies, etc. from the server

refresher.startRefresher();

}

for (RangerChainedPlugin chainedPlugin : chainedPlugins) {

chainedPlugin.init();

}

}

5. The refresher starts refreshing roles and policies

org.apache.ranger.plugin.util.PolicyRefresher#startRefresher

public void startRefresher() {

// load roles

loadRoles();

// load policies

loadPolicy();

// start the refresher thread

super.start();

policyDownloadTimer = new Timer("policyDownloadTimer", true);

try {

// periodically put a task on the blocking queue to wake the thread so that it reloads roles and policies from the Ranger server

policyDownloadTimer.schedule(new DownloaderTask(policyDownloadQueue), pollingIntervalMs, pollingIntervalMs);

} catch (IllegalStateException exception) {

policyDownloadTimer = null;

}

}
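The refresh mechanism described above (one synchronous load, then a timer that drops triggers into a blocking queue consumed by a daemon thread) can be sketched with JDK classes alone. `RefresherSketch` and its `download` callback are hypothetical; the callback stands in for loading roles and policies from the server.

```java
import java.util.Timer;
import java.util.TimerTask;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

final class RefresherSketch extends Thread {
    private final BlockingQueue<Object> triggers = new LinkedBlockingQueue<>();
    private final Runnable download;   // stands in for the role and policy download
    private final long intervalMs;
    private Timer timer;

    RefresherSketch(Runnable download, long intervalMs) {
        super("refresher-sketch");
        this.download = download;
        this.intervalMs = intervalMs;
        setDaemon(true);
    }

    void startRefresher() {
        download.run();                               // initial synchronous load
        start();                                      // start the consumer thread
        timer = new Timer("downloadTimer", true);
        timer.schedule(new TimerTask() {
            @Override
            public void run() {
                triggers.offer(Boolean.TRUE);         // wake the consumer thread
            }
        }, intervalMs, intervalMs);
    }

    @Override
    public void run() {
        try {
            while (true) {
                triggers.take();                      // blocks until the timer fires
                download.run();
            }
        } catch (InterruptedException e) {
            // interrupted by stopRefresher(): leave the loop
        }
    }

    void stopRefresher() {
        if (timer != null) {
            timer.cancel();
        }
        interrupt();
    }

    // Returns true if at least expectedLoads downloads happen within five seconds.
    static boolean demo(int expectedLoads, long intervalMs) {
        CountDownLatch latch = new CountDownLatch(expectedLoads);
        RefresherSketch refresher = new RefresherSketch(latch::countDown, intervalMs);
        refresher.startRefresher();
        try {
            return latch.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            refresher.stopRefresher();
        }
    }
}
```

Routing the timer through a queue keeps all downloads on a single thread, so two refreshes never run concurrently.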

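5. Methods of the Hive authorization interface HiveAuthorizer:

Two methods deserve particular attention.

1. Filtering listed objects (for example table names) by permission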

org.apache.hadoop.hive.ql.security.authorization.plugin.HiveAuthorizer#filterListCmdObjects

1.1 Filter table names

AuthorizationMetaStoreFilterHook

// filter table names

@Override

public List<String> filterTableNames(String catName, String dbName, List<String> tableList)

throws MetaException {

List<HivePrivilegeObject> listObjs = getHivePrivObjects(dbName, tableList);

return getTableNames(getFilteredObjects(listObjs));

}

1.2 Get the filtered objects

private List<HivePrivilegeObject> getFilteredObjects(List<HivePrivilegeObject> listObjs) throws MetaException {

SessionState ss = SessionState.get();

HiveAuthzContext.Builder authzContextBuilder = new HiveAuthzContext.Builder();

authzContextBuilder.setUserIpAddress(ss.getUserIpAddress());

authzContextBuilder.setForwardedAddresses(ss.getForwardedAddresses());

try {

// ask the authorizer to filter the objects

return ss.getAuthorizerV2().filterListCmdObjects(listObjs, authzContextBuilder.build());

} catch (HiveAuthzPluginException e) {

LOG.error("Authorization error", e);

throw new MetaException(e.getMessage());

} catch (HiveAccessControlException e) {

// authorization error is not really expected in a filter call

// the impl should have just filtered out everything. A checkPrivileges call

// would have already been made to authorize this action

LOG.error("AccessControlException", e);

throw new MetaException(e.getMessage());

}

}

1.3 How the permission check is done

org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizer#filterListCmdObjects

public List<HivePrivilegeObject> filterListCmdObjects(List<HivePrivilegeObject> objs,

HiveAuthzContext context)

throws HiveAuthzPluginException, HiveAccessControlException {

RangerPerfTracer perf = null;

RangerHiveAuditHandler auditHandler = new RangerHiveAuditHandler();

if(RangerPerfTracer.isPerfTraceEnabled(PERF_HIVEAUTH_REQUEST_LOG)) {

perf = RangerPerfTracer.getPerfTracer(PERF_HIVEAUTH_REQUEST_LOG, "RangerHiveAuthorizer.filterListCmdObjects()");

}

List<HivePrivilegeObject> ret = null;

// bail out early if nothing is there to validate!

if (objs == null) {

} else if (objs.isEmpty()) {

ret = objs;

} else if (getCurrentUserGroupInfo() == null) {

ret = objs;

} else {

// get user/group info

UserGroupInformation ugi = getCurrentUserGroupInfo(); // we know this can't be null since we checked it above!

HiveAuthzSessionContext sessionContext = getHiveAuthzSessionContext();

String user = ugi.getShortUserName();

Set<String> groups = Sets.newHashSet(ugi.getGroupNames());

Set<String> roles = getCurrentRolesForUser(user, groups);

if (ret == null) { // if we got any items to filter then we can't return back a null. We must return back a list even if its empty.

ret = new ArrayList<HivePrivilegeObject>(objs.size());

}

for (HivePrivilegeObject privilegeObject : objs) {

if (LOG.isDebugEnabled()) {

HivePrivObjectActionType actionType = privilegeObject.getActionType();

HivePrivilegeObjectType objectType = privilegeObject.getType();

String objectName = privilegeObject.getObjectName();

String dbName = privilegeObject.getDbname();

List<String> columns = privilegeObject.getColumns();

List<String> partitionKeys = privilegeObject.getPartKeys();

String commandString = context == null ? null : context.getCommandString();

String ipAddress = context == null ? null : context.getIpAddress();

}

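// build the resource to be filtered

RangerHiveResource resource = createHiveResourceForFiltering(privilegeObject);

if (resource == null) {

// error logging omitted in this excerpt

} else {

// build the Ranger Hive access request

RangerHiveAccessRequest request = new RangerHiveAccessRequest(resource, user, groups, roles, context, sessionContext);

// check whether the resource is accessible, using the policies cached by the plugin

RangerAccessResult result = hivePlugin.isAccessAllowed(request, auditHandler);

// handling of result (adding allowed objects to ret) is omitted in this excerpt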

}

}

}

auditHandler.flushAudit();

return ret;

}
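Stripped of logging and auditing, the filtering logic above follows a simple shape: return early for null or empty input, otherwise keep only the objects the policy check allows. The sketch below shows that shape with plain strings; `ListFilterSketch` is hypothetical and the `isAllowed` predicate stands in for the plugin's access check.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiPredicate;

final class ListFilterSketch {
    // Keeps only the tables that the given user may see.
    // isAllowed receives (user, table) and returns the access decision.
    static List<String> filter(List<String> tables, String user,
                               BiPredicate<String, String> isAllowed) {
        if (tables == null) {
            return null;                 // nothing to validate
        }
        if (tables.isEmpty()) {
            return tables;               // nothing to filter
        }
        // Once there are items to filter, always return a list, even if empty.
        List<String> ret = new ArrayList<>(tables.size());
        for (String table : tables) {
            if (isAllowed.test(user, table)) {
                ret.add(table);
            }
        }
        return ret;
    }
}
```

A command such as `SHOW TABLES` would then list only the surviving names instead of failing with an access error.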

1.4 Evaluate the request against the policies cached in the plugin

org.apache.ranger.plugin.service.RangerBasePlugin#isAccessAllowed

public RangerAccessResult isAccessAllowed(RangerAccessRequest request, RangerAccessResultProcessor resultProcessor) {

RangerAccessResult ret = null;

RangerPolicyEngine policyEngine = this.policyEngine;

if (policyEngine != null) {

// evaluate the permission against the cached policies

ret = policyEngine.evaluatePolicies(request, RangerPolicy.POLICY_TYPE_ACCESS, null);

}

if (ret != null) {

for (RangerChainedPlugin chainedPlugin : chainedPlugins) {

// chained plugins can adjust the result

RangerAccessResult chainedResult = chainedPlugin.isAccessAllowed(request);

if (chainedResult != null) {

updateResultFromChainedResult(ret, chainedResult);

}

}

}

if (policyEngine != null) {

policyEngine.evaluateAuditPolicies(ret);

}

if (resultProcessor != null) {

resultProcessor.processResult(ret);

}

return ret;

}
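The important point in this method is that no network call happens per request: the decision comes from a policy snapshot already held in memory, and chained plugins get a chance to adjust it. The sketch below illustrates that under strong simplifications. `CachedPolicyPlugin` and `Chained` are hypothetical, policies are reduced to a map from resource to allowed users, and a chained decision simply overrides the primary one, which is much cruder than Ranger's actual merge rules.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class CachedPolicyPlugin {
    // Hypothetical chained evaluator; returns null when it has no opinion.
    interface Chained {
        Boolean isAccessAllowed(String user, String resource);
    }

    // Snapshot installed by a background refresher: resource -> allowed users.
    private volatile Map<String, Set<String>> cachedPolicies;
    private final List<Chained> chainedPlugins = new ArrayList<>();

    void setPolicies(Map<String, Set<String>> snapshot) {
        cachedPolicies = snapshot;
    }

    void addChained(Chained plugin) {
        chainedPlugins.add(plugin);
    }

    // Returns null if no policies have been loaded yet, otherwise the decision.
    Boolean isAccessAllowed(String user, String resource) {
        Map<String, Set<String>> policies = cachedPolicies;   // read the snapshot once
        if (policies == null) {
            return null;
        }
        Boolean ret = policies.getOrDefault(resource, Collections.emptySet()).contains(user);
        for (Chained plugin : chainedPlugins) {
            Boolean chainedResult = plugin.isAccessAllowed(user, resource);
            if (chainedResult != null) {
                ret = chainedResult;                           // simplified override
            }
        }
        return ret;
    }
}
```

Reading the `volatile` field into a local variable first means a concurrent refresh cannot swap the snapshot halfway through one evaluation.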

2. Row filtering, column filtering, and data masking

org.apache.hadoop.hive.ql.security.authorization.plugin.HiveAuthorizer#applyRowFilterAndColumnMasking

Authorizer class: org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizer
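The effect of row filtering and column masking is easiest to see as a query rewrite: the table reference is replaced by a projection that masks selected columns and a predicate that hides rows. The sketch below builds such a query string. It is only an illustration of the idea, not Hive's or Ranger's rewrite code; `MaskingSketch` is hypothetical, and the table, columns, and filter in the usage note are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class MaskingSketch {
    // Builds a SELECT that applies a mask expression to each column that has one
    // and appends the row filter, if any, as a WHERE clause.
    static String rewrite(String table, List<String> columns,
                          Map<String, String> maskExprs, String rowFilter) {
        List<String> projections = new ArrayList<>();
        for (String column : columns) {
            String expr = maskExprs.get(column);
            // Unmasked columns pass through; masked ones keep their original name.
            projections.add(expr == null ? column : expr + " AS " + column);
        }
        String sql = "SELECT " + String.join(", ", projections) + " FROM " + table;
        if (rowFilter != null && !rowFilter.isEmpty()) {
            sql += " WHERE " + rowFilter;
        }
        return sql;
    }
}
```

With columns `name` and `ssn`, the mask `mask_show_last_n(ssn, 4)` on `ssn`, and the filter `region = 'US'` on table `emp`, the method returns `SELECT name, mask_show_last_n(ssn, 4) AS ssn FROM emp WHERE region = 'US'`.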