1. Use Cases

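KNI (Kernel NIC Interface) is the mechanism DPDK provides for exchanging packets between user-space applications and the kernel network stack. In essence, it registers a virtual network interface in the kernel and exchanges packets with user space through queues, much like other virtual interface implementations.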

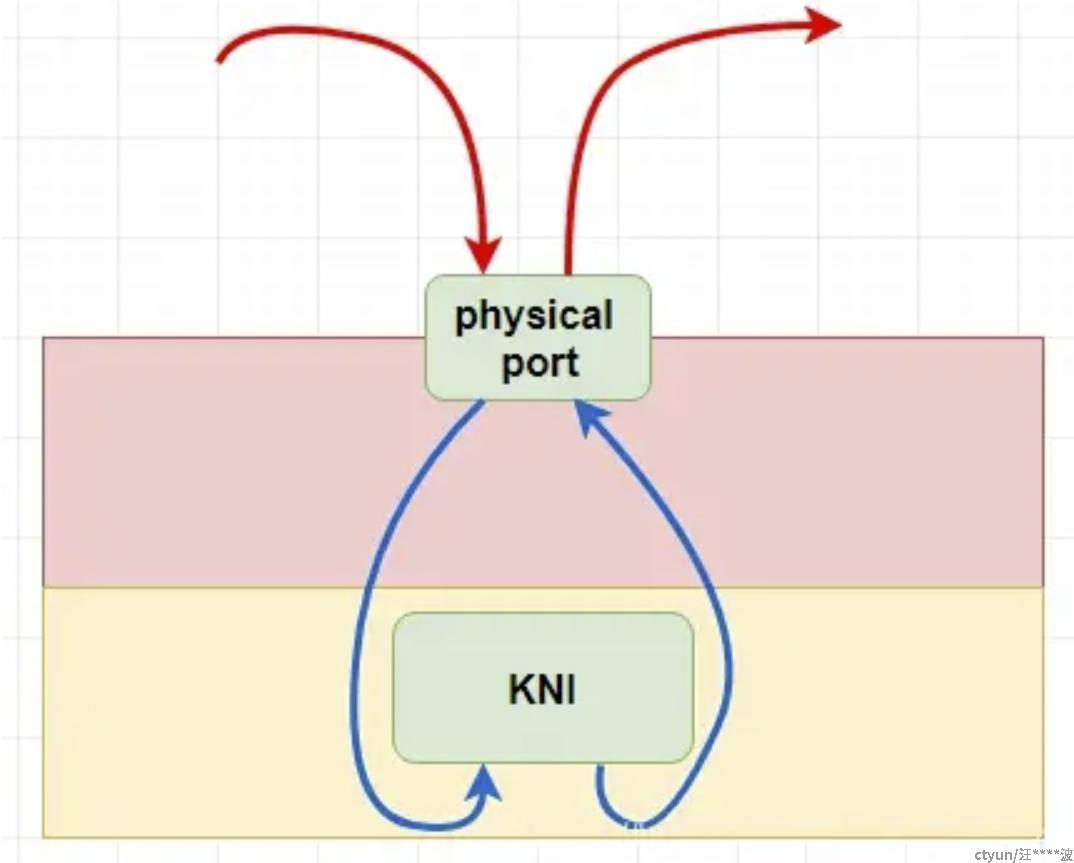

可以用下图描述kni的作用:

kni的基本使用场景如上,在DPDK中,当物理口收到报文但是用户态无法处理时,将报文转换写入队列,以此模拟KNI口收到了报文,报文经过协议栈处理后发出,相当于写入KNI和物理口的发送队列,
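To make the "user space cannot handle it" decision concrete, here is a minimal sketch of the kind of check a DPDK application might apply before handing a frame to KNI. The policy (only IPv4 stays in the fast path) and every name in it are hypothetical; real applications choose their own criteria.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical policy: this fast path handles only IPv4; every other
 * EtherType (ARP, IPv6, LLDP, ...) is handed to the kernel via KNI.
 * VLAN tags are not parsed in this simplified version. */
#define EXAMPLE_ETHERTYPE_IPV4 0x0800
#define EXAMPLE_ETH_HDR_LEN 14

enum verdict { VERDICT_FAST_PATH, VERDICT_TO_KNI, VERDICT_DROP };

static enum verdict classify_frame(const uint8_t *frame, size_t len)
{
    assert(frame != NULL);
    if (len < EXAMPLE_ETH_HDR_LEN)
        return VERDICT_DROP;            /* too short to be Ethernet */
    /* The EtherType is stored big-endian at bytes 12..13. */
    uint16_t ethertype = (uint16_t)((frame[12] << 8) | frame[13]);
    return ethertype == EXAMPLE_ETHERTYPE_IPV4 ? VERDICT_FAST_PATH
                                                : VERDICT_TO_KNI;
}
```

In an application, frames classified as VERDICT_TO_KNI would be passed to rte_kni_tx_burst (section 3.4), while the rest are processed in place.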

2. The KNI Kernel Module

KNI needs kernel support. In essence, the module registers a misc device, and user space creates the netdevice through ioctl commands issued on it.

[kernel/linux/kni/kni_misc.c]

```c
static int __init kni_init(void)
{
    int rc;

    if (kni_parse_kthread_mode() < 0) {
        pr_err("Invalid parameter for kthread_mode\n");
        return -EINVAL;
    }

    if (multiple_kthread_on == 0)
        pr_debug("Single kernel thread for all KNI devices\n");
    else
        pr_debug("Multiple kernel thread mode enabled\n");

    if (kni_parse_carrier_state() < 0) {
        pr_err("Invalid parameter for carrier\n");
        return -EINVAL;
    }

    ...

    rc = register_pernet_subsys(&kni_net_ops);
    if (rc)
        return -EPERM;

    rc = misc_register(&kni_misc);
    if (rc != 0) {
        pr_err("Misc registration failed\n");
        goto out;
    }

    /* Configure the lo mode according to the input parameter */
    kni_net_config_lo_mode(lo_mode);

    return 0;

    ...
}
```

The first step is validating the module parameters (the first value listed for each parameter is its default):

- lo_mode: lo_mode_none / lo_mode_fifo / lo_mode_fifo_skb

- kthread_mode: single / multiple (in multiple mode, each port can have several KNI interfaces, and each KNI interface gets its own kni kernel thread)

- carrier: off / on

I won't go through them one by one; the module parameter descriptions in the source are detailed.
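As an illustration of the kind of check that makes kni_init fail with -EINVAL, here is a user-space sketch modeled loosely on kni_parse_kthread_mode. The function name and the out-parameter are assumptions made for testability; the real module function sets the global multiple_kthread_on instead.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Validate a "single"/"multiple" string parameter.
 * NULL means the parameter was not given, so the default (single) applies.
 * Returns 0 on success, -1 on an unknown value. */
static int parse_kthread_mode(const char *mode, int *multiple_on)
{
    assert(multiple_on != NULL);
    *multiple_on = 0;                      /* default: single */
    if (mode == NULL || strcmp(mode, "single") == 0)
        return 0;
    if (strcmp(mode, "multiple") == 0) {
        *multiple_on = 1;
        return 0;
    }
    return -1;                             /* caller maps this to -EINVAL */
}
```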

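The per-network-namespace state set up through register_pernet_subsys is struct kni_net:

```c
struct kni_net {
    unsigned long device_in_use; /* device in use flag */
    struct mutex kni_kthread_lock;
    struct task_struct *kni_kthread;
    struct rw_semaphore kni_list_lock;
    struct list_head kni_list_head;
};
```

misc_register was covered in an earlier article: it is simply a character device framework. Here are the methods: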

```c
static const struct file_operations kni_fops = {
    .owner = THIS_MODULE,
    .open = kni_open,
    .release = kni_release,
    .unlocked_ioctl = (void *)kni_ioctl,
    .compat_ioctl = (void *)kni_compat_ioctl,
};

/* kni_fops must be defined before it is referenced here */
static struct miscdevice kni_misc = {
    .minor = MISC_DYNAMIC_MINOR,
    .name = KNI_DEVICE, /* #define KNI_DEVICE "kni" */
    .fops = &kni_fops,
};
```

Each method is explained below together with the user-space call that triggers it. For now it is enough to know that after misc_register, the system creates /dev/kni for us.

3. User-Space Usage

3.1 rte_kni_init

```c
int rte_kni_init(unsigned int max_kni_ifaces __rte_unused)
{
    /* Check FD and open */
    if (kni_fd < 0) {
        kni_fd = open("/dev/" KNI_DEVICE, O_RDWR);
        if (kni_fd < 0) {
            RTE_LOG(ERR, KNI,
                "Can not open /dev/%s\n", KNI_DEVICE);
            return -1;
        }
    }

    return 0;
}
```

It simply opens the character device /dev/kni. Here is what the kernel does in response:

```c
static int kni_open(struct inode *inode, struct file *file)
{
    struct net *net = current->nsproxy->net_ns;
    struct kni_net *knet = net_generic(net, kni_net_id);

    /* kni device can be opened by one user only per netns */
    if (test_and_set_bit(KNI_DEV_IN_USE_BIT_NUM, &knet->device_in_use))
        return -EBUSY;

    file->private_data = get_net(net);
    pr_debug("/dev/kni opened\n");

    return 0;
}
```

3.2 rte_kni_alloc

```c
struct rte_kni *rte_kni_alloc(struct rte_mempool *pktmbuf_pool,
                              const struct rte_kni_conf *conf,
                              struct rte_kni_ops *ops)
{
    ...

    kni = __rte_kni_get(conf->name);
    if (kni != NULL) {
        RTE_LOG(ERR, KNI, "KNI already exists\n");
        goto unlock;
    }

    te = rte_zmalloc("KNI_TAILQ_ENTRY", sizeof(*te), 0);
    if (te == NULL) {
        RTE_LOG(ERR, KNI, "Failed to allocate tailq entry\n");
        goto unlock;
    }

    kni = rte_zmalloc("KNI", sizeof(struct rte_kni), RTE_CACHE_LINE_SIZE);
    if (kni == NULL) {
        RTE_LOG(ERR, KNI, "KNI memory allocation failed\n");
        goto kni_fail;
    }

    snprintf(kni->name, RTE_KNI_NAMESIZE, "%s", conf->name);

    if (ops)
        memcpy(&kni->ops, ops, sizeof(struct rte_kni_ops));
    else
        kni->ops.port_id = UINT16_MAX;

    ...
}
```

It allocates the structure for each KNI interface and keeps track of it:

[lib/librte_kni/rte_kni.c]

```c
struct rte_kni {
    char name[RTE_KNI_NAMESIZE];          /**< KNI interface name */
    uint16_t group_id;                    /**< Group ID of KNI devices */
    uint32_t slot_id;                     /**< KNI pool slot ID */
    struct rte_mempool *pktmbuf_pool;     /**< pkt mbuf mempool */
    unsigned mbuf_size;                   /**< mbuf size */

    const struct rte_memzone *m_tx_q;     /**< TX queue memzone */
    const struct rte_memzone *m_rx_q;     /**< RX queue memzone */
    const struct rte_memzone *m_alloc_q;  /**< Alloc queue memzone */
    const struct rte_memzone *m_free_q;   /**< Free queue memzone */

    struct rte_kni_fifo *tx_q;            /**< TX queue */
    struct rte_kni_fifo *rx_q;            /**< RX queue */
    struct rte_kni_fifo *alloc_q;         /**< Allocated mbufs queue */
    struct rte_kni_fifo *free_q;          /**< To be freed mbufs queue */

    const struct rte_memzone *m_req_q;    /**< Request queue memzone */
    const struct rte_memzone *m_resp_q;   /**< Response queue memzone */
    const struct rte_memzone *m_sync_addr;/**< Sync addr memzone */

    /* For request & response */
    struct rte_kni_fifo *req_q;           /**< Request queue */
    struct rte_kni_fifo *resp_q;          /**< Response queue */
    void *sync_addr;                      /**< Req/Resp Mem address */

    struct rte_kni_ops ops;               /**< operations for request */
};
```

Next, it fills in struct rte_kni_device_info from the rte_kni_conf:

```c
memset(&dev_info, 0, sizeof(dev_info));
dev_info.bus = conf->addr.bus;
dev_info.devid = conf->addr.devid;
dev_info.function = conf->addr.function;
dev_info.vendor_id = conf->id.vendor_id;
dev_info.device_id = conf->id.device_id;
dev_info.core_id = conf->core_id;
dev_info.force_bind = conf->force_bind;
dev_info.group_id = conf->group_id;
dev_info.mbuf_size = conf->mbuf_size;
dev_info.mtu = conf->mtu;

memcpy(dev_info.mac_addr, conf->mac_addr, ETHER_ADDR_LEN);

snprintf(dev_info.name, RTE_KNI_NAMESIZE, "%s", conf->name);
```

While we are at it, here are the initialization parameters:

```c
struct rte_kni_conf {
    /*
     * KNI name which will be used in relevant network device.
     * Let the name as short as possible, as it will be part of
     * memzone name.
     */
    char name[RTE_KNI_NAMESIZE];
    uint32_t core_id;   /* Core ID to bind kernel thread on */
    uint16_t group_id;  /* Group ID */
    unsigned mbuf_size; /* mbuf size */
    struct rte_pci_addr addr;
    struct rte_pci_id id;

    __extension__
    uint8_t force_bind : 1; /* Flag to bind kernel thread */
    char mac_addr[ETHER_ADDR_LEN]; /* MAC address assigned to KNI */
    uint16_t mtu;
};
```

Next, the queue memory is allocated. The source comments explain it clearly, so I won't paste that code. The queues are tx_q, rx_q, alloc_q, free_q, req_q and resp_q, and alloc_q is also pre-filled:

/* Allocate mbufs and then put them into alloc_q */

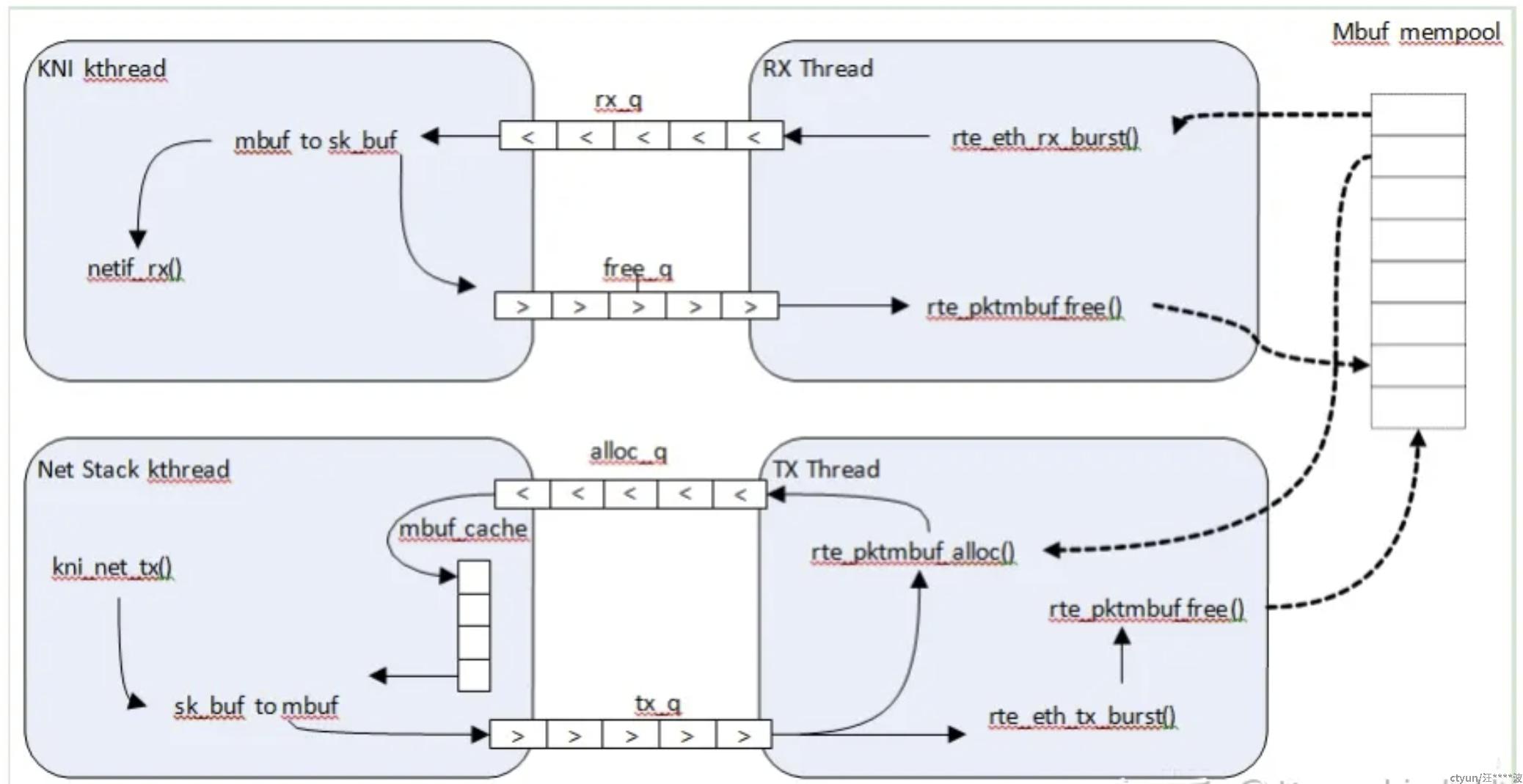

kni_allocate_mbufs(kni);在继续向下之前分析一下用户态与内核态报文交互的方式,来看DPDK用户手册的一张图:

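In the ingress direction, rte_eth_rx_burst allocates mbufs from the mbuf mempool, and they are passed to KNI through rx_q. The KNI kernel thread dequeues each mbuf from rx_q, converts it into an skb, and then returns the original mbuf through free_q to the user-space rx thread, which frees it. So on the ingress side, mbufs are managed entirely by the user-space rx thread.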

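In the egress direction, when the KNI interface transmits, it takes an mbuf from the mbuf cache (alloc_q), copies the skb contents into it, and enqueues it on tx_q. The user-space tx thread dequeues the mbuf and sends it. Because the mbuf is freed after transmission, user space must allocate a new mbuf and hand it back to the kernel through alloc_q. These are the mbufs that user space pre-filled into alloc_q above.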
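All six queues are rte_kni_fifo rings in memory shared by user space and the kernel, and each has exactly one producer and one consumer, which is why no lock is needed. The sketch below models that idea in plain user-space C. It is a simplification: the struct layout and function names are hypothetical, and the memory barriers a real cross-context ring needs are omitted.

```c
#include <assert.h>
#include <stdlib.h>

/* Single-producer/single-consumer ring of pointers. len must be a power
 * of two; one slot is always left empty so that read == write means
 * "empty" and (write + 1) == read means "full". */
struct fifo {
    unsigned write;   /* next slot the producer fills */
    unsigned read;    /* next slot the consumer takes */
    unsigned len;     /* number of slots */
    void *buffer[];
};

static struct fifo *fifo_create(unsigned len)
{
    assert(len >= 2 && (len & (len - 1)) == 0);
    struct fifo *f = calloc(1, sizeof(*f) + len * sizeof(void *));
    if (f != NULL)
        f->len = len;
    return f;
}

/* Enqueue up to num pointers; returns how many were actually queued. */
static unsigned fifo_put(struct fifo *f, void **data, unsigned num)
{
    unsigned i, w = f->write;
    for (i = 0; i < num; i++) {
        unsigned next = (w + 1) & (f->len - 1);
        if (next == f->read)            /* ring is full */
            break;
        f->buffer[w] = data[i];
        w = next;
    }
    f->write = w;                       /* publish once, after the copies */
    return i;
}

/* Dequeue up to num pointers; returns how many were actually taken. */
static unsigned fifo_get(struct fifo *f, void **data, unsigned num)
{
    unsigned i, r = f->read;
    for (i = 0; i < num && r != f->write; i++) {
        data[i] = f->buffer[r];
        r = (r + 1) & (f->len - 1);
    }
    f->read = r;
    return i;
}

/* Number of entries currently queued. */
static unsigned fifo_count(const struct fifo *f)
{
    return (f->len + f->write - f->read) & (f->len - 1);
}

/* Number of entries that can still be queued. */
static unsigned fifo_free_count(const struct fifo *f)
{
    return (f->read - f->write - 1) & (f->len - 1);
}
```

With these helpers, the rx_q budget computed later in kni_net_rx corresponds to taking the minimum of fifo_free_count(free_q) and the burst size before calling fifo_get on rx_q, which guarantees every dequeued mbuf a slot on its way back.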

Once the KNI resources are initialized, an ioctl creates the corresponding virtual interface in the kernel:

```c
ioctl(kni_fd, RTE_KNI_IOCTL_CREATE, &dev_info);
```

The driver handles this in kni_ioctl_create. Let's look at it in pieces. After some necessary checks, it allocates and registers the netdev:

```c
static int kni_ioctl_create(struct net *net, uint32_t ioctl_num,
                            unsigned long ioctl_param)
{
    ...

    net_dev = alloc_netdev(sizeof(struct kni_dev), dev_info.name,
#ifdef NET_NAME_USER
                           NET_NAME_USER,
#endif
                           kni_net_init);
    ...

    dev_net_set(net_dev, net);

    kni = netdev_priv(net_dev);
    kni->net_dev = net_dev;
    kni->group_id = dev_info.group_id;
    kni->core_id = dev_info.core_id;
    strncpy(kni->name, dev_info.name, RTE_KNI_NAMESIZE);

    /* Translate user space info into kernel space info */
    kni->tx_q = phys_to_virt(dev_info.tx_phys);
    kni->rx_q = phys_to_virt(dev_info.rx_phys);
    kni->alloc_q = phys_to_virt(dev_info.alloc_phys);
    kni->free_q = phys_to_virt(dev_info.free_phys);

    kni->req_q = phys_to_virt(dev_info.req_phys);
    kni->resp_q = phys_to_virt(dev_info.resp_phys);
    kni->sync_va = dev_info.sync_va;
    kni->sync_kva = phys_to_virt(dev_info.sync_phys);

    kni->mbuf_size = dev_info.mbuf_size;

    ...

    ret = register_netdev(net_dev);
    ...
}
```

The first thing to note is again how the queue resources are handled: these addresses all fall within the kernel's linear (direct) mapping, so a simple phys_to_virt conversion is enough.
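To see why a simple conversion suffices, here is the arithmetic of a linear mapping as a tiny sketch. The offset constant is an assumption (the value commonly documented for x86-64 with 4-level paging and KASLR disabled); the kernel's real phys_to_virt uses whatever base the running kernel chose.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed direct-map base; on a real system this depends on the kernel
 * configuration and on KASLR. */
#define EXAMPLE_PAGE_OFFSET 0xffff888000000000ULL

/* In a linear mapping, physical and virtual addresses differ by a single
 * constant, so each conversion is one addition or subtraction. */
static uint64_t example_phys_to_virt(uint64_t pa)
{
    return pa + EXAMPLE_PAGE_OFFSET;
}

static uint64_t example_virt_to_phys(uint64_t va)
{
    assert(va >= EXAMPLE_PAGE_OFFSET);
    return va - EXAMPLE_PAGE_OFFSET;
}
```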

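Second, alloc_netdev is given kni_net_init as its setup callback, and that is where netdev->netdev_ops is assigned (dev_net_set only attaches the device to the caller's network namespace):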

```c
void kni_net_init(struct net_device *dev)
{
    struct kni_dev *kni = netdev_priv(dev);

    init_waitqueue_head(&kni->wq);
    mutex_init(&kni->sync_lock);

    ether_setup(dev); /* assign some of the fields */
    dev->netdev_ops = &kni_net_netdev_ops;
    dev->header_ops = &kni_net_header_ops;
    dev->watchdog_timeo = WD_TIMEOUT;
}
```

Here are the methods:

```c
static const struct net_device_ops kni_net_netdev_ops = {
    .ndo_open = kni_net_open,
    .ndo_stop = kni_net_release,
    .ndo_set_config = kni_net_config,
    .ndo_change_rx_flags = kni_net_set_promiscusity,
    .ndo_start_xmit = kni_net_tx,
    .ndo_change_mtu = kni_net_change_mtu,
    .ndo_do_ioctl = kni_net_ioctl,
    .ndo_set_rx_mode = kni_net_set_rx_mode,
    .ndo_get_stats = kni_net_stats,
    .ndo_tx_timeout = kni_net_tx_timeout,
    .ndo_set_mac_address = kni_net_set_mac,
#ifdef HAVE_CHANGE_CARRIER_CB
    .ndo_change_carrier = kni_net_change_carrier,
#endif
};
```

The other piece is the KNI kernel thread:

```c
netif_carrier_off(net_dev);

ret = kni_run_thread(knet, kni, dev_info.force_bind);
if (ret != 0)
    return ret;

down_write(&knet->kni_list_lock);
list_add(&kni->list, &knet->kni_list_head);
up_write(&knet->kni_list_lock);
```

Let's look at kni_run_thread:

```c
static int
kni_run_thread(struct kni_net *knet, struct kni_dev *kni, uint8_t force_bind)
{
    /**
     * Create a new kernel thread for multiple mode, set its core affinity,
     * and finally wake it up.
     */
    if (multiple_kthread_on) {
        kni->pthread = kthread_create(kni_thread_multiple,
                                      (void *)kni, "kni_%s", kni->name);
        if (IS_ERR(kni->pthread)) {
            kni_dev_remove(kni);
            return -ECANCELED;
        }

        if (force_bind)
            kthread_bind(kni->pthread, kni->core_id);
        wake_up_process(kni->pthread);
    } else {
        mutex_lock(&knet->kni_kthread_lock);

        if (knet->kni_kthread == NULL) {
            knet->kni_kthread = kthread_create(kni_thread_single,
                                               (void *)knet, "kni_single");
            if (IS_ERR(knet->kni_kthread)) {
                mutex_unlock(&knet->kni_kthread_lock);
                kni_dev_remove(kni);
                return -ECANCELED;
            }

            if (force_bind)
                kthread_bind(knet->kni_kthread, kni->core_id);
            wake_up_process(knet->kni_kthread);
        }

        mutex_unlock(&knet->kni_kthread_lock);
    }

    return 0;
}
```

The KNI thread's job is to scan the queues and react to received packets and pending responses. First, multiple mode:

```c
static int kni_thread_multiple(void *param)
{
    int j;
    struct kni_dev *dev = param;

    while (!kthread_should_stop()) {
        for (j = 0; j < KNI_RX_LOOP_NUM; j++) {
            kni_net_rx(dev);
            kni_net_poll_resp(dev);
        }
#ifdef RTE_KNI_PREEMPT_DEFAULT
        schedule_timeout_interruptible(
            usecs_to_jiffies(KNI_KTHREAD_RESCHEDULE_INTERVAL));
#endif
    }

    return 0;
}
```

And for comparison, single mode:

```c
static int kni_thread_single(void *data)
{
    struct kni_net *knet = data;
    int j;
    struct kni_dev *dev;

    while (!kthread_should_stop()) {
        down_read(&knet->kni_list_lock);
        for (j = 0; j < KNI_RX_LOOP_NUM; j++) {
            list_for_each_entry(dev, &knet->kni_list_head, list) {
                kni_net_rx(dev);
                kni_net_poll_resp(dev);
            }
        }
        up_read(&knet->kni_list_lock);
#ifdef RTE_KNI_PREEMPT_DEFAULT
        /* reschedule out for a while */
        schedule_timeout_interruptible(
            usecs_to_jiffies(KNI_KTHREAD_RESCHEDULE_INTERVAL));
#endif
    }

    return 0;
}
```

As the code shows, single mode has only one kernel thread, knet->kni_kthread, so each scan walks every KNI interface on knet->kni_list_head. Multiple mode is different: every KNI interface has its own thread, kni->pthread.
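The difference between the two loops can be reduced to a small model. The device struct, the counters and the loop constant below are hypothetical stand-ins: the constant plays the role of KNI_RX_LOOP_NUM with an arbitrary value, and the counters replace the calls to kni_net_rx and kni_net_poll_resp.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a KNI device on the polling list. */
struct fake_kni {
    unsigned rx_polls;       /* times the rx handler ran */
    unsigned resp_polls;     /* times the response handler ran */
    struct fake_kni *next;
};

#define EXAMPLE_RX_LOOP_NUM 1000   /* arbitrary value for the sketch */

static void poll_one(struct fake_kni *d)
{
    d->rx_polls++;     /* kni_net_rx(dev) in the real thread */
    d->resp_polls++;   /* kni_net_poll_resp(dev) */
}

/* Single mode: one thread sweeps the whole list on every inner iteration.
 * The real loop holds knet->kni_list_lock for reading around the sweep. */
static void single_mode_round(struct fake_kni *head)
{
    for (unsigned j = 0; j < EXAMPLE_RX_LOOP_NUM; j++)
        for (struct fake_kni *d = head; d != NULL; d = d->next)
            poll_one(d);
}

/* Multiple mode: each device's own thread polls only that device. */
static void multiple_mode_round(struct fake_kni *dev)
{
    assert(dev != NULL);
    for (unsigned j = 0; j < EXAMPLE_RX_LOOP_NUM; j++)
        poll_one(dev);
}
```

The model makes the trade-off visible: in single mode a busy interface delays the sweep over all the others because they share one thread, while multiple mode gives each interface its own thread (optionally bound to core_id via force_bind) at the cost of one kthread per interface.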

Now let's look at packet reception in detail:

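```c
static kni_net_rx_t kni_net_rx_func = kni_net_rx_normal;

void kni_net_rx(struct kni_dev *kni)
{
    /**
     * It doesn't need to check if it is NULL pointer,
     * as it has a default value
     */
    (*kni_net_rx_func)(kni);
}
```

Referring to the figure above: on the receive path, the kni thread is responsible for rx_q and free_q. It first decides how many entries to dequeue from rx_q. Because every mbuf must be returned through free_q as soon as it has been converted to an skb, the count is bounded by the free space currently left in free_q: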

```c
/* Get the number of free entries in free_q */
num_fq = kni_fifo_free_count(kni->free_q);
if (num_fq == 0) {
    /* No room on the free_q, bail out */
    return;
}

/* Calculate the number of entries to dequeue from rx_q */
num_rx = min_t(uint32_t, num_fq, MBUF_BURST_SZ);

/* Burst dequeue from rx_q */
num_rx = kni_fifo_get(kni->rx_q, kni->pa, num_rx);
```

Next, the dequeued mbufs are converted into skbs:

```c
/* Transfer received packets to netif */
for (i = 0; i < num_rx; i++) {
    kva = pa2kva(kni->pa[i]);
    len = kva->pkt_len;
    data_kva = kva2data_kva(kva);
    kni->va[i] = pa2va(kni->pa[i], kva);

    skb = dev_alloc_skb(len + 2);
    if (!skb) {
        /* Update statistics */
        kni->stats.rx_dropped++;
        continue;
    }

    /* Align IP on 16B boundary */
    skb_reserve(skb, 2);

    if (kva->nb_segs == 1) {
        memcpy(skb_put(skb, len), data_kva, len);
    } else {
        int nb_segs;
        int kva_nb_segs = kva->nb_segs;

        /* Copy each segment of the mbuf chain into the linear skb */
        for (nb_segs = 0; nb_segs < kva_nb_segs; nb_segs++) {
            memcpy(skb_put(skb, kva->data_len),
                   data_kva, kva->data_len);

            if (!kva->next)
                break;

            kva = pa2kva(va2pa(kva->next, kva));
            data_kva = kva2data_kva(kva);
        }
    }

    skb->dev = dev; /* dev is kni->net_dev */
    skb->protocol = eth_type_trans(skb, dev);
    skb->ip_summed = CHECKSUM_UNNECESSARY;

    /* Call netif interface */
    netif_rx_ni(skb);

    /* Update statistics */
    kni->stats.rx_bytes += len;
    kni->stats.rx_packets++;
}
```

Once the conversion is done, the mbufs are enqueued on free_q:

```c
/* Burst enqueue mbufs into free_q */
ret = kni_fifo_put(kni->free_q, kni->va, num_rx);
```

3.3 rte_kni_update_link

This function controls the link state of the KNI netdev by writing 0 or 1 to /sys/devices/virtual/net/[kni_name]/carrier. Normally you simply keep the link state in sync with the corresponding physical port.
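The mechanism is ordinary file I/O on sysfs. A minimal sketch with POSIX calls follows; the helper names are hypothetical and this is not DPDK's own implementation. The interface name vEth0 is only an example, and writing to the real sysfs file requires that the KNI netdev exists and that the process has sufficient privileges.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* Build the sysfs carrier path for a KNI interface name.
 * Returns 0 on success, -1 if the result does not fit in buf. */
static int carrier_path(char *buf, size_t size, const char *ifname)
{
    int n = snprintf(buf, size, "/sys/devices/virtual/net/%s/carrier", ifname);
    return (n < 0 || (size_t)n >= size) ? -1 : 0;
}

/* Write "1" (link up) or "0" (link down) to a carrier file.
 * Returns 0 on success, -1 on error. */
static int write_carrier(const char *path, int up)
{
    assert(path != NULL);
    int fd = open(path, O_WRONLY);
    if (fd < 0)
        return -1;
    ssize_t n = write(fd, up ? "1" : "0", 1);
    close(fd);
    return n == 1 ? 0 : -1;
}
```

For example, carrier_path(p, sizeof p, "vEth0") followed by write_carrier(p, 1) would mark that interface's link as up.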

Bringing the interface up with ifconfig also works; that path goes through kni_net_open:

```c
static int kni_net_open(struct net_device *dev)
{
    int ret;
    struct rte_kni_request req;
    struct kni_dev *kni = netdev_priv(dev);

    netif_start_queue(dev);
    if (dflt_carrier == 1)
        netif_carrier_on(dev);
    else
        netif_carrier_off(dev);

    memset(&req, 0, sizeof(req));
    req.req_id = RTE_KNI_REQ_CFG_NETWORK_IF;

    /* Setting if_up to non-zero means up */
    req.if_up = 1;
    ret = kni_net_process_request(kni, &req);

    return (ret == 0) ? req.result : ret;
}
```

Note that even here, whether the link is reported up (netif_carrier_on) still depends on dflt_carrier.

3.4 rte_kni_tx_burst

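```c
unsigned rte_kni_tx_burst(struct rte_kni *kni, struct rte_mbuf **mbufs, unsigned num)
{
    void *phy_mbufs[num];
    unsigned int ret;
    unsigned int i;

    /* Hand the kernel physical addresses, not user virtual addresses */
    for (i = 0; i < num; i++)
        phy_mbufs[i] = va2pa(mbufs[i]);

    ret = kni_fifo_put(kni->rx_q, phy_mbufs, num);

    /* Get mbufs from free_q and then free them */
    kni_free_mbufs(kni);

    return ret;
}
```

This is the ingress direction, and the mbufs are allocated by user space. Note that the addresses exchanged between the two sides must be physical addresses, because 1) the kernel does not know how user-space virtual addresses map to physical addresses (the mapping may be non-linear), and 2) on some hardware, the kernel is not allowed to access user-space memory directly. To be safe, physical addresses are what go through the queues.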
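The va2pa helper above (and pa2va on the kernel side) can work with plain arithmetic because an mbuf records both the virtual address of its data buffer (buf_addr) and the matching physical (IOVA) address, and the mbuf header and its buffer live in one physically contiguous object. The difference VA - PA is therefore constant across that object. A self-contained sketch of the arithmetic, using a hypothetical two-field struct instead of the real rte_mbuf:

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical mbuf header reduced to the two fields the translation
 * needs: the buffer's user virtual address and its physical address. */
struct fake_mbuf {
    uint64_t buf_va;
    uint64_t buf_pa;
};

/* Virtual -> physical for any address inside the object m describes.
 * Unsigned arithmetic wraps correctly whichever address is larger. */
static uint64_t obj_va2pa(const struct fake_mbuf *m, uint64_t va)
{
    return va - (m->buf_va - m->buf_pa);
}

/* Physical -> virtual, the inverse of obj_va2pa. */
static uint64_t obj_pa2va(const struct fake_mbuf *m, uint64_t pa)
{
    return pa + (m->buf_va - m->buf_pa);
}
```

For instance, with a header 128 bytes before the buffer, translating the header's virtual address with the buffer's offset yields a physical address exactly 128 bytes before buf_pa.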

3.5 rte_kni_rx_burst

```c
unsigned rte_kni_rx_burst(struct rte_kni *kni, struct rte_mbuf **mbufs, unsigned num)
{
    unsigned ret = kni_fifo_get(kni->tx_q, (void **)mbufs, num);

    /* If buffers removed, allocate mbufs and then put them into alloc_q */
    if (ret)
        kni_allocate_mbufs(kni);

    return ret;
}
```

And here is the kernel's transmit function:

```c
static int kni_net_tx(struct sk_buff *skb, struct net_device *dev)
{
    int len = 0;
    uint32_t ret;
    struct kni_dev *kni = netdev_priv(dev);
    struct rte_kni_mbuf *pkt_kva = NULL;
    void *pkt_pa = NULL;
    void *pkt_va = NULL;

    ...

    /* dequeue a mbuf from alloc_q */
    ret = kni_fifo_get(kni->alloc_q, &pkt_pa, 1);
    if (likely(ret == 1)) {
        void *data_kva;

        pkt_kva = pa2kva(pkt_pa);
        data_kva = kva2data_kva(pkt_kva);
        pkt_va = pa2va(pkt_pa, pkt_kva);

        len = skb->len;
        memcpy(data_kva, skb->data, len);
        if (unlikely(len < ETH_ZLEN)) {
            memset(data_kva + len, 0, ETH_ZLEN - len);
            len = ETH_ZLEN;
        }
        pkt_kva->pkt_len = len;
        pkt_kva->data_len = len;

        /* enqueue mbuf into tx_q */
        ret = kni_fifo_put(kni->tx_q, &pkt_va, 1);
        if (unlikely(ret != 1)) {
            /* Failing should not happen */
            pr_err("Fail to enqueue mbuf into tx_q\n");
            goto drop;
        }
    } else {
        /* Failing should not happen */
        pr_err("Fail to dequeue mbuf from alloc_q\n");
        goto drop;
    }

    /* Free skb and update statistics */
    dev_kfree_skb(skb);
    kni->stats.tx_bytes += len;
    kni->stats.tx_packets++;

    return NETDEV_TX_OK;

drop:
    /* Free skb and update statistics */
    dev_kfree_skb(skb);
    kni->stats.tx_dropped++;

    return NETDEV_TX_OK;
}
```

Transmission is simple: an mbuf is taken from the pre-allocated alloc_q. What comes out of the queue is a physical address, which is converted to a kernel virtual address so that the skb contents can be copied in. The mbuf is then written to tx_q. Note that what gets enqueued is the user-space virtual address, so user space can use it directly after dequeuing.
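The copy step in kni_net_tx can be isolated into a small function. This is a sketch, not the driver's code: the function name is hypothetical, the constant mirrors the kernel's ETH_ZLEN (60, the minimum Ethernet frame length without FCS), and the capacity check is an addition of this sketch rather than something visible in the excerpt above.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define EXAMPLE_ETH_ZLEN 60   /* minimum Ethernet frame length, no FCS */

/* Copy a frame into a preallocated buffer of capacity cap, zero-padding
 * short frames up to the Ethernet minimum. Returns the length to record
 * as pkt_len/data_len, or 0 if the (padded) frame does not fit. */
static size_t copy_and_pad(uint8_t *dst, size_t cap,
                           const uint8_t *src, size_t len)
{
    assert(dst != NULL && src != NULL);
    size_t out = len < EXAMPLE_ETH_ZLEN ? EXAMPLE_ETH_ZLEN : len;
    if (out > cap)
        return 0;
    memcpy(dst, src, len);
    if (len < EXAMPLE_ETH_ZLEN)
        memset(dst + len, 0, EXAMPLE_ETH_ZLEN - len);
    return out;
}
```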

3.6 rte_kni_handle_request

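This function handles several kinds of requests: when an attribute of the KNI interface changes, it performs the corresponding action on the physical port: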

```c
int rte_kni_handle_request(struct rte_kni *kni)
{
    unsigned ret;
    struct rte_kni_request *req = NULL;

    if (kni == NULL)
        return -1;

    /* Get request mbuf */
    ret = kni_fifo_get(kni->req_q, (void **)&req, 1);
    if (ret != 1)
        return 0; /* no pending request */

    if (req != kni->sync_addr) {
        RTE_LOG(ERR, KNI, "Wrong req pointer %p\n", req);
        return -1;
    }

    /* Analyze the request and call the relevant actions for it */
    switch (req->req_id) {
    case RTE_KNI_REQ_CHANGE_MTU: /* Change MTU */
        if (kni->ops.change_mtu)
            req->result = kni->ops.change_mtu(kni->ops.port_id,
                                              req->new_mtu);
        break;
    case RTE_KNI_REQ_CFG_NETWORK_IF: /* Set network interface up/down */
        if (kni->ops.config_network_if)
            req->result = kni->ops.config_network_if(
                kni->ops.port_id, req->if_up);
        break;
    case RTE_KNI_REQ_CHANGE_MAC_ADDR: /* Change MAC Address */
        if (kni->ops.config_mac_address)
            req->result = kni->ops.config_mac_address(
                kni->ops.port_id, req->mac_addr);
        else if (kni->ops.port_id != UINT16_MAX)
            req->result = kni_config_mac_address(
                kni->ops.port_id, req->mac_addr);
        break;
    case RTE_KNI_REQ_CHANGE_PROMISC: /* Change PROMISCUOUS MODE */
        if (kni->ops.config_promiscusity)
            req->result = kni->ops.config_promiscusity(
                kni->ops.port_id, req->promiscusity);
        else if (kni->ops.port_id != UINT16_MAX)
            req->result = kni_config_promiscusity(
                kni->ops.port_id, req->promiscusity);
        break;
    default:
        RTE_LOG(ERR, KNI, "Unknown request id %u\n", req->req_id);
        req->result = -EINVAL;
        break;
    }

    /* Construct response mbuf and put it back to resp_q */
    ret = kni_fifo_put(kni->resp_q, (void **)&req, 1);
    if (ret != 1) {
        RTE_LOG(ERR, KNI, "Fail to put the muf back to resp_q\n");
        return -1; /* It is an error of can't putting the mbuf back */
    }

    return 0;
}
```

Overall it is simple: it uses the two remaining queues, req_q and resp_q, to serve requests, so that events on the KNI interface are forwarded to the physical port and the corresponding action is carried out. The request types are:

```c
enum rte_kni_req_id {
    RTE_KNI_REQ_UNKNOWN = 0,
    RTE_KNI_REQ_CHANGE_MTU,
    RTE_KNI_REQ_CFG_NETWORK_IF,
    RTE_KNI_REQ_CHANGE_MAC_ADDR,
    RTE_KNI_REQ_CHANGE_PROMISC,
    RTE_KNI_REQ_MAX,
};
```

On the kernel side, the driver sends these requests through kni_net_process_request:

```c
static int kni_net_process_request(struct kni_dev *kni, struct rte_kni_request *req)
{
    int ret = -1;
    void *resp_va;
    uint32_t num;
    int ret_val;

    if (!kni || !req) {
        pr_err("No kni instance or request\n");
        return -EINVAL;
    }

    mutex_lock(&kni->sync_lock);

    /* Construct data */
    memcpy(kni->sync_kva, req, sizeof(struct rte_kni_request));
    num = kni_fifo_put(kni->req_q, &kni->sync_va, 1);
    if (num < 1) {
        pr_err("Cannot send to req_q\n");
        ret = -EBUSY;
        goto fail;
    }

    /* Wait up to 3 seconds for user space to post a response */
    ret_val = wait_event_interruptible_timeout(kni->wq,
                                               kni_fifo_count(kni->resp_q), 3 * HZ);
    if (signal_pending(current) || ret_val <= 0) {
        ret = -ETIME;
        goto fail;
    }

    num = kni_fifo_get(kni->resp_q, (void **)&resp_va, 1);
    if (num != 1 || resp_va != kni->sync_va) {
        /* This should never happen */
        pr_err("No data in resp_q\n");
        ret = -ENODATA;
        goto fail;
    }

    memcpy(req, kni->sync_kva, sizeof(struct rte_kni_request));
    ret = 0;

fail:
    mutex_unlock(&kni->sync_lock);
    return ret;
}
```

The request is copied into the shared memory at kni->sync_kva (the kernel's view of it), while what is enqueued on req_q is the user-space address of that same memory, kni->sync_va:

```c
memcpy(kni->sync_kva, req, sizeof(struct rte_kni_request));
num = kni_fifo_put(kni->req_q, &kni->sync_va, 1);
```

Once you understand this, the rest is easy to follow.
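The whole handshake can be modeled in a single process. In the sketch below, which is a simplification and not the DPDK code, one shared request struct plays the role of the sync memory, two one-slot mailboxes stand in for req_q and resp_q, and the kernel side calls the user-space handler directly instead of sleeping on kni->wq. In KNI the two sides see the same memory through different addresses (sync_kva and sync_va); here both use one pointer. All names are hypothetical.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical, reduced request layout. */
struct example_req {
    unsigned req_id;
    unsigned new_mtu;
    int result;
};

enum { EXAMPLE_REQ_CHANGE_MTU = 1 };

/* One-slot mailbox standing in for req_q / resp_q. */
struct mailbox { void *slot; };

static int mb_put(struct mailbox *q, void *p)
{
    if (q->slot != NULL)
        return 0;                /* full */
    q->slot = p;
    return 1;
}

static void *mb_get(struct mailbox *q)
{
    void *p = q->slot;
    q->slot = NULL;
    return p;
}

static struct example_req sync_area;   /* the shared request memory */
static struct mailbox req_q, resp_q;

/* "User-space" side: validate the pointer, act on the request, reply. */
static int handle_request(int (*change_mtu)(unsigned))
{
    struct example_req *req = mb_get(&req_q);
    if (req == NULL)
        return 0;                /* nothing pending */
    if (req != &sync_area)
        return -1;               /* not the shared slot: reject */
    if (req->req_id == EXAMPLE_REQ_CHANGE_MTU && change_mtu != NULL)
        req->result = change_mtu(req->new_mtu);
    else
        req->result = -EINVAL;
    return mb_put(&resp_q, req) == 1 ? 0 : -1;
}

/* "Kernel" side: copy in, post, (here: run the handler), collect. */
static int process_request(struct example_req *req, int (*change_mtu)(unsigned))
{
    assert(req != NULL);
    sync_area = *req;
    if (!mb_put(&req_q, &sync_area))
        return -EBUSY;
    handle_request(change_mtu);  /* stands in for waiting on the other side */
    if (mb_get(&resp_q) != &sync_area)
        return -ENODATA;
    *req = sync_area;            /* copy the result back to the caller */
    return 0;
}

/* Example callback: accept MTUs of at least 68 bytes. */
static int fake_change_mtu(unsigned mtu)
{
    return mtu >= 68 ? 0 : -EINVAL;
}
```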

References

[1] DPDK Sample Applications User Guide (sample_app_ug-master)

[2] DPDK Programmer's Guide (prog_guide-master)