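A virtual camera can be implemented as a HAL plug-in, where HAL stands for "Hardware Abstraction Layer". (CoreMediaIO's own headers and the install path refer to these as DAL, "Device Abstraction Layer", plug-ins.) A HAL plug-in is built mainly on CoreMediaIO.framework, which provides the structure definitions and interface declarations for HAL plug-ins. All we have to do is declare and implement the required interfaces according to its conventions.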

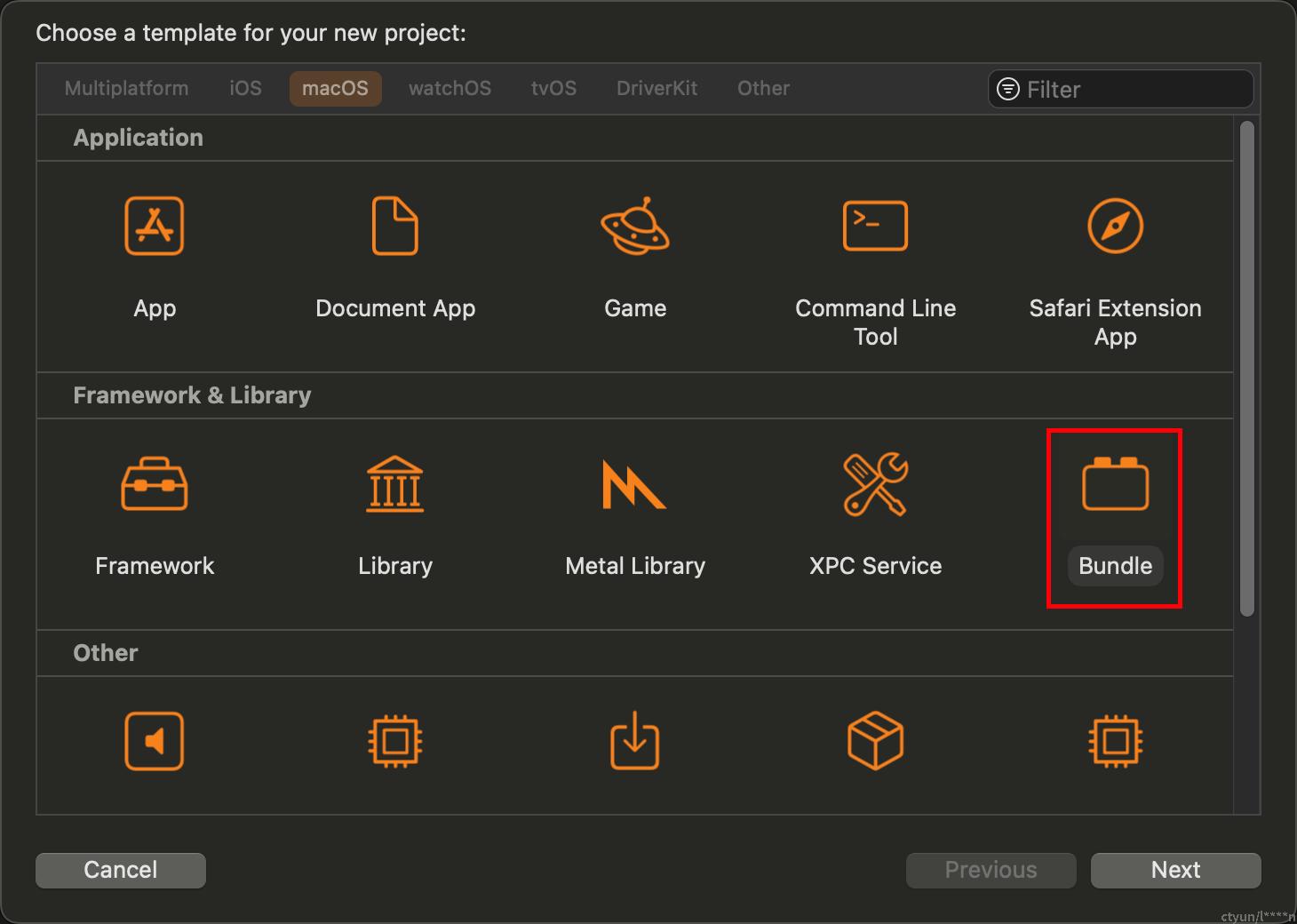

1. First, create a project of type "Bundle" (HAL plug-ins are always packaged as bundles):

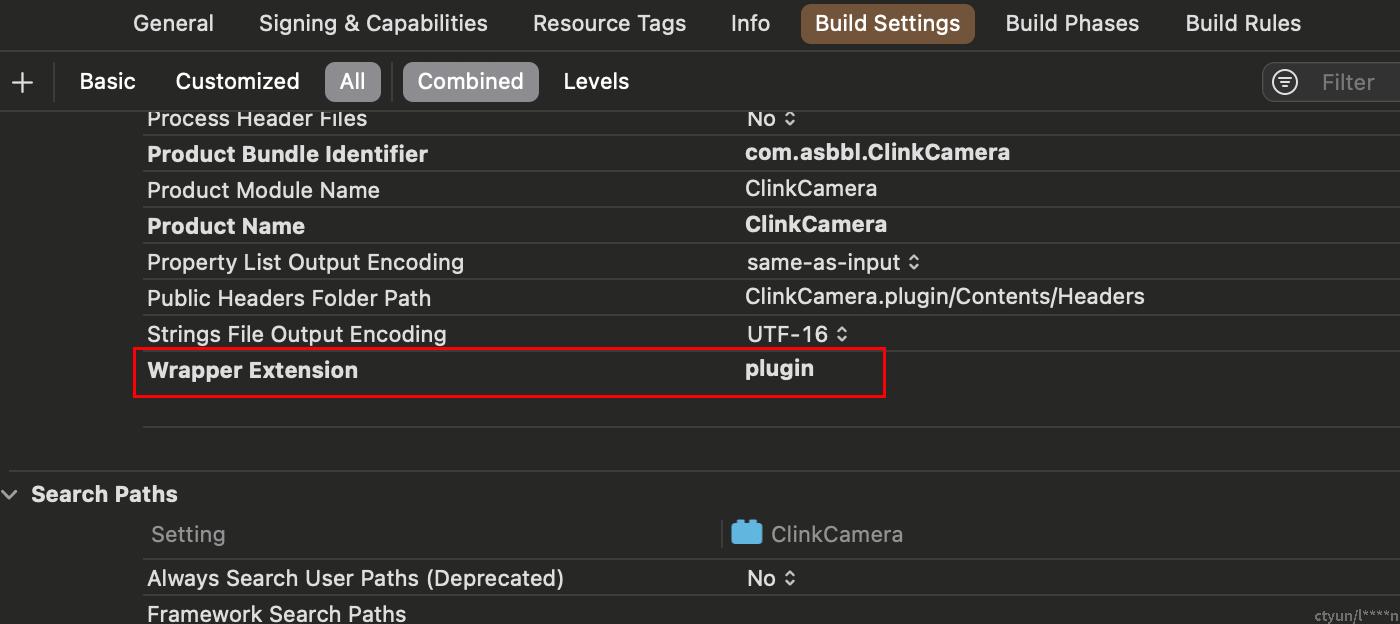

2. Change "Wrapper Extension" to "plugin" (HAL plug-in bundles always use the .plugin extension):

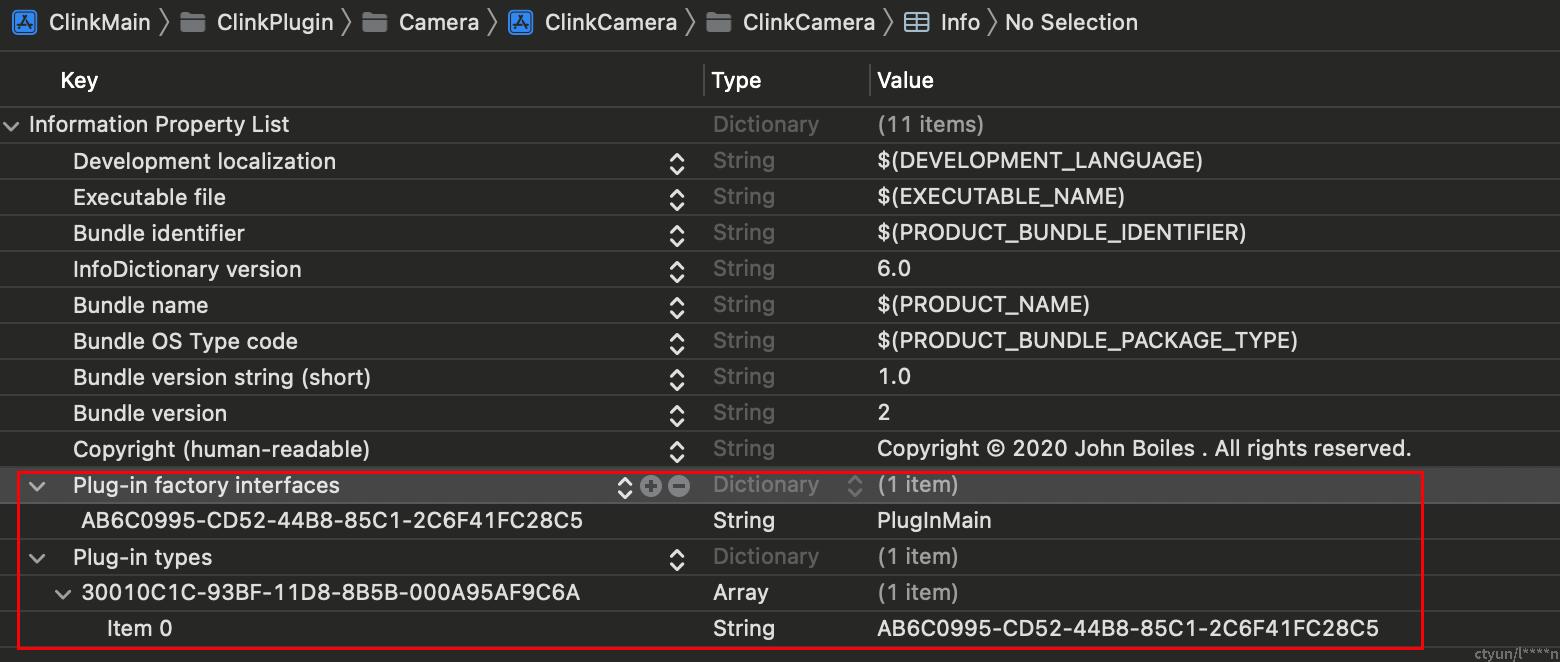

3. Next, use a custom Info.plist file and configure the plug-in's entry function:

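The key under "Plug-in factory interfaces" (raw key CFPlugInFactories) is a randomly generated UUID string, and its value is the name of the entry function. The entry function must have C linkage, for example: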

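```cpp
extern "C" {

// Factory function named in Info.plist. CFPlugIn calls it with the plug-in type the host is requesting.
void* PlugInMain(CFAllocatorRef allocator, CFUUIDRef requestedTypeUUID) {
    // Only create an instance for the CoreMediaIO hardware plug-in type
    if (!CFEqual(requestedTypeUUID, kCMIOHardwarePlugInTypeID)) {
        return nullptr;
    }
    return PlugInRef();
}

}
```

"Plug-in types" (raw key CFPlugInTypes) declares the type of the HAL plug-in. Its key is fixed: the UUID that kCMIOHardwarePlugInTypeID stands for, as defined in <CoreMediaIO/CMIOHardwarePlugin.h>. Its value is the UUID associated with the entry function, i.e. the same UUID used as the key under "Plug-in factory interfaces".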

4. Implement the plug-in interface

We are implementing a streaming media device plug-in, so the interface table we need to fill in is as follows:

```cpp
static CMIOHardwarePlugInInterface sInterface = {
    // Padding for COM
    NULL,

    // IUnknown Routines
    (HRESULT (*)(void*, CFUUIDBytes, void**))HardwarePlugIn_QueryInterface,
    (ULONG (*)(void*))HardwarePlugIn_AddRef,
    (ULONG (*)(void*))HardwarePlugIn_Release,

    // DAL Plug-In Routines
    HardwarePlugIn_Initialize,
    HardwarePlugIn_InitializeWithObjectID,
    HardwarePlugIn_Teardown,
    HardwarePlugIn_ObjectShow,
    HardwarePlugIn_ObjectHasProperty,
    HardwarePlugIn_ObjectIsPropertySettable,
    HardwarePlugIn_ObjectGetPropertyDataSize,
    HardwarePlugIn_ObjectGetPropertyData,
    HardwarePlugIn_ObjectSetPropertyData,
    HardwarePlugIn_DeviceSuspend,
    HardwarePlugIn_DeviceResume,
    HardwarePlugIn_DeviceStartStream,
    HardwarePlugIn_DeviceStopStream,
    HardwarePlugIn_DeviceProcessAVCCommand,
    HardwarePlugIn_DeviceProcessRS422Command,
    HardwarePlugIn_StreamCopyBufferQueue,
    HardwarePlugIn_StreamDeckPlay,
    HardwarePlugIn_StreamDeckStop,
    HardwarePlugIn_StreamDeckJog,
    HardwarePlugIn_StreamDeckCueTo
};
```
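The table references HardwarePlugIn_AddRef and HardwarePlugIn_Release, whose bodies are not shown here. Below is a minimal sketch of the COM-style reference counting they are expected to perform, assuming a single process-wide counter like the sRefCount used in 4.1. The real routines take the plug-in pointer as their only argument and return ULONG; to stay compilable without CoreMediaIO, this sketch drops the argument, uses uint32_t, and uses the hypothetical names PlugInAddRef and PlugInRelease.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the plug-in's sRefCount. Atomic because the host
// may call AddRef/Release from different threads.
static std::atomic<uint32_t> gRefCount{0};

// IUnknown AddRef contract: increment, then return the new count.
uint32_t PlugInAddRef() {
    uint32_t previous = gRefCount.fetch_add(1);
    assert(previous != UINT32_MAX && "reference count overflow");
    return previous + 1;
}

// IUnknown Release contract: decrement, then return the new count.
// The compare-and-swap loop refuses to go below zero, so an unbalanced
// Release cannot wrap the counter around to UINT32_MAX.
uint32_t PlugInRelease() {
    uint32_t current = gRefCount.load();
    while (current > 0) {
        if (gRefCount.compare_exchange_weak(current, current - 1)) {
            return current - 1;
        }
        // On failure compare_exchange_weak reloads `current`; retry.
    }
    return 0;
}
```

For example, two PlugInAddRef() calls followed by one PlugInRelease() leave the count at 1. When the count returns to 0, a real plug-in would be the place to tear down its objects.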

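4.1 HardwarePlugIn_QueryInterface: this routine is called when a third-party application loads our plug-in. It must return our plug-in implementation and increment the plug-in's reference count by one: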

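```cpp
// Body of HardwarePlugIn_QueryInterface(void* self, CFUUIDBytes uuid, void** interface)
*interface = NULL;

// Turn the requested interface ID bytes into a CFUUIDRef so it can be compared directly
CFUUIDRef requestedUuid = CFUUIDCreateFromUUIDBytes(kCFAllocatorDefault, uuid);
bool isHardwarePlugIn = CFEqual(requestedUuid, kCMIOHardwarePlugInInterfaceID);
CFRelease(requestedUuid);  // the Create call returned a +1 reference

if (isHardwarePlugIn) {
    // Handing out the interface counts as a new reference
    sRefCount += 1;
    *interface = PlugInRef();
    return S_OK;
}

return E_NOINTERFACE;
```

4.2 HardwarePlugIn_InitializeWithObjectID: the initialization routine. This is where initialization happens; the key steps are creating the Device and Stream objects and publishing them: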

```objc
OSStatus error = kCMIOHardwareNoError;

// Create the device object under the system object
CMIOObjectID deviceId;
error = CMIOObjectCreate(PlugInRef(), kCMIOObjectSystemObject, kCMIODeviceClassID, &deviceId);
if (error != kCMIOHardwareNoError) {
    DLog(@"CMIOObjectCreate Error %d", error);
    return error;
}

// Create the media stream object owned by the device
CMIOObjectID streamId;
error = CMIOObjectCreate(PlugInRef(), deviceId, kCMIOStreamClassID, &streamId);
if (error != kCMIOHardwareNoError) {
    DLog(@"CMIOObjectCreate Error %d", error);
    return error;
}

// Publish the device object
error = CMIOObjectsPublishedAndDied(PlugInRef(), kCMIOObjectSystemObject, 1, &deviceId, 0, NULL);
if (error != kCMIOHardwareNoError) {
    DLog(@"CMIOObjectsPublishedAndDied plugin/device Error %d", error);
    return error;
}

// Publish the media stream object
error = CMIOObjectsPublishedAndDied(PlugInRef(), deviceId, 1, &streamId, 0, NULL);
if (error != kCMIOHardwareNoError) {
    DLog(@"CMIOObjectsPublishedAndDied device/stream Error %d", error);
    return error;
}
```

Next come the stream-related routines:

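4.3 HardwarePlugIn_StreamCopyBufferQueue: returns the buffer queue of frame data, from which third-party applications read frames;

4.4 HardwarePlugIn_DeviceStartStream: starts streaming; this is where we begin generating the video stream and pushing its frames into the buffer queue;

4.5 HardwarePlugIn_DeviceStopStream: stops streaming.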
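The queue that 4.3 hands out and 4.4 fills is a CMSimpleQueue sized to one second of frames (FPS entries, as the Stream class below creates it), and the producer drops new frames once it is full. The sketch below models only that capacity policy in portable C++ so the arithmetic can be checked without CoreMedia. FrameQueue and its methods are hypothetical names, not CoreMedia APIs; a real plug-in keeps using CMSimpleQueue.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical model of the capacity/fullness policy applied to CMSimpleQueue:
// capacity is one second of frames, and new frames are dropped when full.
template <typename Frame>
class FrameQueue {
public:
    explicit FrameQueue(std::size_t fps) : capacity_(fps) {
        assert(fps > 0 && "capacity must be positive");
    }

    // Element count divided by capacity, the ratio CMSimpleQueueGetFullness reports
    double fullness() const {
        return static_cast<double>(frames_.size()) / static_cast<double>(capacity_);
    }

    // Producer side (the frame generator started in DeviceStartStream):
    // returns false and drops the frame if the consumer is a full second behind.
    bool enqueue(const Frame& frame) {
        if (fullness() >= 1.0) {
            return false;
        }
        frames_.push_back(frame);
        return true;
    }

    // Consumer side (the client application): frames come out in FIFO order.
    bool dequeue(Frame& out) {
        if (frames_.empty()) {
            return false;
        }
        out = frames_.front();
        frames_.pop_front();
        return true;
    }

private:
    std::size_t capacity_;
    std::deque<Frame> frames_;
};
```

Dropping at the producer keeps latency bounded: a slow client sees at most one second of stale frames instead of an ever-growing backlog. This model is not thread-safe; it only illustrates the policy.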

All of the stream-related routines can be wrapped in a separate Stream class:

```objc
// Frame data queue
- (CMSimpleQueueRef)queue
{
    if (_queue == NULL) {
        // Allocate a one-second long queue, which we can use our FPS constant for.
        OSStatus err = CMSimpleQueueCreate(kCFAllocatorDefault, FPS, &_queue);
        if (err != noErr) {
            DLog(@"Err %d in CMSimpleQueueCreate", err);
        }
    }
    return _queue;
}

// Hands the queue to the client application; alteredProc is used to notify it of changes
- (CMSimpleQueueRef)copyBufferQueueWithAlteredProc:(CMIODeviceStreamQueueAlteredProc)alteredProc alteredRefCon:(void *)alteredRefCon
{
    self.alteredProc = alteredProc;
    self.alteredRefCon = alteredRefCon;

    // Retain this since it's a copy operation
    CFRetain(self.queue);
    return self.queue;
}

// Push one video frame into the queue
- (void)queueFrameWithSize:(NSSize)size timestamp:(uint64_t)timestamp fpsNumerator:(uint32_t)fpsNumerator fpsDenominator:(uint32_t)fpsDenominator frameData:(NSData *)frameData rowBytes:(uint32_t)rowBytes
{
    // Drop the frame if the client has not drained the queue
    if (CMSimpleQueueGetFullness(self.queue) >= 1.0) {
        DLog(@"Queue is full, bailing out");
        return;
    }

    CMSampleTimingInfo timingInfo = ClinkCMSampleTimingInfoForTimestamp(timestamp, fpsNumerator, fpsDenominator);

    OSStatus err = CMIOStreamClockPostTimingEvent(timingInfo.presentationTimeStamp, mach_absolute_time(), true, self.clock);
    if (err != noErr) {
        DLog(@"CMIOStreamClockPostTimingEvent err %d", err);
    }

    self.sequenceNumber = CMIOGetNextSequenceNumber(self.sequenceNumber);

    // Start from NULL so a failed creation can be detected
    CMSampleBufferRef sampleBuffer = NULL;
    ClinkCMSampleBufferCreateFromData(size, timingInfo, self.sequenceNumber, frameData, rowBytes, &sampleBuffer);
    if (sampleBuffer == NULL) {
        DLog(@"Failed to create sample buffer");
        return;
    }

    // CMSimpleQueue does not retain its elements, so the +1 reference passes to
    // the client that dequeues it; release it here if enqueueing fails
    err = CMSimpleQueueEnqueue(self.queue, sampleBuffer);
    if (err != noErr) {
        DLog(@"CMSimpleQueueEnqueue err %d", err);
        CFRelease(sampleBuffer);
        return;
    }

    // Inform the clients that the queue has been altered
    if (self.alteredProc != NULL) {
        (self.alteredProc)(self.objectId, sampleBuffer, self.alteredRefCon);
    }
}
```

There are also several stream-related properties that can be set as needed:

```objc
// All supported video formats (resolution, pixel format)
- (NSArray *)getFormatDescriptions {
    NSMutableArray *array = [NSMutableArray array];
    for (int i = 0; i < r_count; i++) {
        uint32_t w = r_width[i];
        uint32_t h = r_height[i];
        CMVideoFormatDescriptionRef formatDescription = NULL;
        OSStatus err = CMVideoFormatDescriptionCreate(kCFAllocatorDefault, kCMVideoCodecType_422YpCbCr8, w, h, NULL, &formatDescription);
        if (err != noErr) {
            DLog(@"Error %d from CMVideoFormatDescriptionCreate", err);
        } else {
            // __bridge_transfer hands the +1 reference from Create to ARC, so the array owns it
            [array addObject:(__bridge_transfer id)formatDescription];
        }
    }
    return [array copy];
}

// The currently selected video format; the caller owns the returned (+1) reference
- (CMVideoFormatDescriptionRef)getFormatDescription {
    uint32_t width = DEFAULT_WIDTH;
    uint32_t height = DEFAULT_HEIGHT;
    @synchronized (self) {
        width = self.width;
        height = self.height;
    }
    CMVideoFormatDescriptionRef formatDescription = NULL;
    OSStatus err = CMVideoFormatDescriptionCreate(kCFAllocatorDefault, kCMVideoCodecType_422YpCbCr8, width, height, NULL, &formatDescription);
    if (err != noErr) {
        DLog(@"Error %d from CMVideoFormatDescriptionCreate", err);
    }
    return formatDescription;
}

// Called by client applications to query property values
- (void)getPropertyDataWithAddress:(CMIOObjectPropertyAddress)address qualifierDataSize:(UInt32)qualifierDataSize qualifierData:(nonnull const void *)qualifierData dataSize:(UInt32)dataSize dataUsed:(nonnull UInt32 *)dataUsed data:(nonnull void *)data
{
    switch (address.mSelector) {
        case kCMIOObjectPropertyName:
            *static_cast<CFStringRef*>(data) = CFSTR(kStream_Name);
            *dataUsed = sizeof(CFStringRef);
            break;
        case kCMIOObjectPropertyElementName:
            *static_cast<CFStringRef*>(data) = CFSTR(kStreamElement_Name);
            *dataUsed = sizeof(CFStringRef);
            break;
        case kCMIOObjectPropertyManufacturer:
        case kCMIOObjectPropertyElementCategoryName:
        case kCMIOObjectPropertyElementNumberName:
        case kCMIOStreamPropertyTerminalType:
        case kCMIOStreamPropertyStartingChannel:
        case kCMIOStreamPropertyLatency:
        case kCMIOStreamPropertyInitialPresentationTimeStampForLinkedAndSyncedAudio:
        case kCMIOStreamPropertyOutputBuffersNeededForThrottledPlayback:
            DLog(@"TODO: %@", [ClinkStore StringFromPropertySelector:address.mSelector]);
            *dataUsed = 0;
            break;
        case kCMIOStreamPropertyDirection:
            // 1 = input stream (0 would mean output)
            *static_cast<UInt32*>(data) = 1;
            *dataUsed = sizeof(UInt32);
            break;
        case kCMIOStreamPropertyFormatDescriptions:
            *static_cast<CFArrayRef*>(data) = (__bridge_retained CFArrayRef)[self getFormatDescriptions];
            *dataUsed = sizeof(CFArrayRef);
            break;
        case kCMIOStreamPropertyFormatDescription:
            *static_cast<CMVideoFormatDescriptionRef*>(data) = [self getFormatDescription];
            *dataUsed = sizeof(CMVideoFormatDescriptionRef);
            break;
        case kCMIOStreamPropertyFrameRateRanges: {
            // A single fixed rate: minimum and maximum are both FPS
            AudioValueRange range;
            range.mMinimum = FPS;
            range.mMaximum = FPS;
            *static_cast<AudioValueRange*>(data) = range;
            *dataUsed = sizeof(AudioValueRange);
            break;
        }
        case kCMIOStreamPropertyFrameRate:
        case kCMIOStreamPropertyFrameRates:
            *static_cast<Float64*>(data) = FPS;
            *dataUsed = sizeof(Float64);
            break;
        case kCMIOStreamPropertyMinimumFrameRate:
            *static_cast<Float64*>(data) = FPS;
            *dataUsed = sizeof(Float64);
            break;
        case kCMIOStreamPropertyClock:
            *static_cast<CFTypeRef*>(data) = self.clock;
            // This one was incredibly tricky and cost me many hours to find. It seems that DAL expects
            // the clock to be retained when returned. It's unclear why, and that seems inconsistent
            // with other properties that don't have the same behavior. But this is what Apple's sample
            // code does.
            // https://github.com/lvsti/CoreMediaIO-DAL-Example/blob/0392cb/Sources/Extras/CoreMediaIO/DeviceAbstractionLayer/Devices/DP/Properties/CMIO_DP_Property_Clock.cpp#L75
            CFRetain(*static_cast<CFTypeRef*>(data));
            *dataUsed = sizeof(CFTypeRef);
            break;
        default:
            DLog(@"Stream unhandled getPropertyDataWithAddress for %@", [ClinkStore StringFromPropertySelector:address.mSelector]);
            *dataUsed = 0;
            break;
    }
}
```

At this point the main parts of the HAL plug-in are complete; all that remains is to put the image content you want into the video frame queue.
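The frames pushed into the queue are raw pixels in the format advertised above, kCMVideoCodecType_422YpCbCr8 ('2vuy'), where every two horizontally adjacent pixels share one 4-byte group ordered Cb, Y0, Cr, Y1. Below is a minimal sketch that fills such a buffer with a solid color while honoring rowBytes, i.e. the kind of data queueFrameWithSize:... receives as frameData. FillUYVY is a hypothetical helper, and the layout assumptions (even width, rowBytes of at least width * 2) are checked with asserts.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: fill a '2vuy' (UYVY, 4:2:2) frame with one solid color.
// Each 4-byte group encodes two adjacent pixels as Cb Y0 Cr Y1.
// rowBytes may exceed width * 2; the padding bytes at the end of each row stay zero.
std::vector<uint8_t> FillUYVY(uint32_t width, uint32_t height, uint32_t rowBytes,
                              uint8_t y, uint8_t cb, uint8_t cr) {
    assert(width % 2 == 0 && "4:2:2 pairs pixels, so width must be even");
    assert(rowBytes >= width * 2 && "each pixel needs 2 bytes");

    std::vector<uint8_t> frame(static_cast<std::size_t>(rowBytes) * height, 0);
    for (uint32_t row = 0; row < height; ++row) {
        uint8_t* line = frame.data() + static_cast<std::size_t>(row) * rowBytes;
        for (uint32_t x = 0; x < width; x += 2) {
            uint8_t* group = line + x * 2;  // 2 pixels -> 4 bytes
            group[0] = cb;
            group[1] = y;   // luma of pixel x
            group[2] = cr;
            group[3] = y;   // luma of pixel x + 1
        }
    }
    return frame;
}
```

For example, Y = 235 with Cb = Cr = 128 produces video-range white. The resulting bytes could then be wrapped in an NSData and passed as frameData together with the same rowBytes.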

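5. Build and install

Building the project produces a .plugin bundle. Once the bundle is placed in "/Library/CoreMediaIO/Plug-Ins/DAL/", third-party applications can use it.

Note that after installation, third-party applications must be restarted before they pick up the plug-in.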