1 Download the training scripts

git clone xxxxx://github.com/LlamaFamily/Llama-Chinese.git

cd Llama-Chinese

2 Install dependencies

pip3 install torch bitsandbytes accelerate numpy gekko pandas protobuf scipy packaging sentencepiece datasets evaluate pytest==7.4.3 peft transformers scikit-learn torchvision torchaudio tensorboard gradio flash_attn deepspeed==0.13.1

- Why pin deepspeed==0.13.1? It avoids the following error (xxxxx://github.com/huggingface/transformers/issues/29157):

raise TypeError(f'Object of type {o.__class__.__name__} '

TypeError: Object of type Tensor is not JSON serializable

- Why pin pytest==7.4.3? It avoids the following import error:

Traceback (most recent call last):

File "xxxx/Llama-Chinese-7B/LLM/Llama-Chinese/train/sft/finetune_clm_lora.py", line 65, in <module>

from transformers.testing_utils import CaptureLogger

File "xxxx/lib/python3.9/site-packages/transformers/testing_utils.py", line 131, in <module>

from _pytest.doctest import (

ImportError: cannot import name 'import_path' from '_pytest.doctest' (/usr/local/python3/lib/python3.9/site-packages/_pytest/doctest.py)

- For reference, these dependency versions are known to train successfully:

root@xxxx:/# pip list

Package Version

------------------------- -----------

absl-py 2.1.0

accelerate 0.27.2

aiofiles 23.2.1

aiohttp 3.9.3

aiosignal 1.3.1

altair 5.2.0

annotated-types 0.6.0

anyio 4.3.0

async-timeout 4.0.3

attrs 23.2.0

bitsandbytes 0.42.0

certifi 2024.2.2

charset-normalizer 3.3.2

click 8.1.7

colorama 0.4.6

contourpy 1.2.0

cycler 0.12.1

datasets 2.18.0

deepspeed 0.13.1

dill 0.3.8

einops 0.7.0

evaluate 0.4.1

exceptiongroup 1.2.0

fastapi 0.110.0

ffmpy 0.3.2

filelock 3.13.1

flash-attn 2.5.4

fonttools 4.49.0

frozenlist 1.4.1

fsspec 2024.2.0

gekko 1.0.6

gradio 4.19.2

gradio_client 0.10.1

grpcio 1.62.0

h11 0.14.0

hjson 3.1.0

httpcore 1.0.4

httpx 0.27.0

huggingface-hub 0.21.3

idna 3.6

importlib-metadata 7.0.1

importlib_resources 6.1.2

iniconfig 2.0.0

Jinja2 3.1.3

joblib 1.3.2

jsonschema 4.21.1

jsonschema-specifications 2023.12.1

kiwisolver 1.4.5

Markdown 3.5.2

markdown-it-py 3.0.0

MarkupSafe 2.1.5

matplotlib 3.8.3

mdurl 0.1.2

mpmath 1.3.0

multidict 6.0.5

multiprocess 0.70.16

networkx 3.2.1

ninja 1.11.1.1

numpy 1.26.4

nvidia-cublas-cu12 12.1.3.1

nvidia-cuda-cupti-cu12 12.1.105

nvidia-cuda-nvrtc-cu12 12.1.105

nvidia-cuda-runtime-cu12 12.1.105

nvidia-cudnn-cu12 8.9.2.26

nvidia-cufft-cu12 11.0.2.54

nvidia-curand-cu12 10.3.2.106

nvidia-cusolver-cu12 11.4.5.107

nvidia-cusparse-cu12 12.1.0.106

nvidia-nccl-cu12 2.19.3

nvidia-nvjitlink-cu12 12.3.101

nvidia-nvtx-cu12 12.1.105

orjson 3.9.15

packaging 23.2

pandas 2.2.1

peft 0.9.0

pillow 10.2.0

pip 24.0

pluggy 1.4.0

protobuf 4.25.3

psutil 5.9.8

py-cpuinfo 9.0.0

pyarrow 15.0.0

pyarrow-hotfix 0.6

pydantic 2.6.3

pydantic_core 2.16.3

pydub 0.25.1

Pygments 2.17.2

pynvml 11.5.0

pyparsing 3.1.1

pytest 7.4.3

python-dateutil 2.9.0.post0

python-multipart 0.0.9

pytz 2024.1

PyYAML 6.0.1

referencing 0.33.0

regex 2023.12.25

requests 2.31.0

responses 0.18.0

rich 13.7.1

rpds-py 0.18.0

ruff 0.3.0

safetensors 0.4.2

scikit-learn 1.4.1.post1

scipy 1.12.0

semantic-version 2.10.0

sentencepiece 0.2.0

setuptools 58.1.0

shellingham 1.5.4

six 1.16.0

sniffio 1.3.1

starlette 0.36.3

sympy 1.12

tensorboard 2.16.2

tensorboard-data-server 0.7.2

threadpoolctl 3.3.0

tokenizers 0.15.2

tomli 2.0.1

tomlkit 0.12.0

toolz 0.12.1

torch 2.2.1

torchaudio 2.2.1

torchvision 0.17.1

tqdm 4.66.2

transformers 4.38.2

triton 2.2.0

typer 0.9.0

typing_extensions 4.10.0

tzdata 2024.1

urllib3 2.2.1

uvicorn 0.27.1

websockets 11.0.3

Werkzeug 3.0.1

xxhash 3.4.1

yarl 1.9.4

zipp 3.17.0
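
The two pins discussed above (deepspeed 0.13.1 and pytest 7.4.3) can be checked against the current environment before training. The following is a minimal sketch using only the standard library; the names `PINNED`, `installed_version`, and `find_mismatches` are illustrative and not part of the Llama-Chinese repository. More rows from the reference list can be added to `PINNED` as needed.

```python
from importlib import metadata

# Pins taken from the install command above; this dict is an illustrative
# helper, not something the training scripts read.
PINNED = {
    "deepspeed": "0.13.1",
    "pytest": "7.4.3",
}


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pinned, lookup=installed_version):
    """Return {package: (expected, found)} for every pin that is not satisfied."""
    mismatches = {}
    for package, expected in pinned.items():
        found = lookup(package)
        if found != expected:
            mismatches[package] = (expected, found)
    return mismatches


if __name__ == "__main__":
    problems = find_mismatches(PINNED)
    for package, (expected, found) in problems.items():
        print(f"{package}: expected {expected}, found {found or 'not installed'}")
    if not problems:
        print("All pinned packages match.")
```

Run it with the same interpreter that pip3 installs into, otherwise the reported versions may come from a different environment.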

3 Make the Python environment take effect

vi /etc/profile

Add export PATH=$PATH:/usr/local/python3/bin/ to /etc/profile

Apply the change: source /etc/profile

- Why is this needed? The dependencies are installed, but the shell still reports the command as not found:

- finetune_lora.sh: 7: deepspeed: not found

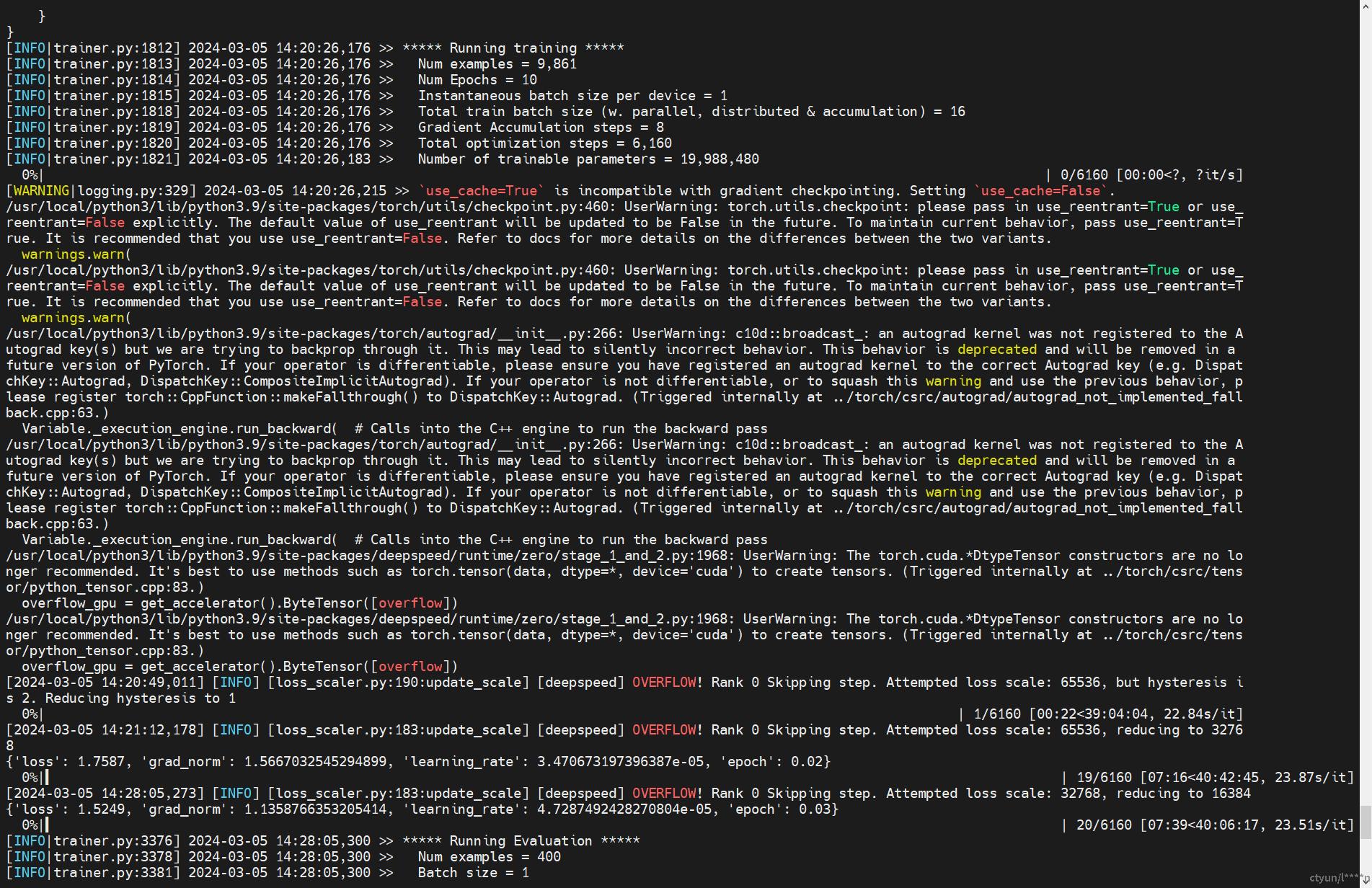
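
To tell apart "deepspeed is not installed" from "deepspeed is installed but its directory is not on PATH", a small check like the one below can help. It is a sketch under the assumption that pip placed the launcher in /usr/local/python3/bin, the directory added to /etc/profile above; `find_command` is a hypothetical helper name.

```python
import os
import shutil


def find_command(name, extra_dir=None):
    """Return the full path of `name`, also searching `extra_dir` if given."""
    parts = [p for p in (os.environ.get("PATH", ""), extra_dir) if p]
    return shutil.which(name, path=os.pathsep.join(parts))


if __name__ == "__main__":
    # Assumed install location, taken from the PATH line added to /etc/profile.
    python_bin = "/usr/local/python3/bin"
    on_path = find_command("deepspeed")
    if on_path:
        print("deepspeed is on PATH:", on_path)
    elif find_command("deepspeed", extra_dir=python_bin):
        print(f"deepspeed exists in {python_bin}, but that directory is not on PATH")
    else:
        print("deepspeed was not found; check that pip installed it")
```

If the second message appears, the PATH change has not been applied in the current shell yet, so source /etc/profile again or open a new session.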

5 Start training (before starting, make sure nvidia-smi can display the GPU information)

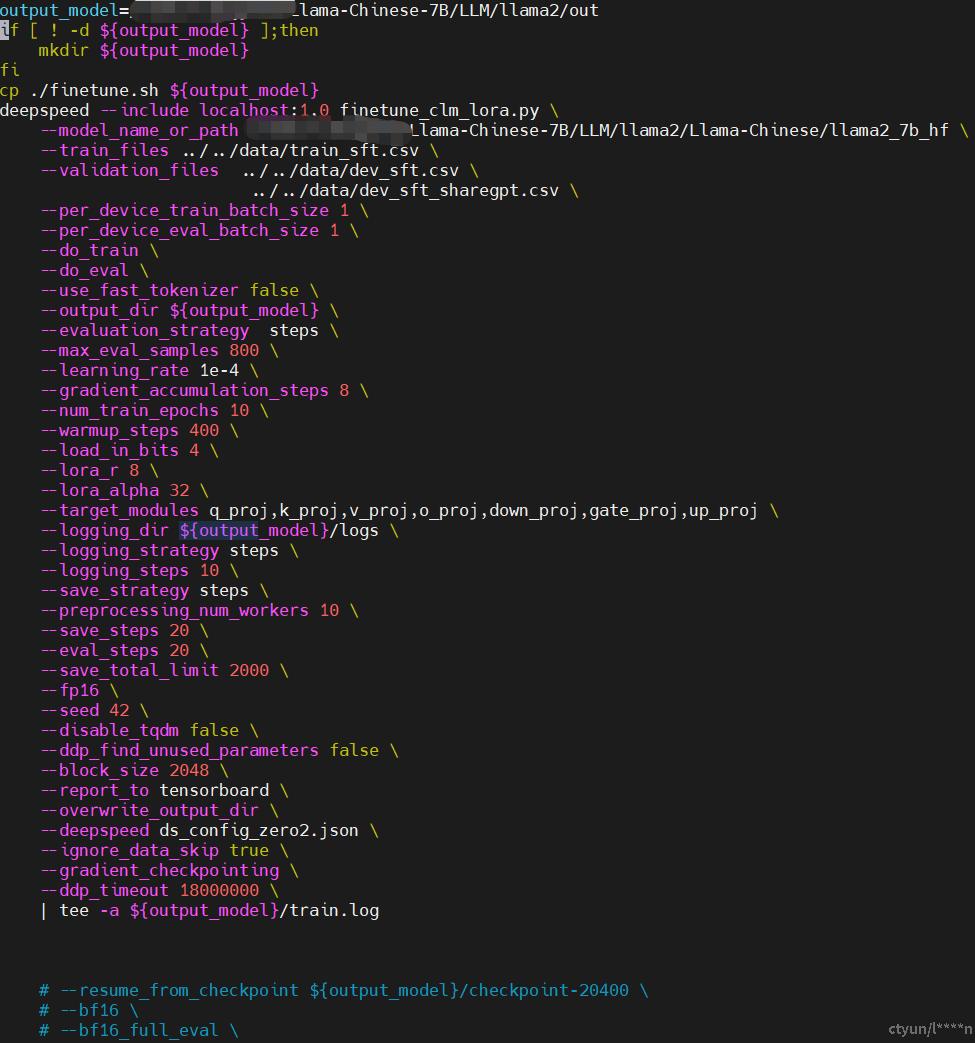
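
The nvidia-smi check can also be scripted so that a launch fails early when no GPU is visible. This is a minimal sketch, assuming nvidia-smi is on PATH when the driver is installed; `list_gpus` is an illustrative name, and the `-L` option asks nvidia-smi to list the GPUs it can see.

```python
import shutil
import subprocess


def list_gpus():
    """Return one line per GPU reported by `nvidia-smi -L`, or [] if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"],
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return [line for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_gpus()
    if gpus:
        for line in gpus:
            print(line)
    else:
        print("No GPU visible through nvidia-smi; fix the driver before training.")
```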

Edit the training script: vi xxxx/Llama-Chinese-7B/LLM/Llama-Chinese/train/sft/finetune_lora.sh (set the output path and the model file path, and enable fp16 training)

Start training: sh finetune_lora.sh