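1. Offline Environment Setup

See the companion article in this LLaMA2 training series, "Chinese LLaMA2 Pre-training and Instruction Fine-tuning in Practice: Offline Environment Setup".

2. Preparation Before Training

(1) Installing the llama2 runtime dependencies offline

- Set up a local Docker environment

- The basic commands below are enough to work freely inside a Docker container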

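# Pull the CentOS image

docker pull centos

# Start a container

docker run -it centos /bin/bash

# Exit the container

exit

# Start a stopped container (look up its ID first)

docker ps -a

docker start <container ID>

# Run in the background

docker run -itd --name centos-test centos /bin/bash

# Stop a container

docker stop <container ID>

# Restart a container

docker restart <container ID>

# Enter a running container

# With attach, typing exit stops the container

docker attach <container ID>

# With exec, typing exit leaves the container running

docker exec -it <container ID> /bin/bash

# Export and import containers

# Export a container

docker export <container ID> > centos.tar

# Import a container snapshot

cat docker/centos.tar | docker import - test/centos:v1

# Remove a container

docker rm -f <container ID>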
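The export and import commands are what move a prepared container from the local machine to another host. A minimal sketch of wrapping them in two functions follows. The function names, the `DOCKER_BIN` override (there only so the commands can be previewed with `echo`), and the file names are assumptions for illustration, not part of the original walkthrough.

```shell
#!/usr/bin/env bash
# Sketch: snapshot a container to a tar file and re-import it elsewhere.
# DOCKER_BIN is a hypothetical override; set DOCKER_BIN=echo to preview
# the commands instead of running them.
DOCKER_BIN="${DOCKER_BIN:-docker}"

# export_container <container-id> <tar-file>
export_container() {
    local container_id="$1" tar_file="$2"
    # `docker export -o FILE ID` writes the container filesystem to FILE.
    "$DOCKER_BIN" export -o "$tar_file" "$container_id"
}

# import_snapshot <tar-file> <image:tag>
import_snapshot() {
    local tar_file="$1" image_tag="$2"
    # `docker import FILE REPOSITORY:TAG` creates an image from the snapshot.
    "$DOCKER_BIN" import "$tar_file" "$image_tag"
}
```

A typical use would be `export_container <container ID> centos.tar` on the source machine, copying the tar file across, and then `import_snapshot centos.tar test/centos:v1` on the target.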

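- Configure yum

- yum is the package manager on CentOS; it automates installing, updating, and removing software. The sed commands below switch the repositories from the mirror list to vault.centos.org

cd /etc/yum.repos.d/

sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*

sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*

yum makecache

yum update -y

# Install vim

yum -y install vim

# Install git

yum -y install git

# Install wget, a free tool for downloading files from the network

yum -y install wget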
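To see what the repository rewrite does before touching `/etc/yum.repos.d`, the two substitutions can be tried on a scratch file first. The sketch below assumes the stock repo files use `http://mirror.centos.org` URLs; the sample repo entry is a simplified stand-in, not copied from a real system.

```shell
#!/usr/bin/env bash
# Sketch: preview the repo rewrite on a scratch file instead of /etc/yum.repos.d.
# The sample content is an assumed, simplified CentOS repo entry.
scratch_dir="$(mktemp -d)"
repo_file="$scratch_dir/CentOS-Sample.repo"

cat > "$repo_file" <<'EOF'
[baseos]
name=CentOS Linux $releasever - BaseOS
mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=BaseOS
#baseurl=http://mirror.centos.org/$contentdir/$releasever/BaseOS/$basearch/os/
enabled=1
EOF

# Comment out the mirrorlist line, then enable a baseurl that points at the vault.
sed -i 's/mirrorlist/#mirrorlist/g' "$repo_file"
sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' "$repo_file"

# Show the rewritten file for inspection.
cat "$repo_file"
```

If the printed file shows a commented-out `mirrorlist` line and an active `baseurl` on vault.centos.org, the same two commands should be safe to run against the real repo files.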

- Download and build Python

wget https://www.python.org/ftp/python/3.10.5/Python-3.10.5.tgz

yum install -y gcc patch libffi-devel zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gdbm-devel xz-devel

tar -zxf Python-3.10.5.tgz

cd Python-3.10.5

./configure --prefix=/usr/local/python3/

yum -y install make

make && make install

# Add Python to the PATH: open /etc/profile and append the line below

vi /etc/profile

export PATH=/usr/local/python3/bin:$PATH

# After saving, reload the profile

source /etc/profile
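When the setup is scripted rather than typed by hand, the PATH line can be appended without opening an editor. The sketch below is one way to do that; `add_path_line` is a hypothetical helper name, and it adds the line only if an identical one is not already present.

```shell
#!/usr/bin/env bash
# Sketch: append a PATH entry to a profile file only if it is not already there.
# add_path_line is a hypothetical helper; the walkthrough edits /etc/profile with vi.

# add_path_line <profile-file> <bin-directory>
add_path_line() {
    local profile_file="$1" bin_dir="$2"
    local line="export PATH=${bin_dir}:\$PATH"
    # grep -qxF: quiet, whole-line, fixed-string match, so reruns add nothing.
    if ! grep -qxF "$line" "$profile_file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$profile_file"
    fi
}
```

For example, `add_path_line /etc/profile /usr/local/python3/bin` followed by `source /etc/profile` would have the same effect as the manual edit.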

# Add symbolic links

ln -s /usr/local/python3/bin/python3 /usr/bin/python

ln -s /usr/local/python3/bin/pip3 /usr/bin/pip

python -V

# Output:

Python 3.10.5

- In the local Docker environment, download the dependencies llama2 needs and package them for migration

- Download the llama model code and install its dependencies

git clone https://github.com/facebookresearch/llama.git

cd llama

python -m pip install --upgrade pip -i https://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

pip install -e . -i https://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

pip install tensorboard -i https://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

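- Generate the dependency list and download the packages

# Generate the dependency list

pip freeze > requirements.txt

# Download the packages

pip download -r requirements.txt

- Pack the downloaded packages into an archive, upload it to the offline server and extract it under site-packages, then install from the downloaded packages and the dependency list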

pip install --no-index --find-links=/root/miniconda3/lib/python3.10/site-packages -r /root/miniconda3/lib/python3.10/site-packages/requirements.txt
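Before copying the archive to the offline server, it can help to confirm that every pinned requirement has a downloaded file. The sketch below is a rough check that compares file names only. `check_wheelhouse` and `fold_name` are hypothetical helper names, and the matching rules (plain `name==version` lines, `.whl` and `.tar.gz` files) are simplifying assumptions. Option lines such as the `-e ...` entry that `pip freeze` may emit for an editable install are skipped.

```shell
#!/usr/bin/env bash
# Sketch: report pinned requirements with no matching file in a download directory.
# Only file names are compared; nothing is installed or opened.

# Lowercase a name and fold runs of "-", "_" and "." into the given separator.
fold_name() {
    printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | sed -E "s/[-_.]+/$2/g"
}

# check_wheelhouse <requirements.txt> <download-dir>
# Prints each missing requirement; returns 1 if anything is missing.
check_wheelhouse() {
    local req_file="$1" wheel_dir="$2" missing=0
    local line version n_us n_hy file lower found
    while IFS= read -r line || [ -n "$line" ]; do
        case "$line" in
            ''|'#'*|-*) continue ;;   # blank, comment, or option line
            *==*) ;;                  # pinned requirement, handled below
            *) continue ;;            # anything else is out of scope here
        esac
        n_us="$(fold_name "${line%%==*}" _)"   # wheel-style name
        n_hy="$(fold_name "${line%%==*}" -)"   # hyphenated sdist-style name
        version="${line#*==}"
        found=0
        for file in "$wheel_dir"/*; do
            lower="$(basename "$file" | tr '[:upper:]' '[:lower:]')"
            case "$lower" in
                "${n_us}-${version}"-*.whl|"${n_us}-${version}".tar.gz|"${n_hy}-${version}".tar.gz)
                    found=1; break ;;
            esac
        done
        if [ "$found" -eq 0 ]; then
            echo "missing: $line"
            missing=1
        fi
    done < "$req_file"
    return "$missing"
}
```

Running `check_wheelhouse requirements.txt .` in the download directory prints one `missing:` line per requirement that has no file, and prints nothing when the set looks complete.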

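Wheel (.whl) packages install directly with pip install <file>.whl. Some dependencies, however, ship only as .tar.gz archives and could not be installed that way, so I installed everything by hand in dependency order. A .tar.gz package has to be extracted and installed from inside its directory. The install appeared to work at first but was then displaced when another .tar.gz package was installed; in the end I found that the package directory had to be copied into the site-packages directory.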

# pytorch

pip install filelock-3.12.2-py3-none-any.whl

pip install typing_extensions-4.7.1-py3-none-any.whl

pip install mpmath-1.3.0-py3-none-any.whl

pip install sympy-1.12-py3-none-any.whl

pip install networkx-3.1-py3-none-any.whl

pip install MarkupSafe-2.1.3-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

pip install Jinja2-3.1.2-py3-none-any.whl

pip install nvidia_cuda_nvrtc_cu11-11.7.99-2-py3-none-manylinux1_x86_64.whl

pip install nvidia_cuda_runtime_cu11-11.7.99-py3-none-manylinux1_x86_64.whl

pip install nvidia_cuda_cupti_cu11-11.7.101-py3-none-manylinux1_x86_64.whl

pip install nvidia_nccl_cu11-2.14.3-py3-none-manylinux1_x86_64.whl

pip install nvidia_nvtx_cu11-11.7.91-py3-none-manylinux1_x86_64.whl

pip install cmake-3.27.2-py2.py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl

pip install triton-2.0.0-1-cp310-cp310-manylinux2014_x86_64.manylinux_2_17_x86_64.whl torch-2.0.1-cp310-cp310-manylinux1_x86_64.whl

# Check whether a GPU is available

python -c "import torch; print(torch.cuda.device_count())"

# fairscale

pip install numpy-1.25.2-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

pip install ninja-1.11.1-py2.py3-none-manylinux_2_12_x86_64.manylinux2010_x86_64.whl

pip install setuptools-68.1.2-py3-none-any.whl

cd <extracted fairscale source directory>

python setup.py install

cp -rf /root/miniconda3/lib/python3.10/site-packages/fairscale-0.4.13/fairscale /root/miniconda3/lib/python3.10/site-packages/

# fire

cd <extracted fire source directory>

pip install termcolor-2.3.0-py3-none-any.whl

python setup.py install

cp -rf /root/miniconda3/lib/python3.10/site-packages/fire-0.5.0/fire /root/miniconda3/lib/python3.10/site-packages/

pip install sentencepiece-0.1.99-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

# tensorboard

pip install absl_py-1.4.0-py3-none-any.whl

pip install grpcio-1.57.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

pip install cachetools-5.3.1-py3-none-any.whl

pip install pyasn1-0.5.0-py2.py3-none-any.whl

pip install pyasn1_modules-0.3.0-py2.py3-none-any.whl

pip install rsa-4.9-py3-none-any.whl

pip install google_auth-2.22.0-py2.py3-none-any.whl

pip install oauthlib-3.2.2-py3-none-any.whl

pip install requests_oauthlib-1.3.1-py2.py3-none-any.whl

pip install google_auth_oauthlib-1.0.0-py2.py3-none-any.whl

pip install Markdown-3.4.4-py3-none-any.whl

pip install protobuf-4.24.2-cp37-abi3-manylinux2014_x86_64.whl

pip install werkzeug-2.3.7-py3-none-any.whl

pip install tensorboard_data_server-0.7.1-py3-none-manylinux2014_x86_64.whl
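Typing each wheel by hand is error-prone, so the install order can be kept in a text file and replayed. The sketch below assumes a file with one wheel file name per line, in the order shown in this section. `install_in_order` and the `DRY_RUN` switch are hypothetical names introduced here for illustration.

```shell
#!/usr/bin/env bash
# Sketch: install wheels one by one in the order listed in a text file.
# install_in_order and DRY_RUN are hypothetical; set DRY_RUN=1 to only print commands.

# install_in_order <order-file> <wheel-dir>
# The order file has one wheel file name per line; "#" lines and blanks are skipped.
install_in_order() {
    local order_file="$1" wheel_dir="$2" wheel
    while IFS= read -r wheel || [ -n "$wheel" ]; do
        case "$wheel" in ''|'#'*) continue ;; esac
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "pip install --no-index $wheel_dir/$wheel"
        else
            # --no-index: never contact PyPI, so each wheel's dependencies must
            # already be installed, which is why the order matters.
            pip install --no-index "$wheel_dir/$wheel" < /dev/null || return 1
        fi
    done < "$order_file"
}
```

Running it first with `DRY_RUN=1` prints the commands for review; without it, the function stops at the first wheel that fails to install.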

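(2) Downloading the llama2 model and testing it

- Request access and download the model (a proxy may be needed to reach the site)

- Download page: https://huggingface.co/meta-llama

- Test the text-completion example

torchrun --nproc_per_node 1 --nnodes 1 example_text_completion.py --ckpt_dir llama-2-7b/ --tokenizer_path llama-2-7b/tokenizer.model --max_seq_len 128 --max_batch_size 4

- Option 1: download the HF-format weights directly; no conversion is needed

- https://huggingface.co/meta-llama/Llama-2-7b-hf/tree/main

- Option 2: download the original llama2 7b weights and run the conversion script to produce HF format, which the later pre-training and instruction fine-tuning steps require

- The conversion script comes from https://github.com/huggingface/transformers

- Alternatively, download only this file: https://github.com/huggingface/transformers/blob/main/src/transformers/models/llama/convert_llama_weights_to_hf.py

- Install the transformers and accelerate packages. They can be downloaded in the local Docker environment and migrated as described above; the install order I used is listed below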

# transformers

fsspec-2023.6.0-py3-none-any.whl

PyYAML-6.0.1-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

huggingface_hub-0.16.4-py3-none-any.whl

regex-2023.8.8-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

tokenizers-0.13.3-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

safetensors-0.3.3-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

transformers-4.32.1-py3-none-any.whl

# accelerate

psutil-5.9.5-cp36-abi3-manylinux_2_12_x86_64.manylinux2010_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl

accelerate-0.22.0-py3-none-any.whl

- Run the conversion

mv llama-2-7b 7B

python src/transformers/models/llama/convert_llama_weights_to_hf.py \
--input_dir ./7B \
--model_size 7B \
--output_dir ./transformer_out

# Parameters

# --input_dir: the directory holding the original LLaMA 7B model

# --output_dir: where the converted HF-format weights are written
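A conversion run can fail late if the input directory is incomplete, so a quick pre-check may save time. The sketch below is a generic helper that reports any expected file that is absent. `require_files` is a hypothetical name, and the file names passed to it in the usage note are assumptions about what the original download contains; adjust them to match your copy.

```shell
#!/usr/bin/env bash
# Sketch: fail early if expected files are missing from a checkpoint directory.
# require_files is a hypothetical helper; the caller decides which files to expect.

# require_files <dir> <file>...
# Prints each missing path to stderr; returns 1 if anything is missing.
require_files() {
    local dir="$1" name status=0
    shift
    for name in "$@"; do
        if [ ! -e "$dir/$name" ]; then
            echo "missing: $dir/$name" >&2
            status=1
        fi
    done
    return "$status"
}
```

For instance, `require_files ./7B params.json tokenizer.model` could be chained with `&&` in front of the conversion command so that the script only starts when both files are present.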

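(3) Installing the Chinese LLaMA2 runtime dependencies offline

- Install git from source; download page: https://git-scm.com/download/linux

tar -zxvf git-2.42.0.tar.gz

cd git-2.42.0

./configure --prefix=/usr/local/git

make && make install

# Configure the environment variable: append this line to /etc/profile

export PATH=$PATH:/usr/local/git/bin

# Make the configuration take effect immediately

source /etc/profile

# Verify

git --version

- Install peft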

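# Download peft on the jump host

git clone https://github.com/huggingface/peft.git

# After uploading it to the offline server, check out the commit for the required peft version

cd peft

git checkout 13e53fc

# Offline install

python setup.py install

cp -rf <peft directory>/peft <site-packages directory>/

# Online install

pip install . -i https://pypi.tuna.tsinghua.edu.cn/simple --trusted-host pypi.tuna.tsinghua.edu.cn

- DeepSpeed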

git clone https://github.com/microsoft/DeepSpeed.git

cd DeepSpeed

git checkout .

git checkout master

./install.sh -r

# Additional dependencies to install

hjson-3.1.0-py3-none-any.whl

py_cpuinfo-9.0.0-py3-none-any.whl

pydantic-1.9.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

# Test

python -c "import deepspeed; print(deepspeed.__version__)"

(4) Preparing the Chinese LLaMA2 code and the incremental LoRA model, and merging them into the full Chinese LLaMA2 model

-

代码准备

git clone github.com/ymcui/Chinese-LLaMA-Alpaca-2.git -

原版LLaMA2模型权重及Tokenizer准备(上面步骤已经完成)

git lfs install git lfs clone huggingface.co/meta-llama/Llama-2-7b-hf/ - LoRA增量模型权重及中文LLaMA2 Tokenizer准备

- 从github.com/ymcui/Chinese-LLaMA-Alpaca2 中选择对应LoRA增量模型下载后上传到离线服务器上

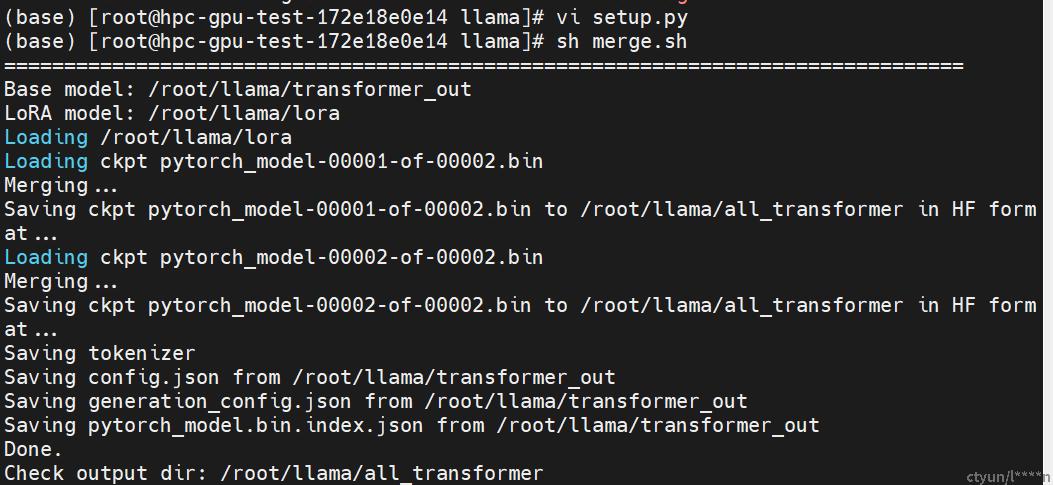

- 原版LLaMA2模型与增量LoRA模型合并

- 将chinese llama2项目代码中scripts脚本文件夹移动到llama目录下

- 执行模型合并脚本

python scripts/merge_llama2_with_chinese_lora_low_mem.py \
--base_model /root/llama/transformer_out \
--lora_model /root/llama/lora \
--output_type huggingface \
--output_dir /root/llama/all_transformer

# Parameters

`--base_model`: directory containing the HF-format Llama-2 model weights and configuration files

`--lora_model`: directory containing the extracted Chinese LLaMA-2/Alpaca-2 LoRA files

`--output_type`: output format, either `pth` or `huggingface`; defaults to `huggingface` if not specified

`--output_dir`: directory where the full merged model weights are saved; defaults to `.`

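3. Pre-training

See the companion article in this LLaMA2 training series, "Chinese LLaMA2 Pre-training and Instruction Fine-tuning in Practice: Pre-training".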