1 JuiceFS Technical Architecture

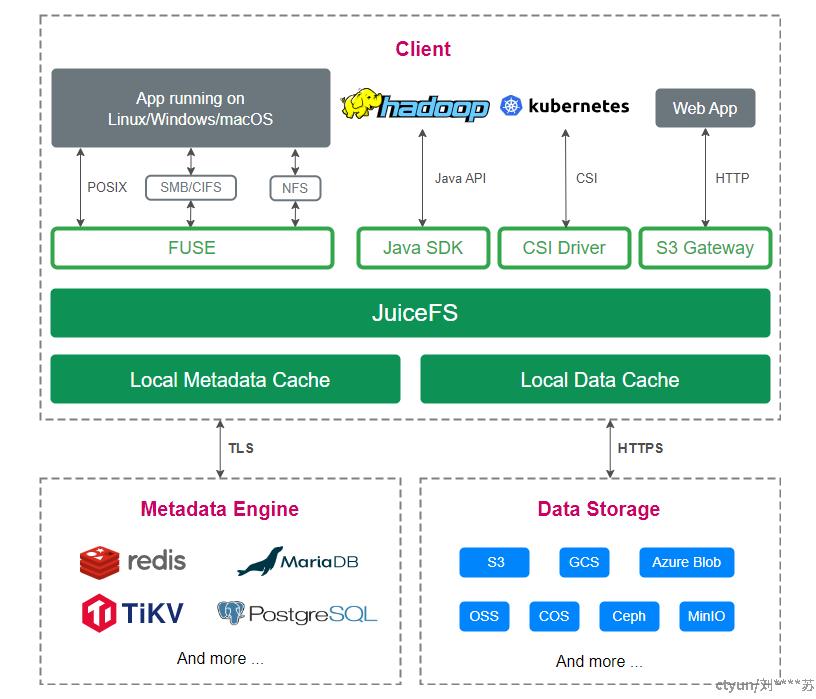

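A JuiceFS file system consists of three parts:

JuiceFS Client: all file reads and writes, as well as background tasks such as fragment compaction and expiry-based deletion of trash files, happen in the client. The client has to talk to both the object storage and the metadata engine. It supports several access methods:

- Via FUSE: the JuiceFS file system can be mounted on a server in a POSIX-compatible way, so that massive cloud storage can be used directly as local storage.

- Via the Hadoop Java SDK: the JuiceFS file system can directly replace HDFS and provide Hadoop with low-cost massive storage.

- Via the Kubernetes CSI driver: the JuiceFS file system can provide massive storage directly to Kubernetes.

- Via the S3 gateway: applications that use S3 as their storage layer can connect directly, and tools such as AWS CLI, s3cmd, and MinIO client can be used to access the JuiceFS file system.

- Via the WebDAV service: JuiceFS can be accessed over HTTP, in a manner similar to a RESTful API, to operate on its files directly.

Data Storage: files are split and uploaded to an object storage service. JuiceFS supports almost all public cloud object storage services, as well as private object storage such as OpenStack Swift, Ceph, and MinIO.

Metadata Engine: stores file metadata, which includes:

- Regular file system metadata: file name, file size, permissions, creation and modification times, directory structure, file attributes, symbolic links, file locks, and so on.

- File data indexes: the data allocation and reference counts of files, client sessions, and so on.

JuiceFS uses a multi-engine design and currently supports Redis, TiKV, MySQL/MariaDB, PostgreSQL, SQLite, and others as the metadata engine.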
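To make "files are split and uploaded" a little more concrete, the following is a minimal sketch of how a file's byte range could be cut into fixed-size pieces. The 64 MiB chunk size and 4 MiB block size are assumptions based on commonly cited JuiceFS defaults, not values taken from this article, and the sketch ignores the intermediate slice layer. The function name `split_into_blocks` is hypothetical.

```python
MIB = 1024 * 1024
CHUNK_SIZE = 64 * MIB  # assumption: logical chunk size
BLOCK_SIZE = 4 * MIB   # assumption: size of each object uploaded to object storage


def split_into_blocks(file_size):
    """Return (chunk_index, block_index, offset, length) tuples for a file.

    Simplified illustration only: a real client also tracks slices and
    stores the resulting index in the metadata engine.
    """
    blocks = []
    offset = 0
    while offset < file_size:
        chunk_index = offset // CHUNK_SIZE
        block_index = (offset % CHUNK_SIZE) // BLOCK_SIZE
        length = min(BLOCK_SIZE, file_size - offset)
        blocks.append((chunk_index, block_index, offset, length))
        offset += length
    return blocks


if __name__ == "__main__":
    # Show the tail of a 70 MiB file: it spills into a second chunk.
    for block in split_into_blocks(70 * MIB)[-3:]:
        print(block)
```

Under these assumptions, only the block data would go to the object storage, while the mapping from file offsets to blocks belongs to the "file data indexes" kept in the metadata engine.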

2 JuiceFS CSI Architecture

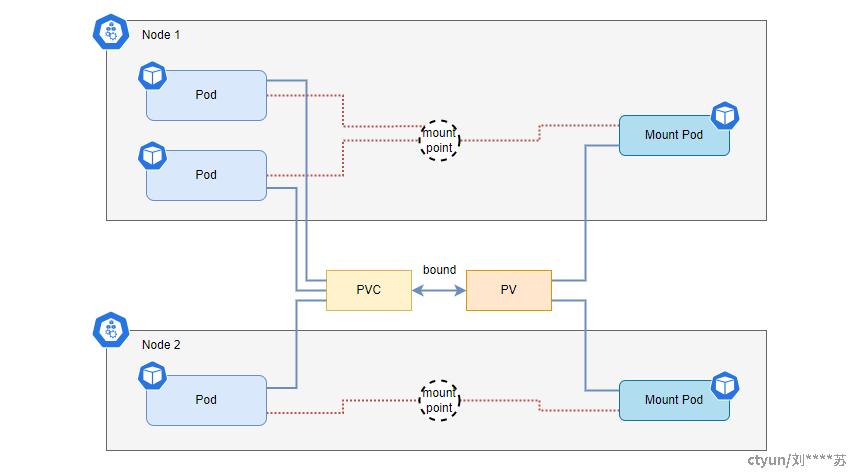

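The JuiceFS CSI driver follows the CSI specification and implements the interface between container orchestration systems and the JuiceFS file system. On Kubernetes, JuiceFS can be provided to Pods in the form of a PersistentVolume.

The JuiceFS CSI driver consists of the following components: the JuiceFS CSI Controller (StatefulSet) and the JuiceFS CSI Node Service (DaemonSet). You can view them with kubectl: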

root@node1:~# kubectl get pod -l app.kubernetes.io/name=juicefs-csi-driver

NAME READY STATUS RESTARTS AGE

juicefs-csi-controller-0 4/4 Running 0 26m

juicefs-csi-controller-1 4/4 Running 0 26m

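juicefs-csi-node-5qr7b 3/3 Running 0 26m

By default, the CSI driver uses the container mount (Mount Pod) mode, in which the JuiceFS client runs in a separate Pod. The architecture is as follows:

A separate Mount Pod runs the JuiceFS client, and the CSI Node Service manages the Mount Pod's lifecycle. This architecture offers the following benefits:

- When multiple Pods share a PV, no new Mount Pod is created; instead, the existing Mount Pod is reference-counted and is deleted when the count drops to zero.

- The CSI driver components are decoupled from the client, which makes it easier to upgrade the CSI driver itself.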
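The reference counting described in the first benefit can be sketched as follows. This is an illustrative model only, not the CSI driver's actual code: the class `MountPodRegistry` and its methods are hypothetical names, and the real driver tracks references on Kubernetes objects rather than in an in-memory dictionary.

```python
class MountPodRegistry:
    """Hypothetical per-node bookkeeping of which app Pods use which PV."""

    def __init__(self):
        # Maps a PV name to the set of application Pods that reference it.
        self._refs = {}

    def attach(self, pv_name, app_pod):
        """Register a user of the PV; return True if a Mount Pod must be created."""
        needs_mount_pod = pv_name not in self._refs
        self._refs.setdefault(pv_name, set()).add(app_pod)
        return needs_mount_pod

    def detach(self, pv_name, app_pod):
        """Unregister a user; return True if the Mount Pod can now be deleted."""
        pods = self._refs.get(pv_name)
        if pods is None:
            return False
        pods.discard(app_pod)
        if not pods:
            # Reference count reached zero: the Mount Pod is no longer needed.
            del self._refs[pv_name]
            return True
        return False

    def ref_count(self, pv_name):
        return len(self._refs.get(pv_name, ()))


if __name__ == "__main__":
    registry = MountPodRegistry()
    print(registry.attach("pv-a", "app-1"))  # first user: create the Mount Pod
    print(registry.attach("pv-a", "app-2"))  # second user: reuse it
    print(registry.detach("pv-a", "app-1"))  # still referenced
    print(registry.detach("pv-a", "app-2"))  # count is zero: delete it
```

The point of the design is that the expensive object, the Mount Pod running the JuiceFS client, is created once per PV on a node and shared by every application Pod that uses that PV.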

On a given node, one PVC corresponds to one Mount Pod, and containers that use the same PV can share a Mount Pod. The relationship among PVC, PV, and Mount Pod is shown in the figure below:

3 Usage

You can use the CSI driver with either static provisioning or dynamic provisioning.

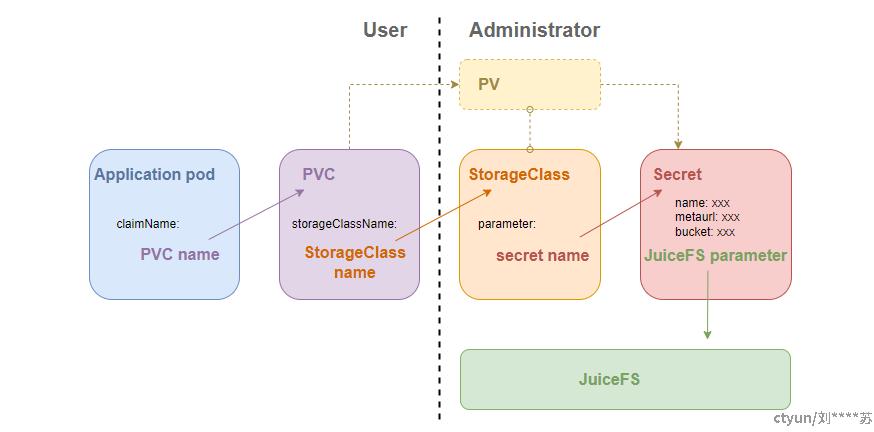

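3.1 Static provisioning

Static provisioning is the simplest and most direct approach. A Kubernetes administrator creates the PersistentVolume (PV) and the file system credentials (stored as a Kubernetes Secret); the user then creates a PersistentVolumeClaim (PVC) that binds to that PV in its definition, and finally references the PVC in the Pod definition. The relationship among the resources is shown in the figure below:

Static provisioning is typically used in the following scenarios:

- You already have a large amount of data stored in JuiceFS and want to access it directly from Kubernetes containers;

- You want to do a quick verification of the CSI driver's functionality.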
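As a rough illustration of the static flow, the sketch below builds a PV and a matching PVC as plain Python dictionaries and prints them as JSON, which `kubectl apply -f` also accepts. The resource names (`juicefs-pv`, `juicefs-pvc`, `juicefs-secret`), the capacity, and the helper function names are hypothetical; the driver name `csi.juicefs.com` matches the provisioner shown later in this article, and the remaining fields are standard Kubernetes PV/PVC fields. Treat it as a starting point to compare against the CSI driver documentation rather than a verified manifest.

```python
import json


def build_static_pv(name, secret_name, namespace="default", capacity="10Gi"):
    """Build a PersistentVolume that points at an existing JuiceFS file system."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "volumeMode": "Filesystem",
            "accessModes": ["ReadWriteMany"],
            "persistentVolumeReclaimPolicy": "Retain",
            "csi": {
                "driver": "csi.juicefs.com",
                "volumeHandle": name,
                "fsType": "juicefs",
                # The Secret holds the file system credentials created by the admin.
                "nodePublishSecretRef": {"name": secret_name, "namespace": namespace},
            },
        },
    }


def build_static_pvc(name, pv_name, namespace="default", capacity="10Gi"):
    """Build a PVC that binds explicitly to the PV created by the administrator."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "volumeMode": "Filesystem",
            # An empty storageClassName disables dynamic provisioning for this claim.
            "storageClassName": "",
            "volumeName": pv_name,
            "resources": {"requests": {"storage": capacity}},
        },
    }


if __name__ == "__main__":
    pv = build_static_pv("juicefs-pv", "juicefs-secret")
    pvc = build_static_pvc("juicefs-pvc", pv["metadata"]["name"])
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}, indent=2))
```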

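3.2 Dynamic provisioning

Because static provisioning is more complex to manage, large-scale use of the CSI driver generally relies on dynamic provisioning: administrators no longer need to create PVs by hand, and data is isolated between applications. In this mode, the administrator creates one or more StorageClasses; the user only needs to create a PVC that specifies a StorageClass and reference that PVC in a Pod, and the CSI driver automatically creates the PV according to the parameters configured in the StorageClass. The relationship among the resources is as follows:

Taking the Mount Pod mode as an example, the flow from creation to use is roughly as follows:

- The user creates a PVC that specifies an existing StorageClass;

- The CSI Controller initializes the JuiceFS file system, by default creating a subdirectory named after the PV ID, and creates the corresponding PV. All configuration needed for this step is specified or referenced in the StorageClass;

- Kubernetes (the PV Controller component) binds the PVC created by the user to the PV created by the CSI Controller, at which point both the PVC and the PV become "Bound";

- The user creates an application Pod that declares the PVC created earlier;

- The CSI Node Service creates a Mount Pod on the node where the application Pod runs;

- The Mount Pod starts, runs the JuiceFS client mount, and exposes the mount point to the host at /var/lib/juicefs/volume/[pv-name];

- Once the Mount Pod has started successfully, the CSI Node Service bind-mounts the JuiceFS subdirectory that corresponds to the PV into the container, at the path declared in its VolumeMount;

- Kubelet starts the application Pod.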
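The user-facing part of the flow above, together with the paths involved in the mount steps, can be sketched like this. The StorageClass name `juicefs-sc` and the host directory `/var/lib/juicefs/volume` appear elsewhere in this article; the PVC name, the helper function names, and the assumption that the subdirectory name equals the PV name are illustrative only.

```python
import posixpath

# Host directory under which each Mount Pod exposes its mount point.
JFS_MOUNT_DIR = "/var/lib/juicefs/volume"


def build_dynamic_pvc(name, storage_class, size="10Gi", namespace="default"):
    """Build the only object the user has to create in dynamic provisioning."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def host_mount_point(pv_name):
    """Path on the host where the Mount Pod exposes the JuiceFS mount."""
    return posixpath.join(JFS_MOUNT_DIR, pv_name)


def bind_source(pv_name):
    """Subdirectory that is bind-mounted into the application container.

    Assumption: the subdirectory created by the CSI Controller is named
    after the PV, which is the default described in the steps above.
    """
    return posixpath.join(host_mount_point(pv_name), pv_name)


if __name__ == "__main__":
    pvc = build_dynamic_pvc("demo-pvc", "juicefs-sc")
    print(pvc["spec"]["storageClassName"])
    print(host_mount_point("pvc-example"))
    print(bind_source("pvc-example"))
```

Compared with static provisioning, the claim carries no `volumeName`: the PV is created by the CSI Controller and bound by Kubernetes.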

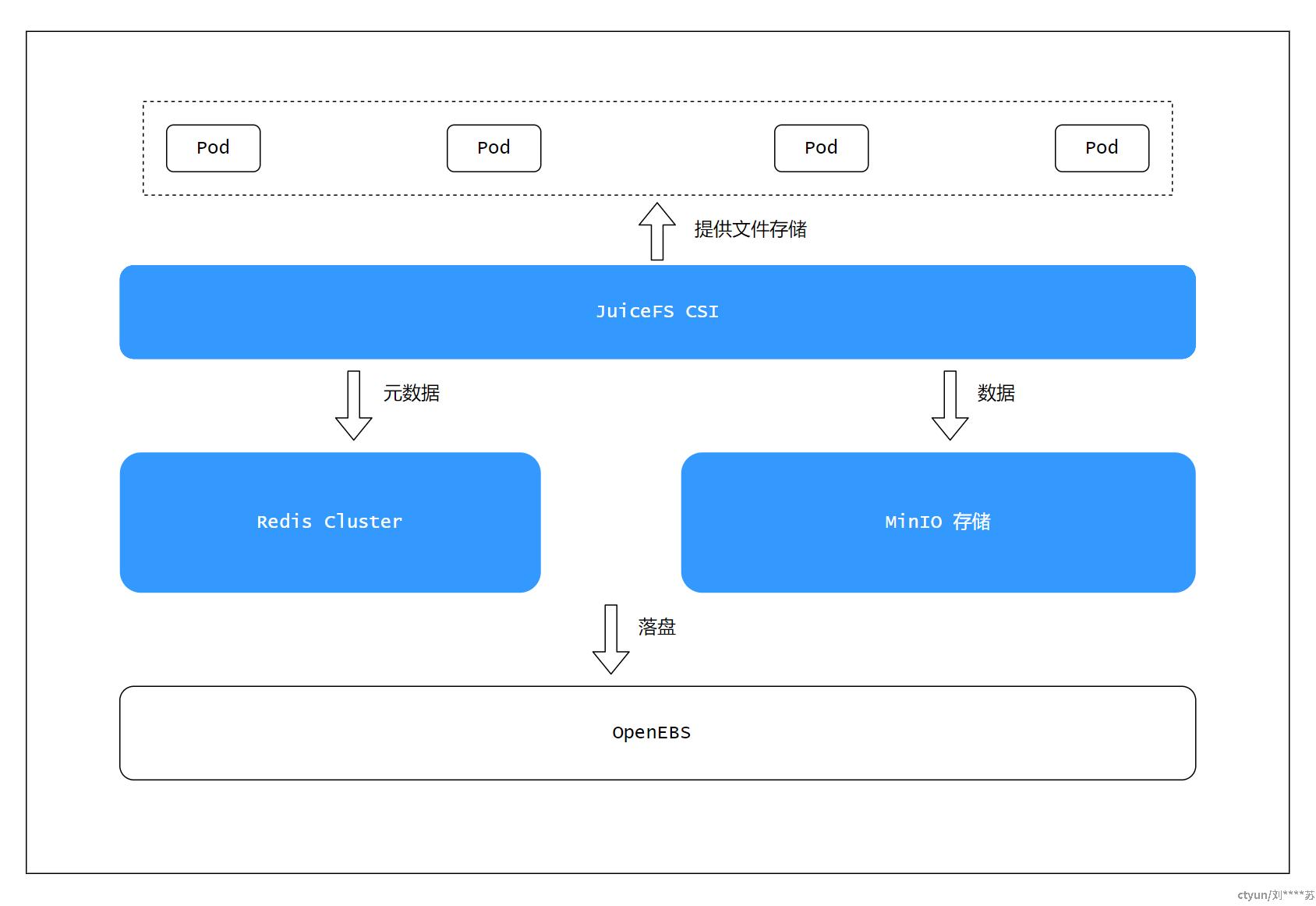

4 Deploying JuiceFS

Redis is used for metadata and MinIO for data storage. Redis, MinIO, and the JuiceFS CSI driver are all deployed with Helm.
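The JuiceFS CSI configuration later in this article refers to Redis through a metadata URL of the form `redis://:<password>@<host>:<port>/<db>`. The sketch below builds and parses such a URL using only the Python standard library. The helper names are hypothetical, and the password shown is a placeholder.

```python
from urllib.parse import quote, urlsplit


def build_redis_meta_url(host, port=6379, password="", db=0):
    """Compose a Redis metadata URL; the password is percent-encoded."""
    auth = f":{quote(password, safe='')}@" if password else ""
    return f"redis://{auth}{host}:{port}/{db}"


def parse_redis_meta_url(url):
    """Split a Redis metadata URL into its parts without echoing the password."""
    parts = urlsplit(url)
    if parts.scheme != "redis":
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    return {
        "host": parts.hostname,
        "port": parts.port or 6379,
        "db": int(parts.path.lstrip("/") or 0),
        "has_password": bool(parts.password),
    }


if __name__ == "__main__":
    # The Service name below is the one created by the Helm release in section 4.1.
    url = build_redis_meta_url("juicefs-redis-redis-cluster", password="example-password")
    print(url)
    print(parse_redis_meta_url(url))
```

Checking the URL this way before putting it into values.yaml can catch a wrong port or a missing database number early. In a real deployment the password would normally come from a Secret rather than being written inline.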

4.1 Deploy Redis

Follow the steps below to add the bitnami repository and pull the redis-cluster chart:

root@node1:~#

root@node1:~# helm search repo bitnami |grep redis

bitnami/redis 18.3.3 7.2.3 Redis(R) is an open source, advanced key-value ...

bitnami/redis-cluster 9.1.3 7.2.3 Redis(R) is an open source, scalable, distribut...

root@node1:~# helm search repo bitnami |grep redis-cluster

bitnami/redis-cluster 9.1.3 7.2.3 Redis(R) is an open source, scalable, distribut...

root@node1:~#

root@node1:~# helm pull bitnami/redis-cluster --version 9.1.3

root@node1:~# tar -xvzf redis-cluster-9.1.3.tgz

root@node1:~# cd redis-cluster/

An example values.yaml:

root@node1:~/redis-cluster# cat values.yaml |grep -v "#"

global:

imageRegistry: "dockerhub.enlightencloud.local"

imagePullSecrets: []

storageClass: "local"

redis:

password: "Moya8788"

nameOverride: ""

fullnameOverride: ""

clusterDomain: cluster.local

commonAnnotations: {}

commonLabels: {}

extraDeploy: []

diagnosticMode:

enabled: false

command:

- sleep

args:

- infinity

image:

registry: dockerhub.enlightencloud.local

repository: bitnami/redis-cluster

tag: 7.2.3-debian-11-r1

digest: ""

pullPolicy: IfNotPresent

pullSecrets: []

debug: false

networkPolicy:

enabled: false

allowExternal: true

ingressNSMatchLabels: {}

ingressNSPodMatchLabels: {}

serviceAccount:

create: false

name: ""

annotations: {}

automountServiceAccountToken: false

rbac:

create: false

role:

rules: []

podSecurityContext:

enabled: true

fsGroup: 1001

sysctls: []

podDisruptionBudget: {}

minAvailable: ""

maxUnavailable: ""

containerSecurityContext:

enabled: true

runAsUser: 1001

runAsNonRoot: true

privileged: false

readOnlyRootFilesystem: false

allowPrivilegeEscalation: false

capabilities:

drop: ["ALL"]

seccompProfile:

type: "RuntimeDefault"

usePassword: true

password: ""

existingSecret: ""

existingSecretPasswordKey: ""

usePasswordFile: false

tls:

enabled: false

authClients: true

autoGenerated: false

existingSecret: ""

certificatesSecret: ""

certFilename: ""

certKeyFilename: ""

certCAFilename: ""

dhParamsFilename: ""

service:

ports:

redis: 6379

nodePorts:

redis: ""

extraPorts: []

annotations: {}

labels: {}

type: ClusterIP

clusterIP: ""

loadBalancerIP: ""

loadBalancerSourceRanges: []

externalTrafficPolicy: Cluster

sessionAffinity: None

sessionAffinityConfig: {}

headless:

annotations: {}

persistence:

enabled: true

path: /bitnami/redis/data

subPath: ""

storageClass: ""

annotations: {}

accessModes:

- ReadWriteOnce

size: 8Gi

matchLabels: {}

matchExpressions: {}

persistentVolumeClaimRetentionPolicy:

enabled: false

whenScaled: Retain

whenDeleted: Retain

volumePermissions:

enabled: false

image:

registry: dockerhub.enlightencloud.local

repository: bitnami/os-shell

tag: 11-debian-11-r91

digest: ""

pullPolicy: IfNotPresent

pullSecrets: []

containerSecurityContext:

enabled: true

runAsUser: 0

privileged: false

resources:

limits: {}

requests: {}

podSecurityPolicy:

create: false

redis:

command: []

args: []

updateStrategy:

type: RollingUpdate

rollingUpdate:

partition: 0

podManagementPolicy: Parallel

hostAliases: []

hostNetwork: false

useAOFPersistence: "yes"

containerPorts:

redis: 6379

bus: 16379

lifecycleHooks: {}

extraVolumes: []

extraVolumeMounts: []

customLivenessProbe: {}

customReadinessProbe: {}

customStartupProbe: {}

initContainers: []

sidecars: []

podLabels: {}

priorityClassName: ""

defaultConfigOverride: ""

configmap: ""

extraEnvVars: []

extraEnvVarsCM: ""

extraEnvVarsSecret: ""

podAnnotations: {}

resources:

limits: {}

requests: {}

schedulerName: ""

shareProcessNamespace: false

livenessProbe:

enabled: true

initialDelaySeconds: 5

periodSeconds: 5

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

enabled: true

initialDelaySeconds: 5

periodSeconds: 5

timeoutSeconds: 1

successThreshold: 1

failureThreshold: 5

startupProbe:

enabled: false

path: /

initialDelaySeconds: 300

periodSeconds: 10

timeoutSeconds: 5

failureThreshold: 6

successThreshold: 1

podAffinityPreset: ""

podAntiAffinityPreset: soft

nodeAffinityPreset:

type: ""

key: ""

values: []

affinity: {}

nodeSelector: {}

tolerations: []

topologySpreadConstraints: []

updateJob:

activeDeadlineSeconds: 600

command: []

args: []

hostAliases: []

helmHook: post-upgrade

annotations: {}

podAnnotations: {}

podLabels: {}

extraEnvVars: []

extraEnvVarsCM: ""

extraEnvVarsSecret: ""

extraVolumes: []

extraVolumeMounts: []

initContainers: []

podAffinityPreset: ""

podAntiAffinityPreset: soft

nodeAffinityPreset:

type: ""

key: ""

values: []

affinity: {}

nodeSelector: {}

tolerations: []

priorityClassName: ""

resources:

limits: {}

requests: {}

cluster:

init: true

nodes: 6

replicas: 1

externalAccess:

enabled: false

hostMode: false

service:

disableLoadBalancerIP: false

loadBalancerIPAnnotaion: ""

type: LoadBalancer

port: 6379

loadBalancerIP: []

loadBalancerSourceRanges: []

annotations: {}

update:

addNodes: false

currentNumberOfNodes: 6

currentNumberOfReplicas: 1

newExternalIPs: []

metrics:

enabled: false

image:

registry: dockerhub.enlightencloud.local

repository: bitnami/redis-exporter

tag: 1.55.0-debian-11-r2

digest: ""

pullPolicy: IfNotPresent

pullSecrets: []

resources: {}

extraArgs: {}

extraEnvVars: []

podAnnotations:

prometheus.io/scrape: "true"

prometheus.io/port: "9121"

podLabels: {}

containerSecurityContext:

enabled: false

allowPrivilegeEscalation: false

serviceMonitor:

enabled: false

namespace: ""

interval: ""

scrapeTimeout: ""

selector: {}

labels: {}

annotations: {}

jobLabel: ""

relabelings: []

metricRelabelings: []

prometheusRule:

enabled: false

additionalLabels: {}

namespace: ""

rules: []

priorityClassName: ""

service:

type: ClusterIP

clusterIP: ""

loadBalancerIP: ""

annotations: {}

labels: {}

sysctlImage:

enabled: false

command: []

registry:

repository: bitnami/os-shell

tag: 11-debian-11-r91

digest: ""

pullPolicy: IfNotPresent

pullSecrets: []

mountHostSys: false

containerSecurityContext:

enabled: true

runAsUser: 0

privileged: true

resources:

limits: {}

requests: {}

Run the following command to deploy the Redis cluster:

root@node1:~/redis-cluster# helm install juicefs-redis .

NAME: juicefs-redis

LAST DEPLOYED: Wed Nov 15 16:24:40 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: redis-cluster

CHART VERSION: 9.1.3

APP VERSION: 7.2.3

** Please be patient while the chart is being deployed **

To get your password run:

export REDIS_PASSWORD=$(kubectl get secret --namespace "default" juicefs-redis-redis-cluster -o jsonpath="{.data.redis-password}" | base64 -d)

You have deployed a Redis® Cluster accessible only from within you Kubernetes Cluster.

INFO: The Job to create the cluster will be created.

To connect to your Redis® cluster:

1. Run a Redis® pod that you can use as a client:

kubectl run --namespace default juicefs-redis-redis-cluster-client --rm --tty -i --restart='Never' \

--env REDIS_PASSWORD=$REDIS_PASSWORD \

--image dockerhub.enlightencloud.local/bitnami/redis-cluster:7.2.3-debian-11-r1 -- bash

2. Connect using the Redis® CLI:

redis-cli -c -h juicefs-redis-redis-cluster -a $REDIS_PASSWORD

root@node1:~/redis-cluster#

root@node1:~/redis-cluster# export REDIS_PASSWORD=$(kubectl get secret --namespace "default" juicefs-redis-redis-cluster -o jsonpath="{.data.redis-password}" | base64 -d)

root@node1:~/redis-cluster# kubectl run --namespace default juicefs-redis-redis-cluster-client --rm --tty -i --restart='Never' \

> --env REDIS_PASSWORD=$REDIS_PASSWORD \

> --image dockerhub.enlightencloud.local/bitnami/redis-cluster:7.2.3-debian-11-r1 -- bash

If you don't see a command prompt, try pressing enter.

I have no name!@juicefs-redis-redis-cluster-client:/$ redis-cli -c -h juicefs-redis-redis-cluster -a $REDIS_PASSWORD

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

juicefs-redis-redis-cluster:6379>

juicefs-redis-redis-cluster:6379> config get databases

1) "databases"

2) "1"

juicefs-redis-redis-cluster:6379> select 0

OK

juicefs-redis-redis-cluster:6379> keys

View the Redis resources:

root@node1:~# kubectl get pods |grep redis

juicefs-redis-redis-cluster-0 1/1 Running 1 (101m ago) 103m

juicefs-redis-redis-cluster-1 1/1 Running 1 (101m ago) 103m

juicefs-redis-redis-cluster-2 1/1 Running 1 (101m ago) 103m

juicefs-redis-redis-cluster-3 1/1 Running 1 (101m ago) 103m

juicefs-redis-redis-cluster-4 1/1 Running 0 103m

juicefs-redis-redis-cluster-5 1/1 Running 0 102m

juicefs-redis-redis-cluster-client 1/1 Running 0 100m

root@node1:~/redis-cluster#

root@node1:~# kubectl get services |grep redis

juicefs-redis-redis-cluster ClusterIP 10.233.34.103 <none> 6379/TCP 103m

juicefs-redis-redis-cluster-headless ClusterIP None <none> 6379/TCP,16379/TCP 103m

4.2 Deploy MinIO

Follow the steps below to add the minio repository and pull the minio chart:

root@node1:~# helm repo add minio xxx

root@node1:~# helm search repo minio

NAME CHART VERSION APP VERSION DESCRIPTION

aliyun/minio 0.5.5 Distributed object storage server built for clo...

bitnami/minio 12.10.0 2023.11.11 MinIO(R) is an object storage server, compatibl...

minio/minio 8.0.10 master High Performance, Kubernetes Native Object Storage

root@node1:~# helm pull minio/minio --version 8.0.10

root@node1:~# tar -xvzf minio-8.0.10.tgz

root@node1:~# cd minio/

An example values.yaml:

root@node1:~/minio# cat values.yaml |grep -v "#"

nameOverride: ""

fullnameOverride: ""

clusterDomain: cluster.local

image:

repository: dockerhub.enlightencloud.local/minio/minio

tag: RELEASE.2021-02-14T04-01-33Z

pullPolicy: IfNotPresent

mcImage:

repository: dockerhub.enlightencloud.local/minio/mc

tag: RELEASE.2021-02-14T04-28-06Z

pullPolicy: IfNotPresent

helmKubectlJqImage:

repository: dockerhub.enlightencloud.local/minio/helm-kubectl-jq

tag: 3.1.0

pullPolicy: IfNotPresent

mode: standalone

additionalLabels: []

additionalAnnotations: []

extraArgs: []

DeploymentUpdate:

type: RollingUpdate

maxUnavailable: 0

maxSurge: 100%

StatefulSetUpdate:

updateStrategy: RollingUpdate

priorityClassName: ""

accessKey: "Moya8788"

secretKey: "Moya8788"

certsPath: "/etc/minio/certs/"

configPathmc: "/etc/minio/mc/"

mountPath: "/export"

existingSecret: ""

bucketRoot: ""

drivesPerNode: 1

replicas: 4

zones: 1

tls:

enabled: false

certSecret: ""

publicCrt: public.crt

privateKey: private.key

trustedCertsSecret: ""

persistence:

enabled: true

existingClaim: ""

storageClass: "local"

VolumeName: ""

accessMode: ReadWriteOnce

size: 50Gi

subPath: ""

service:

type: ClusterIP

clusterIP: ~

port: 9000

nodePort: 32000

externalIPs: []

annotations: {}

imagePullSecrets: []

ingress:

enabled: false

labels: {}

annotations: {}

path: /

hosts:

- chart-example.local

tls: []

nodeSelector: {}

tolerations: []

affinity: {}

securityContext:

enabled: true

runAsUser: 1000

runAsGroup: 1000

fsGroup: 1000

podAnnotations: {}

podLabels: {}

resources:

requests:

memory: 4Gi

defaultBucket:

enabled: false

name: bucket

policy: none

purge: false

buckets: []

makeBucketJob:

podAnnotations:

annotations:

securityContext:

enabled: false

runAsUser: 1000

runAsGroup: 1000

fsGroup: 1000

resources:

requests:

memory: 128Mi

updatePrometheusJob:

podAnnotations:

annotations:

securityContext:

enabled: false

runAsUser: 1000

runAsGroup: 1000

fsGroup: 1000

s3gateway:

enabled: false

replicas: 4

serviceEndpoint: ""

accessKey: ""

secretKey: ""

azuregateway:

enabled: false

replicas: 4

gcsgateway:

enabled: false

replicas: 4

gcsKeyJson: ""

projectId: ""

nasgateway:

enabled: false

replicas: 4

pv: ~

environment: {}

networkPolicy:

enabled: false

allowExternal: true

podDisruptionBudget:

enabled: false

maxUnavailable: 1

serviceAccount:

create: true

name:

metrics:

serviceMonitor:

enabled: false

additionalLabels: {}

relabelConfigs: {}

etcd:

endpoints: []

pathPrefix: ""

corednsPathPrefix: ""

clientCert: ""

clientCertKey: ""

Run the following command to deploy MinIO:

root@node1:~/minio# helm install juicefs-minio .

View the MinIO resources:

root@node1:~/minio# kubectl get pods |grep minio

juicefs-minio-58bfcf5574-5nrwh 1/1 Running 0 75m

root@node1:~/minio#

root@node1:~/minio# kubectl get service |grep minio

juicefs-minio ClusterIP 10.233.61.184 <none> 9000/TCP 75m

4.3 Deploy JuiceFS CSI

Run the following commands to add the juicefs repository and download the juicefs-csi-driver chart:

root@node1:~# helm repo add juicefs xxx

root@node1:~# helm pull juicefs/juicefs-csi-driver --version 0.18.1

root@node1:~# tar -xvzf juicefs-csi-driver-0.18.1.tgz

root@node1:~# cd juicefs-csi-driver/

An example values.yaml:

root@node1:~/juicefs-csi-driver# cat values.yaml |grep -v "#"

image:

repository: dockerhub.enlightencloud.local/juicedata/juicefs-csi-driver

tag: "v0.22.1"

pullPolicy: ""

sidecars:

livenessProbeImage:

repository: dockerhub.enlightencloud.local/k8scsi/livenessprobe

tag: "v1.1.0"

pullPolicy: ""

nodeDriverRegistrarImage:

repository: dockerhub.enlightencloud.local/k8scsi/csi-node-driver-registrar

tag: "v2.1.0"

pullPolicy: ""

csiProvisionerImage:

repository: dockerhub.enlightencloud.local/k8scsi/csi-provisioner

tag: "v1.6.0"

pullPolicy: ""

csiResizerImage:

repository: dockerhub.enlightencloud.local/k8scsi/csi-resizer

tag: "v1.0.1"

pullPolicy: ""

imagePullSecrets: []

mountMode: mountpod

hostAliases: []

kubeletDir: /var/lib/kubelet

jfsMountDir: /var/lib/juicefs/volume

jfsConfigDir: /var/lib/juicefs/config

immutable: false

dnsPolicy: ClusterFirstWithHostNet

dnsConfig:

{}

serviceAccount:

controller:

create: true

annotations: {}

name: "juicefs-csi-controller-sa"

node:

create: true

annotations: {}

name: "juicefs-csi-node-sa"

controller:

enabled: true

leaderElection:

enabled: true

leaderElectionNamespace: ""

leaseDuration: ""

provisioner: false

replicas: 2

resources:

limits:

cpu: 1000m

memory: 1Gi

requests:

cpu: 100m

memory: 512Mi

terminationGracePeriodSeconds: 30

affinity: {}

nodeSelector: {}

tolerations:

- key: CriticalAddonsOnly

operator: Exists

service:

port: 9909

type: ClusterIP

priorityClassName: system-cluster-critical

envs:

node:

enabled: true

hostNetwork: false

resources:

limits:

cpu: 1000m

memory: 1Gi

requests:

cpu: 100m

memory: 512Mi

storageClassShareMount: false

mountPodNonPreempting: false

terminationGracePeriodSeconds: 30

affinity: {}

nodeSelector: {}

tolerations:

- key: CriticalAddonsOnly

operator: Exists

priorityClassName: system-node-critical

envs: []

updateStrategy:

rollingUpdate:

maxUnavailable: 50%

defaultMountImage:

ce: ""

ee: ""

webhook:

certManager:

enabled: true

caBundlePEM: |

crtPEM: |

keyPEM: |

timeoutSeconds: 5

FailurePolicy: Fail

storageClasses:

- name: "juicefs-sc"

enabled: true

reclaimPolicy: Delete

allowVolumeExpansion: true

backend:

name: "liusu-juicefs"

metaurl: "redis://:Moya8788@juicefs-redis-redis-cluster:6379/0"

storage: "minio"

token: ""

accessKey: "Moya8788"

secretKey: "Moya8788"

envs: ""

configs: ""

trashDays: ""

formatOptions: ""

mountOptions:

pathPattern: ""

cachePVC: ""

mountPod:

resources:

limits:

cpu: 5000m

memory: 5Gi

requests:

cpu: 1000m

memory: 1Gi

image: ""

Run the following command to deploy juicefs-csi-driver:

root@node1:~/juicefs-csi-driver# helm install liusu-juicefs .

View the resources:

root@node1:~/juicefs-csi-driver# kubectl get pods |grep csi

juicefs-csi-controller-0 4/4 Running 0 71m

juicefs-csi-controller-1 4/4 Running 0 70m

juicefs-csi-node-5qr7b 3/3 Running 0 71m

root@node1:~/juicefs-csi-driver# kubectl get storageclasses.storage.k8s.io -o wide |grep juice

juicefs-sc csi.juicefs.com Delete Immediate true 71m

root@node1:~/juicefs-csi-driver#

root@node1:~/juicefs-csi-driver# kubectl get storageclasses.storage.k8s.io -o wide juicefs-sc -o yaml

allowVolumeExpansion: true

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

annotations:

meta.helm.sh/release-name: liusu-juicefs

meta.helm.sh/release-namespace: default

storageclass.kubesphere.io/allow-clone: "true"

storageclass.kubesphere.io/allow-snapshot: "true"

creationTimestamp: "2023-11-15T09:08:04Z"

labels:

app.kubernetes.io/instance: liusu-juicefs

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: juicefs-csi-driver

app.kubernetes.io/version: 0.22.1

helm.sh/chart: juicefs-csi-driver-0.18.1

name: juicefs-sc

resourceVersion: "16500"

uid: a1d42963-2e80-43fa-a275-fd162032d408

parameters:

csi.storage.k8s.io/controller-expand-secret-name: juicefs-sc-secret

csi.storage.k8s.io/controller-expand-secret-namespace: default

csi.storage.k8s.io/node-publish-secret-name: juicefs-sc-secret

csi.storage.k8s.io/node-publish-secret-namespace: default

csi.storage.k8s.io/provisioner-secret-name: juicefs-sc-secret

csi.storage.k8s.io/provisioner-secret-namespace: default

juicefs/mount-cpu-limit: 5000m

juicefs/mount-cpu-request: 1000m

juicefs/mount-memory-limit: 5Gi

juicefs/mount-memory-request: 1Gi

provisioner: csi.juicefs.com

reclaimPolicy: Delete

volumeBindingMode: Immediate

4.4 Verify the results

4.4.1 Create PVCs

As shown below, PVCs of the JuiceFS type (StorageClass juicefs-sc) have been created:

root@node1:~/juicefs-csi-driver# kubectl get pvc |grep liusu-juicefs

liusu-juicefs-pvc1 Bound pvc-157aa1a1-1e85-4396-a44e-7ea9d4a66b6e 10Gi RWX juicefs-sc 63m

liusu-juicefs-pvc2 Bound pvc-02a38e1b-e2d0-44c3-9917-090c008904cd 10Gi RWX juicefs-sc 62m

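liusu-juicefs-pvc3 Bound pvc-a45192ad-f882-446f-b3a2-70cbfb8565cd 10Gi RWX juicefs-sc 62m

4.4.2 Create a Pod

Create a workload from the centos container image, mount the PVC created in the previous step at /data in the container, and use a startup command that runs a script appending the current date to a file in a loop: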

root@node1:~/juicefs-csi-driver# kubectl get pods liusu-juicefs-test1-76bdcc77f7-8657p -o yaml

apiVersion: v1

kind: Pod

metadata:

annotations:

cni.projectcalico.org/containerID: f4862b25dad5b674a35c8c535220ab07a4da0034173dc1b30a90dfd9c6ad00f2

cni.projectcalico.org/podIP: 10.233.90.39/32

cni.projectcalico.org/podIPs: 10.233.90.39/32

juicefs-mountpod-pvc-157aa1a1-1e85-4396-a44e-7ea9d4a66b6e: default/juicefs-node1-pvc-157aa1a1-1e85-4396-a44e-7ea9d4a66b6e-cfsuav

kubesphere.io/creator: admin

kubesphere.io/imagepullsecrets: '{}'

logging.kubesphere.io/logsidecar-config: '{}'

creationTimestamp: "2023-11-15T09:19:43Z"

generateName: liusu-juicefs-test1-76bdcc77f7-

labels:

app: liusu-juicefs-test1

juicefs-uniqueid: ""

pod-template-hash: 76bdcc77f7

name: liusu-juicefs-test1-76bdcc77f7-8657p

namespace: default

ownerReferences:

- apiVersion: apps/v1

blockOwnerDeletion: true

controller: true

kind: ReplicaSet

name: liusu-juicefs-test1-76bdcc77f7

uid: ced8e092-65a9-41b0-ac13-7e3be08ac647

resourceVersion: "19965"

uid: 8bb042a2-38b1-492b-9951-2d5012785028

spec:

containers:

- command:

- sh

- -c

- while true; do echo $(date -u) >> /data/out1.txt; sleep 5; done

image: centos:7.9.2009

imagePullPolicy: IfNotPresent

name: container-t0tqdm

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /data

name: volume-8qnjf8

- mountPath: /var/run/secrets/kubernetes.io/serviceaccount

name: kube-api-access-w45h8

readOnly: true

dnsPolicy: ClusterFirst

enableServiceLinks: true

nodeName: node1

preemptionPolicy: PreemptLowerPriority

priority: 0

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

serviceAccount: default

serviceAccountName: default

terminationGracePeriodSeconds: 30

tolerations:

- effect: NoExecute

key: node.kubernetes.io/not-ready

operator: Exists

tolerationSeconds: 300

- effect: NoExecute

key: node.kubernetes.io/unreachable

operator: Exists

tolerationSeconds: 300

volumes:

- name: volume-8qnjf8

persistentVolumeClaim:

claimName: liusu-juicefs-pvc1

- name: kube-api-access-w45h8

projected:

defaultMode: 420

sources:

- serviceAccountToken:

expirationSeconds: 3607

path: token

- configMap:

items:

- key: ca.crt

path: ca.crt

name: kube-root-ca.crt

- downwardAPI:

items:

- fieldRef:

apiVersion: v1

fieldPath: metadata.namespace

path: namespace

status:

conditions:

- lastProbeTime: null

lastTransitionTime: "2023-11-15T09:19:43Z"

status: "True"

type: Initialized

- lastProbeTime: null

lastTransitionTime: "2023-11-15T09:20:44Z"

status: "True"

type: Ready

- lastProbeTime: null

lastTransitionTime: "2023-11-15T09:20:44Z"

status: "True"

type: ContainersReady

- lastProbeTime: null

lastTransitionTime: "2023-11-15T09:19:43Z"

status: "True"

type: PodScheduled

containerStatuses:

- containerID: docker://8af50aa5d547dc5dec1a6deaca900311051af2b70ba86de62f72382421433706

image: centos:7.9.2009

imageID: docker-pullable://centos@sha256:be65f488b7764ad3638f236b7b515b3678369a5124c47b8d32916d6487418ea4

lastState: {}

name: container-t0tqdm

ready: true

restartCount: 0

started: true

state:

running:

startedAt: "2023-11-15T09:20:44Z"

hostIP: 172.24.21.221

phase: Running

podIP: 10.233.90.39

podIPs:

- ip: 10.233.90.39

qosClass: BestEffort

startTime: "2023-11-15T09:19:43Z"

4.4.3 Inspect the metadata and the object storage

In the Redis client Pod, check database 0; the metadata has already been saved there:

juicefs-redis-redis-cluster:6379> select 0

OK

juicefs-redis-redis-cluster:6379> keys *

1) "{0}dirQuotaUsedInodes"

2) "{0}lastCleanupFiles"

3) "{0}dirQuota"

4) "{0}i2"

5) "{0}setting"

6) "{0}delSlices"

7) "{0}sessionInfos"

8) "{0}nextchunk"

9) "{0}d1"

10) "{0}c3_0"

11) "{0}totalInodes"

12) "{0}allSessions"

13) "{0}dirDataLength"

14) "{0}d2"

15) "{0}x1"

16) "{0}dirUsedInodes"

17) "{0}i9223372032828243968"

18) "{0}dirQuotaUsedSpace"

19) "{0}nextinode"

20) "{0}usedSpace"

21) "{0}i3"

22) "{0}lastCleanupSessions"

23) "{0}nextsession"

24) "{0}nextCleanupSlices"

25) "{0}dirUsedSpace"

26) "{0}lastCleanupTrash"

27) "{0}i1"

View the storage:

root@node1:~# kubectl get pvc -A |grep juicefs-minio

default juicefs-minio Bound pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c 50Gi RWO local 87m

root@node1:~#

root@node1:~# cd /var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c/

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c#

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c#

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c# ls -l

total 4

drwxr-sr-x 3 liusu liusu 4096 11月 15 17:20 test008

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c# cd test008/

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c/test008#

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c/test008# ls -l

total 4

drwxr-sr-x 4 liusu liusu 4096 11月 15 17:26 liusu-juicefs

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c/test008# cd liusu-juicefs/

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c/test008/liusu-juicefs#

root@node1:/var/openebs/local/pvc-c6cd65fa-5191-4dba-84d4-a392399b2c0c/test008/liusu-juicefs# ls -l

total 12

drwxr-sr-x 3 liusu liusu 4096 11月 15 17:20 chunks

-rw-r--r-- 1 liusu liusu 36 11月 15 17:20 juicefs_uuid

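drwxr-sr-x 2 liusu liusu 4096 11月 15 17:26 meta

The data has been stored in MinIO as chunks. In this environment MinIO is backed by an OpenEBS Local PV and is deployed in single-node mode; the scenario where MinIO is deployed as a cluster still needs to be verified later.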