Introduction to MinIO

I. MinIO Overview

1. Overview

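Object storage (Object Storage Service, OSS), also called object-based storage, is a way of storing and managing data as discrete units (objects). It provides object-level data storage built on top of a distributed system. Unlike the block and file-system storage we usually work with, object storage exposes RESTful APIs for reading and writing data, along with a rich set of SDKs, and is typically accessed as a network service.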

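The core advantage of object storage is that it exposes an API directly to applications and manages all buckets and objects in a flat structure. Every bucket and object has a globally unique ID, which makes it fast to look up an object and access its data. Object storage also supports policy-based automated management, so each application can control the data redundancy policy, access permissions, and data lifecycle of each bucket according to its business needs.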

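MinIO is an object storage system designed for massive data storage, artificial intelligence, and big-data analytics. It is open source under the Apache License v2.0 (newer releases are licensed under the GNU AGPL v3) and fully compatible with the Amazon S3 API. A single object can be up to 5 TB, which makes MinIO suitable for storing large volumes of images, videos, log files, backup data, and container or virtual machine images. MinIO is implemented mainly in Go, runs entirely in user space, and clients talk to the storage servers over HTTP/HTTPS.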

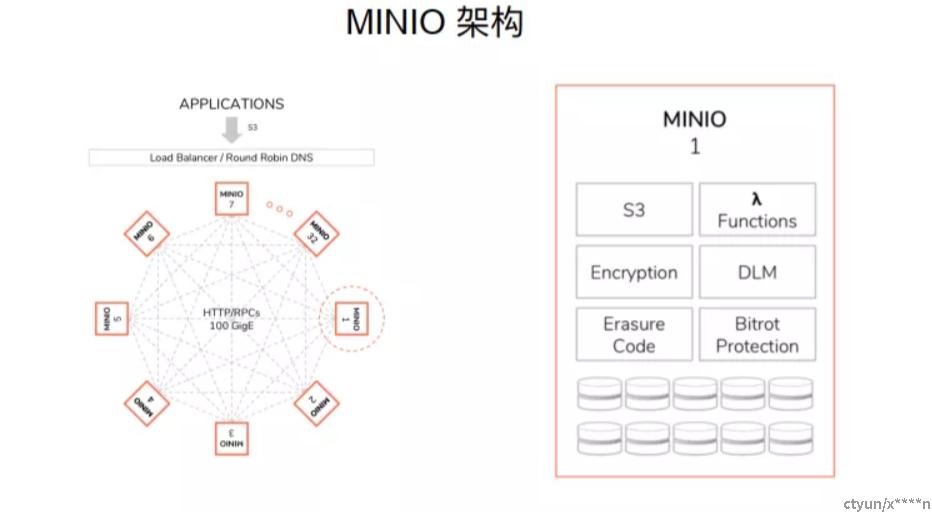

2. Architecture

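The left side of the figure shows a MinIO cluster, which is made up of multiple nodes that all play exactly the same role. Because there is no special node, the failure of any single node does not disrupt communication among the remaining nodes. Nodes communicate with each other over REST and RPC, mainly to implement distributed locking and file operations.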

The right side of the figure shows a single node. Each node independently exposes an S3-compatible service.

3. Concepts

Drive: can be thought of as a single disk.

Set: a group of Drives.

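- An object is stored on one Set.

- A cluster is divided into multiple Sets.

- The number of Drives in a Set is fixed. By default the system calculates it from the cluster size; it can also be set with MINIO_ERASURE_SET_DRIVE_COUNT.

- The Drives in a Set are spread across different nodes as far as possible.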

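In the figure above, each row is one machine node, and there are 32 nodes. Each small square inside a node is called a Drive, which can be thought of simply as a disk. A node has 32 Drives, that is, 32 disks. A Set is a separate concept: it is a group of Drives, and all the Drives marked in red form one Set.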

An object is ultimately stored within a single Set.
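The relationship between Drives, Sets, and objects can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the cluster shape (32 nodes with 32 Drives each), the set size of 16, and the CRC32-based `pick_set` function are all hypothetical and are not MinIO's actual placement algorithm.

```python
import zlib

def build_sets(nodes, drives_per_node, set_size):
    """Group (node, drive) pairs into fixed-size sets, spreading each set across nodes."""
    total = nodes * drives_per_node
    if total % set_size != 0:
        raise ValueError("total drive count must be a multiple of the set size")
    # Enumerate drive slot by drive slot so that consecutive entries sit on different nodes.
    drives = [(node, drive) for drive in range(drives_per_node) for node in range(nodes)]
    return [drives[i:i + set_size] for i in range(0, total, set_size)]

def pick_set(object_name, set_count):
    """Map an object name to exactly one set index (illustrative hash only)."""
    return zlib.crc32(object_name.encode("utf-8")) % set_count

sets = build_sets(nodes=32, drives_per_node=32, set_size=16)
print(len(sets))                         # how many sets the cluster is divided into
print(sets[0][:4])                       # the first drives of set 0, each on a different node
print(pick_set("photos/cat.jpg", len(sets)))
```

The point of the sketch is that the set size is fixed, every Drive belongs to exactly one Set, and a given object name always maps to the same Set.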

II. MinIO Advantages

- MinIO has a sound storage mechanism.

- MinIO uses an efficient erasure-coding algorithm.

- Data recovery is based on Reed-Solomon (RS) codes.

1. Storage mechanism

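MinIO uses erasure coding and checksums to protect data against hardware failures and silent data corruption. Even if half of the drives (N/2) are lost, the data can still be recovered.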

2. Erasure coding

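An erasure code is a mathematical algorithm for recovering lost or corrupted data. Erasure codes used in distributed storage systems today fall into three main classes: array codes (RAID5, RAID6, and so on), Reed-Solomon (RS) codes, and low-density parity-check (LDPC) codes. Erasure coding takes n blocks of original data, adds m blocks of redundant data, and can reconstruct the original data from any n of the n+m blocks. In other words, as long as no more than m blocks fail, the data can still be restored from the blocks that remain.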
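The simplest possible instance of this idea is a single XOR parity block (m = 1), as used by RAID5-style array codes. The sketch below is not Reed-Solomon and not what MinIO runs; the block contents are made up for illustration. It only shows that any n of the n+1 blocks are enough to rebuild the missing one.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR a list of equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

data = [b"mini", b"o-ob", b"ject"]   # n = 3 data blocks (hypothetical content)
parity = xor_blocks(data)            # m = 1 parity block

# Simulate losing data block 1, then rebuild it from the survivors plus parity.
survivors = [data[0], data[2], parity]
recovered = xor_blocks(survivors)
print(recovered == data[1])
```

With more than one parity block, plain XOR is no longer sufficient, which is where Reed-Solomon codes come in.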

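Erasure coding is a widely used data redundancy technique in storage. Compared with multi-replica replication, it achieves higher data reliability with less redundancy.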
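A quick calculation makes the comparison concrete. The sketch below computes the raw-to-usable storage ratio for replication and for an n+m erasure code; the specific values (3 replicas, 8+4, 8+8) are illustrative choices, not figures from a particular deployment.

```python
def replication_overhead(copies):
    """Raw bytes stored per byte of user data when keeping full copies."""
    return float(copies)

def erasure_overhead(n, m):
    """Raw bytes stored per byte of user data with n data and m parity blocks."""
    return (n + m) / n

# Three full copies survive the loss of 2 copies.
print(replication_overhead(3))
# 8 data + 4 parity blocks survive the loss of any 4 blocks.
print(erasure_overhead(8, 4))
# With parity equal to half of all drives (N/2), any half of the drives may be lost.
print(erasure_overhead(8, 8))
```

In this example the 8+4 layout tolerates more failures than three-way replication while storing half as many raw bytes.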

3. How RS codes recover data

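Reed-Solomon coding is an erasure code commonly used in storage. Its basic principle is as follows: given n data blocks d1, d2, …, dn and a positive integer m, RS generates m parity blocks c1, c2, …, cm from the n data blocks. For any n and m, the original data can be decoded from any n of the n original data blocks and m parity blocks, so RS tolerates the simultaneous loss of at most m data or parity blocks. (An erasure code can only tolerate lost data, not tampered data, which is where the name comes from.)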

Characteristics of RS:

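- Low redundancy, high reliability.

- High recovery cost. When a data block or parity block is lost, RS must read n data and parity blocks to recover it, and this recovery cost limits the reliability of RS to some extent.

- High update cost. Updating data amounts to re-encoding, which is expensive, so RS is usually applied to read-only or cold data.

- RS encoding relies on two log tables of size 2^w-1 and can usually work only with 8-bit or 16-bit words, so it cannot take full advantage of 64-bit servers; implementations may need additional optimization.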
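To illustrate the log tables mentioned in the last point, here is a minimal sketch of Galois-field arithmetic for w = 8. It assumes the primitive polynomial 0x11D, which is a common choice but not necessarily the one used by any particular RS implementation. Multiplication and division reduce to adding or subtracting logarithms and looking the result up in the antilog table.

```python
PRIMITIVE_POLY = 0x11D  # x^8 + x^4 + x^3 + x^2 + 1 (assumed; a common choice for w = 8)

def build_tables():
    """Build the antilog (exp) and log tables for GF(2^8)."""
    exp = [0] * 255          # 2^w - 1 entries: powers of the generator 2
    log = [0] * 256          # log[0] is unused because zero has no logarithm
    x = 1
    for i in range(255):
        exp[i] = x
        log[x] = i
        x <<= 1              # multiply by the generator
        if x & 0x100:        # reduce modulo the primitive polynomial on overflow
            x ^= PRIMITIVE_POLY
    return exp, log

EXP, LOG = build_tables()

def gf_mul(a, b):
    """Multiply two field elements via the log tables."""
    if a == 0 or b == 0:
        return 0
    return EXP[(LOG[a] + LOG[b]) % 255]

def gf_div(a, b):
    """Divide a by b in the field; b must be non-zero."""
    if b == 0:
        raise ZeroDivisionError("division by zero in GF(2^8)")
    if a == 0:
        return 0
    return EXP[(LOG[a] - LOG[b]) % 255]

print(gf_mul(2, 128))
print(gf_div(gf_mul(77, 37), 37))
```

Because every operation is a byte-sized table lookup, throughput on wide 64-bit hardware depends on further optimization such as vectorized lookups.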

III. MinIO Deployment

1. Single-node mode

1.1. Set up NFS

# Install nfs-utils and rpcbind (nfs-common is the Debian/Ubuntu package name and is not needed with yum)

yum install nfs-utils rpcbind -y

# Create the export directory (a directory needs the execute bit to be traversable)

mkdir -p /data/minio

chmod 777 /data/minio/

useradd nfsnobody

chown nfsnobody /data/minio/

# Add the export rule

vi /etc/exports

# Enter the following line

/data/minio *(rw,no_root_squash,no_all_squash,sync)

# Start the services

systemctl start rpcbind nfs

# Verify the export

showmount -e

Export list for master-46:

/data/minio *

1.2. Create the PV and PVC

# vim minio-pv.yaml

# Add the following content

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-minio
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: /data/minio
    server: 192.168.1.46

# Create

kubectl create -f minio-pv.yaml

# Verify

kubectl get pv -o wide

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE VOLUMEMODE

pv-minio 2Gi RWX Recycle Available 9s Filesystem

# Create the PVC manifest

vim minio-pvc.yaml

# Content

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-minio
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

# Create

kubectl create -f minio-pvc.yaml

# Verify

kubectl get pvc -o wide

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE VOLUMEMODE

pvc-minio Bound pv-minio 2Gi RWX 5s Filesystem
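Note that the claim requested 1Gi but reports a capacity of 2Gi, because a bound claim shows the capacity of the volume it is bound to. The sketch below is a deliberately simplified model of the matching rule, assuming only capacity and access modes matter; real Kubernetes binding also considers the storage class, volume mode, and selectors. The `to_bytes` and `can_bind` helpers are hypothetical names used only for illustration.

```python
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}

def to_bytes(quantity):
    """Convert a quantity such as '2Gi' to bytes (binary suffixes only)."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[:-len(suffix)]) * factor
    return int(quantity)

def can_bind(pv, pvc):
    """Simplified rule: the PV is large enough and offers every requested access mode."""
    big_enough = to_bytes(pv["capacity"]) >= to_bytes(pvc["request"])
    modes_ok = set(pvc["accessModes"]) <= set(pv["accessModes"])
    return big_enough and modes_ok

pv_minio = {"capacity": "2Gi", "accessModes": ["ReadWriteMany"]}
pvc_minio = {"request": "1Gi", "accessModes": ["ReadWriteMany"]}

print(can_bind(pv_minio, pvc_minio))
print(can_bind(pv_minio, {"request": "3Gi", "accessModes": ["ReadWriteMany"]}))
```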

1.3. Create the Pod and Service

# Create the manifest

vim minio.yaml

# Add the following content

apiVersion: v1
kind: Service
metadata:
  name: minio-svc
  labels:
    app: minio
spec:
  type: NodePort
  ports:
    - name: minio-port
      protocol: TCP
      port: 9000
      nodePort: 32600
      targetPort: 9000
  selector:
    app: minio
---
apiVersion: v1
kind: Pod
metadata:
  name: minio
  labels:
    app: minio
spec:
  containers:
    - name: minio
      env:
        - name: MINIO_ACCESS_KEY
          value: "minio"
        - name: MINIO_SECRET_KEY
          value: "minio123"
      image: minio/minio:latest
      args:
        - server
        - /data
      ports:
        - name: minio
          containerPort: 9000
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: pvc-minio

# Create

kubectl apply -f minio.yaml

# Check the Pod

kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

minio 1/1 Running 0 13s 10.233.118.8 master-46 <none> <none>

# Check the Service

kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

minio-svc NodePort 10.233.27.17 <none> 9000:32600/TCP 23s app=minio
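Before moving on to the client test, it can be useful to confirm that the NodePort actually answers. The sketch below probes MinIO's liveness endpoint (`/minio/health/live`) with the Python standard library. The helper names `health_url` and `is_alive` are hypothetical, and the host and port are the ones used in this walkthrough.

```python
import urllib.error
import urllib.request

def health_url(host, port, scheme="http"):
    """Build the URL of the MinIO liveness endpoint for a given host and port."""
    return f"{scheme}://{host}:{port}/minio/health/live"

def is_alive(url, timeout=3.0):
    """Return True if the endpoint answers with HTTP 200, False on any connection error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False

url = health_url("192.168.1.46", 32600)
print(url)
# Call is_alive(url) from a machine that can reach the node to run the probe.
```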

1.4. Test

On node 192.168.1.46:

# Start the mc client container

docker run -it --entrypoint=/bin/sh minio/mc

# Add the server (alias minio, access key minio, secret key minio123)

mc alias set minio http://192.168.1.46:32600 minio minio123

# Create the bucket bucket-t1 on the MinIO server

mc mb minio/bucket-t1

sh-4.4# echo "hello word" >tt.txt

sh-4.4# put tt.txt minio/bucket-t1/

sh: put: command not found

sh-4.4# mc cp tt.txt minio/bucket-t1/

tt.txt: 11 B / 11 B ┃▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓┃ 483 B/s

sh-4.4# mc ls minio/bucket-t1

[2021-04-08 09:54:40 UTC] 11B tt.txt

On node 192.168.1.48:

# Start the mc client container

docker run -it --entrypoint=/bin/sh minio/mc

# Add the server (alias minio, access key minio, secret key minio123)

mc alias set minio http://minio-svc:9000 minio minio123

2. Cluster mode

2.1. Preparation

## Partition, format, and mount the disks. At least 4 drives (or partitions) are required.

(omitted)

## Label the nodes

kubectl label node master-49 minio-server=true

kubectl label node master-50 minio-server=true

2.2. Create the Service

cat minio-distributed-headless-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: minio
  labels:
    app: minio
spec:
  publishNotReadyAddresses: true
  clusterIP: None
  ports:
    - port: 9000
      name: minio
  selector:
    app: minio

kubectl create -f minio-distributed-headless-service.yaml

kubectl get service -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.233.0.1 <none> 443/TCP 4h1m <none>

minio ClusterIP None <none> 9000/TCP 53m app=minio

2.3. Create the DaemonSet

cat minio-distributed-daemonset.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: minio
  labels:
    app: minio
spec:
  selector:
    matchLabels:
      app: minio
  template:
    metadata:
      labels:
        app: minio
    spec:
      # We only deploy minio to the specified nodes. Select your nodes with `kubectl label node hostname1 minio-server=true`.
      nodeSelector:
        minio-server: "true"
      # This is to maximize network performance; the headless service can be used to connect to a random host.
      hostNetwork: true
      # We're just using a hostPath. This path must be the same on all servers, and should be the largest, fastest block device you can fit.
      volumes:
        - name: storage
          hostPath:
            path: /mounts/minio1
      containers:
        - name: minio
          env:
            - name: MINIO_ACCESS_KEY
              value: "minio"
            - name: MINIO_SECRET_KEY
              value: "minio123"
          image: minio/minio:RELEASE.2020-04-04T05-39-31Z
          # Unfortunately you must manually define each server. Perhaps autodiscovery via DNS can be implemented in the future.
          args:
            - server
            - http://master-{49...50}/mnt/disk{1...3}/minio/minio1/data
          ports:
            - containerPort: 9000
          volumeMounts:
            - name: storage
              mountPath: /mounts/minio1/

kubectl create -f minio-distributed-daemonset.yaml

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

minio-lrvwj 1/1 Running 0 41m 10.217.148.50 master-50 <none> <none>

minio-n4vdx 1/1 Running 0 41m 10.217.148.49 master-49 <none> <none>
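The `{49...50}` and `{1...3}` ranges in the server argument are expanded into one endpoint per host and drive. The sketch below reproduces that expansion in Python to show which endpoints the single argument stands for. It is a simplified reimplementation for illustration: it handles only plain numeric ranges, and the order of the results is not claimed to match MinIO's own expansion.

```python
import itertools
import re

ELLIPSIS = re.compile(r"\{(\d+)\.\.\.(\d+)\}")

def expand(pattern):
    """Expand every {a...b} numeric range in the pattern into concrete strings."""
    # With two capture groups, split() yields: literal, start, end, literal, start, end, ..., literal
    parts = ELLIPSIS.split(pattern)
    literals = parts[0::3]
    ranges = [range(int(lo), int(hi) + 1) for lo, hi in zip(parts[1::3], parts[2::3])]
    results = []
    for combo in itertools.product(*ranges):
        pieces = [literals[0]]
        for value, literal in zip(combo, literals[1:]):
            pieces.append(str(value))
            pieces.append(literal)
        results.append("".join(pieces))
    return results

endpoints = expand("http://master-{49...50}/mnt/disk{1...3}/minio/minio1/data")
for endpoint in endpoints:
    print(endpoint)
```

Two hosts with three drives each give six endpoints in total, which satisfies the minimum of four drives mentioned in the preparation step.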

2.4. Deploy mc

# Start the mc client container

docker run -it --entrypoint=/bin/sh minio/mc

# Add the server (alias minio, access key minio, secret key minio123)

mc alias set minio http://minio-svc:9000 minio minio123

# Create the bucket bucket-t1 on the MinIO server

mc mb minio/bucket-t1

sh-4.4# echo "test" >tt.txt

# Upload the file

sh-4.4# mc cp tt.txt minio/bucket-t1

tt.txt: 5 B / 5 B ┃▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓┃ 119 B/s

sh-4.4# mc ls minio

[2021-04-07 07:14:17 UTC] 0B bucket-t1/

sh-4.4# mc ls minio/bucket-t1

[2021-04-07 07:14:17 UTC] 5B tt.txt

# Delete the file

mc rm minio/bucket-t1/tt.txt

Removing `minio/bucket-t1/tt.txt`.

sh-4.4# mc ls minio/bucket-t1

sh-4.4# mc ls minio

[2021-04-14 06:53:06 UTC] 0B bucket-t1/

# Delete the bucket

sh-4.4# mc rb minio/bucket-t1

Removed `minio/bucket-t1` successfully.

sh-4.4# mc ls minio

IV. Other

1. fio test

1.1 Install fio

yum install libaio-devel fio -y

wget http://brick.kernel.dk/snaps/fio-2.2.10.tar.gz

tar -zxvf fio-2.2.10.tar.gz

cd fio-2.2.10

./configure

make && make install

fio -filename=/dev/sdc1 -direct=1 -iodepth 1 -thread -rw=randrw -rwmixread=30 -ioengine=psync -bs=8k -size=1G -numjobs=30 -runtime=1000 -group_reporting -name=rw_read30_8k

rw_read30_8k: (g=0): rw=randrw, bs=8K-8K/8K-8K/8K-8K, ioengine=psync, iodepth=1

...

fio-2.2.10

Starting 30 threads

Jobs: 30 (f=30): [m(30)] [100.0% done] [51228KB/117.7MB/0KB /s] [6403/15.7K/0 iops] [eta 00m:00s]

rw_read30_8k: (groupid=0, jobs=30): err= 0: pid=16046: Thu Apr 22 16:34:32 2021

read : io=9283.7MB, bw=51602KB/s, iops=6450, runt=184224msec

clat (usec): min=336, max=79840, avg=807.74, stdev=570.77

lat (usec): min=336, max=79841, avg=808.05, stdev=570.82

clat percentiles (usec):

| 1.00th=[ 458], 5.00th=[ 498], 10.00th=[ 524], 20.00th=[ 564],

| 30.00th=[ 596], 40.00th=[ 636], 50.00th=[ 676], 60.00th=[ 724],

| 70.00th=[ 796], 80.00th=[ 908], 90.00th=[ 1128], 95.00th=[ 1448],

| 99.00th=[ 3216], 99.50th=[ 4448], 99.90th=[ 6752], 99.95th=[ 7968],

| 99.99th=[11968]

bw (KB /s): min= 1037, max= 2411, per=3.34%, avg=1724.63, stdev=188.75

write: io=21436MB, bw=119153KB/s, iops=14894, runt=184224msec

clat (usec): min=867, max=89872, avg=1656.82, stdev=933.76

lat (usec): min=868, max=89872, avg=1657.42, stdev=933.83

clat percentiles (usec):

| 1.00th=[ 1096], 5.00th=[ 1192], 10.00th=[ 1240], 20.00th=[ 1320],

| 30.00th=[ 1368], 40.00th=[ 1432], 50.00th=[ 1496], 60.00th=[ 1560],

| 70.00th=[ 1640], 80.00th=[ 1768], 90.00th=[ 2064], 95.00th=[ 2576],

| 99.00th=[ 5024], 99.50th=[ 6048], 99.90th=[ 9024], 99.95th=[10816],

| 99.99th=[43264]

bw (KB /s): min= 2400, max= 4672, per=3.34%, avg=3981.64, stdev=214.69

lat (usec) : 500=1.65%, 750=17.57%, 1000=6.64%

lat (msec) : 2=65.61%, 4=7.16%, 10=1.32%, 20=0.04%, 50=0.01%

lat (msec) : 100=0.01%

cpu : usr=0.23%, sys=0.97%, ctx=3978440, majf=0, minf=10

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued : total=r=1188300/w=2743860/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: io=9283.7MB, aggrb=51602KB/s, minb=51602KB/s, maxb=51602KB/s, mint=184224msec, maxt=184224msec

WRITE: io=21436MB, aggrb=119153KB/s, minb=119153KB/s, maxb=119153KB/s, mint=184224msec, maxt=184224msec

Disk stats (read/write):

sdc: ios=1188206/2743495, merge=0/0, ticks=894573/4360279, in_queue=5255261, util=99.67%
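The summary lines of this report can be cross-checked against the job parameters. The sketch below parses the two `read`/`write` summary lines (copied from the output above) and verifies that bandwidth is roughly IOPS times the 8 KB block size, and that the read share is close to the requested `-rwmixread=30`. The regular expression is written for this fio 2.x output format and is an assumption for other versions.

```python
import re

SUMMARY = re.compile(r"^\s*(read|write)\s*:\s*io=\S+,\s*bw=(\d+)KB/s,\s*iops=(\d+)")

def parse_summary(lines):
    """Return {direction: (bandwidth_kb_per_s, iops)} from fio summary lines."""
    result = {}
    for line in lines:
        match = SUMMARY.match(line)
        if match:
            result[match.group(1)] = (int(match.group(2)), int(match.group(3)))
    return result

report = [
    "read : io=9283.7MB, bw=51602KB/s, iops=6450, runt=184224msec",
    "write: io=21436MB, bw=119153KB/s, iops=14894, runt=184224msec",
]
stats = parse_summary(report)
BLOCK_KB = 8  # from -bs=8k

for direction, (bandwidth, iops) in stats.items():
    # Reported bandwidth next to the value implied by IOPS x block size.
    print(direction, bandwidth, iops * BLOCK_KB)

read_share = stats["read"][1] / (stats["read"][1] + stats["write"][1])
print(round(read_share, 3))
```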

1.2 fio test

2. COSBench test

2.1 Install COSBench

2.1.1 Single controller, single driver

wget https://github.com/intel-cloud/cosbench/releases/download/v0.4.2.c4/0.4.2.c4.zip

yum install nmap-ncat java curl java-1.8.0-openjdk-devel unzip -y

unzip 0.4.2.c4.zip

cd 0.4.2.c4

grep java -nr cosbench-start.sh

46:/usr/bin/nohup java -Dcom.amazonaws.services.s3.disableGetObjectMD5Validation=true -Dcosbench.tomcat.config=$TOMCAT_CONFIG -server -cp main/* org.eclipse.equinox.launcher.Main -configuration $OSGI_CONFIG -console $OSGI_CONSOLE_PORT 1> $BOOT_LOG 2>&1 &

chmod +x *.sh

sh -x start-all.sh

+ bash start-driver.sh

Launching osgi framwork ...

Successfully launched osgi framework!

Booting cosbench driver ...

.

Starting cosbench-log_0.4.2 [OK]

Starting cosbench-tomcat_0.4.2 [OK]

Starting cosbench-config_0.4.2 [OK]

Starting cosbench-http_0.4.2 [OK]

Starting cosbench-cdmi-util_0.4.2 [OK]

Starting cosbench-core_0.4.2 [OK]

Starting cosbench-core-web_0.4.2 [OK]

Starting cosbench-api_0.4.2 [OK]

Starting cosbench-mock_0.4.2 [OK]

Starting cosbench-ampli_0.4.2 [OK]

Starting cosbench-swift_0.4.2 [OK]

Starting cosbench-keystone_0.4.2 [OK]

Starting cosbench-httpauth_0.4.2 [OK]

Starting cosbench-s3_0.4.2 [OK]

Starting cosbench-librados_0.4.2 [OK]

Starting cosbench-scality_0.4.2 [OK]

Starting cosbench-cdmi-swift_0.4.2 [OK]

Starting cosbench-cdmi-base_0.4.2 [OK]

Starting cosbench-driver_0.4.2 [OK]

Starting cosbench-driver-web_0.4.2 [OK]

Successfully started cosbench driver!

Listening on port 0.0.0.0/0.0.0.0:18089 ...

Persistence bundle starting...

Persistence bundle started.

----------------------------------------------

!!! Service will listen on web port: 18088 !!!

----------------------------------------------

+ echo

+ echo ======================================================

======================================================

+ echo

+ bash start-controller.sh

Launching osgi framwork ...

Successfully launched osgi framework!

Booting cosbench controller ...

.

Starting cosbench-log_0.4.2 [OK]

Starting cosbench-tomcat_0.4.2 [OK]

Starting cosbench-config_0.4.2 [OK]

Starting cosbench-core_0.4.2 [OK]

Starting cosbench-core-web_0.4.2 [OK]

Starting cosbench-controller_0.4.2 [OK]

Starting cosbench-controller-web_0.4.2 [OK]

Successfully started cosbench controller!

Listening on port 0.0.0.0/0.0.0.0:19089 ...

Persistence bundle starting...

Persistence bundle started.

----------------------------------------------

!!! Service will listen on web port: 19088 !!!

----------------------------------------------

2.1.2 Single controller, multiple drivers on one server

## First start three drivers on one node

sh ./start-driver.sh 3

Launching osgi framwork ...

Successfully launched osgi framework!

Booting cosbench driver ...

.

Starting cosbench-log_0.4.2 [OK]

Starting cosbench-tomcat_0.4.2 [OK]

Starting cosbench-config_0.4.2 [OK]

Starting cosbench-http_0.4.2 [OK]

Starting cosbench-cdmi-util_0.4.2 [OK]

Starting cosbench-core_0.4.2 [OK]

Starting cosbench-core-web_0.4.2 [OK]

Starting cosbench-api_0.4.2 [OK]

Starting cosbench-mock_0.4.2 [OK]

Starting cosbench-ampli_0.4.2 [OK]

Starting cosbench-swift_0.4.2 [OK]

Starting cosbench-keystone_0.4.2 [OK]

Starting cosbench-httpauth_0.4.2 [OK]

Starting cosbench-s3_0.4.2 [OK]

Starting cosbench-librados_0.4.2 [OK]

Starting cosbench-scality_0.4.2 [OK]

Starting cosbench-cdmi-swift_0.4.2 [OK]

Starting cosbench-cdmi-base_0.4.2 [OK]

Starting cosbench-driver_0.4.2 [OK]

Starting cosbench-driver-web_0.4.2 [OK]

Successfully started cosbench driver!

Listening on port 0.0.0.0/0.0.0.0:18089 ...

Persistence bundle starting...

Persistence bundle started.

----------------------------------------------

!!! Service will listen on web port: 18088 !!!

----------------------------------------------

Launching osgi framwork ...

Successfully launched osgi framework!

Booting cosbench driver ...

.

Starting cosbench-log_0.4.2 [OK]

Starting cosbench-tomcat_0.4.2 [OK]

Starting cosbench-config_0.4.2 [OK]

Starting cosbench-http_0.4.2 [OK]

Starting cosbench-cdmi-util_0.4.2 [OK]

Starting cosbench-core_0.4.2 [OK]

Starting cosbench-core-web_0.4.2 [OK]

Starting cosbench-api_0.4.2 [OK]

Starting cosbench-mock_0.4.2 [OK]

Starting cosbench-ampli_0.4.2 [OK]

Starting cosbench-swift_0.4.2 [OK]

Starting cosbench-keystone_0.4.2 [OK]

Starting cosbench-httpauth_0.4.2 [OK]

Starting cosbench-s3_0.4.2 [OK]

Starting cosbench-librados_0.4.2 [OK]

Starting cosbench-scality_0.4.2 [OK]

Starting cosbench-cdmi-swift_0.4.2 [OK]

Starting cosbench-cdmi-base_0.4.2 [OK]

Starting cosbench-driver_0.4.2 [OK]

Starting cosbench-driver-web_0.4.2 [OK]

Successfully started cosbench driver!

Listening on port 0.0.0.0/0.0.0.0:18189 ...

Persistence bundle starting...

Persistence bundle started.

----------------------------------------------

!!! Service will listen on web port: 18188 !!!

----------------------------------------------

Launching osgi framwork ...

Successfully launched osgi framework!

Booting cosbench driver ...

.

Starting cosbench-log_0.4.2 [OK]

Starting cosbench-tomcat_0.4.2 [OK]

Starting cosbench-config_0.4.2 [OK]

Starting cosbench-http_0.4.2 [OK]

Starting cosbench-cdmi-util_0.4.2 [OK]

Starting cosbench-core_0.4.2 [OK]

Starting cosbench-core-web_0.4.2 [OK]

Starting cosbench-api_0.4.2 [OK]

Starting cosbench-mock_0.4.2 [OK]

Starting cosbench-ampli_0.4.2 [OK]

Starting cosbench-swift_0.4.2 [OK]

Starting cosbench-keystone_0.4.2 [OK]

Starting cosbench-httpauth_0.4.2 [OK]

Starting cosbench-s3_0.4.2 [OK]

Starting cosbench-librados_0.4.2 [OK]

Starting cosbench-scality_0.4.2 [OK]

Starting cosbench-cdmi-swift_0.4.2 [OK]

Starting cosbench-cdmi-base_0.4.2 [OK]

Starting cosbench-driver_0.4.2 [OK]

Starting cosbench-driver-web_0.4.2 [OK]

Successfully started cosbench driver!

Listening on port 0.0.0.0/0.0.0.0:18289 ...

Persistence bundle starting...

Persistence bundle started.

----------------------------------------------

!!! Service will listen on web port: 18288 !!!

----------------------------------------------

[root@controller-1 0.4.2.c4]# netstat -lntp |grep 88

tcp6 0 0 :::18088 :::* LISTEN 16551/java

tcp6 0 0 :::18188 :::* LISTEN 17458/java

tcp6 0 0 :::18288 :::* LISTEN 18127/java

## Edit the controller configuration file

cat conf/controller.conf

[controller]

concurrency=1

drivers = 3

log_level = DEBUG

log_file = log/system.log

archive_dir = archive

[driver1]

name = driver1

url = http://12.147.0.6:18088/driver

[driver2]

name = driver2

url = http://12.147.0.6:18188/driver

[driver3]

name = driver3

url = http://12.147.0.6:18288/driver

## Start the controller

sh ./start-controller.sh
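When the number of drivers changes, the `[driverN]` sections of `controller.conf` follow a regular pattern: in the listing above, each additional driver's web port is 100 higher than the previous one, starting at 18088. The sketch below generates such a file with Python's `configparser`. The function name `controller_conf` and its `base_port`/`port_step` parameters are hypothetical and simply mirror the ports observed in this walkthrough.

```python
import configparser
import io

def controller_conf(host, driver_count, base_port=18088, port_step=100):
    """Render a controller.conf with one [driverN] section per driver."""
    config = configparser.ConfigParser()
    config["controller"] = {
        "concurrency": "1",
        "drivers": str(driver_count),
        "log_level": "DEBUG",
        "log_file": "log/system.log",
        "archive_dir": "archive",
    }
    for index in range(driver_count):
        name = f"driver{index + 1}"
        port = base_port + index * port_step
        config[name] = {"name": name, "url": f"http://{host}:{port}/driver"}
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()

text = controller_conf("12.147.0.6", 3)
print(text)
```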

2.2 Run the COSBench test

Single controller, single driver

cat conf/s3-config-sample.xml

<?xml version="1.0" encoding="UTF-8" ?>

<workload name="s3-sample" description="sample benchmark for s3">

<storage type="s3" config="accesskey=minio;secretkey=minio123;endpoint=http://192.168.1.46:32600" />

<workflow>

<workstage name="init">

<work type="init" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />

</workstage>

<workstage name="prepare">

<work type="prepare" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,10);sizes=c(64)KB" />

</workstage>

<workstage name="main">

<work name="main" workers="8" runtime="30">

<operation type="read" ratio="80" config="cprefix=s3testqwer;containers=u(1,2);objects=u(1,10)" />

<operation type="write" ratio="20" config="cprefix=s3testqwer;containers=u(1,2);objects=u(11,20);sizes=c(64)KB" />

</work>

</workstage>

<workstage name="cleanup">

<work type="cleanup" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,20)" />

</workstage>

<workstage name="dispose">

<work type="dispose" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />

</workstage>

</workflow>

</workload>

sh cli.sh submit conf/s3-config-sample.xml
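Each `config` attribute in the workload is a semicolon-separated list of `key=value` pairs, and selectors such as `r(1,2)` (range), `u(1,10)` (uniform random), and `c(64)` (constant) describe which containers, objects, and sizes a stage touches. The sketch below, using hypothetical helper names `parse_config` and `span`, parses the prepare stage from the workload above and works out how many objects it creates.

```python
import re
import xml.etree.ElementTree as ET

SELECTOR = re.compile(r"([a-z])\((\d+)(?:,(\d+))?\)")

def parse_config(config):
    """Split 'k1=v1;k2=v2' into a dictionary (values may themselves contain '=')."""
    return dict(item.split("=", 1) for item in config.split(";") if item)

def span(selector):
    """Number of integers covered by a two-argument selector such as r(1,10) or u(1,10)."""
    match = SELECTOR.match(selector)
    if match is None or match.group(3) is None:
        raise ValueError(f"not a two-argument selector: {selector}")
    return int(match.group(3)) - int(match.group(2)) + 1

work = ET.fromstring(
    '<work type="prepare" workers="1" '
    'config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,10);sizes=c(64)KB" />'
)
settings = parse_config(work.get("config"))
object_count = span(settings["containers"]) * span(settings["objects"])

print(settings)
print(object_count)  # objects created by the prepare stage across all containers
```

In this workload the main stage then reads from those prepared objects and writes new ones numbered 11 to 20, so reads and writes never target the same object.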

Single controller, multiple drivers

<?xml version="1.0" encoding="UTF-8" ?>

<workload name="s3-sample" description="sample benchmark for s3">

<storage type="s3" config="accesskey=minio;secretkey=minio123;endpoint=http://192.168.1.46:32600" />

<workflow>

<workstage name="init">

<work type="init" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />

</workstage>

<workstage name="prepare">

<work type="prepare" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,50);sizes=c(64)KB" />

</workstage>

<workstage name="main">

<work name="main" workers="24" runtime="50">

<operation type="read" ratio="80" config="cprefix=s3testqwer;containers=u(1,2);objects=u(1,50)" />

<operation type="write" ratio="20" config="cprefix=s3testqwer;containers=u(1,2);objects=u(51,100);sizes=c(64)KB" />

</work>

</workstage>

<workstage name="cleanup">

<work type="cleanup" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,100)" />

</workstage>

<workstage name="dispose">

<work type="dispose" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />

</workstage>

</workflow>

</workload>