As described in KIP-595 (A Raft Protocol for the Metadata Quorum):

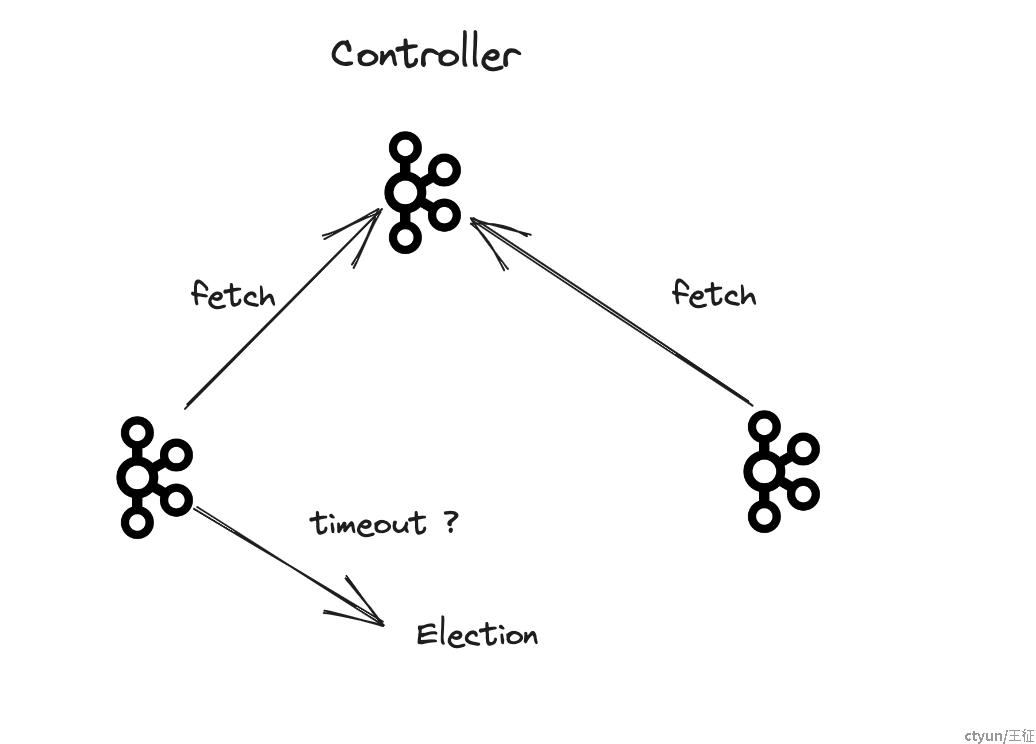

- When a Quorum node's Fetch from the Leader times out, a new Controller Leader election is started.

private long pollFollowerAsObserver(FollowerState state, long currentTimeMs) {

if (state.hasFetchTimeoutExpired(currentTimeMs)) {

return maybeSendAnyVoterFetch(currentTimeMs);

} else {

final long backoffMs;

ConnectionState connection = requestManager.getOrCreate(state.leaderId());

if (connection.hasRequestTimedOut(currentTimeMs)) {

backoffMs = maybeSendAnyVoterFetch(currentTimeMs);

connection.reset();

} else if (connection.isBackingOff(currentTimeMs)) {

backoffMs = maybeSendAnyVoterFetch(currentTimeMs);

} else {

backoffMs = maybeSendFetchOrFetchSnapshot(state, currentTimeMs);

}

return Math.min(backoffMs, state.remainingFetchTimeMs(currentTimeMs));

}

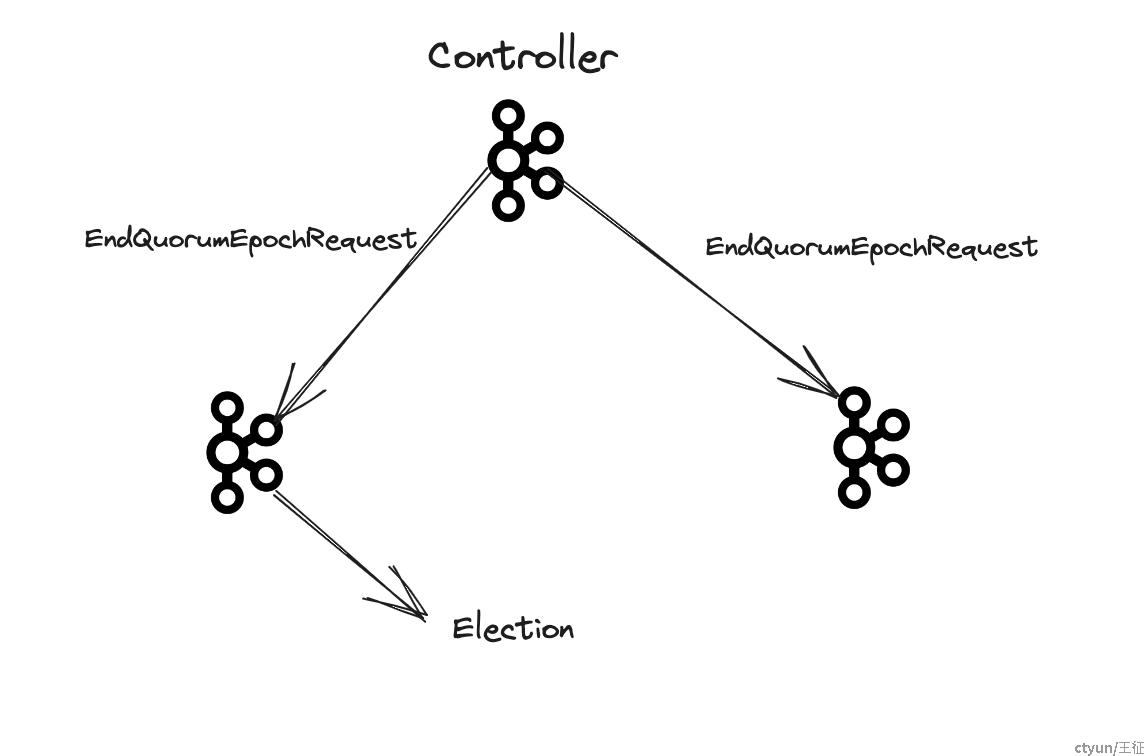

}- 当Controller节点降级Leader身份时,会向其它的Quorum节点发送

EndQuorumEpochRequest请求,从而Quorum节点进行下一轮Leader的选举。
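The excerpt above is the observer path, which only redirects the Fetch to another voter. For the first case (a voter whose Fetch to the Leader times out), the behavior can be sketched as follows. This is a minimal illustration, not Kafka's code: `VoterFetchTimer`, its `Role` enum, and all method names are hypothetical, and the timeout parameter merely stands in for the fetch timeout described in KIP-595.

```java
// Hypothetical sketch, not part of Kafka: a voter that becomes a candidate
// once it has gone a full fetch timeout without a successful Fetch.
final class VoterFetchTimer {
    enum Role { FOLLOWER, CANDIDATE }

    private final long fetchTimeoutMs;   // assumed stand-in for the fetch timeout config
    private long lastSuccessfulFetchMs;
    private int epoch;
    private Role role = Role.FOLLOWER;

    VoterFetchTimer(long fetchTimeoutMs, int epoch, long nowMs) {
        this.fetchTimeoutMs = fetchTimeoutMs;
        this.epoch = epoch;
        this.lastSuccessfulFetchMs = nowMs;
    }

    // Called whenever a Fetch response from the current leader arrives.
    void onFetchResponse(long nowMs) {
        lastSuccessfulFetchMs = nowMs;
    }

    // Called periodically; bumps the epoch and turns candidate on timeout.
    Role poll(long nowMs) {
        if (role == Role.FOLLOWER && nowMs - lastSuccessfulFetchMs >= fetchTimeoutMs) {
            epoch++;
            role = Role.CANDIDATE;
        }
        return role;
    }

    int epoch() {
        return epoch;
    }
}
```

The point of the sketch is that the trigger is purely local: the voter needs no message from anyone to decide that the Leader is gone, which is why the remaining voters can elect a new Leader while the old one is unreachable.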

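However, when a network partition separates the Controller from the Quorum nodes, the remaining Quorum nodes in the cluster start a new election. In the current KRaft implementation, the isolated Leader cannot step down on its own, so the cluster ends up with more than one Leader, which is a split-brain.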

Solution to the split-brain problem

KIP-595 already discusses this problem, but the fix has not been implemented in the current KRaft. The following is quoted from KIP-595:

In the pull-based model, however, say a new leader has been elected with a new epoch and everyone has learned about it except the old leader (e.g. that leader was not in the voters anymore and hence not receiving the BeginQuorumEpoch as well), then that old leader would not be notified by anyone about the new leader / epoch and become a pure "zombie leader", as there is no regular heartbeats being pushed from leader to the follower. This could lead to stale information being served to the observers and clients inside the cluster. To resolve this issue, we will piggy-back on the "quorum.fetch.timeout.ms" config, such that if the leader did not receive Fetch requests from a majority of the quorum for that amount of time, it would begin a new election and start sending VoteRequest to voter nodes in the cluster to understand the latest quorum. If it couldn't connect to any known voter, the old leader shall keep starting new elections and bump the epoch.

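In short, the solution is that when the Controller has not received Fetch requests from a majority of the Quorum nodes within the fetch timeout, it starts a new Leader election, which demotes the current Controller node (the isolated Leader).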
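The majority check described above can be sketched as follows. This is a minimal illustration under stated assumptions, not Kafka's implementation: `LeaderFetchTracker` and its methods are hypothetical names, the timeout parameter stands in for the "quorum.fetch.timeout.ms" setting mentioned in the quote, and the leader is assumed to count itself toward the majority when it is a voter.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch, not part of Kafka: the leader remembers when each voter
// last fetched and resigns once fewer than a majority have fetched recently.
final class LeaderFetchTracker {
    private final int localId;
    private final Set<Integer> voterIds;
    private final long fetchTimeoutMs;   // assumed stand-in for quorum.fetch.timeout.ms
    private final Map<Integer, Long> lastFetchTimeMs = new HashMap<>();

    LeaderFetchTracker(int localId, Set<Integer> voterIds, long fetchTimeoutMs, long nowMs) {
        this.localId = localId;
        this.voterIds = new HashSet<>(voterIds);
        this.fetchTimeoutMs = fetchTimeoutMs;
        // Start every voter's timer at the moment this node became leader.
        for (int id : this.voterIds) {
            lastFetchTimeMs.put(id, nowMs);
        }
    }

    // Record a Fetch request received from a voter.
    void onFetch(int voterId, long nowMs) {
        if (voterIds.contains(voterId)) {
            lastFetchTimeMs.put(voterId, nowMs);
        }
    }

    // True when fewer than a majority of voters (leader included, if it is
    // a voter) have been heard from within the fetch timeout.
    boolean shouldResign(long nowMs) {
        int active = voterIds.contains(localId) ? 1 : 0;
        for (int id : voterIds) {
            if (id == localId) {
                continue;
            }
            if (nowMs - lastFetchTimeMs.get(id) < fetchTimeoutMs) {
                active++;
            }
        }
        int majority = voterIds.size() / 2 + 1;
        return active < majority;
    }
}
```

With three voters, the leader stays in office as long as one other voter keeps fetching. Once it is partitioned from both, `shouldResign` turns true after one timeout, at which point the node would give up leadership and start a new election as the KIP describes.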