在某些业务场景下,用户需要将表按天按月等周期进行分区划分,定时执行数据处理任务,这就需要使用方手动管理分区,否则可能由于使用方没有创建分区导致数据导入失败,这给使用方带来了额外的维护成本。我们在使用Hadoop 分布式环境的hive时,可以利用其本身自带的动态分区功能,基于HDFS上数据存储位置来判断分区的名称,从而建立分区。在进行数据加载的时候,按照分区字段的值,自动地将相应的数据划分到各自的分区内。

Doris的动态分区功能是在0.12 版本中引入的新功能,可以实现动态添加分区及动态删除分区的功能。但是相对于习惯于使用Hive的用户来说还是有较大的区别。Doris目前只支持根据时间字段的Range值来进行动态分区设置。通过动态分区功能,用户可以在建表时设定动态分区的规则。FE 会启动一个后台线程,根据用户指定的规则创建或删除分区。用户也可以在运行时对现有规则进行变更。

接下来,我们在doris数据库里实际操作演示一下具体的动态分区功能。

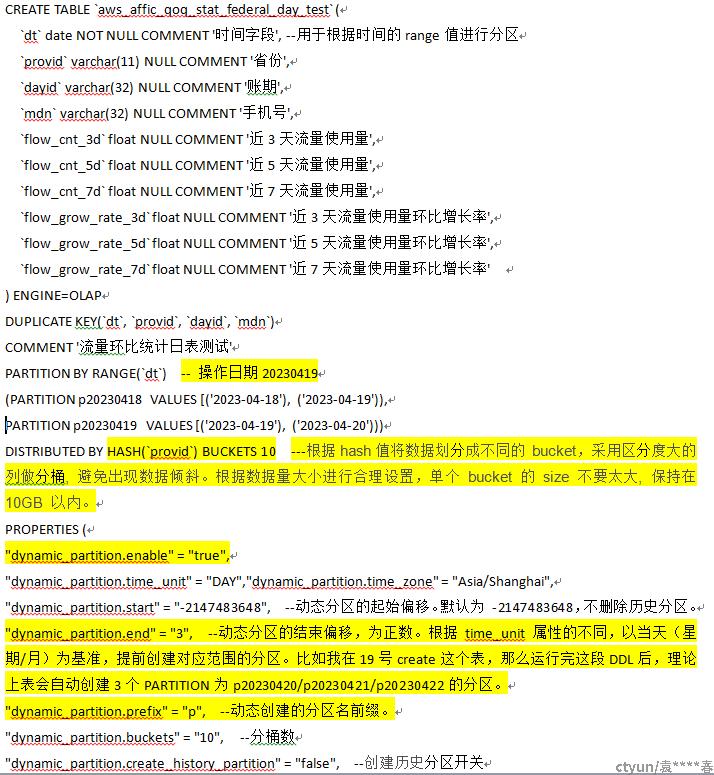

首先创建一张测试表并配置好动态分区规则参数。

CREATE TABLE `aws_affic_qoq_stat_federal_day_test` (

`dt` date NOT NULL COMMENT '时间字段', --用于根据时间的range值进行分区

`provid` varchar(11) NULL COMMENT '省份',

`dayid` varchar(32) NULL COMMENT '账期',

`mdn` varchar(32) NULL COMMENT '手机号',

`flow_cnt_3d` float NULL COMMENT '近3天流量使用量',

`flow_cnt_5d` float NULL COMMENT '近5天流量使用量',

`flow_cnt_7d` float NULL COMMENT '近7天流量使用量',

`flow_grow_rate_3d` float NULL COMMENT '近3天流量使用量环比增长率',

`flow_grow_rate_5d` float NULL COMMENT '近5天流量使用量环比增长率',

`flow_grow_rate_7d` float NULL COMMENT '近7天流量使用量环比增长率'

) ENGINE=OLAP

DUPLICATE KEY(`dt`, `provid`, `dayid`, `mdn`)

COMMENT '流量环比统计日表测试'

PARTITION BY RANGE(`dt`) -- 操作日期20230419

(PARTITION p20230418 VALUES [('2023-04-18'), ('2023-04-19')),

PARTITION p20230419 VALUES [('2023-04-19'), ('2023-04-20'))

)

DISTRIBUTED BY HASH(`provid`) BUCKETS 10 ---根据hash值将数据划分成不同的 bucket,采用区分度大的列做分桶, 避免出现数据倾斜。根据数据量大小进行合理设置,单个 bucket 的 size 不要太大, 保持在 10GB 以内。

PROPERTIES (

"dynamic_partition.enable" = "true",

"dynamic_partition.time_unit" = "DAY",

"dynamic_partition.time_zone" = "Asia/Shanghai",

"dynamic_partition.start" = "-2147483648", --动态分区的起始偏移,为负数。默认为 -2147483648,不删除历史分区。

"dynamic_partition.end" = "3", --动态分区的结束偏移,为正数。根据 time_unit 属性的不同,以当天(星期/月)为基准,提前创建对应范围的分区。比如我在19号create这个表,那么运行完这段DDL后,理论上表会自动创建3个PARTITION为p20230420/p20230421/p20230422的分区。

"dynamic_partition.prefix" = "p", --动态创建的分区名前缀。

"dynamic_partition.buckets" = "10", --分桶数

"dynamic_partition.create_history_partition" = "false", --创建历史分区开关

"dynamic_partition.history_partition_num" = "-1"

---当 create_history_partition 为 true 时,该参数用于指定创建历史分区数量。默认值为 -1, 即未设置。

);

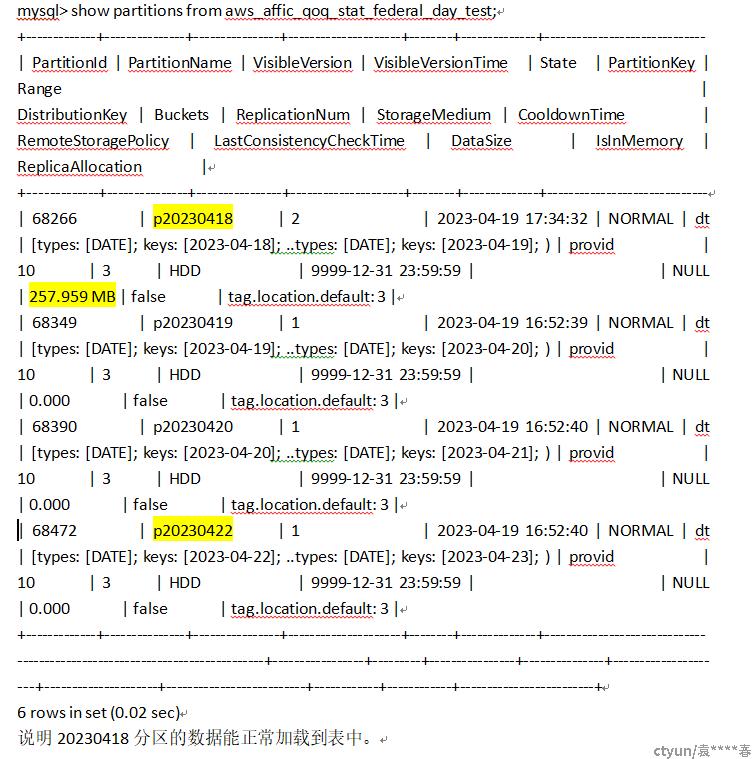

mysql> show partitions from aws_affic_qoq_stat_federal_day_test;

+-------------+---------------+----------------+---------------------+--------+--------------+----------------------------------------------------------------------------+-----------------+---------+----------------+---------------+---------------------+---------------------+--------------------------+----------+------------+-------------------------+

| PartitionId | PartitionName | VisibleVersion | VisibleVersionTime | State | PartitionKey | Range | DistributionKey | Buckets | ReplicationNum | StorageMedium | CooldownTime | RemoteStoragePolicy | LastConsistencyCheckTime | DataSize | IsInMemory | ReplicaAllocation |

+-------------+---------------+----------------+---------------------+--------+--------------+----------------------------------------------------------------------------+-----------------+---------+----------------+---------------+---------------------+---------------------+--------------------------+----------+------------+-------------------------+

| 68266 | p20230418 | 1 | 2023-04-19 16:52:36 | NORMAL | dt | [types: [DATE]; keys: [2023-04-18]; ..types: [DATE]; keys: [2023-04-19]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68349 | p20230419 | 1 | 2023-04-19 16:52:39 | NORMAL | dt | [types: [DATE]; keys: [2023-04-19]; ..types: [DATE]; keys: [2023-04-20]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68390 | p20230420 | 1 | 2023-04-19 16:52:40 | NORMAL | dt | [types: [DATE]; keys: [2023-04-20]; ..types: [DATE]; keys: [2023-04-21]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68431 | p20230421 | 1 | 2023-04-19 16:52:40 | NORMAL | dt | [types: [DATE]; keys: [2023-04-21]; ..types: [DATE]; keys: [2023-04-22]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68472 | p20230422 | 1 | 2023-04-19 16:52:40 | NORMAL | dt | [types: [DATE]; keys: [2023-04-22]; ..types: [DATE]; keys: [2023-04-23]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

+-------------+---------------+----------------+---------------------+--------+--------------+----------------------------------------------------------------------------+-----------------+---------+----------------+---------------+---------------------+---------------------+--------------------------+----------+------------+-------------------------+

6 rows in set (0.03 sec)

现在我们通过broker load针对表中已有分区导入一下测试数据:

LOAD LABEL aws_affic_qoq_stat_federal_day_test_load_20230418

(

DATA INFILE("hdfs://ctyunns/warehouse/tablespace/managed/hive/bdmkt.db/AWS_AFFIC_QOQ_STAT_FEDERAL_DAY/day_id=20230418/prov_id=812/*")

INTO TABLE aws_affic_qoq_stat_federal_day_test

COLUMNS TERMINATED BY "\\x05"

(provid,dayid,mdn,flow_cnt_3d,flow_cnt_5d,flow_cnt_7d,flow_cnt_14d,flow_cnt_30d,flow_grow_rate_3d,flow_grow_rate_5d,flow_grow_rate_7d,flow_grow_rate_14d,flow_grow_rate_30d)

COLUMNS FROM PATH AS (`day_id`)

SET(dt=STR_TO_DATE(`day_id`,'%Y%m%d'),provid=provid,dayid=dayid,mdn=mdn,flow_cnt_3d=flow_cnt_3d,flow_cnt_5d=flow_cnt_5d,flow_cnt_7d=flow_cnt_7d,flow_cnt_14d=flow_cnt_14d,flow_cnt_30d=flow_cnt_30d,flow_grow_rate_3d=flow_grow_rate_3d,flow_grow_rate_5d=flow_grow_rate_5d,flow_grow_rate_7d=flow_grow_rate_7d,flow_grow_rate_14d=flow_grow_rate_14d,flow_grow_rate_30d=flow_grow_rate_30d)

)

WITH BROKER "my_broker"

(

'fs.defaultFS'='hdfs://ctyunns',

'dfs.nameservices'='ctyunns',

'dfs.ha.namenodes.ctyunns'='nn1,nn2',

'dfs.namenode.rpc-address.ctyunns.nn1'='xx.xx.xx.xx:54310',

'dfs.namenode.rpc-address.ctyunns.nn2'=' xx.xx.xx.xx:54310',

'dfs.client.failover.proxy.provider.ctyunns'='org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider',

'dfs.namenode.kerberos.principal'='hdfs/_HOST@TEST.BIGDATA. XX.CN',

'hadoop.security.authentication'='kerberos',

'kerberos_principal'='bdmp/10.31.0.42@TEST.BIGDATA.XX.CN',

'kerberos_keytab_content'='XXXXX'

);

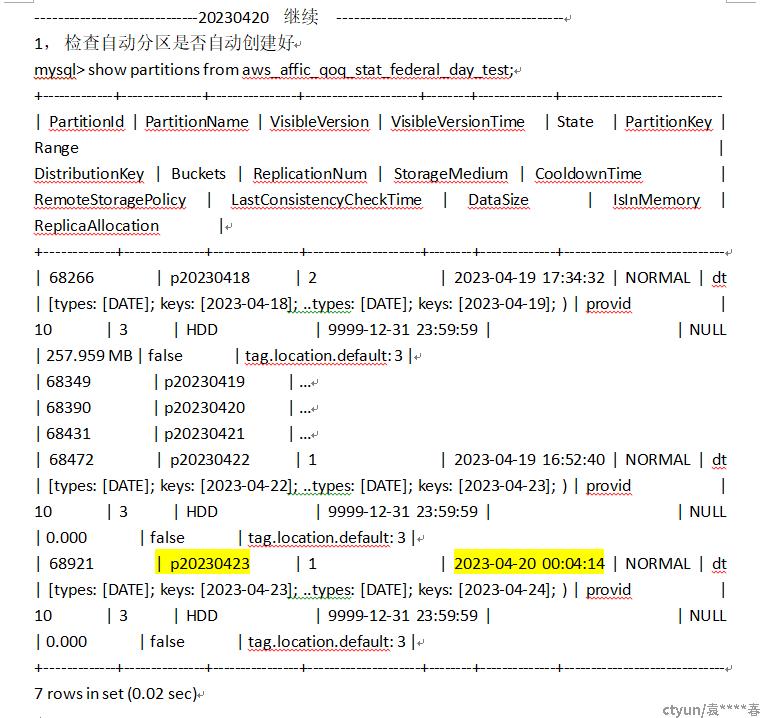

截止20230419,当前表中分区是到p20230422, 接下来需要验证动态分区是否生效。即接下来20230420天分区是否会自动增加至p20230423 ?

show create table

(PARTITION p20230417 VALUES [('2023-04-17'), ('2023-04-18')),

PARTITION p20230418 VALUES [('2023-04-18'), ('2023-04-19')),

PARTITION p20230419 VALUES [('2023-04-19'), ('2023-04-20')),

PARTITION p20230420 VALUES [('2023-04-20'), ('2023-04-21')),

PARTITION p20230421 VALUES [('2023-04-21'), ('2023-04-22')),

PARTITION p20230422 VALUES [('2023-04-22'), ('2023-04-23')))

说明新的分区会每天凌晨自动创建好。

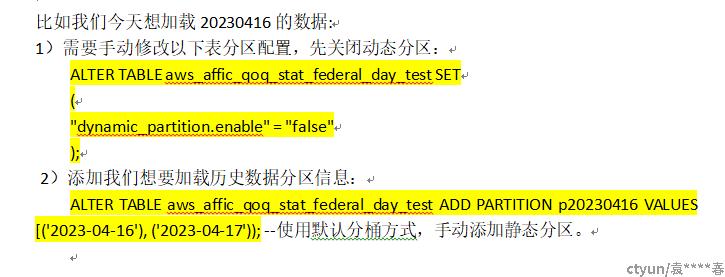

有些情况下,我们需要加载历史数据到建有动态分区的数据表中。这时就需要用到Doris的静态分区功能,先修改表属性为静态分区,否则在导入历史数据时会报分区不存在的错误。

导入数据测试:

LOAD LABEL aws_affic_qoq_stat_federal_day_test_load_20230416_2

(

DATA INFILE("hdfs://ctyunns/warehouse/tablespace/managed/hive/bdmkt.db/AWS_AFFIC_QOQ_STAT_FEDERAL_DAY/day_id=20230416/prov_id=*/*")

INTO TABLE aws_affic_qoq_stat_federal_day_test

COLUMNS TERMINATED BY "\\x05"

(…..)

………

mysql> show partitions from aws_affic_qoq_stat_federal_day_test;

+-------------+---------------+----------------+---------------------+--------+--------------+---------------------------------------------------

| PartitionId | PartitionName | VisibleVersion | VisibleVersionTime | State | PartitionKey | Range | DistributionKey | Buckets | ReplicationNum | StorageMedium | CooldownTime | RemoteStoragePolicy | LastConsistencyCheckTime | DataSize | IsInMemory | ReplicaAllocation |

+-------------+---------------+----------------+---------------------+--------+--------------+---------------------------------------------------

| 69614 | p20230416 | 2 | 2023-04-20 14:57:49 | NORMAL | dt | [types: [DATE]; keys: [2023-04-16]; ..types: [DATE]; keys: [2023-04-17]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 48.311 GB | false | tag.location.default: 3 |

| 68265 | p20230417 | 2 | 2023-04-19 17:09:07 | NORMAL | dt | [types: [DATE]; keys: [2023-04-17]; ..types: [DATE]; keys: [2023-04-18]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 46.424 GB | false | tag.location.default: 3 |

| 68266 | p20230418 | 2 | 2023-04-19 17:34:32 | NORMAL | dt | [types: [DATE]; keys: [2023-04-18]; ..types: [DATE]; keys: [2023-04-19]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 257.959 MB | false | tag.location.default: 3 |

| 68349 | p20230419 | 1 | 2023-04-19 16:52:39 | NORMAL | dt | [types: [DATE]; keys: [2023-04-19]; ..types: [DATE]; keys: [2023-04-20]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68390 | p20230420 | 1 | 2023-04-19 16:52:40 | NORMAL | dt | [types: [DATE]; keys: [2023-04-20]; ..types: [DATE]; keys: [2023-04-21]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68431 | p20230421 | 1 | 2023-04-19 16:52:40 | NORMAL | dt | [types: [DATE]; keys: [2023-04-21]; ..types: [DATE]; keys: [2023-04-22]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68472 | p20230422 | 1 | 2023-04-19 16:52:40 | NORMAL | dt | [types: [DATE]; keys: [2023-04-22]; ..types: [DATE]; keys: [2023-04-23]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

| 68921 | p20230423 | 1 | 2023-04-20 00:04:14 | NORMAL | dt | [types: [DATE]; keys: [2023-04-23]; ..types: [DATE]; keys: [2023-04-24]; ) | provid | 10 | 3 | HDD | 9999-12-31 23:59:59 | | NULL | 0.000 | false | tag.location.default: 3 |

+-------------+---------------+----------------+---------------------+--------+--------------+----------------------------------------------------------------------------+-----------------+---------+----------------+---------------+---------------------+---------------------+--------------------------+------------+------------+-------------------------+

8 rows in set (0.01 sec)

3)最后,加载好历史数据后再修改回动态分区设置:

ALTER TABLE aws_affic_qoq_stat_federal_day_test SET

(

"dynamic_partition.enable" = "true"

);