1 Deployment and Installation

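Only the Neutron component is deployed differently; every other component is installed exactly as before. This chapter therefore covers only the Neutron deployment.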

With OVN, the legacy Neutron agents (OVS agent, L3 agent, DHCP agent, metadata agent) are no longer needed, so they do not have to be deployed.

1.1 Controller Node

1.1.1 Neutron Server

- The database, account, service, and endpoint setup is unchanged (follow the official installation guide).

- Install the Neutron server: yum install openstack-neutron openstack-neutron-ml2

For now, configure the Neutron settings in /etc/neutron/neutron.conf, /etc/neutron/plugins/ml2/ml2_conf.ini, and /etc/nova/nova.conf as the official guide describes. They are adjusted later, when OVN is deployed.

1.1.2 OVN

1.1.2.1 Add the Repository

Add a repository file named ovs-master.repo under /etc/yum.repos.d with the following content:

[copr:copr.fedorainfracloud.org:leifmadsen:ovs-master]

name=Copr repo for ovs-master owned by leifmadsen

baseurl=https://download.copr.fedorainfracloud.org/results/leifmadsen/ovs-master/epel-7-$basearch/

type=rpm-md

skip_if_unavailable=True

gpgcheck=1

gpgkey=https://download.copr.fedorainfracloud.org/results/leifmadsen/ovs-master/pubkey.gpg

repo_gpgcheck=0

enabled=1

enabled_metadata=1

Refresh the yum cache so that the new repository takes effect: yum clean all, then yum makecache

1.1.2.2 Install the Packages

Install openvswitch-ovn: yum install openvswitch-ovn-*

Install networking-ovn: yum install python-networking-ovn

After the installation finishes, run the following:

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

1.1.3 Configuration

- /etc/neutron/neutron.conf

[DEFAULT]

service_plugins = networking_ovn.l3.l3_ovn.OVNL3RouterPlugin

---> Only this setting needs to be added; everything else was already configured in section 1.1.1.

- /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

mechanism_drivers = ovn

type_drivers = local,flat,vlan,geneve

tenant_network_types = geneve

extension_drivers = port_security

overlay_ip_version = 4

---> These values override the ones configured in section 1.1.1.

[ml2_type_geneve]

vni_ranges = 1:65536

max_header_size = 38

[securitygroup]

enable_security_group = true

[ovn]

ovn_nb_connection = tcp:IP_ADDRESS:6641

ovn_sb_connection = tcp:IP_ADDRESS:6642

ovn_l3_scheduler = leastloaded

enable_distributed_floating_ip = True
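The settings above are easy to get subtly wrong, so a quick sanity check before restarting neutron-server can help. The following is a minimal sketch, not part of networking-ovn: `check_ml2_for_ovn` and `_csv` are hypothetical helpers, the address 192.0.2.10 is a made-up stand-in for IP_ADDRESS, and the port check assumes the 6641/6642 ports used in this guide.

```python
import configparser

# Sample mirrors the settings shown above. 192.0.2.10 is a made-up
# documentation address standing in for IP_ADDRESS.
SAMPLE = """
[ml2]
mechanism_drivers = ovn
type_drivers = local,flat,vlan,geneve
tenant_network_types = geneve

[ovn]
ovn_nb_connection = tcp:192.0.2.10:6641
ovn_sb_connection = tcp:192.0.2.10:6642
"""


def _csv(cfg, section, option):
    """Read a comma-separated option as a list; missing options give []."""
    raw = cfg.get(section, option, fallback="")
    return [item.strip() for item in raw.split(",") if item.strip()]


def check_ml2_for_ovn(text):
    """Return a list of human-readable problems (empty when none are found)."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    problems = []
    if "ovn" not in _csv(cfg, "ml2", "mechanism_drivers"):
        problems.append("mechanism_drivers does not include ovn")
    if "geneve" not in _csv(cfg, "ml2", "type_drivers"):
        problems.append("type_drivers does not include geneve")
    # Ports 6641 (NB) and 6642 (SB) are the ones this guide configures.
    for option, port in (("ovn_nb_connection", "6641"),
                         ("ovn_sb_connection", "6642")):
        value = cfg.get("ovn", option, fallback="")
        if not value.startswith("tcp:") or not value.endswith(":" + port):
            problems.append(f"{option} should look like tcp:<ip>:{port}")
    return problems


print(check_ml2_for_ovn(SAMPLE))
```

To check a real node, pass the contents of /etc/neutron/plugins/ml2/ml2_conf.ini instead of SAMPLE.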

1.1.4 Start the Services

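- Restart nova-api: systemctl restart openstack-nova-api.service

- Start OVS and enable it at boot: systemctl start openvswitch, systemctl enable openvswitch

- Start ovn-northd and enable it at boot: systemctl start ovn-northd, systemctl enable ovn-northd

- Open the OVSDB server listeners (to avoid listening on every interface, replace the 0.0.0.0 address below with the management IP address):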

- ovn-nbctl set-connection ptcp:6641:0.0.0.0 -- set connection . inactivity_probe=60000

- ovn-sbctl set-connection ptcp:6642:0.0.0.0 -- set connection . inactivity_probe=60000

If the VTEP feature is needed, also run:

- ovs-appctl -t ovsdb-server ovsdb-server/add-remote ptcp:6640:0.0.0.0

- Enable and start the Neutron server: systemctl enable neutron-server.service, systemctl start neutron-server.service

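1.2 Network Node

No dedicated network node is needed, because a compute node can act as the gateway node.

1.3 Compute Node

1.3.1 OVN

1.3.1.1 Add the Repository

Same as section 1.1.2.1.

1.3.1.2 Install the Packages

Install openvswitch-ovn: yum install openvswitch-ovn-*

Install networking-ovn: yum install python-networking-ovn

1.3.1.3 Configuration

Configure the Neutron settings in /etc/neutron/neutron.conf and /etc/nova/nova.conf as the official guide describes.

1.3.1.4 Start the Services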

- Restart nova-compute: systemctl restart openstack-nova-compute.service

- Start OVS and enable it at boot: systemctl start openvswitch, systemctl enable openvswitch

- Point the node at the OVSDB database on the controller node: ovs-vsctl set open . external-ids:ovn-remote=tcp:<controller management IP>:6642

- Set the supported overlay encapsulation types: ovs-vsctl set open . external-ids:ovn-encap-type=geneve,vxlan

- Set the tunnel endpoint (VTEP) IP: ovs-vsctl set open . external-ids:ovn-encap-ip=IP_ADDRESS

- Register the node as a gateway node:

- ovs-vsctl set open . external-ids:ovn-cms-options=enable-chassis-as-gw

- ovs-vsctl set open . external-ids:ovn-bridge-mappings=provider:br-ex

- ovs-vsctl --may-exist add-br br-ex

- ovs-vsctl --may-exist add-port br-ex <INTERFACE_NAME>

- Start ovn-controller and enable it at boot: systemctl start ovn-controller, systemctl enable ovn-controller
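When several compute nodes are set up, the ovs-vsctl steps above differ only in a few values. The sketch below generates the command lines for one chassis so they can be reviewed before being run. `chassis_commands` is a hypothetical helper, the IP addresses and the interface name eth1 are made-up examples, and the default `provider:br-ex` mapping is simply the one used in this guide.

```python
def chassis_commands(sb_ip, encap_ip, gateway_nic=None,
                     physnet="provider", bridge="br-ex"):
    """Return the ovs-vsctl invocations for one chassis as argv lists."""

    def set_external_id(key, value):
        return ["ovs-vsctl", "set", "open", ".", f"external-ids:{key}={value}"]

    cmds = [
        set_external_id("ovn-remote", f"tcp:{sb_ip}:6642"),
        set_external_id("ovn-encap-type", "geneve,vxlan"),
        set_external_id("ovn-encap-ip", encap_ip),
    ]
    # The gateway-related steps are only emitted when a NIC is given.
    if gateway_nic is not None:
        cmds += [
            set_external_id("ovn-cms-options", "enable-chassis-as-gw"),
            set_external_id("ovn-bridge-mappings", f"{physnet}:{bridge}"),
            ["ovs-vsctl", "--may-exist", "add-br", bridge],
            ["ovs-vsctl", "--may-exist", "add-port", bridge, gateway_nic],
        ]
    return cmds


for argv in chassis_commands("192.0.2.10", "192.0.2.21", gateway_nic="eth1"):
    print(" ".join(argv))
```

Returning argv lists rather than shell strings means the same output can be passed to `subprocess.run` without any quoting concerns.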

2 Data Structures

This chapter describes the most important OVN data structures. For the commands used here, see chapter 5.

2.1 NB

2.1.1 Logical_Switch

A logical switch corresponds to a Neutron network: OVN creates exactly one logical switch per network, no matter how many subnets the network has. An example follows.

[root@controller ~]# ovn-nbctl list Logical_Switch

_uuid : 588ab948-6348-4298-a913-f0454d752bb8

acls : []

dns_records : []

external_ids : {"neutron:network_name"="n1", "neutron:revision_number"="4"}

load_balancer : []

name : "neutron-087e9517-8a82-43b5-9c84-24d20a15b16d" --->neutron-<network uuid>

other_config : {}

ports : [4bac01c0-671e-4a8b-873c-16fc7a448546, 8d98b779-e195-42a8-9210-afc5d9b6ebe1, a6a777b5-3902-44bd-b74b-8a3a6ceda61e, aee3b083-fa68-4586-81a0-f4fb090adc42, d29c9381-58e3-45fd-9e4a-e9a2cb8cd84f, e6d97a25-dd5b-4203-8bd5-2ec0f248cee5, f7cc7872-22aa-4a2a-9bbd-d4c47b243eb0]

qos_rules : []

_uuid : a7f84ef5-28f5-4b5d-a3aa-935251cde27f

acls : []

dns_records : []

external_ids : {"neutron:network_name"=ext, "neutron:revision_number"="6"}

load_balancer : []

name : "neutron-fc8dfbcb-0272-4025-8596-850345f86e13"

other_config : {}

ports : [1258f0b9-a2ec-4a08-8689-9ed38f7f43c0, 9daf6121-d459-4caf-bfec-a58d8104ba47]

qos_rules : []
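The `neutron-<network uuid>` naming seen in the listing makes it straightforward to move between a Neutron network ID and its logical switch. A minimal sketch, with hypothetical helper names that are not part of networking-ovn:

```python
import uuid

PREFIX = "neutron-"


def logical_switch_name(network_id):
    """Logical_Switch name for a Neutron network UUID."""
    return PREFIX + str(uuid.UUID(network_id))


def network_id_from_switch(name):
    """Inverse mapping; raises ValueError for names that do not fit."""
    if not name.startswith(PREFIX):
        raise ValueError(f"not a Neutron-managed switch: {name!r}")
    return str(uuid.UUID(name[len(PREFIX):]))


print(logical_switch_name("087e9517-8a82-43b5-9c84-24d20a15b16d"))
```

The resulting name can be used directly in commands such as `ovn-nbctl list Logical_Switch <name>`.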

2.1.2 Logical_Switch_Port

A logical switch port. An example follows.

[root@controller ~]# ovn-nbctl list Logical_Switch_Port

_uuid : 4bac01c0-671e-4a8b-873c-16fc7a448546

addresses : [router]

dhcpv4_options : []

dhcpv6_options : []

dynamic_addresses : []

enabled : true

--->device_id=router uuid

external_ids : {"neutron:cidrs"="10.1.2.1/24", "neutron:device_id"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:device_owner"="network:router_interface", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="7", "neutron:security_group_ids"=""}

name : "d46cf500-9d21-49c5-8099-0b7c53ae5e32" --->port uuid

options : {router-port="lrp-d46cf500-9d21-49c5-8099-0b7c53ae5e32"}

parent_name : []

port_security : []

tag : []

tag_request : []

type : router

up : true

_uuid : 9daf6121-d459-4caf-bfec-a58d8104ba47

addresses : [router]

dhcpv4_options : []

dhcpv6_options : []

dynamic_addresses : []

enabled : true

external_ids : {"neutron:cidrs"="172.168.1.4/24", "neutron:device_id"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:device_owner"="network:router_gateway", "neutron:network_name"="neutron-fc8dfbcb-0272-4025-8596-850345f86e13", "neutron:port_name"="", "neutron:project_id"="", "neutron:revision_number"="7", "neutron:security_group_ids"=""}

name : "4cdf4897-c0e5-4a00-b182-f9eae17b499e"

options : {nat-addresses=router, router-port="lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e"}

parent_name : []

port_security : []

tag : []

tag_request : []

type : router

up : true

_uuid : 8d98b779-e195-42a8-9210-afc5d9b6ebe1

addresses : ["fa:16:3e:f1:da:40 10.1.1.11"]

dhcpv4_options : 59f9d0ee-cde4-48fc-b97a-826e53d412ce

dhcpv6_options : []

dynamic_addresses : []

enabled : true

--->neutron:device_id is the VM UUID

external_ids : {"neutron:cidrs"="10.1.1.11/24", "neutron:device_id"="afda5e0d-9698-4b3e-8b7a-cb0b03ad733e", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet1_vm1", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="22", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

name : "a2d480dc-ec4c-4a91-aac7-b2c015afeab6" --->port uuid

options : {requested-chassis=compute}

parent_name : []

port_security : ["fa:16:3e:f1:da:40 10.1.1.11"]

tag : []

tag_request : []

type : ""

up : true

_uuid : d29c9381-58e3-45fd-9e4a-e9a2cb8cd84f

addresses : ["fa:16:3e:92:06:64 10.1.1.6"]

dhcpv4_options : 59f9d0ee-cde4-48fc-b97a-826e53d412ce

dhcpv6_options : []

dynamic_addresses : []

enabled : true

external_ids : {"neutron:cidrs"="10.1.1.6/24", "neutron:device_id"="f3726b8a-0587-4755-b97d-27fe28ed8d66", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet1_vm2", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="22", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

name : "40b3a54d-4cf4-4a8d-bc03-d0f418824e10"

options : {requested-chassis=compute}

parent_name : []

port_security : ["fa:16:3e:92:06:64 10.1.1.6"]

tag : []

tag_request : []

type : ""

up : true

_uuid : 1258f0b9-a2ec-4a08-8689-9ed38f7f43c0

addresses : [unknown]

dhcpv4_options : []

dhcpv6_options : []

dynamic_addresses : []

enabled : []

external_ids : {}

name : "provnet-fc8dfbcb-0272-4025-8596-850345f86e13" --->provnet-<external network uuid>

options : {network_name=provider}

parent_name : []

port_security : []

tag : []

tag_request : []

type : localnet

up : false

_uuid : f7cc7872-22aa-4a2a-9bbd-d4c47b243eb0

addresses : ["fa:16:3e:76:75:49 10.1.1.4"]

dhcpv4_options : 59f9d0ee-cde4-48fc-b97a-826e53d412ce

dhcpv6_options : []

dynamic_addresses : []

enabled : true

external_ids : {"neutron:cidrs"="10.1.1.4/24", "neutron:device_id"="ec1b9d7d-e916-4aca-82be-bf5883d7d252", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet1_vm3", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="22", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

name : "c0e5c1f7-3d77-4bc8-b842-9b49f2878a10"

options : {requested-chassis=controller}

parent_name : []

port_security : ["fa:16:3e:76:75:49 10.1.1.4"]

tag : []

tag_request : []

type : ""

up : true

_uuid : aee3b083-fa68-4586-81a0-f4fb090adc42

addresses : ["fa:16:3e:9e:d8:3a 10.1.2.8"]

dhcpv4_options : 4569e4ed-c093-4c72-a80c-d84314bb977a

dhcpv6_options : []

dynamic_addresses : []

enabled : true

external_ids : {"neutron:cidrs"="10.1.2.8/24", "neutron:device_id"="8401ffa7-73d1-4ddb-b2c0-816f6e632ad3", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet2_vm1", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="26", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

name : "ce2635b2-ce67-487b-8d9c-af1025fdb5c0"

options : {requested-chassis=controller}

parent_name : []

port_security : ["fa:16:3e:9e:d8:3a 10.1.2.8"]

tag : []

tag_request : []

type : ""

up : true

_uuid : a6a777b5-3902-44bd-b74b-8a3a6ceda61e

addresses : ["fa:16:3e:34:b3:21 10.1.2.6"]

dhcpv4_options : 4569e4ed-c093-4c72-a80c-d84314bb977a

dhcpv6_options : []

dynamic_addresses : []

enabled : true

external_ids : {"neutron:cidrs"="10.1.2.6/24", "neutron:device_id"="4d7ce472-b75d-44c7-9a67-23884653e8be", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet2_vm2", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="26", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

name : "d2bd73be-6cfa-441a-94fc-579532d75025"

options : {requested-chassis=compute}

parent_name : []

port_security : ["fa:16:3e:34:b3:21 10.1.2.6"]

tag : []

tag_request : []

type : ""

up : true

_uuid : e6d97a25-dd5b-4203-8bd5-2ec0f248cee5

addresses : [router]

dhcpv4_options : []

dhcpv6_options : []

dynamic_addresses : []

enabled : true

external_ids : {"neutron:cidrs"="10.1.1.1/24", "neutron:device_id"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:device_owner"="network:router_interface", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="7", "neutron:security_group_ids"=""}

name : "5478dcf9-9cd4-4410-b7a5-340ee014a17e"

options : {router-port="lrp-5478dcf9-9cd4-4410-b7a5-340ee014a17e"}

parent_name : []

port_security : []

tag : []

tag_request : []

type : router

up : true
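In the listing, each `addresses` and `port_security` entry is either a keyword (`router`, `unknown`) or a MAC address followed by its IP addresses. The sketch below splits such an entry into its parts. `parse_address` is a hypothetical helper, and it handles only the forms that appear in this listing.

```python
# Only the two keywords that appear in the listing above are recognized.
KEYWORDS = {"router", "unknown"}


def parse_address(entry):
    """Split one addresses / port_security entry into kind, MAC and IPs."""
    if entry in KEYWORDS:
        return {"kind": entry, "mac": None, "ips": []}
    mac, *ips = entry.split()
    return {"kind": "static", "mac": mac, "ips": ips}


print(parse_address("fa:16:3e:f1:da:40 10.1.1.11"))
print(parse_address("router"))
```

A VM port yields kind `static`, while router attachment ports and the localnet port yield `router` and `unknown` with no MAC of their own.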

2.1.3 ACL

Corresponds to Neutron security group rules. An example follows.

[root@controller ~]# ovn-nbctl list ACL

_uuid : c2e79968-7b13-4d58-ae56-641a623940de

action : allow-related

direction : from-lport

external_ids : {"neutron:security_group_rule_id"="c90f79f7-dc01-4351-ad38-768b427895e7"}

log : false

match : "inport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip4"

meter : []

name : []

priority : 1002

severity : []

_uuid : 4474cf48-2a2b-4c90-b6a7-9b4237b22029

action : allow-related

direction : to-lport

external_ids : {"neutron:security_group_rule_id"="f3238c94-cf23-4d69-9051-89998a794f66"}

log : false

match : "outport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip4"

meter : []

name : []

priority : 1002

severity : []

_uuid : b55d5780-0276-43fb-b09e-45d22d711a45

action : allow-related

direction : to-lport

external_ids : {"neutron:security_group_rule_id"="99c01bce-17b2-4e81-8264-f6de1d0f9cb2"}

log : false

match : "outport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip4 && ip4.src == 1.1.1.1/32"

meter : []

name : []

priority : 1002

severity : []

_uuid : 9edf0469-3d97-46f9-a727-d44430d6155f

action : drop

direction : from-lport

external_ids : {}

log : false

match : "inport == @neutron_pg_drop && ip"

meter : []

name : []

priority : 1001

severity : []

_uuid : 178f5842-13a6-421b-976d-667e2b077dad

action : allow-related

direction : from-lport

external_ids : {"neutron:security_group_rule_id"="735c8c38-87e6-4e9c-8200-5bea4eb65f12"}

log : false

match : "inport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip6"

meter : []

name : []

priority : 1002

severity : []

_uuid : 7f1b3d0b-8aed-4a88-bccc-1db667087a7f

action : allow-related

direction : from-lport

external_ids : {"neutron:security_group_rule_id"="465061a5-4ccb-40e7-9857-95a874a626a3"}

log : false

match : "inport == @pg_025465a5_649f_4b6c_980c_68d0c14c4086 && ip4"

meter : []

name : []

priority : 1002

severity : []

_uuid : 36e3c1f4-f9dc-4e4a-a6b9-aebf5bc351f6

action : allow-related

direction : from-lport

external_ids : {"neutron:security_group_rule_id"="6c7a29b0-ca86-4dff-861c-002cecea4735"}

log : false

match : "inport == @pg_025465a5_649f_4b6c_980c_68d0c14c4086 && ip6"

meter : []

name : []

priority : 1002

severity : []

_uuid : 637ec7dc-7bd7-487b-9c83-47f3a3464a8f

action : allow-related

direction : to-lport

external_ids : {"neutron:security_group_rule_id"="6e1aa839-2f49-45f9-881a-c97ea022f117"}

log : false

match : "outport == @pg_025465a5_649f_4b6c_980c_68d0c14c4086 && ip6 && ip6.src == $pg_025465a5_649f_4b6c_980c_68d0c14c4086_ip6"

meter : []

name : []

priority : 1002

severity : []

_uuid : ab46762d-0837-40ed-8d0d-9bfa841e1df9

action : allow-related

direction : from-lport

external_ids : {"neutron:security_group_rule_id"="40991cd8-16e4-43ec-a1c5-b362c4a11039"}

log : false

match : "inport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip4 && ip4.dst == 114.114.114.114/32"

meter : []

name : []

priority : 1002

severity : []

_uuid : 441fa13d-c99a-4e1d-8626-549d6aa3bdfa

action : allow-related

direction : to-lport

external_ids : {"neutron:security_group_rule_id"="4142c778-6d0d-4109-90ff-250d036a2476"}

log : false

match : "outport == @pg_025465a5_649f_4b6c_980c_68d0c14c4086 && ip4 && ip4.src == $pg_025465a5_649f_4b6c_980c_68d0c14c4086_ip4"

meter : []

name : []

priority : 1002

severity : []

_uuid : 3c4f5216-0a38-4d51-9c06-9928176e08e9

action : drop

direction : to-lport

external_ids : {}

log : false

match : "outport == @neutron_pg_drop && ip"

meter : []

name : []

priority : 1001

severity : []

_uuid : 0a72da16-8ff3-46ff-975b-1bf87bc19826

action : allow-related

direction : to-lport

external_ids : {"neutron:security_group_rule_id"="64e6e8e7-f4f5-475e-8b7e-5216e76ebd0e"}

log : false

match : "outport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip6 && ip6.src == $pg_847a5488_34f9_4c42_96d5_807a30c0fd03_ip6"

meter : []

name : []

priority : 1002

severity : []

_uuid : e61acd2f-0706-49da-a957-53514da71c02

action : allow-related

direction : to-lport

external_ids : {"neutron:security_group_rule_id"="bda3a8e5-6aa6-4cce-bb70-0efdbea88e77"}

log : false

match : "outport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip4 && ip4.src == $pg_847a5488_34f9_4c42_96d5_807a30c0fd03_ip4"

meter : []

name : []

priority : 1002

severity : []
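The match expressions above follow a visible pattern: the security group UUID becomes a port group name `pg_<uuid with underscores>`, `from-lport` rules match on `inport`, and `to-lport` rules match on `outport`. The sketch below rebuilds the simplest of these expressions. The helper names are hypothetical, and remote-prefix or remote-group suffixes such as `ip4.src == ...` are deliberately left out.

```python
def port_group_name(security_group_id):
    """Port group name derived from a Neutron security group UUID."""
    return "pg_" + security_group_id.replace("-", "_")


def acl_match(security_group_id, direction, ethertype="ip4"):
    """Build the basic match string; direction is from-lport or to-lport."""
    port_field = {"from-lport": "inport", "to-lport": "outport"}[direction]
    group = port_group_name(security_group_id)
    return f"{port_field} == @{group} && {ethertype}"


print(acl_match("847a5488-34f9-4c42-96d5-807a30c0fd03", "from-lport"))
```

Searching the ACL table for the generated port group name is a quick way to find every rule that belongs to one security group.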

2.1.4 Logical_Router

Corresponds to a Neutron router (qrouter). An example follows.

[root@controller ~]# ovn-nbctl list Logical_Router

_uuid : 22c3eb7c-ae8c-4fea-9b63-552bc95a407e

enabled : true

--->neutron:gw_port_id is the UUID of the qg (gateway) port

external_ids : {"neutron:gw_port_id"="4cdf4897-c0e5-4a00-b182-f9eae17b499e", "neutron:revision_number"="4", "neutron:router_name"="n1router"}

load_balancer : []

name : "neutron-0e2754a2-c506-4593-a94c-1b9e0549c8d9" --->neutron-<qrouter uuid>

nat : [598db320-5c89-439d-a6bb-227588860626, 5b0d2928-f03c-4bf5-b060-f29fb4ca8392, 9c1020ab-ecdf-4873-a511-5345f88991df, 9f7253e0-4bdf-46a1-b7ba-6aef55e41710]

options : {}

ports : [6a36518a-b7c3-4aa4-87b7-d1f4da2e3cb9, a74b0fda-6031-4221-af52-30995b574ea5, b93b2ff7-0dbe-4ef1-8747-e0c434649c96]

static_routes : [247c1bca-c533-409e-8acc-c3ebe823a3f6]

2.1.5 Logical_Router_Port

Corresponds to a port on a Neutron router (qrouter). An example follows.

[root@controller ~]# ovn-nbctl list Logical_Router_Port

_uuid : 6a36518a-b7c3-4aa4-87b7-d1f4da2e3cb9

enabled : []

--->neutron:router_name is the qrouter UUID

external_ids : {"neutron:revision_number"="7", "neutron:router_name"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:subnet_ids"="6120c237-783e-4292-a39e-abc80af97605"}

gateway_chassis : []

ipv6_ra_configs : {}

mac : "fa:16:3e:e9:ac:85"

name : "lrp-d46cf500-9d21-49c5-8099-0b7c53ae5e32" --->lrp-<qr port uuid>

networks : ["10.1.2.1/24"]

options : {}

peer : []

_uuid : a74b0fda-6031-4221-af52-30995b574ea5

enabled : []

external_ids : {"neutron:revision_number"="7", "neutron:router_name"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:subnet_ids"="1069bf66-b8c7-44da-b7dc-978258e49c37"}

gateway_chassis : []

ipv6_ra_configs : {}

mac : "fa:16:3e:cc:5e:81"

name : "lrp-5478dcf9-9cd4-4410-b7a5-340ee014a17e"

networks : ["10.1.1.1/24"]

options : {}

peer : []

_uuid : b93b2ff7-0dbe-4ef1-8747-e0c434649c96

enabled : []

external_ids : {"neutron:revision_number"="7", "neutron:router_name"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:subnet_ids"="ce1c85bb-f45a-4aa6-8ef3-8f8eed700150"}

gateway_chassis : [2edf9f18-6cf0-450a-8787-66c4b7b3c6f1, 9390af18-e3e2-467d-ae09-6312e3d0cf79] --->two gateway nodes providing HA

ipv6_ra_configs : {}

mac : "fa:16:3e:dc:9a:73"

name : "lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e" --->lrp-<qg port uuid>

networks : ["172.168.1.4/24"]

options : {}

peer : []
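A Logical_Router_Port is named `lrp-<port uuid>`, and the switch-side port of type `router` points at it through its `router-port` option (see section 2.1.2). The sketch below follows that link using values copied from the listings. The helper names and the dictionary layout are illustrative assumptions, not an OVN API.

```python
LRP_PREFIX = "lrp-"


def router_port_name(neutron_port_id):
    """Logical_Router_Port name for a Neutron router port UUID."""
    return LRP_PREFIX + neutron_port_id


def attached_router_port(switch_port):
    """Return the router port a type=router switch port attaches to."""
    if switch_port.get("type") != "router":
        return None
    return switch_port.get("options", {}).get("router-port")


# Values copied from the Logical_Switch_Port listing in section 2.1.2.
router_side = {
    "name": "d46cf500-9d21-49c5-8099-0b7c53ae5e32",
    "type": "router",
    "options": {"router-port": "lrp-d46cf500-9d21-49c5-8099-0b7c53ae5e32"},
}
vm_side = {
    "name": "a2d480dc-ec4c-4a91-aac7-b2c015afeab6",
    "type": "",
    "options": {"requested-chassis": "compute"},
}

print(attached_router_port(router_side) == router_port_name(router_side["name"]))
print(attached_router_port(vm_side))
```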

2.1.6 Logical_Router_Static_Route

Corresponds to a static route on a Neutron router (qrouter). An example follows.

[root@controller ~]# ovn-nbctl list Logical_Router_Static_Route

_uuid : 247c1bca-c533-409e-8acc-c3ebe823a3f6

external_ids : {"neutron:is_ext_gw"="true", "neutron:subnet_id"="ce1c85bb-f45a-4aa6-8ef3-8f8eed700150"}

ip_prefix : "0.0.0.0/0"

nexthop : "172.168.1.1"

output_port : []

policy : []

2.1.7 NAT

Corresponds to SNAT, DNAT, and combined SNAT and DNAT in Neutron. An example follows.

[root@controller ~]# ovn-nbctl list NAT

_uuid : 9f7253e0-4bdf-46a1-b7ba-6aef55e41710

--->neutron:fip_id is the floating IP UUID; neutron:fip_port_id is the UUID of the VM port the floating IP is bound to.

external_ids : {"neutron:fip_external_mac"="fa:16:3e:4d:89:bc", "neutron:fip_id"="09c2c4e1-2a5a-4579-a12a-aa2d9b891cd4", "neutron:fip_port_id"="a2d480dc-ec4c-4a91-aac7-b2c015afeab6", "neutron:revision_number"="6", "neutron:router_name"="neutron-0e2754a2-c506-4593-a94c-1b9e0549c8d9"}

external_ip : "172.168.1.5" --->floating IP bound to the VM

external_mac : "fa:16:3e:4d:89:bc" --->MAC address of the floating IP port for 172.168.1.5

logical_ip : "10.1.1.11" --->IP address of the bound VM

logical_port : "a2d480dc-ec4c-4a91-aac7-b2c015afeab6" --->UUID of the VM port the floating IP is bound to

type : dnat_and_snat

_uuid : 5b0d2928-f03c-4bf5-b060-f29fb4ca8392

external_ids : {}

external_ip : "172.168.1.4"

external_mac : []

logical_ip : "10.1.2.0/24"

logical_port : []

type : snat

_uuid : 598db320-5c89-439d-a6bb-227588860626

external_ids : {}

external_ip : "172.168.1.4"

external_mac : []

logical_ip : "10.1.1.0/24"

logical_port : []

type : snat

_uuid : 9c1020ab-ecdf-4873-a511-5345f88991df

external_ids : {"neutron:fip_external_mac"="fa:16:3e:0a:28:e1", "neutron:fip_id"="90b39396-84f5-4b91-80ec-b0fcd26b3d62", "neutron:fip_port_id"="40b3a54d-4cf4-4a8d-bc03-d0f418824e10", "neutron:revision_number"="14", "neutron:router_name"="neutron-0e2754a2-c506-4593-a94c-1b9e0549c8d9"}

external_ip : "172.168.1.2"

external_mac : "fa:16:3e:0a:28:e1" --->MAC address of the floating IP port for 172.168.1.2

logical_ip : "10.1.1.6"

logical_port : "40b3a54d-4cf4-4a8d-bc03-d0f418824e10" --->UUID of the VM port the floating IP is bound to

type : dnat_and_snat
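One way to read the NAT table is to ask which external address a given VM uses for outbound traffic: a VM with a floating IP (`dnat_and_snat`) uses that floating IP, and any other VM falls back to the `snat` rule that covers its subnet. The sketch below encodes that reading with rows copied from the listing. `egress_ip` is a hypothetical helper, and giving the one-to-one floating IP rule precedence is the assumption it encodes.

```python
import ipaddress

# Rows copied from the NAT listing above (only the columns used here).
NAT_ROWS = [
    {"type": "dnat_and_snat", "logical_ip": "10.1.1.11", "external_ip": "172.168.1.5"},
    {"type": "dnat_and_snat", "logical_ip": "10.1.1.6", "external_ip": "172.168.1.2"},
    {"type": "snat", "logical_ip": "10.1.1.0/24", "external_ip": "172.168.1.4"},
    {"type": "snat", "logical_ip": "10.1.2.0/24", "external_ip": "172.168.1.4"},
]


def egress_ip(nat_rows, vm_ip):
    """Return the external IP used for traffic leaving from vm_ip, or None."""
    addr = ipaddress.ip_address(vm_ip)
    # A floating IP is an exact one-to-one mapping, so check it first.
    for row in nat_rows:
        if (row["type"] == "dnat_and_snat"
                and ipaddress.ip_address(row["logical_ip"]) == addr):
            return row["external_ip"]
    # Otherwise fall back to the SNAT rule whose subnet contains the VM.
    for row in nat_rows:
        if row["type"] == "snat" and addr in ipaddress.ip_network(row["logical_ip"]):
            return row["external_ip"]
    return None


for ip in ("10.1.1.11", "10.1.1.4", "10.1.2.8"):
    print(ip, "->", egress_ip(NAT_ROWS, ip))
```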

2.1.8 DHCP_Options

Corresponds to the DHCP configuration of a Neutron subnet. An example follows.

[root@controller ~]# ovn-nbctl list DHCP_Options

_uuid : 4569e4ed-c093-4c72-a80c-d84314bb977a

cidr : "10.1.2.0/24"

external_ids : {"neutron:revision_number"="0", subnet_id="6120c237-783e-4292-a39e-abc80af97605"}

options : {dns_server="{114.114.114.114}", lease_time="43200", mtu="1442", router="10.1.2.1", server_id="10.1.2.1", server_mac="fa:16:3e:a2:89:9b"}

_uuid : 59f9d0ee-cde4-48fc-b97a-826e53d412ce

cidr : "10.1.1.0/24"

external_ids : {"neutron:revision_number"="0", subnet_id="1069bf66-b8c7-44da-b7dc-978258e49c37"}

options : {dns_server="{114.114.114.114}", lease_time="43200", mtu="1442", router="10.1.1.1", server_id="10.1.1.1", server_mac="fa:16:3e:7f:ae:06"}
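The `mtu="1442"` value offered to VMs appears to be consistent with the Geneve settings from section 1.1.3. The sketch below shows the arithmetic under two assumptions this document does not state: that the physical network MTU is 1500 bytes, and that Neutron derives the tenant MTU by subtracting the outer IP header and `max_header_size` from it. `tenant_mtu` is a hypothetical helper.

```python
# Outer IP header size in bytes, keyed by overlay_ip_version.
IP_HEADER = {4: 20, 6: 40}


def tenant_mtu(physical_mtu=1500, overlay_ip_version=4, max_header_size=38):
    """MTU left for the VM after Geneve encapsulation (assumed formula)."""
    return physical_mtu - IP_HEADER[overlay_ip_version] - max_header_size


print(tenant_mtu())
```

With the defaults the result is 1500 - 20 - 38 = 1442, the same value that appears in the DHCP options. On a network with a different physical MTU, the advertised value would shift accordingly.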

2.1.9 Gateway_Chassis

Corresponds to a Neutron network node. An example follows.

[root@controller ~]# ovn-nbctl list Gateway_Chassis

_uuid : 2edf9f18-6cf0-450a-8787-66c4b7b3c6f1

chassis_name : "1ee0be4a-0aed-4d44-9ac6-de27f1ca2e1c"

external_ids : {}

name : "lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e_1ee0be4a-0aed-4d44-9ac6-de27f1ca2e1c" --->lrp-<qg port uuid>_<chassis_name>

options : {}

priority : 1

_uuid : 9390af18-e3e2-467d-ae09-6312e3d0cf79

chassis_name : "85470817-23d3-4e39-9375-f4fe3ec7e986"

external_ids : {}

name : "lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e_85470817-23d3-4e39-9375-f4fe3ec7e986"

options : {}

priority : 2
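The two rows above differ in `priority`, and OVN prefers the gateway chassis with the highest priority value. The sketch below picks the preferred chassis from rows copied from the listing and rebuilds the row name from its parts. Both helpers are hypothetical, and the selection assumes every listed chassis is alive, so failover is not modelled.

```python
# Rows copied from the Gateway_Chassis listing above.
ROWS = [
    {"chassis_name": "1ee0be4a-0aed-4d44-9ac6-de27f1ca2e1c", "priority": 1},
    {"chassis_name": "85470817-23d3-4e39-9375-f4fe3ec7e986", "priority": 2},
]


def gateway_chassis_row_name(router_port, chassis_name):
    """Row name in the form <router port name>_<chassis name>."""
    return f"{router_port}_{chassis_name}"


def preferred_chassis(rows):
    """Chassis with the highest priority, or None for an empty list."""
    if not rows:
        return None
    return max(rows, key=lambda row: row["priority"])["chassis_name"]


print(preferred_chassis(ROWS))
```

In this deployment the preferred chassis name belongs to the host `controller` (see section 2.2.1), which is also the chassis that holds the `cr-lrp-...` port in section 2.2.6.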

2.2 SB

2.2.1 Chassis

Corresponds to an OpenStack node. An example follows.

[root@controller ~]# ovn-sbctl list Chassis

_uuid : a0d7f332-0310-4e7d-b9ba-ecaed9f3aec7

encaps : [50d00e60-a616-4909-9356-2f8772b69bca, 554b9f7c-168d-4e2a-a5cc-5a54caecfd61]

external_ids : {datapath-type=system, iface-types="erspan,geneve,gre,internal,ip6erspan,ip6gre,lisp,patch,stt,system,tap,vxlan", ovn-bridge-mappings="provider:br-ex", ovn-cms-options=enable-chassis-as-gw}

hostname : compute

name : "1ee0be4a-0aed-4d44-9ac6-de27f1ca2e1c"

nb_cfg : 0

vtep_logical_switches: []

_uuid : d374cbd2-f305-48e4-897a-430b279626d8

encaps : [26121127-9b0f-40ec-b88d-258590384722, ae4202a0-5346-4cce-9ada-46f6f7c84fc1]

external_ids : {datapath-type=system, iface-types="erspan,geneve,gre,internal,ip6erspan,ip6gre,lisp,patch,stt,system,tap,vxlan", ovn-bridge-mappings="provider:br-ex", ovn-cms-options=enable-chassis-as-gw}

hostname : controller

name : "85470817-23d3-4e39-9375-f4fe3ec7e986"

nb_cfg : 0

vtep_logical_switches: []

2.2.2 Encap

Configuration of the overlay tunnel encapsulation protocols. An example follows.

[root@controller ~]# ovn-sbctl list Encap

_uuid : ae4202a0-5346-4cce-9ada-46f6f7c84fc1

chassis_name : "85470817-23d3-4e39-9375-f4fe3ec7e986"

ip : "192.168.0.201"

options : {csum="true"}

type : geneve

_uuid : 554b9f7c-168d-4e2a-a5cc-5a54caecfd61

chassis_name : "1ee0be4a-0aed-4d44-9ac6-de27f1ca2e1c"

ip : "192.168.0.202"

options : {csum="true"}

type : geneve

_uuid : 50d00e60-a616-4909-9356-2f8772b69bca

chassis_name : "1ee0be4a-0aed-4d44-9ac6-de27f1ca2e1c"

ip : "192.168.0.202"

options : {csum="true"}

type : vxlan

_uuid : 26121127-9b0f-40ec-b88d-258590384722

chassis_name : "85470817-23d3-4e39-9375-f4fe3ec7e986"

ip : "192.168.0.201"

options : {csum="true"}

type : vxlan

2.2.3 LFlow

See chapter 3.

2.2.4 Multicast_Group

Multicast groups.

[root@controller ~]# ovn-sbctl list Multicast_Group

_uuid : 9382799c-fa31-410d-b190-df071af739ad

datapath : 5b08b120-ac39-4999-93a6-291b93e24e80

name : _MC_unknown

ports : [4bd5f0e3-80f9-4974-847a-e1a07a1b4529] --->localnet port

tunnel_key : 65534

_uuid : 941f8cd2-b3e3-4eaa-ad92-db8c6cdb12f6

datapath : a201e301-758b-454c-90da-105cc1dbdd12

name : _MC_flood

ports : [0933146a-fa22-4d16-8bac-6078c6a2e424, 1a46a473-a2d9-40d4-b9c4-c20aff1bea35, 8faf754b-bff2-491f-b5a5-a8385fb1a606, 9fa1dc7e-6191-48b6-a2df-7012aa00fb23, b5a2fcf8-5d19-4332-bf9c-fb49d5fe6123, cc6137e9-e330-43c5-b709-45ff9a7dd86c, d09604f1-4d5c-4968-8b23-db2a1706baf4]

tunnel_key : 65535

_uuid : 0d209daa-6131-4abb-bc60-ca9e057d669b

datapath : 5b08b120-ac39-4999-93a6-291b93e24e80

name : _MC_flood

ports : [37aa2ef1-a16a-4e42-aeda-d55ad50edde9, 4bd5f0e3-80f9-4974-847a-e1a07a1b4529] --->qg port and localnet port

tunnel_key : 65535

2.2.5 Datapath_Binding

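Every Logical_Switch and every Logical_Router maps to one datapath. The datapath ID is the tunnel_key value in this table and is globally unique. When traffic enters a datapath and is matched against the flow tables, the flows match on the metadata field, which holds the datapath ID, that is, the tunnel_key value. See chapter 3 for details. An example follows.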

[root@controller ~]# ovn-sbctl list Datapath_Binding

_uuid : 5b08b120-ac39-4999-93a6-291b93e24e80

external_ids : {logical-switch="a7f84ef5-28f5-4b5d-a3aa-935251cde27f", name="neutron-fc8dfbcb-0272-4025-8596-850345f86e13", "name2"=ext}

tunnel_key : 3

_uuid : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {logical-switch="588ab948-6348-4298-a913-f0454d752bb8", name="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "name2"="n1"}

tunnel_key : 1

_uuid : 079867e3-b06e-451c-9866-745f750ad59d

external_ids : {logical-router="22c3eb7c-ae8c-4fea-9b63-552bc95a407e", name="neutron-0e2754a2-c506-4593-a94c-1b9e0549c8d9", "name2"="n1router"}

tunnel_key : 4
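When tracing flows it helps to turn a metadata value back into a datapath. The sketch below parses the plain `column : value` text printed by `ovn-sbctl list` into dictionaries and builds a tunnel_key lookup. `parse_list_output` is a hypothetical helper that keeps every value as a raw string, starts a new record at each `_uuid` line, and does not handle trailing `--->` annotations like the ones in this document.

```python
SAMPLE = """\
_uuid               : 5b08b120-ac39-4999-93a6-291b93e24e80
external_ids        : {logical-switch="a7f84ef5-28f5-4b5d-a3aa-935251cde27f", name="neutron-fc8dfbcb-0272-4025-8596-850345f86e13", "name2"=ext}
tunnel_key          : 3

_uuid               : 079867e3-b06e-451c-9866-745f750ad59d
external_ids        : {logical-router="22c3eb7c-ae8c-4fea-9b63-552bc95a407e", name="neutron-0e2754a2-c506-4593-a94c-1b9e0549c8d9", "name2"="n1router"}
tunnel_key          : 4
"""


def parse_list_output(text):
    """Parse `ovn-nbctl list` / `ovn-sbctl list` text into a list of dicts."""
    records = []
    for line in text.splitlines():
        if ":" not in line:
            continue  # blank separator lines carry no column
        # Column names contain no colon, so split at the first one only.
        column, _, value = line.partition(":")
        column, value = column.strip(), value.strip()
        if column == "_uuid":
            records.append({})
        if records:
            records[-1][column] = value
    return records


by_key = {int(r["tunnel_key"]): r["_uuid"] for r in parse_list_output(SAMPLE)}
print(by_key[4])
```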

2.2.6 Port_Binding

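Here the tunnel_key value is scoped to a single datapath: it is unique within a datapath, and each datapath allocates its tunnel_key values independently. The flow tables match on tunnel_key to identify the ingress and egress port: the ingress port is stored in reg14 and the egress port in reg15. See chapter 3 for details.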

[root@controller ~]# ovn-sbctl list Port_Binding

_uuid : da2c2558-b04d-4546-b8b7-8b1939778cc6

chassis : []

datapath : 079867e3-b06e-451c-9866-745f750ad59d

external_ids : {}

gateway_chassis : []

logical_port : "lrp-5478dcf9-9cd4-4410-b7a5-340ee014a17e"

mac : ["fa:16:3e:cc:5e:81 10.1.1.1/24"]

nat_addresses : []

options : {peer="5478dcf9-9cd4-4410-b7a5-340ee014a17e"}

parent_port : []

tag : []

tunnel_key : 1

type : patch

_uuid : b5a2fcf8-5d19-4332-bf9c-fb49d5fe6123

chassis : []

datapath : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {"neutron:cidrs"="10.1.1.1/24", "neutron:device_id"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:device_owner"="network:router_interface", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="7", "neutron:security_group_ids"=""}

gateway_chassis : []

logical_port : "5478dcf9-9cd4-4410-b7a5-340ee014a17e"

mac : [router]

nat_addresses : []

options : {peer="lrp-5478dcf9-9cd4-4410-b7a5-340ee014a17e"}

parent_port : []

tag : []

tunnel_key : 8

type : patch

_uuid : 37aa2ef1-a16a-4e42-aeda-d55ad50edde9

chassis : []

datapath : 5b08b120-ac39-4999-93a6-291b93e24e80

external_ids : {"neutron:cidrs"="172.168.1.4/24", "neutron:device_id"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:device_owner"="network:router_gateway", "neutron:network_name"="neutron-fc8dfbcb-0272-4025-8596-850345f86e13", "neutron:port_name"="", "neutron:project_id"="", "neutron:revision_number"="7", "neutron:security_group_ids"=""}

gateway_chassis : []

logical_port : "4cdf4897-c0e5-4a00-b182-f9eae17b499e"

mac : [router]

nat_addresses : ["fa:16:3e:0a:28:e1 172.168.1.2 is_chassis_resident(\"40b3a54d-4cf4-4a8d-bc03-d0f418824e10\")", "fa:16:3e:4d:89:bc 172.168.1.5 is_chassis_resident(\"a2d480dc-ec4c-4a91-aac7-b2c015afeab6\")", "fa:16:3e:dc:9a:73 172.168.1.4 172.168.1.4 is_chassis_resident(\"cr-lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e\")"]

options : {peer="lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e"}

parent_port : []

tag : []

tunnel_key : 2

type : patch

_uuid : d09604f1-4d5c-4968-8b23-db2a1706baf4

chassis : []

datapath : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {"neutron:cidrs"="10.1.2.1/24", "neutron:device_id"="0e2754a2-c506-4593-a94c-1b9e0549c8d9", "neutron:device_owner"="network:router_interface", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="7", "neutron:security_group_ids"=""}

gateway_chassis : []

logical_port : "d46cf500-9d21-49c5-8099-0b7c53ae5e32"

mac : [router]

nat_addresses : []

options : {peer="lrp-d46cf500-9d21-49c5-8099-0b7c53ae5e32"}

parent_port : []

tag : []

tunnel_key : 9

type : patch

_uuid : cc6137e9-e330-43c5-b709-45ff9a7dd86c

chassis : a0d7f332-0310-4e7d-b9ba-ecaed9f3aec7

datapath : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {name="n1subnet1_vm2", "neutron:cidrs"="10.1.1.6/24", "neutron:device_id"="f3726b8a-0587-4755-b97d-27fe28ed8d66", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet1_vm2", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="22", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

gateway_chassis : []

logical_port : "40b3a54d-4cf4-4a8d-bc03-d0f418824e10"

mac : ["fa:16:3e:92:06:64 10.1.1.6"]

nat_addresses : []

options : {requested-chassis=compute}

parent_port : []

tag : []

tunnel_key : 2

type : ""

_uuid : bb591372-c196-4e93-8bc6-6e8332c61d5f

chassis : d374cbd2-f305-48e4-897a-430b279626d8

datapath : 079867e3-b06e-451c-9866-745f750ad59d

external_ids : {}

gateway_chassis : [6578dfff-f69d-4eff-b2da-ad659c6c3c1e, 9d192820-4d76-4f9b-9ab1-589ff34011e7]

logical_port : "cr-lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e"

mac : ["fa:16:3e:dc:9a:73 172.168.1.4/24"]

nat_addresses : []

options : {distributed-port="lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e"}

parent_port : []

tag : []

tunnel_key : 4

type : chassisredirect

_uuid : 0933146a-fa22-4d16-8bac-6078c6a2e424

chassis : d374cbd2-f305-48e4-897a-430b279626d8

datapath : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {name="n1subnet2_vm1", "neutron:cidrs"="10.1.2.8/24", "neutron:device_id"="8401ffa7-73d1-4ddb-b2c0-816f6e632ad3", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet2_vm1", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="26", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

gateway_chassis : []

logical_port : "ce2635b2-ce67-487b-8d9c-af1025fdb5c0"

mac : ["fa:16:3e:9e:d8:3a 10.1.2.8"]

nat_addresses : []

options : {requested-chassis=controller}

parent_port : []

tag : []

tunnel_key : 4

type : ""

_uuid : 8faf754b-bff2-491f-b5a5-a8385fb1a606

chassis : d374cbd2-f305-48e4-897a-430b279626d8

datapath : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {name="n1subnet1_vm3", "neutron:cidrs"="10.1.1.4/24", "neutron:device_id"="ec1b9d7d-e916-4aca-82be-bf5883d7d252", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet1_vm3", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="22", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

gateway_chassis : []

logical_port : "c0e5c1f7-3d77-4bc8-b842-9b49f2878a10"

mac : ["fa:16:3e:76:75:49 10.1.1.4"]

nat_addresses : []

options : {requested-chassis=controller}

parent_port : []

tag : []

tunnel_key : 3

type : ""

_uuid : 4bd5f0e3-80f9-4974-847a-e1a07a1b4529

chassis : []

datapath : 5b08b120-ac39-4999-93a6-291b93e24e80

external_ids : {}

gateway_chassis : []

logical_port : "provnet-fc8dfbcb-0272-4025-8596-850345f86e13"

mac : [unknown]

nat_addresses : []

options : {network_name=provider}

parent_port : []

tag : []

tunnel_key : 1

type : localnet

_uuid : 1a46a473-a2d9-40d4-b9c4-c20aff1bea35

chassis : a0d7f332-0310-4e7d-b9ba-ecaed9f3aec7

datapath : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {name="n1subnet1_vm1", "neutron:cidrs"="10.1.1.11/24", "neutron:device_id"="afda5e0d-9698-4b3e-8b7a-cb0b03ad733e", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet1_vm1", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="22", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

gateway_chassis : []

logical_port : "a2d480dc-ec4c-4a91-aac7-b2c015afeab6"

mac : ["fa:16:3e:f1:da:40 10.1.1.11"]

nat_addresses : []

options : {requested-chassis=compute}

parent_port : []

tag : []

tunnel_key : 1

type : ""

_uuid : 77c6af15-6ebc-4a99-824b-889f18bb632e

chassis : []

datapath : 079867e3-b06e-451c-9866-745f750ad59d

external_ids : {}

gateway_chassis : []

logical_port : "lrp-d46cf500-9d21-49c5-8099-0b7c53ae5e32"

mac : ["fa:16:3e:e9:ac:85 10.1.2.1/24"]

nat_addresses : []

options : {peer="d46cf500-9d21-49c5-8099-0b7c53ae5e32"}

parent_port : []

tag : []

tunnel_key : 2

type : patch

_uuid : 8cac665d-e9da-4405-897c-381e84d74807

chassis : []

datapath : 079867e3-b06e-451c-9866-745f750ad59d

external_ids : {}

gateway_chassis : []

logical_port : "lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e"

mac : ["fa:16:3e:dc:9a:73 172.168.1.4/24"]

nat_addresses : []

options : {peer="4cdf4897-c0e5-4a00-b182-f9eae17b499e"}

parent_port : []

tag : []

tunnel_key : 3

type : patch

_uuid : 9fa1dc7e-6191-48b6-a2df-7012aa00fb23

chassis : a0d7f332-0310-4e7d-b9ba-ecaed9f3aec7

datapath : a201e301-758b-454c-90da-105cc1dbdd12

external_ids : {name="n1subnet2_vm2", "neutron:cidrs"="10.1.2.6/24", "neutron:device_id"="4d7ce472-b75d-44c7-9a67-23884653e8be", "neutron:device_owner"="compute:nova", "neutron:network_name"="neutron-087e9517-8a82-43b5-9c84-24d20a15b16d", "neutron:port_name"="n1subnet2_vm2", "neutron:project_id"="4f1de4c6c6704b618f5ce2eeb8aa4135", "neutron:revision_number"="26", "neutron:security_group_ids"="847a5488-34f9-4c42-96d5-807a30c0fd03"}

gateway_chassis : []

logical_port : "d2bd73be-6cfa-441a-94fc-579532d75025"

mac : ["fa:16:3e:34:b3:21 10.1.2.6"]

nat_addresses : []

options : {requested-chassis=compute}

parent_port : []

tag : []

tunnel_key : 7

type : ""

2.2.7 MAC_Binding

[root@controller ~]# ovn-sbctl list MAC_Binding

_uuid : 7fc0f6c1-fe9e-433b-a68f-7a1dcec57d5c

datapath : f615d779-2e3c-4bd2-ac9c-55d2d6ee6931

ip : "172.168.1.1"

logical_port : "lrp-171b3982-1141-44c9-a782-d92c02c9fa3f"

mac : "ce:1c:ea:79:dd:44"

_uuid : eb33f839-cd7a-4978-9587-499de3dc919a

datapath : f615d779-2e3c-4bd2-ac9c-55d2d6ee6931

ip : "::"

logical_port : "lrp-171b3982-1141-44c9-a782-d92c02c9fa3f"

mac : "00:00:00:00:00:00"

2.2.8 DHCP_Options

NA

2.2.9 Gateway_Chassis

[root@controller ~]# ovn-sbctl list Gateway_Chassis

_uuid : 9d192820-4d76-4f9b-9ab1-589ff34011e7

chassis : d374cbd2-f305-48e4-897a-430b279626d8

external_ids : {}

name : "lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e_85470817-23d3-4e39-9375-f4fe3ec7e986"

options : {}

priority : 2

_uuid : 6578dfff-f69d-4eff-b2da-ad659c6c3c1e

chassis : a0d7f332-0310-4e7d-b9ba-ecaed9f3aec7

external_ids : {}

name : "lrp-4cdf4897-c0e5-4a00-b182-f9eae17b499e_1ee0be4a-0aed-4d44-9ac6-de27f1ca2e1c"

options : {}

priority : 1
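
The listings in this chapter all use the same "column : value" record layout, so they are easy to post-process. The following is a minimal sketch, not part of any OVN tooling: `parse_ovsdb_list` is a hypothetical helper, and the sample text is trimmed from the Gateway_Chassis output above. It picks the record with the highest priority, on the assumption that OVN prefers the highest-priority Gateway_Chassis that is alive.

```python
def parse_ovsdb_list(text):
    """Split `ovn-sbctl list <Table>` style output into one dict per record."""
    records = []
    for line in text.splitlines():
        if ":" not in line:
            continue  # skip blank lines and anything that is not "column : value"
        # Split on the first colon only, so MAC addresses in values stay intact.
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "_uuid":
            records.append({})  # every record starts with its _uuid column
        if records:
            records[-1][key] = value
    return records


# Sample trimmed from the Gateway_Chassis listing above.
sample = """
_uuid : 9d192820-4d76-4f9b-9ab1-589ff34011e7
chassis : d374cbd2-f305-48e4-897a-430b279626d8
priority : 2

_uuid : 6578dfff-f69d-4eff-b2da-ad659c6c3c1e
chassis : a0d7f332-0310-4e7d-b9ba-ecaed9f3aec7
priority : 1
"""

gateways = parse_ovsdb_list(sample)
# Assumption: the highest priority wins when that chassis is reachable.
preferred = max(gateways, key=lambda rec: int(rec["priority"]))
print(preferred["chassis"])
```

The same helper can be applied to the Port_Binding or MAC_Binding output, since only the first colon on each line is treated as the separator.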

3 Flow Pipeline

3.1 Registers

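- metadata: holds the datapath ID, i.e. the tunnel_key in Datapath_Binding

- reg14: holds the logical input port

- reg15: holds the logical output port

- reg13: conntrack zone field for logical ports (valid only on the local node, not carried across nodes)

- reg11: conntrack zone field for routers, DNAT (valid only on the local node, not carried across nodes)

- reg12: conntrack zone field for routers, SNAT (valid only on the local node, not carried across nodes)

- reg10: context (flags) passed between flow tables (valid only on the local node, not carried across nodes)

- xxreg*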

| Register | OpenFlow field | Bit layout |
| --- | --- | --- |
| xxreg0 | NXM_NX_XXREG0 | bits 0-31: reg3; bits 32-63: reg2; bits 64-95: reg1; bits 96-127: reg0 |
| xxreg1 | NXM_NX_XXREG1 | bits 0-31: reg7; bits 32-63: reg6; bits 64-95: reg5; bits 96-127: reg4 |
| xxreg2 | NXM_NX_XXREG2 | bits 0-31: reg11; bits 32-63: reg10; bits 64-95: reg9; bits 96-127: reg8 |
| xxreg3 | NXM_NX_XXREG3 | bits 0-31: reg15; bits 32-63: reg14; bits 64-95: reg13; bits 96-127: reg12 |

- xreg*

| Register | OpenFlow field | Bit layout |
| --- | --- | --- |
| xreg0 | OXM_OF_PKT_REG0 | bits 0-31: reg1; bits 32-63: reg0 |
| xreg1 | OXM_OF_PKT_REG1 | bits 0-31: reg3; bits 32-63: reg2 |
| xreg2 | OXM_OF_PKT_REG2 | bits 0-31: reg5; bits 32-63: reg4 |
| xreg3 | OXM_OF_PKT_REG3 | bits 0-31: reg7; bits 32-63: reg6 |
| xreg4 | OXM_OF_PKT_REG4 | bits 0-31: reg9; bits 32-63: reg8 |
| xreg5 | OXM_OF_PKT_REG5 | bits 0-31: reg11; bits 32-63: reg10 |
| xreg6 | OXM_OF_PKT_REG6 | bits 0-31: reg13; bits 32-63: reg12 |
| xreg7 | OXM_OF_PKT_REG7 | bits 0-31: reg15; bits 32-63: reg14 |
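
The overlay of the 32-bit registers onto the wider xreg and xxreg fields follows a simple rule: the lowest-numbered 32-bit register occupies the most significant bits. The sketch below reproduces the two layouts listed above and maps a bit of regN to its position inside the enclosing xxreg. The function names are illustrative only and are not OVS APIs.

```python
def xxreg_parts(n):
    """Return [(low_bit, high_bit, name)] for the 128-bit register xxreg<n>."""
    # xxreg<n> overlays reg(4n)..reg(4n+3); reg(4n) holds the top 32 bits.
    return [(32 * i, 32 * i + 31, f"reg{4 * n + 3 - i}") for i in range(4)]


def xreg_parts(n):
    """Return [(low_bit, high_bit, name)] for the 64-bit register xreg<n>."""
    # xreg<n> overlays reg(2n) and reg(2n+1); reg(2n) holds the top 32 bits.
    return [(32 * i, 32 * i + 31, f"reg{2 * n + 1 - i}") for i in range(2)]


def locate_in_xxreg(reg, bit=0):
    """Map bit `bit` of reg<reg> to (xxreg index, bit offset inside it)."""
    return reg // 4, 32 * (3 - reg % 4) + bit


print(xxreg_parts(0))
print(xreg_parts(7))
print(locate_in_xxreg(0, 0))
```

This is why a logical action such as `reg0[0] = 1` shows up in OpenFlow dumps as a load into `NXM_NX_XXREG0[96]`.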

3.2 Overlay Protocol

Geneve is the recommended protocol. For the reasons, see the Tunnel Encapsulations section of the ovn-architecture manual.

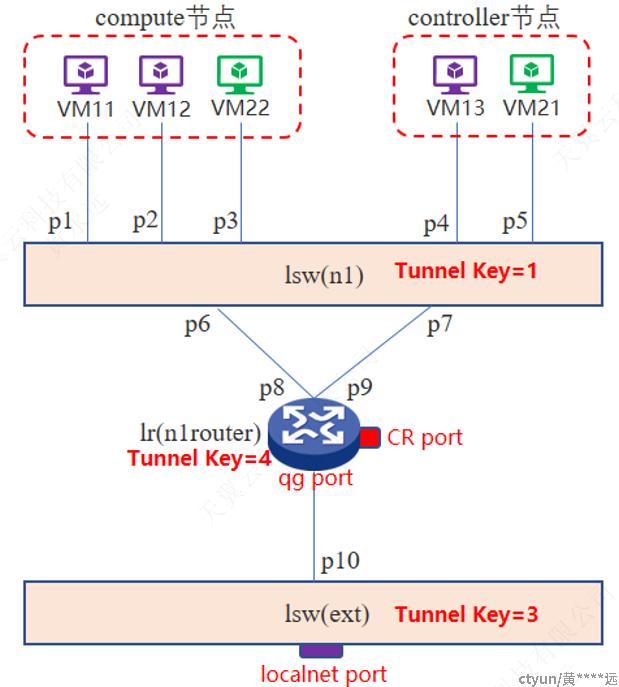

3.3 Network Topology

3.3.1 OVN View

The figure shows the OVN logical network topology used in this experiment, and the table gives the detailed configuration.

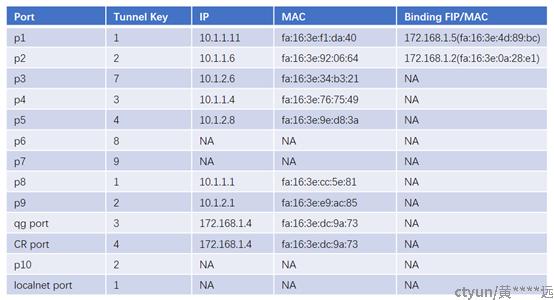

3.3.2 OVS View

3.4 Flow Table Matching

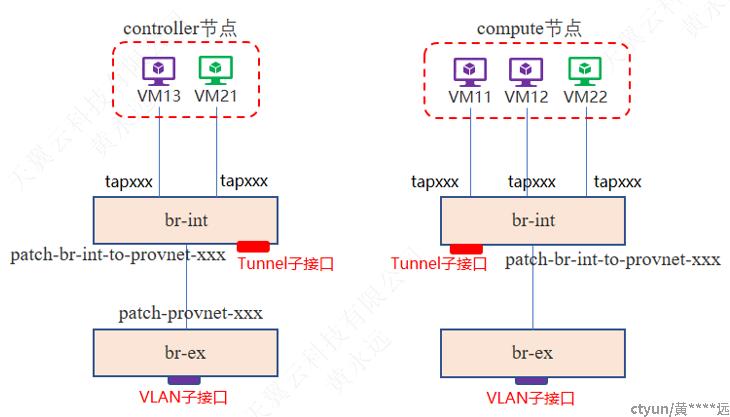

3.4.1 Tables

3.4.1.1 Common Tables

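| OpenFlow Table ID | Description |
| --- | --- |
| 0 | Physical-to-logical translation:<br>• Match a tap port on OVS br-int: save the corresponding datapath tunnel key to metadata and the logical ingress port tunnel key to reg14, then go to OpenFlow Table 8 (LFlow Ingress Table 0).<br>• Match a tunnel port on OVS br-int: save the tun_id to metadata, the logical ingress port tunnel key to reg14 and the logical egress port tunnel key to reg15, then go to OpenFlow Table 33.<br>• Match the patch port on OVS br-int (connected to br-ex): set the datapath tunnel key to the ext logical switch tunnel key and the logical ingress port tunnel key to the localnet port tunnel key, then go to OpenFlow Table 8 (LFlow Ingress Table 0). |
| 32 | Handles packets destined for remote nodes:<br>• Egress port on another node: the datapath tunnel key (held in metadata) is carried as the tunnel ID, the logical ingress/egress port tunnel keys are stored in tun_metadata0 (bits 0-15 egress port, bits 16-30 ingress port), and the packet is sent out of the tunnel port. Note: the ext lsw has no such rule even when the egress port is on another node, so the packet falls through to Table 33 for special handling.<br>• Match metadata = logical router datapath tunnel key with egress = CR port: the traffic must go to the Active Gateway_Chassis, so it is encapsulated in the same way (tun_metadata0 bits 0-15 egress port, bits 16-30 ingress port) and sent out of the tunnel port. Note: this flow exists on non-active gateway chassis and on all compute nodes. It does not exist on the Active Gateway Chassis, where the packet falls through to OpenFlow Table 33.<br>• Match metadata = ext logical switch tunnel key with multicast/broadcast egress. Action 1: set egress to the tunnel key of the patch port between the ext lsw and the lr, and go to OpenFlow Table 34. Action 2: set egress to multicast/broadcast and go to OpenFlow Table 33. Summary: when the ext logical switch receives a multicast/broadcast packet, one copy goes to the local logical router port (through the lsw-lr patch port) and the packet then goes to OpenFlow Table 33, which sends it to all local tap ports. Normally there is only one localnet port, but VMs attached directly to ext must also be considered, in which case their tap ports are included.<br>• Match metadata = ordinary logical switch tunnel key with multicast/broadcast egress. Action 1: set egress to the router patch port (connected to the logical router) and go to OpenFlow Table 34 (one such action per router patch port). Action 2: encapsulate as above (tun_metadata0 bits 0-15 egress port, bits 16-30 ingress port) and send out of the tunnel port. Action 3: go to OpenFlow Table 33. Summary: when an ordinary logical switch receives a multicast/broadcast packet, one copy goes to the local logical router, one copy goes to the other nodes through the tunnel, and the packet then goes to OpenFlow Table 33, which sends it to all local tap ports.<br>• Otherwise: go to OpenFlow Table 33. |
| 33 | Handles packets destined for the local node:<br>• Multicast/broadcast: set egress to each remaining port (tunnel ports and router patch ports are excluded, so the remaining ports are VM or localnet ports) and go to OpenFlow Table 34. Note: this occurs only in logical switch datapaths, never in logical router datapaths.<br>• Match the datapath tunnel key with an egress port on this node: go to OpenFlow Table 34. Note: this applies to ordinary lsw. The ext lsw is described next.<br>• Match datapath tunnel key = ext lsw datapath tunnel key: if the egress port is on this node (VM, localnet or router port), go to OpenFlow Table 34. If the egress port is on another node (the VM case), set egress to the localnet port and resubmit to Table 33 recursively, so that the packet leaves through br-ex and reaches the other node over the external network.<br>• Match datapath tunnel key = router datapath tunnel key with logical egress port tunnel key = CR port tunnel key: set the logical egress port tunnel key to the qg port tunnel key and go to OpenFlow Table 34. Note: this flow exists only on the Active Gateway Chassis.<br>• Match the datapath tunnel key with logical egress port tunnel key = 0xfffe (unknown unicast): set the logical egress port tunnel key to the localnet port tunnel key and go to OpenFlow Table 34. Note: this flow exists only in the ext lsw datapath. |
| 34 | Handles hairpin, i.e. the ingress and egress ports are the same:<br>• Ingress equals egress and reg10=0/0x1: drop.<br>• Otherwise: clear reg0~reg9 and go to OpenFlow Table 40 (LFlow Egress Table 0). Note: this enters the egress flow pipeline of the datapath. |
| 64 | • When the loopback flag is set (reg10=0x1/0x1): to get around OVS refusing by default to output a packet to its own ingress port, save IN_PORT, set it to 0, go to OpenFlow Table 65, and finally restore IN_PORT.<br>• Otherwise: go to OpenFlow Table 65. |
| 65 | The reverse of OpenFlow Table 0, i.e. logical-to-physical translation:<br>• Match the datapath tunnel key with egress = VM logical egress port tunnel key: output directly to the corresponding tap port on br-int. Note: applies to VMs, including VMs attached directly to the ext network.<br>• Forwarding across datapaths: match the datapath tunnel key with egress = patch port (connected to another datapath): clear the registers (reg0~reg15), set the new datapath tunnel key in metadata, set the logical ingress port tunnel key on the new datapath in reg14, and go to OpenFlow Table 8 (LFlow Ingress Table 0).<br>• Last case: match the ext lsw datapath tunnel key with egress = localnet port: send directly out of patch-br-int-to to the br-ex bridge. br-ex has a single NORMAL flow that forwards to the external physical network. |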
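
Two details of the common tables lend themselves to a small worked example: the tun_metadata0 layout used by Table 32 (egress port key in bits 0-15, ingress port key in bits 16-30) and the hairpin check in Table 34. The sketch below is illustrative only. The function names are made up for this example, and it models the bit layout and the drop condition as described above rather than any OVN code.

```python
def pack_tun_metadata0(ingress_key, egress_key):
    """Pack logical port tunnel keys as Table 32 does before tunneling."""
    if not 0 <= ingress_key < (1 << 15):
        raise ValueError("ingress port key must fit in 15 bits (bits 16-30)")
    if not 0 <= egress_key < (1 << 16):
        raise ValueError("egress port key must fit in 16 bits (bits 0-15)")
    return (ingress_key << 16) | egress_key


def unpack_tun_metadata0(value):
    """Recover (ingress_key, egress_key) on the receiving node (Table 0)."""
    return (value >> 16) & 0x7FFF, value & 0xFFFF


def table34_drops(inport_key, outport_key, reg10):
    """Table 34: drop when ingress == egress and the loopback bit reg10[0] is clear."""
    return inport_key == outport_key and (reg10 & 0x1) == 0


packed = pack_tun_metadata0(ingress_key=1, egress_key=8)
print(hex(packed))
print(unpack_tun_metadata0(packed))
print(table34_drops(3, 3, reg10=0x0), table34_drops(3, 3, reg10=0x1))
```

The 0xffff and 0xfffe egress values used for flooding and unknown unicast fit in the 16-bit egress field, which is consistent with the egress side being one bit wider than the ingress side.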

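3.4.1.2 Logical Switch

| Direction | LFlow Table ID | OpenFlow Table ID | Description |
| --- | --- | --- | --- |
| Ingress | 0 | 8 | Admission control and L2 port security:<br>• Source MAC is a multicast/broadcast address: drop.<br>• Packet carries a VLAN ID: drop.<br>• Logical port with port security enabled: strictly match the logical ingress port tunnel key and source MAC = the port's MAC, then go to OpenFlow Table 9. Note: this amounts to MAC anti-spoofing.<br>• Logical port without port security (e.g. a router port): match only the logical ingress port tunnel key, then go to OpenFlow Table 9.<br>• No match: dropped by default (there is no explicit flow). |
| Ingress | 1 | 9 | IP port security:<br>• Allow DHCP requests: match the datapath tunnel key, the logical ingress port tunnel key, source MAC = the port's MAC, source IP 0.0.0.0, UDP, source port 68, destination port 67, then go to OpenFlow Table 10. Note: applies to VMs.<br>• Match the datapath tunnel key, the logical ingress port tunnel key, source MAC = the port's MAC, source IP = the port's IP, then go to OpenFlow Table 10. Note: applies to VMs and amounts to IP anti-spoofing.<br>• If neither of the two rules above matches: match the datapath tunnel key, the logical ingress port tunnel key, source MAC = the port's MAC: drop. Note: applies to VMs.<br>• Otherwise: go to OpenFlow Table 10. |
| Ingress | 2 | 10 | ARP port security:<br>• Match the datapath tunnel key, the logical ingress port tunnel key, ARP packet, source MAC = the port's MAC, source IP = the port's IP, then go to OpenFlow Table 11. Note: applies to VMs and amounts to ARP anti-spoofing.<br>• If the rule above does not match: match the datapath tunnel key, the logical ingress port tunnel key, ARP packet: drop. Note: applies to VMs.<br>• Otherwise: go to OpenFlow Table 11. |
| Ingress | 3 | 11 | Pre-ACLs:<br>• Router ports do not need conntrack (this skips stateful ACL processing): match the datapath tunnel key, the logical ingress port tunnel key, IP packet, then go to OpenFlow Table 12.<br>• Localnet ports do not need conntrack (this skips stateful ACL processing): match the datapath tunnel key, the logical ingress port tunnel key, IP packet, then go to OpenFlow Table 12. Note: the localnet port is on the ext lsw.<br>• Some special packets do not need conntrack either (this skips stateful ACL processing) and go directly to OpenFlow Table 12.<br>• Packets that need conntrack: match the datapath tunnel key, IP packet, set reg0=0x1/0x1 (a flag meaning conntrack is required), then go to OpenFlow Table 12.<br>• Otherwise: go to OpenFlow Table 12. |
| Ingress | 4 | 12 | Pre-LB: load balancing, skipped for now. |
| Ingress | 5 | 13 | Pre-Stateful:<br>• Packets that need conntrack: match the datapath tunnel key, IP packet, reg0=0x1/0x1 (set in Pre-ACLs), execute the ct action with ct_zone taken from reg13, then go to OpenFlow Table 14.<br>• Otherwise: go to OpenFlow Table 14. |
| Ingress | 6 | 14 | ACL: security groups, skipped for now. |
| Ingress | 7 | 15 | QoS Marking: DSCP marking, skipped for now. |
| Ingress | 8 | 16 | QoS Meter: bandwidth, skipped for now. |
| Ingress | 9 | 17 | LB: load balancing, skipped for now. |
| Ingress | 10 | 18 | Stateful: skipped for now. |
| Ingress | 11 | 19 | ARP responder:<br>• A VM (local only) or router port sends an ARP request for its own IP address, usually to detect IP conflicts. The responder must be skipped in this case: if it answered, the VM or router port would conclude that the IP is in conflict and stop using it. So: match the datapath tunnel key, the logical ingress port tunnel key, ARP request, target IP = the VM's or router port's own IP, then go to OpenFlow Table 20.<br>• ARP replies are installed for all known IP/MAC pairs: match the datapath tunnel key, ARP request, target IP = VM or router port IP (including VMs on other nodes and the qg router port, which is present on every node), build the ARP reply, turn the ingress port into the egress port, set reg10[0]=0x1, then go to OpenFlow Table 32.<br>• Match the datapath tunnel key with ingress = localnet port: go to OpenFlow Table 20.<br>• Otherwise: go to OpenFlow Table 20. Note: the packet then passes through the tables one by one until OpenFlow Table 24 matches the broadcast and sends it to OpenFlow Table 32. |
| Ingress | 12 | 20 | DHCP option processing: DHCP related, skipped for now. |
| Ingress | 13 | 21 | DHCP responder: DHCP related, skipped for now. |
| Ingress | 14 | 22 | DNS Lookup: DNS related, skipped for now. |
| Ingress | 15 | 23 | DNS responder: DNS related, skipped for now. |
| Ingress | 16 | 24 | Destination Lookup, which implements L2 switching:<br>• Multicast/broadcast: match the datapath tunnel key, destination MAC = multicast/broadcast MAC, set egress=0xffff, then go to OpenFlow Table 32.<br>• Match the datapath tunnel key, destination MAC = VM or router gateway MAC, set the corresponding logical egress port tunnel key, then go to OpenFlow Table 32. Note: the full set of VM forwarding entries is present here, including VMs attached directly to the ext network.<br>• Match the datapath tunnel key, destination MAC = FIP MAC, set egress = tunnel key of the patch port between the ext switch and the router, then go to OpenFlow Table 32. Note: exists only on the node hosting the VM bound to the FIP.<br>• Match the datapath tunnel key, destination MAC = qg port MAC, set egress = tunnel key of the patch port between the ext switch and the router, then go to OpenFlow Table 32. Note: exists only on the Active Gateway Chassis.<br>• Unknown unicast: match the ext lsw datapath tunnel key, set egress=0xfffe, then go to OpenFlow Table 32. Note: exists only on the ext lsw, not on ordinary lsw. |
| Egress | 0 | 40 | Pre-LB: load balancing, skipped for now. |
| Egress | 1 | 41 | Pre-ACLs: same as the ingress Pre-ACLs apart from the direction. |
| Egress | 2 | 42 | Pre-Stateful: same as the ingress Pre-Stateful apart from the direction. |
| Egress | 3 | 43 | LB: load balancing, skipped for now. |
| Egress | 4 | 44 | ACLs: security groups, skipped for now. |
| Egress | 5 | 45 | QoS Marking: DSCP marking, skipped for now. |
| Egress | 6 | 46 | QoS Meter: bandwidth, skipped for now. |
| Egress | 7 | 47 | Stateful: same as the ingress Stateful apart from the direction. |
| Egress | 8 | 48 | IP port security:<br>• Match the datapath tunnel key, the logical egress port tunnel key, destination MAC = the port's MAC, destination IP = the port's IP or a multicast/broadcast IP, then go to OpenFlow Table 49. Note: applies to VMs, including VMs attached directly to the ext network.<br>• If the rule above does not match: match the datapath tunnel key, the logical egress port tunnel key, destination MAC = the port's MAC: drop. Note: applies to VMs, including VMs attached directly to the ext network.<br>• Otherwise: go to OpenFlow Table 49. |
| Egress | 9 | 49 | L2 port security:<br>• Destination MAC is a multicast/broadcast address: go to OpenFlow Table 64.<br>• Logical port with port security enabled: strictly match the logical egress port tunnel key and destination MAC = the port's MAC, then go to OpenFlow Table 64.<br>• Logical port without port security (e.g. a router port): match only the logical egress port tunnel key, then go to OpenFlow Table 64.<br>• No match: dropped by default (there is no explicit flow). |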
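
In the tables of this chapter the OpenFlow table ID is always the LFlow table ID plus a fixed base: 8 for the ingress pipeline and 40 for the egress pipeline. The sketch below encodes that relationship. The constants are read off this document's tables and should be treated as specific to the OVN version used here, and the function name is illustrative.

```python
# Offsets observed in this document's tables; assumed to be version-specific.
INGRESS_BASE = 8
EGRESS_BASE = 40


def openflow_table(direction, lflow_table):
    """Translate (pipeline direction, LFlow table ID) to an OpenFlow table ID."""
    bases = {"ingress": INGRESS_BASE, "egress": EGRESS_BASE}
    if direction not in bases:
        raise ValueError("direction must be 'ingress' or 'egress'")
    if lflow_table < 0:
        raise ValueError("LFlow table ID must be non-negative")
    return bases[direction] + lflow_table


print(openflow_table("ingress", 16))  # Destination Lookup
print(openflow_table("egress", 9))    # egress L2 port security
```

This makes it easier to correlate the stage names in `ovn-sbctl lflow-list` output with the `table=` values in OpenFlow dumps.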

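3.4.1.3 Logical Router

| Direction | LFlow Table ID | OpenFlow Table ID | Description |
| --- | --- | --- | --- |
| Ingress | 0 | 8 | L2 admission control:<br>• Source MAC is a multicast/broadcast address: drop.<br>• Packet carries a VLAN ID: drop.<br>• VM bound to a FIP: match the datapath tunnel key, logical ingress port tunnel key = qg port tunnel key, destination MAC = FIP MAC, then go to OpenFlow Table 9. Note: these flows exist only on the node hosting the VM.<br>• qr port: match the datapath tunnel key, the logical ingress port tunnel key, destination MAC = qr port MAC, then go to OpenFlow Table 9.<br>• qr port: match the datapath tunnel key, the logical ingress port tunnel key, destination MAC = multicast/broadcast MAC, then go to OpenFlow Table 9.<br>• qg port: match the datapath tunnel key, the logical ingress port tunnel key, destination MAC = qg port MAC, then go to OpenFlow Table 9. Note: this flow exists only on the Active Gateway Chassis.<br>• qg port: match the datapath tunnel key, the logical ingress port tunnel key, destination MAC = multicast/broadcast MAC, then go to OpenFlow Table 9. |
| Ingress | 1 | 9 | IP Input:<br>• Drop some invalid traffic (not detailed here, see the flows).<br>• ARP reply handling: match the datapath tunnel key, ARP reply, extract SPA and SHA and populate the logical router's ARP table (my understanding is that the packet is sent to the controller, which performs this). Official explanation: ARP reply handling. This flow uses ARP replies to populate the logical router's ARP table. A priority-90 flow with match arp.op == 2 has actions put_arp(inport, arp.spa, arp.sha). For example, when a VM accesses an external IP on ext, the controller triggers an ARP broadcast request on the qg port that leaves through br-ex. When the reply arrives here, the ARP information for the external IP is learned.<br>• ARP request replies:<br>◦ qg port: match the datapath tunnel key, ARP request, logical ingress port tunnel key = qg port tunnel key, source IP in the ext subnet, target IP = qg port IP, build the ARP reply, set the logical egress port tunnel key to the qg port tunnel key, set reg10[0]=0x1, then go to OpenFlow Table 32. Note: this flow exists only on the Active Gateway Chassis.<br>◦ qr port: match the datapath tunnel key, ARP request, logical ingress port tunnel key = qr port tunnel key, source IP in the qr port subnet, target IP = qr port IP, build the ARP reply, set the logical egress port tunnel key to the qr port tunnel key, set reg10[0]=0x1, then go to OpenFlow Table 32.<br>Official explanation for the two matches above: These flows reply to ARP requests for the router's own IP address and populates mac binding table of the logical router port (author's note: put_arp(inport, arp.spa, arp.sha)). The ARP requests are handled only if the requestor's IP belongs to the same subnets of the logical router port.<br>◦ VM bound to a FIP: match the datapath tunnel key, ARP request, logical ingress port tunnel key = qg port tunnel key, target IP = FIP, build the ARP reply (using the FIP MAC), set the logical egress port tunnel key to the qg port tunnel key, set reg10[0]=0x1, then go to OpenFlow Table 32. Note: this flow exists only on the node hosting the VM. Official explanation: If the corresponding NAT rule can be handled in a distributed manner, then this flow is only programmed on the gateway port instance where the logical_port specified in the NAT rule resides. Some of the actions are different for this case, using the external_mac specified in the NAT rule rather than the gateway port's Ethernet address E.<br>◦ qr port: match the datapath tunnel key, ARP request, logical ingress port tunnel key = qr port tunnel key, target IP = FIP, build the ARP reply (using the qr MAC), set the logical egress port tunnel key to the qr port tunnel key, set reg10[0]=0x1, then go to OpenFlow Table 32. Note: present on all nodes (apparently unused?).<br>Official explanation for the two matches above: These flows reply to ARP requests for the virtual IP addresses configured in the router for DNAT or load balancing. For a configured DNAT IP address or a load balancer IPv4 VIP A, for each router port P with Ethernet address E, a priority-90 flow matches inport == P && arp.op == 1 && arp.tpa == A (ARP request) with the following actions:<br>• ICMP replies:<br>◦ Match the datapath tunnel key, ICMP request, destination IP = qr port IP or qg port IP: build the ICMP reply, set reg10[0]=0x1, then go to OpenFlow Table 10. Note: only the source and destination IPs are swapped here. The packet is then forwarded by the normal routing process in the later route lookup.<br>• GARP: an ARP request whose target is not the router. SPA and SHA are extracted to build the MAC binding of the logical router port.<br>◦ qg port: match the datapath tunnel key, logical ingress port tunnel key = qg port tunnel key, ARP request, source IP in the ext subnet: send to the controller. Note: exists only on the Active Gateway Chassis.<br>◦ qr port: match the datapath tunnel key, logical ingress port tunnel key = qr port tunnel key, ARP request, source IP in the qr port subnet: send to the controller.<br>Official explanation for the two cases above: These flows handles ARP requests not for router's own IP address. They use the SPA and SHA to populate the logical router port's mac binding (author's note: put_arp(inport, arp.spa, arp.sha)) table, with priority 80. The typical use case of these flows are GARP requests handling.<br>• If none of the above matches: match the datapath tunnel key, IP packet, destination IP = qr port IP: drop.<br>• The flows above mainly handle traffic addressed to the logical router itself. The remaining flows handle traffic forwarded through the logical router:<br>◦ Destination MAC is all ff: drop.<br>◦ TTL is 0 or 1: send ICMP Time Exceeded.<br>• Otherwise: go to OpenFlow Table 10.<br>(IP Input contains several put_arp actions: one for ARP requests arriving on the qr port, one for ARP replies arriving on the qr/qg ports, and one for GARP arriving on the qr/qg ports.) |
| Ingress | 2 | 10 | DEFRAG: skipped for now. |
| Ingress | 3 | 11 | UNSNAT: the request already went through SNAT in the egress pipeline, and the returning packet enters the ingress pipeline and is matched here.<br>• Match the datapath tunnel key, logical ingress port tunnel key = qg port tunnel key, destination IP = FIP, execute ct(table=12,zone=NXM_NX_REG12[0..15],nat). Note: the nat here tells OVS that SNAT was applied earlier, so the destination IP is restored from the FIP to the original VM IP using the connection state. Note: exists only on the node hosting the VM and on the Gateway Chassis nodes.<br>• Match the datapath tunnel key, logical ingress port tunnel key = qg port tunnel key, destination IP = qg port IP, execute ct(table=12,zone=NXM_NX_REG12[0..15],nat). Note: exists only on the Active Gateway Chassis.<br>• Match the datapath tunnel key, destination IP = qg port IP or FIP: set reg9[0]=0x1, then go to OpenFlow Table 12. Note: these flows are mainly for accessing these IP addresses from the qr port.<br>• Otherwise: go to OpenFlow Table 12. |
| Ingress | 4 | 12 | DNAT:<br>• Access to a FIP initiated from outside: match the datapath tunnel key, logical ingress port tunnel key = qg port tunnel key, destination IP = FIP, execute ct(commit,table=13,zone=NXM_NX_REG11[0..15],nat(dst=VM_IP)).<br>• East-west access to a FIP (the FIP is bound to a VM, so the VM is the real target): a flag must be set so that the Ingress Gateway Redirect table below steers the traffic to the Active Gateway Chassis.<br>◦ Match the datapath tunnel key, destination IP = FIP: set reg9[0]=0x1, then go to OpenFlow Table 13.<br>• Otherwise: go to OpenFlow Table 13. |
| Ingress | 5 | 13 | IPv6 ND RA option processing: skipped for now. |
| Ingress | 6 | 14 | IPv6 ND RA responder: skipped for now. |
| Ingress | 7 | 15 | IP Routing:<br>• Match the datapath tunnel key, reg9=0x1/0x1: decrement the TTL, then go to OpenFlow Table 16.<br>• Match the datapath tunnel key, destination in the qr port subnet: decrement the TTL, save the next-hop IP (the destination IP, since this is a connected route) to reg0, save the egress port IP (the qr port IP) to reg1, set source MAC = qr port MAC, set the logical egress port tunnel key to the qr port tunnel key, set reg10[0]=0x1, then go to OpenFlow Table 16. Note: connected route of a VPC subnet.<br>• Match the datapath tunnel key, destination in the qg port subnet: decrement the TTL, save the next-hop IP (the destination IP, since this is a connected route) to reg0, save the egress port IP (the qg port IP) to reg1, set source MAC = qg port MAC, set the logical egress port tunnel key to the qg port tunnel key, set reg10[0]=0x1, then go to OpenFlow Table 16. Note: connected route of the ext network. When ext has several subnets, the connected prefix depends on which subnet the qg port address was allocated from.<br>• Default route: match the datapath tunnel key: decrement the TTL, save the next-hop IP (the ext gateway) to reg0, save the egress port IP (the qg port IP) to reg1, set source MAC = qg port MAC, set the logical egress port tunnel key to the qg port tunnel key, set reg10[0]=0x1, then go to OpenFlow Table 16. |
| Ingress | 8 | 16 | ARP/ND Resolution: continuing from the previous step, resolve the ARP entry of the next hop and fill in the destination MAC.<br>• Match the datapath tunnel key, reg9=0x1: set the destination MAC to the qg port MAC, then go to OpenFlow Table 17.<br>• Match the datapath tunnel key, logical egress port tunnel key = qr port tunnel key, next hop (reg0) = VM IP (including VMs on other nodes) or qr port IP (of another subnet): set the destination MAC to the VM MAC or qr port MAC, then go to OpenFlow Table 17.<br>• Match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key, next hop (reg0) = VM IP (including VMs on other nodes): set the destination MAC to the VM MAC, then go to OpenFlow Table 17. Note: applies to VMs attached directly to the ext network (only for the subnet the qg port belongs to. If ext has several subnets, ARP entries for VMs in the other subnets are not present here).<br>• Unknown case: set the destination MAC to all zeros and go to OpenFlow Table 66 (`table=66, priority=100,reg0=<ext default gateway IP>,reg15=<qg port tunnel key>,metadata=<datapath tunnel key> actions=mod_dl_dst:<ext default gateway MAC>`). Without such a flow the lookup is dropped by default, but the next action still executes. Table 66 flows are generated by the controller from the MAC bindings. Then go to OpenFlow Table 17. |
| Ingress | 9 | 17 | Gateway Redirect:<br>• If reg9=0x1/0x1: set the logical egress port tunnel key to the CR port tunnel key, then go to OpenFlow Table 18.<br>• Match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key, destination MAC all zeros: set the logical egress port tunnel key to the CR port tunnel key, then go to OpenFlow Table 18.<br>• Match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key, source IP = IP of a VM bound to a FIP: go to OpenFlow Table 18.<br>• Match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key: set the logical egress port tunnel key to the CR port tunnel key, then go to OpenFlow Table 18.<br>• Otherwise: go to OpenFlow Table 18. |
| Ingress | 10 | 18 | ARP request:<br>• Unknown destination MAC (all zeros): send to the controller. Note: the controller builds an ARP broadcast request and executes the output action, i.e. resubmits to Table 32. Because the egress port was already set to the CR port, on a node that is not the active gateway chassis the packet goes through the tunnel to the active gateway chassis, where the egress port is changed to the qg port and the request is broadcast through br-ex. The source MAC of this ARP request is the qg port MAC, which is why it must not leave from the local node: the external network would otherwise learn the qg port MAC inconsistently.<br>• Known destination MAC: go to OpenFlow Table 32. |
| Egress | 0 | 40 | UNDNAT: for traffic from outside to inside, the ingress pipeline applied DNAT to the destination IP. When the VM inside replies, the packet matches here and conntrack is told that NAT was applied, so the source IP is replaced by the FIP automatically from the connection state.<br>• Match the datapath tunnel key, the logical egress port tunnel key, source IP = IP of a VM bound to a FIP: set source MAC = FIP MAC and execute ct(table=41,zone=NXM_NX_REG11[0..15],nat).<br>• Otherwise: go to OpenFlow Table 41. |
| Egress | 1 | 41 | SNAT:<br>• VM bound to a FIP: match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key, source IP = VM IP: set source MAC = FIP MAC and execute ct(commit,table=42,zone=NXM_NX_REG12[0..15],nat(src=FIP)).<br>• Centralized SNAT: match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key, source IP in the qr port subnet: execute ct(commit,table=42,zone=NXM_NX_REG12[0..15],nat(src=qg port IP)).<br>• Otherwise: go to OpenFlow Table 42. |
| Egress | 2 | 42 | Egress Loopback: traffic leaving through the qg port towards the qg port IP or a FIP takes a loopback path. This scenario is relatively complex.<br>• Match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key, destination IP = qg port IP or FIP: turn the egress port into the ingress port, set the egress port tunnel key to 0, set reg10[0]=0x1, and clear the other registers. Then go to OpenFlow Table 8 (looping back into the ingress pipeline of the same datapath).<br>• Otherwise: go to OpenFlow Table 43. |
| Egress | 3 | 43 | Delivery:<br>• Match the datapath tunnel key, logical egress port tunnel key = qg port tunnel key or qr port tunnel key: go to OpenFlow Table 64.<br>• Dropped by default (there is no explicit flow). |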
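
The Gateway Redirect stage (OpenFlow Table 17) is essentially a prioritized decision on whether the egress port stays the distributed qg port or becomes the chassisredirect (CR) port. The sketch below models the rules in the order listed in the table. It is a simplified illustration: the function and parameter names are invented for this example, and real flows match on tunnel keys rather than port names.

```python
ZERO_MAC = "00:00:00:00:00:00"


def gateway_redirect(outport, eth_dst, ip_src, nat_redirect,
                     qg_port, cr_port, fip_vm_ips):
    """Return the logical egress port after the Gateway Redirect stage."""
    if nat_redirect:            # reg9[0] was set by UNSNAT/DNAT
        return cr_port
    if outport != qg_port:      # traffic not leaving through qg is untouched
        return outport
    if eth_dst == ZERO_MAC:     # next hop unresolved: ARP must come from the gateway
        return cr_port
    if ip_src in fip_vm_ips:    # VM bound to a FIP: handled on the local node
        return outport
    return cr_port              # everything else: centralized SNAT on the gateway


# The traced packet in Section 3.4.2.8: FIP-bound VM, next-hop MAC unresolved.
print(gateway_redirect("lrp-4cdf48", ZERO_MAC, "10.1.1.11", False,
                       "lrp-4cdf48", "cr-lrp-4cdf48", {"10.1.1.11"}))
```

Once the next-hop MAC is known, the same VM keeps the distributed qg port as its egress, which is what allows FIP traffic to leave from the VM's own node.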

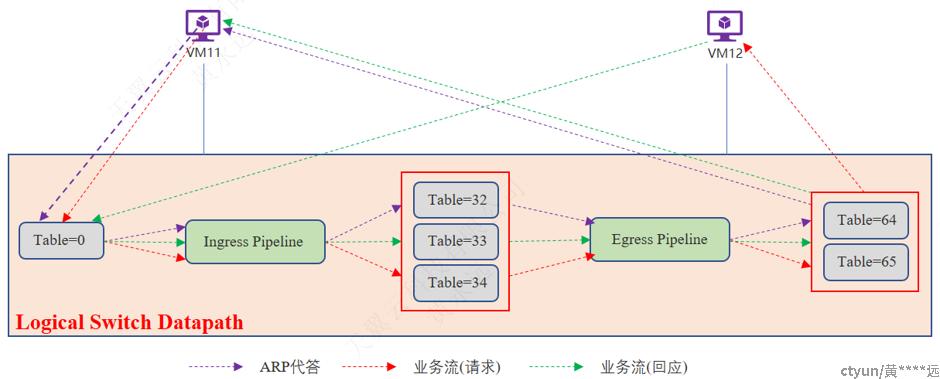

3.4.2 Traffic Flows

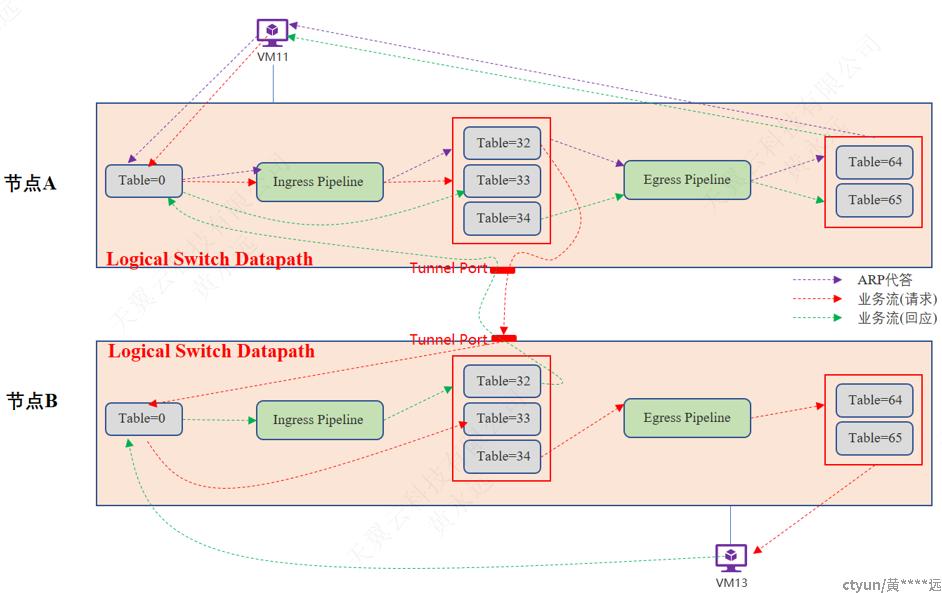

3.4.2.1 East-West, Layer 2, Same Node

3.4.2.2 East-West, Layer 2, Cross-Node

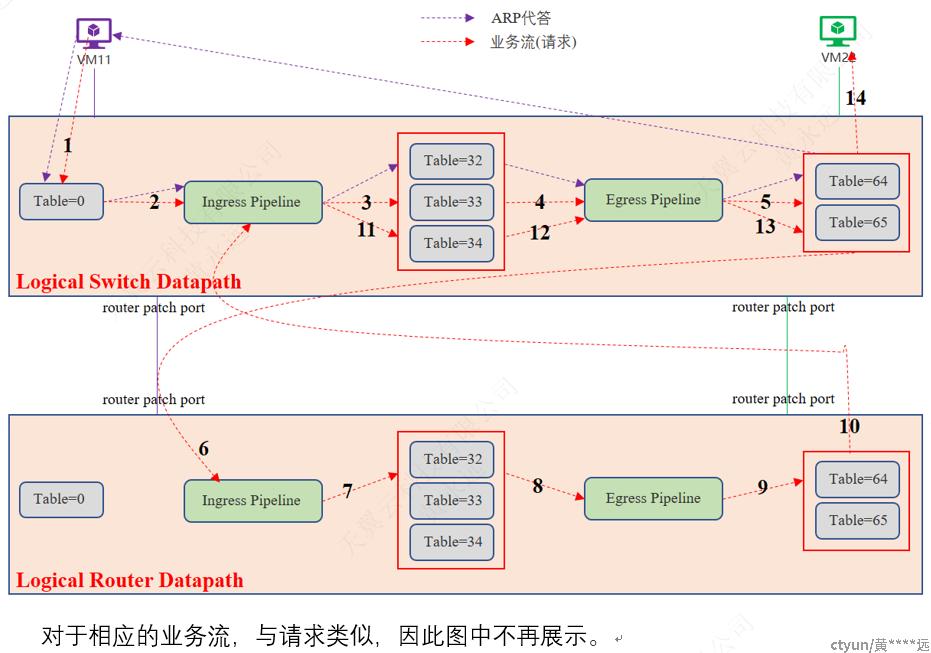

3.4.2.3 East-West, Layer 3, Same Node

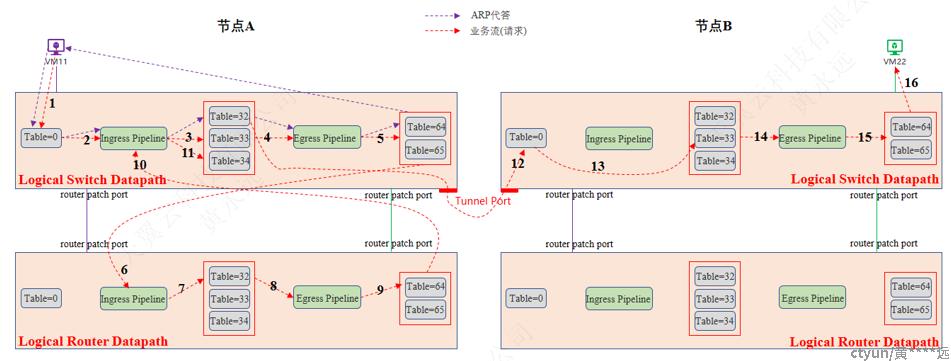

3.4.2.4 East-West, Layer 3, Cross-Node

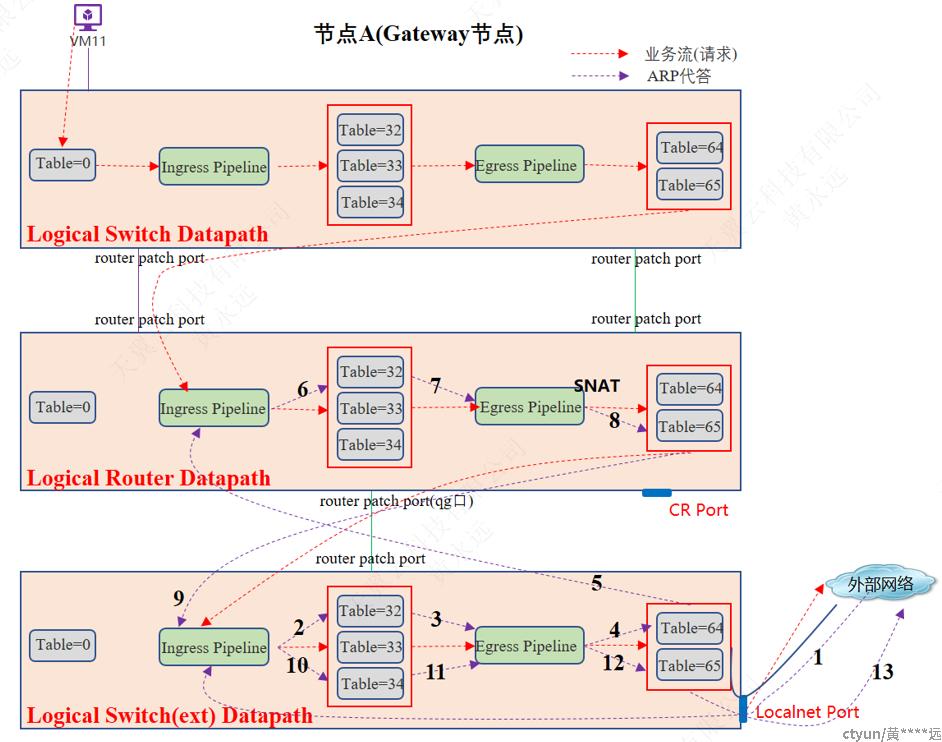

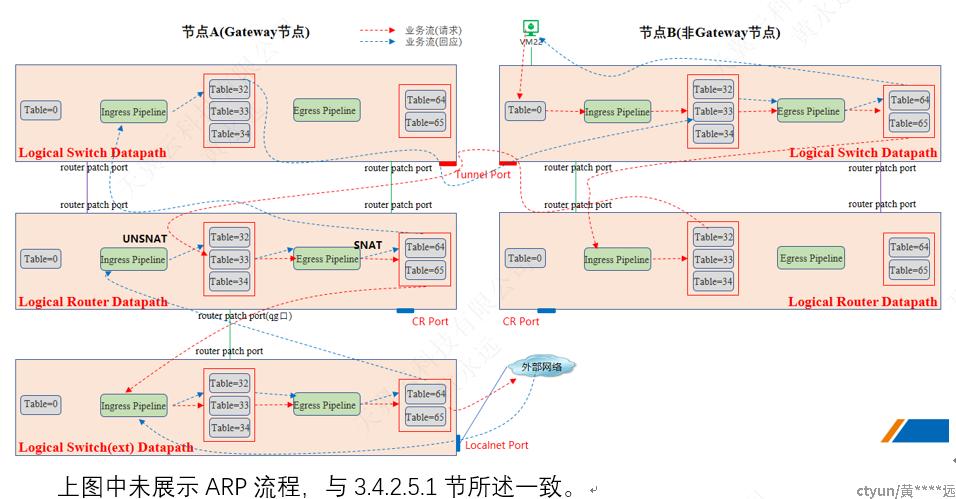

3.4.2.5 North-South, Centralized SNAT

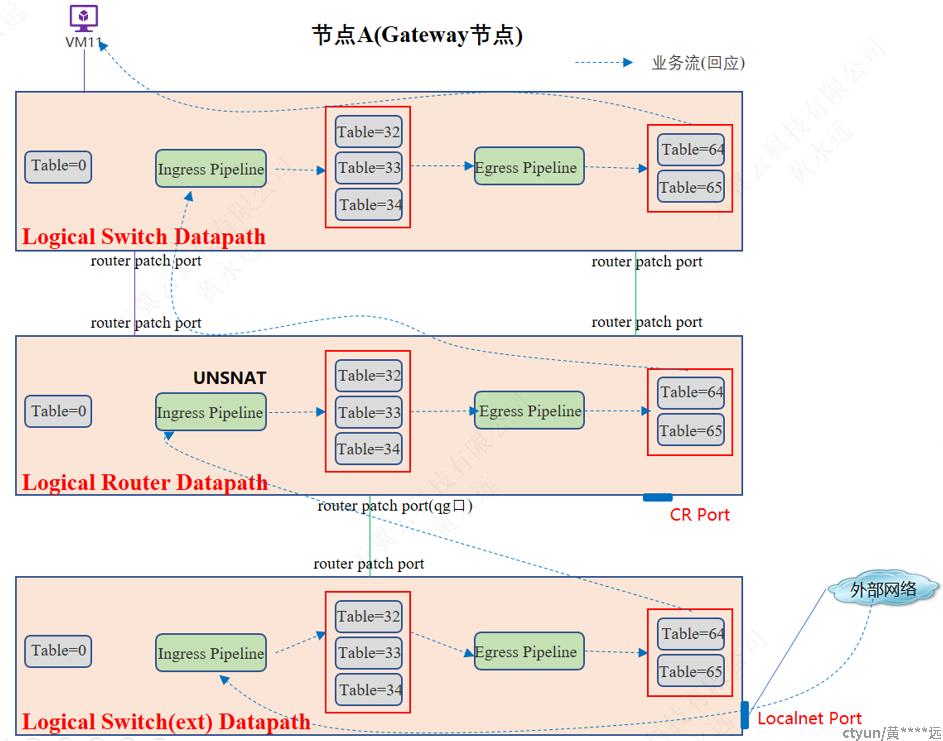

3.4.2.5.1 Gateway Node

This case covers the Active Gateway Chassis node when that node also serves as a compute node.

3.4.2.5.2 Other Nodes

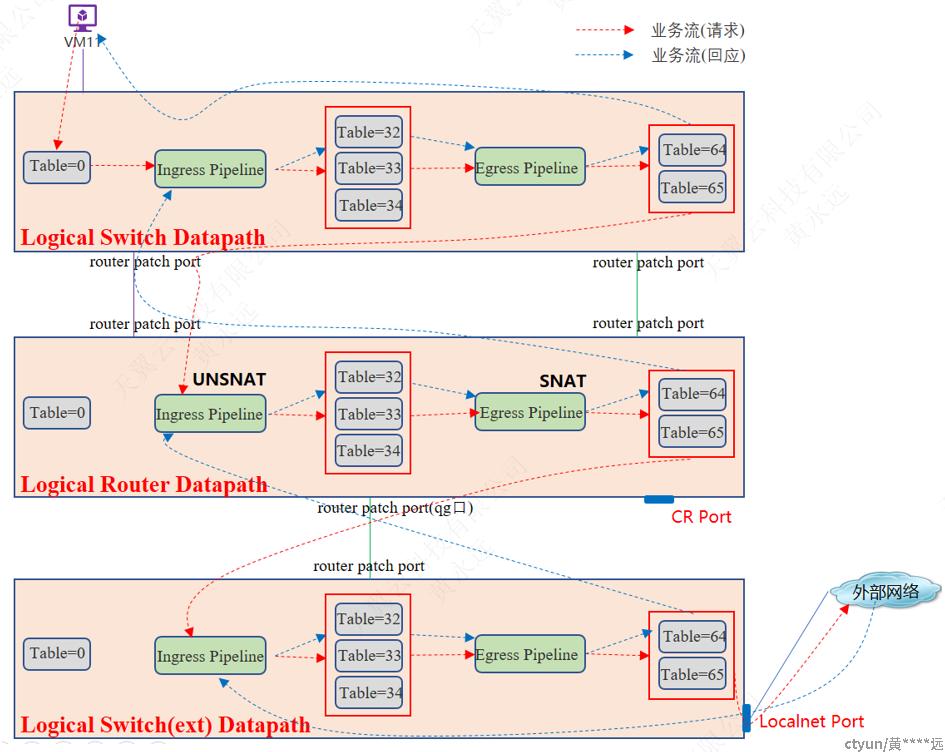

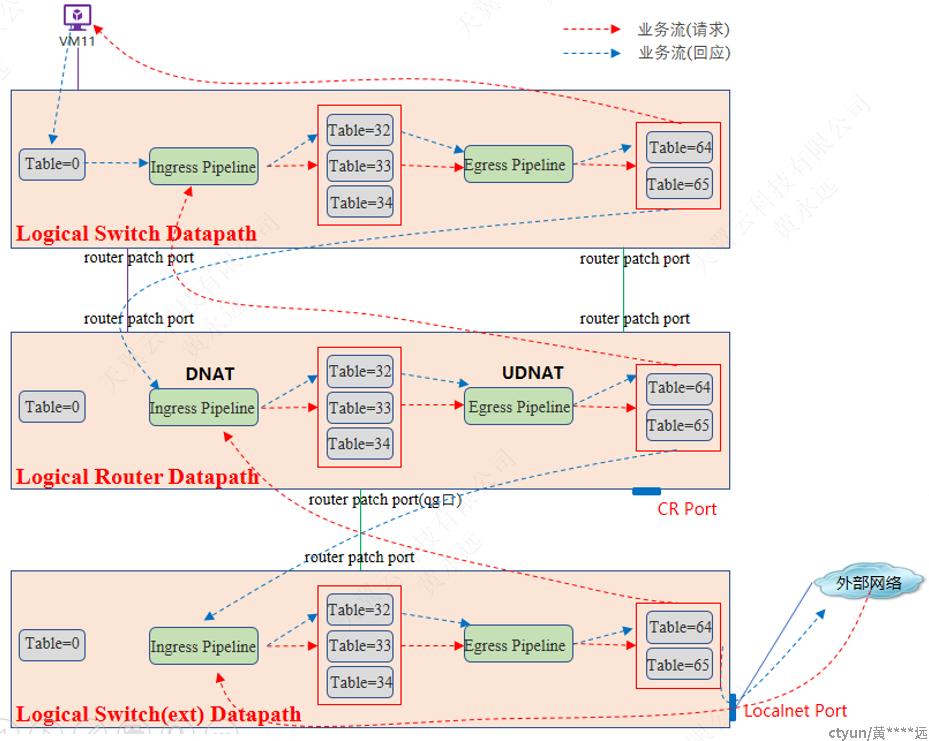

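3.4.2.6 North-South, VM Bound to a FIP

The figure below shows the VM initiating access to the outside. The ARP process is not shown. It is the same as described in Section 3.4.2.5.1.

The figure below shows the outside initiating access to the VM. The ARP process is not shown. It is the same as described in Section 3.4.2.5.1.

3.4.2.7 East-West, Accessing the qr/qg Port IPs

These IPs live on the router, so traffic to them gets an ICMP reply in logical router datapath OpenFlow Table 9 (ingress pipeline, IP Input), which simply swaps the source and destination IPs. Processing then continues table by table, and the route lookup in logical router datapath OpenFlow Table 15 (ingress pipeline, IP Routing) uses the swapped destination IP. The rest of the path has been covered several times above and is not repeated here.

3.4.2.8 Controller-Triggered ARP Requests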

ovn-trace --db tcp:192.168.0.201:6642 --ovs n1 'inport == "a2d480dc-ec4c-4a91-aac7-b2c015afeab6" && eth.src == fa:16:3e:f1:da:40 && eth.dst == fa:16:3e:cc:5e:81 && ip4.src == 10.1.1.11 && ip4.dst == 172.168.1.100 && ip.ttl == 64 && icmp4.type == 8'

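The trace command above follows a VM bound to a FIP as it accesses an ext IP in the same subnet as the FIP, one that has no record in the MAC_Binding table. The trace output is as follows: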

[root@compute ~]# ovn-trace --db tcp:192.168.0.201:6642 --ovs n1 'inport == "a2d480dc-ec4c-4a91-aac7-b2c015afeab6" && eth.src == fa:16:3e:f1:da:40 && eth.dst == fa:16:3e:cc:5e:81 && ip4.src == 10.1.1.11 && ip4.dst == 172.168.1.100 && ip.ttl == 64 && icmp4.type == 8'

# icmp,reg14=0x1,vlan_tci=0x0000,dl_src=fa:16:3e:f1:da:40,dl_dst=fa:16:3e:cc:5e:81,nw_src=10.1.1.11,nw_dst=172.168.1.100,nw_tos=0,nw_ecn=0,nw_ttl=64,icmp_type=8,icmp_code=0

ingress(dp="n1", inport="n1subnet1_vm1")

----------------------------------------

- ls_in_port_sec_l2 (ovn-northd.c:4138): inport == "n1subnet1_vm1" && eth.src == {fa:16:3e:f1:da:40}, priority 50, uuid b381b75d

cookie=0xb381b75d, duration=3368.850s, table=8, n_packets=98, n_bytes=7646, priority=50,reg14=0x1,metadata=0x1,dl_src=fa:16:3e:f1:da:40 actions=resubmit(,9)

next;

- ls_in_port_sec_ip (ovn-northd.c:2893): inport == "n1subnet1_vm1" && eth.src == fa:16:3e:f1:da:40 && ip4.src == {10.1.1.11}, priority 90, uuid 5f4f92fa

cookie=0x5f4f92fa, duration=3368.848s, table=9, n_packets=81, n_bytes=6018, priority=90,ip,reg14=0x1,metadata=0x1,dl_src=fa:16:3e:f1:da:40,nw_src=10.1.1.11 actions=resubmit(,10)

next;

- ls_in_pre_acl (ovn-northd.c:3270): ip, priority 100, uuid def0a930

cookie=0xdef0a930, duration=7428.277s, table=11, n_packets=249, n_bytes=20112, priority=100,ip,metadata=0x1 actions=load:0x1->NXM_NX_XXREG0[96],resubmit(,12)

cookie=0xdef0a930, duration=7428.276s, table=11, n_packets=0, n_bytes=0, priority=100,ipv6,metadata=0x1 actions=load:0x1->NXM_NX_XXREG0[96],resubmit(,12)

reg0[0] = 1;

next;

- ls_in_pre_stateful (ovn-northd.c:3397): reg0[0] == 1, priority 100, uuid 3cfa5bf1

cookie=0x3cfa5bf1, duration=7428.315s, table=13, n_packets=0, n_bytes=0, priority=100,ipv6,reg0=0x1/0x1,metadata=0x1 actions=ct(table=14,zone=NXM_NX_REG13[0..15])

cookie=0x3cfa5bf1, duration=7428.271s, table=13, n_packets=249, n_bytes=20112, priority=100,ip,reg0=0x1/0x1,metadata=0x1 actions=ct(table=14,zone=NXM_NX_REG13[0..15])

ct_next;

ct_next(ct_state=est|trk /* default (use --ct to customize) */)

---------------------------------------------------------------

- ls_in_acl (ovn-northd.c:3584): !ct.new && ct.est && !ct.rpl && ct_label.blocked == 0 && (inport == @pg_847a5488_34f9_4c42_96d5_807a30c0fd03 && ip4), priority 2002, uuid 63a63db6

cookie=0x63a63db6, duration=3368.851s, table=14, n_packets=0, n_bytes=0, priority=2002,ct_state=-new+est-rpl+trk,ct_label=0/0x1,ip,reg14=0x1,metadata=0x1 actions=resubmit(,15)

cookie=0x63a63db6, duration=3315.271s, table=14, n_packets=0, n_bytes=0, priority=2002,ct_state=-new+est-rpl+trk,ct_label=0/0x1,ip,reg14=0x7,metadata=0x1 actions=resubmit(,15)

cookie=0x63a63db6, duration=3355.842s, table=14, n_packets=0, n_bytes=0, priority=2002,ct_state=-new+est-rpl+trk,ct_label=0/0x1,ip,reg14=0x2,metadata=0x1 actions=resubmit(,15)

next;

- ls_in_l2_lkup (ovn-northd.c:4532): eth.dst == fa:16:3e:cc:5e:81, priority 50, uuid 1a1e5f17

cookie=0x1a1e5f17, duration=7428.281s, table=24, n_packets=162, n_bytes=12036, priority=50,metadata=0x1,dl_dst=fa:16:3e:cc:5e:81 actions=set_field:0x8->reg15,resubmit(,32)

outport = "5478dc";

output;

egress(dp="n1", inport="n1subnet1_vm1", outport="5478dc")

---------------------------------------------------------

- ls_out_pre_acl (ovn-northd.c:3226): ip && outport == "5478dc", priority 110, uuid 0adc79ea

cookie=0xadc79ea, duration=7428.277s, table=41, n_packets=162, n_bytes=12036, priority=110,ip,reg15=0x8,metadata=0x1 actions=resubmit(,42)

cookie=0xadc79ea, duration=7428.272s, table=41, n_packets=0, n_bytes=0, priority=110,ipv6,reg15=0x8,metadata=0x1 actions=resubmit(,42)

next;

- ls_out_port_sec_l2 (ovn-northd.c:4615): outport == "5478dc", priority 50, uuid a653bcfe

cookie=0xa653bcfe, duration=7428.314s, table=49, n_packets=162, n_bytes=12036, priority=50,reg15=0x8,metadata=0x1 actions=resubmit(,64)

output;

/* output to "5478dc", type "patch" */

ingress(dp="n1router", inport="lrp-5478dc")

-------------------------------------------

- lr_in_admission (ovn-northd.c:5161): eth.dst == fa:16:3e:cc:5e:81 && inport == "lrp-5478dc", priority 50, uuid 95c8af40

cookie=0x95c8af40, duration=7428.270s, table=8, n_packets=162, n_bytes=12036, priority=50,reg14=0x1,metadata=0x4,dl_dst=fa:16:3e:cc:5e:81 actions=resubmit(,9)

next;

- lr_in_ip_routing (ovn-northd.c:4743): ip4.dst == 172.168.1.0/24, priority 49, uuid 9c5d0fa1

cookie=0x9c5d0fa1, duration=7428.277s, table=15, n_packets=0, n_bytes=0, priority=49,ip,metadata=0x4,nw_dst=172.168.1.0/24 actions=dec_ttl(),move:NXM_OF_IP_DST[]->NXM_NX_XXREG0[96..127],load:0xaca80104->NXM_NX_XXREG0[64..95],set_field:fa:16:3e:dc:9a:73->eth_src,set_field:0x3->reg15,load:0x1->NXM_NX_REG10[0],resubmit(,16)

ip.ttl--;

reg0 = ip4.dst;

reg1 = 172.168.1.4;

eth.src = fa:16:3e:dc:9a:73;

outport = "lrp-4cdf48";

flags.loopback = 1;

next;

- lr_in_arp_resolve (ovn-northd.c:6606): ip4, priority 0, uuid 90311048

cookie=0x90311048, duration=7428.282s, table=16, n_packets=240, n_bytes=17760, priority=0,ip,metadata=0x4 actions=push:NXM_NX_REG0[],push:NXM_NX_XXREG0[96..127],pop:NXM_NX_REG0[],set_field:00:00:00:00:00:00->eth_dst,resubmit(,66),pop:NXM_NX_REG0[],resubmit(,17)

get_arp(outport, reg0);

/* No MAC binding. */

next;

- lr_in_gw_redirect (ovn-northd.c:6645): outport == "lrp-4cdf48" && eth.dst == 00:00:00:00:00:00, priority 150, uuid c8886b99

cookie=0xc8886b99, duration=7428.272s, table=17, n_packets=240, n_bytes=17760, priority=150,reg15=0x3,metadata=0x4,dl_dst=00:00:00:00:00:00 actions=set_field:0x4->reg15,resubmit(,18)

outport = "cr-lrp-4cdf48";

next;

- lr_in_arp_request (ovn-northd.c:6706): eth.dst == 00:00:00:00:00:00, priority 100, uuid 817a2139

cookie=0x817a2139, duration=7428.277s, table=18, n_packets=240, n_bytes=17760, priority=100,ip,metadata=0x4,dl_dst=00:00:00:00:00:00 actions=controller(userdata=00.00.00.00.00.00.00.00.00.19.00.10.80.00.06.06.ff.ff.ff.ff.ff.ff.00.00.ff.ff.00.18.00.00.23.20.00.06.00.20.00.40.00.00.00.01.de.10.00.00.20.04.ff.ff.00.18.00.00.23.20.00.06.00.20.00.60.00.00.00.01.de.10.00.00.22.04.00.19.00.10.80.00.2a.02.00.01.00.00.00.00.00.00.ff.ff.00.10.00.00.23.20.00.0e.ff.f8.20.00.00.00)

arp { eth.dst = ff:ff:ff:ff:ff:ff; arp.spa = reg1; arp.tpa = reg0; arp.op = 1; output; };

arp

---

eth.dst = ff:ff:ff:ff:ff:ff;

arp.spa = reg1;

arp.tpa = reg0;

arp.op = 1;

output; ---> output here means resubmit(,32)

/* Replacing type "chassisredirect" outport "cr-lrp-4cdf48" with distributed port "lrp-4cdf48". */

egress(dp="n1router", inport="lrp-5478dc", outport="lrp-4cdf48") ---> lrp-4cdf48 is the qg port and lrp-5478dc is the qr port. At this point processing has moved to the active gateway chassis node.

----------------------------------------------------------------

- lr_out_delivery (ovn-northd.c:6741): outport == "lrp-4cdf48", priority 100, uuid 772daa53

cookie=0x772daa53, duration=7428.315s, table=43, n_packets=0, n_bytes=0, priority=100,reg15=0x3,metadata=0x4 actions=resubmit(,64)

output;

/* output to "lrp-4cdf48", type "patch" */

ingress(dp="ext", inport="4cdf48")

----------------------------------

- ls_in_port_sec_l2 (ovn-northd.c:4138): inport == "4cdf48", priority 50, uuid af04b72e

cookie=0xaf04b72e, duration=7428.272s, table=8, n_packets=15, n_bytes=630, priority=50,reg14=0x2,metadata=0x3 actions=resubmit(,9)

next;

- ls_in_l2_lkup (ovn-northd.c:4448): eth.mcast, priority 100, uuid aa24dca5

cookie=0xaa24dca5, duration=7428.283s, table=24, n_packets=39, n_bytes=3758, priority=100,metadata=0x3,dl_dst=01:00:00:00:00:00/01:00:00:00:00:00 actions=set_field:0xffff->reg15,resubmit(,32)

outport = "_MC_flood";

output;

multicast(dp="ext", mcgroup="_MC_flood")

----------------------------------------

egress(dp="ext", inport="4cdf48", outport="vm_ext1")

----------------------------------------------------

- ls_out_port_sec_l2 (ovn-northd.c:4592): eth.mcast, priority 100, uuid c27fb446

cookie=0xc27fb446, duration=7428.278s, table=49, n_packets=65, n_bytes=6778, priority=100,metadata=0x3,dl_dst=01:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,64)

output;

/* output to "vm_ext1", type "" */

egress(dp="ext", inport="4cdf48", outport="4cdf48")

---------------------------------------------------

/* omitting output because inport == outport && !flags.loopback */

egress(dp="ext", inport="4cdf48", outport="vm_ext2")

----------------------------------------------------

- ls_out_port_sec_l2 (ovn-northd.c:4592): eth.mcast, priority 100, uuid c27fb446

cookie=0xc27fb446, duration=7428.278s, table=49, n_packets=65, n_bytes=6778, priority=100,metadata=0x3,dl_dst=01:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,64)

output;

/* output to "vm_ext2", type "" */

egress(dp="ext", inport="4cdf48", outport="provnet-fc8dfb")

-----------------------------------------------------------

- ls_out_port_sec_l2 (ovn-northd.c:4592): eth.mcast, priority 100, uuid c27fb446

cookie=0xc27fb446, duration=7428.278s, table=49, n_packets=65, n_bytes=6778, priority=100,metadata=0x3,dl_dst=01:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,64)

output;

/* output to "provnet-fc8dfb", type "localnet" */

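The trace shows that the ARP request is sent to the controller, which builds an ARP request as a packet-out message and broadcasts it to the outside through the ext lsw.

When the ARP reply enters the DVR qg port from br-ex, the ARP information is learned in the IP Input table and written to MAC_Binding. The controller then generates the Table 66 flow.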
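
Several values in the trace are easier to read once decoded. The sketch below uses only the Python standard library to decode the reg1 load from the lr_in_ip_routing flow and to apply the multicast test behind the `dl_dst=01:00:00:00:00:00/01:00:00:00:00:00` match. The helper names are illustrative and not part of OVN.

```python
import ipaddress


def reg_to_ipv4(value):
    """Render a 32-bit register value as a dotted-quad IPv4 address."""
    return str(ipaddress.IPv4Address(value))


def is_multicast_mac(mac):
    """True when the group bit (lowest bit of the first octet) is set."""
    return (int(mac.split(":")[0], 16) & 0x01) == 1


# load:0xaca80104->NXM_NX_XXREG0[64..95] writes reg1, the egress port IP.
print(reg_to_ipv4(0xACA80104))
# The ARP request built by the controller is flooded; the original ICMP frame is not.
print(is_multicast_mac("ff:ff:ff:ff:ff:ff"), is_multicast_mac("fa:16:3e:cc:5e:81"))
```

The decoded address is the qg port IP, which is the value the trace later copies into arp.spa when building the ARP request.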

4 References

- OpenStack Queens installation guide

https://docs.openstack.org/queens/install/

- OpenStack networking-ovn documentation

https://docs.openstack.org/networking-ovn/latest/index.html

- ovs-fields

https://www.openvswitch.org/support/dist-docs/ovs-fields.7.html

- ovn-northd(8) (older documentation, corresponding to version 2.11)

https://www.man7.org/linux/man-pages/man8/ovn-northd.8.html

(describes the flow pipeline in detail)

- OVN documentation

https://docs.ovn.org/en/latest/index.html

- OVN official reference manuals

https://docs.ovn.org/en/latest/ref/index.html

5 Common Commands

ovn-nbctl list Logical_Switch

ovn-nbctl list Logical_Switch_Port

ovn-nbctl list ACL

ovn-nbctl list Logical_Router

ovn-nbctl list Logical_Router_Port

ovn-nbctl list Logical_Router_Static_Route

ovn-nbctl list NAT

ovn-nbctl list DHCP_Options

ovn-nbctl list Gateway_Chassis

ovn-sbctl list Chassis

ovn-sbctl list Encap

ovn-sbctl lflow-list

ovn-sbctl --db tcp:<IP>:6642 --ovs lflow-list

ovn-sbctl list Multicast_Group

ovn-sbctl list Datapath_Binding

ovn-sbctl list Port_Binding

ovn-sbctl list MAC_Binding

ovn-sbctl list DHCP_Options

ovn-sbctl list Gateway_Chassis